Robots are everywhere – improving how they communicate with people could advance human-robot collaboration

‘Emotionally intelligent’ robots could improve their interactions with people. Andriy Onufriyenko/Moment via Getty Images

By Ramana Vinjamuri (Assistant Professor of Computer Science and Electrical Engineering, University of Maryland, Baltimore County)

Robots are machines that can sense the environment and use that information to perform an action. You can find them nearly everywhere in industrialized societies today. There are household robots that vacuum floors and warehouse robots that pack and ship goods. Lab robots test hundreds of clinical samples a day. Education robots support teachers by acting as one-on-one tutors, assistants and discussion facilitators. And medical robots such as prosthetic limbs can enable someone to grasp and pick up objects with their thoughts.

Figuring out how humans and robots can collaborate to carry out tasks effectively is a rapidly growing area of interest to the scientists and engineers who design robots as well as the people who will use them. For successful collaboration between humans and robots, communication is key.

Robotics can help patients recover physical function in rehabilitation. BSIP/Universal Images Group via Getty Images

How people communicate with robots

Robots were originally designed to undertake repetitive and mundane tasks and to operate exclusively in robot-only zones like factories. They have since advanced to work collaboratively with people, and new ways for the two to communicate have emerged.

Cooperative control is one way to transmit information and messages between a robot and a person. It involves combining human abilities and decision making with robot speed, accuracy and strength to accomplish a task.

For example, robots in the agriculture industry can help farmers monitor and harvest crops. A human can control a semi-autonomous vineyard sprayer through a user interface, as opposed to manually spraying their crops or broadly spraying the entire field and risking pesticide overuse.

Robots can also support patients in physical therapy. Patients who have had a stroke or spinal cord injury can use robots to practice hand grasping and assisted walking during rehabilitation.

Another form of communication, emotional intelligence perception, involves developing robots that adapt their behaviors based on social interactions with humans. In this approach, the robot detects a person’s emotions when collaborating on a task, assesses their satisfaction, then modifies and improves its execution based on this feedback.

For example, if the robot detects that a physical therapy patient is dissatisfied with a specific rehabilitation activity, it could direct the patient to an alternate activity. Facial expression and body gesture recognition ability are important design considerations for this approach. Recent advances in machine learning can help robots decipher emotional body language and better interact with and perceive humans.

Robots in rehab

Questions like how to make robotic limbs feel more natural and capable of more complex functions like typing and playing musical instruments have yet to be answered.

I am an electrical engineer who studies how the brain controls and communicates with other parts of the body, and my lab investigates in particular how the brain and hand coordinate signals between each other. Our goal is to design technologies like prosthetic and wearable robotic exoskeleton devices that could help improve function for individuals with stroke, spinal cord and traumatic brain injuries.

One approach is through brain-computer interfaces, which use brain signals to communicate between robots and humans. By accessing an individual’s brain signals and providing targeted feedback, this technology can potentially improve recovery time in stroke rehabilitation. Brain-computer interfaces may also help restore some communication abilities and physical manipulation of the environment for patients with motor neuron disorders.

Brain-computer interfaces could allow people to control robotic arms by thought alone. Ramana Kumar Vinjamuri, CC BY-ND

The future of human-robot interaction

Effective integration of robots into human life requires balancing responsibility between people and robots, and designating clear roles for both in different environments.

As robots increasingly work hand in hand with people, the ethical questions and challenges they pose cannot be ignored. Concerns surrounding privacy, bias and discrimination, security risks and robot morality need to be seriously investigated in order to create a more comfortable, safer and more trustworthy world with robots for everyone. Scientists and engineers studying the “dark side” of human-robot interaction are developing guidelines to identify and prevent negative outcomes.

Human-robot interaction has the potential to affect every aspect of daily life. It is the collective responsibility of both the designers and the users to create a human-robot ecosystem that is safe and satisfactory for all.

Ramana Vinjamuri receives funding from National Science Foundation.

This article is republished from The Conversation under a Creative Commons license. Read the original article.

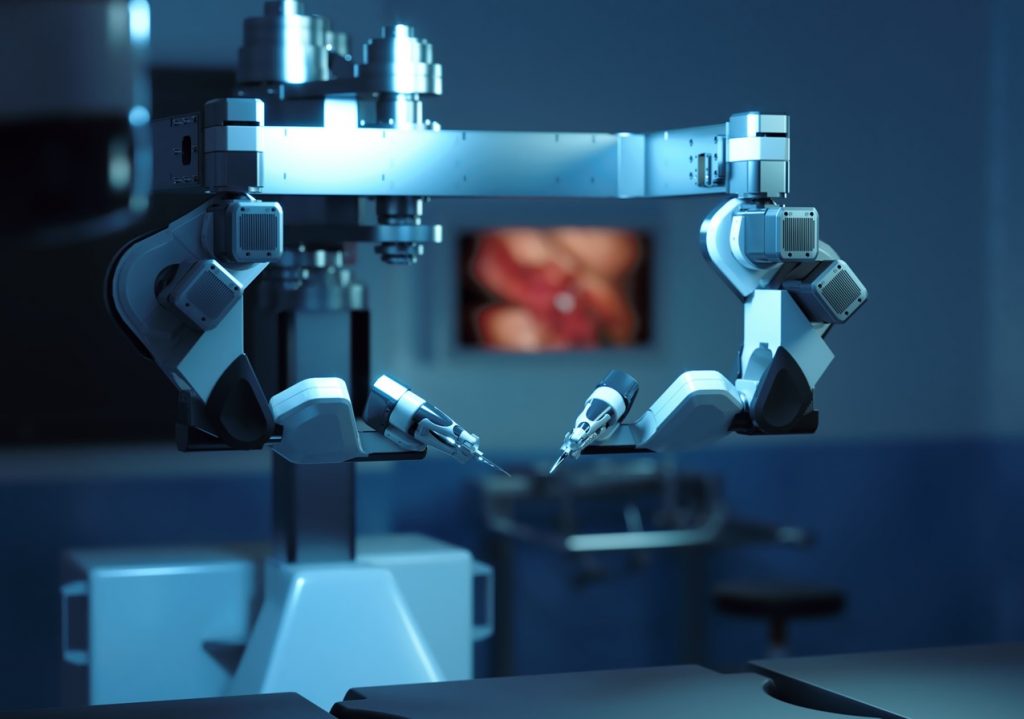

Robot assistants in the operating room promise safer surgery

Advanced robotics can help surgeons carry out procedures where there is little margin for error. © Microsure BV, 2022

In a surgery in India, a robot scans a patient’s knee to figure out how best to carry out a joint replacement. Meanwhile, in an operating room in the Netherlands, another robot is performing highly challenging microsurgery under the control of a doctor using joysticks.

Such scenarios look set to become more common. At present, some manual operations are so difficult they can be performed by only a small number of surgeons worldwide, while others are invasive and depend on a surgeon’s specific skill.

Advanced robotics are providing tools that have the potential to enable more surgeons to carry out such operations and do so with a higher rate of success.

‘We’re entering the next revolution in medicine,’ said Sophie Cahen, chief executive officer and co-founder of Ganymed Robotics in Paris.

New knees

Cahen leads the EU-funded Ganymed project, which is developing a compact robot to make joint-replacement operations more precise, less invasive and – by extension – safer.

The initial focus is on a type of surgery called total knee arthroplasty (TKA), though Ganymed is looking to expand to other joints including the shoulder, ankle and hip.

Ageing populations and lifestyle changes are accelerating demand for such surgery, according to Cahen. Interest in Ganymed’s robot has been expressed in many quarters, including distributors in emerging economies such as India.

‘Demand is super-high because arthroplasty is driven by the age and weight of patients, which is increasing all over the world,’ Cahen said.

Arm with eyes

Ganymed’s robot will aim to perform two main functions: contactless localisation of bones and collaboration with surgeons to support joint-replacement procedures.

It comprises an arm mounted with ‘eyes’, which use advanced computer-vision-driven intelligence to examine the exact position and orientation of a patient’s anatomical structure. This avoids the need to insert invasive rods and optical trackers into the body.

Surgeons can then perform operations using tools such as sagittal saws – used for orthopaedic procedures – in collaboration with the robotic arm.

The ‘eyes’ aid precision by providing so-called haptic feedback, which prevents the movement of instruments beyond predefined virtual boundaries. The robot also collects data that it can process in real time and use to hone procedures further.
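The idea of a virtual boundary can be illustrated with a minimal sketch. This is not Ganymed's implementation, which the article does not describe. It assumes, purely for illustration, that the permitted region is an axis-aligned box and that tool positions are (x, y, z) tuples in millimetres. The function name `clamp_to_boundary` is hypothetical.

```python
def clamp_to_boundary(position, lower, upper):
    """Return the commanded tool position limited to a box-shaped region.

    position, lower and upper are (x, y, z) tuples in millimetres.
    Assumption: the virtual boundary is an axis-aligned box; a real
    system would likely use a patient-specific surface instead.
    """
    return tuple(
        min(max(p, lo), hi)  # pull each coordinate back inside [lo, hi]
        for p, lo, hi in zip(position, lower, upper)
    )


# A command that overshoots in x and undershoots in y is pulled back
# to the nearest point inside the permitted box.
safe = clamp_to_boundary((5.0, -2.0, 1.0), (0.0, 0.0, 0.0), (3.0, 3.0, 3.0))
print(safe)
```

In a haptic system the difference between the commanded and the clamped position would typically drive a resisting force felt by the surgeon; that part is omitted here.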

Ganymed has already carried out a clinical study of the bone-localisation technology on 100 patients, and Cahen said it achieved the desired precision.

‘We were extremely pleased with the results – they exceeded our expectations,’ she said.

Now the firm is performing studies on the TKA procedure, with hopes that the robot will be fully available commercially by the end of 2025 and become a mainstream tool used globally.

‘We want to make it affordable and accessible, so as to democratise access to quality care and surgery,’ said Cahen.

Microscopic matters

Robots are being explored not only for orthopaedics but also for highly complex surgery at the microscopic level.

The EU-funded MEETMUSA project has been further developing what it describes as the world’s first surgical robot for microsurgery certified under the EU’s ‘CE’ regulatory regime.

Called MUSA, the small, lightweight robot is attached to a platform equipped with arms able to hold and manipulate microsurgical instruments with a high degree of precision. The platform is suspended above the patient during an operation and is controlled by the surgeon through specially adapted joysticks.

In a 2020 study, surgeons reported using MUSA to treat breast-cancer-related lymphedema – a chronic condition that commonly occurs as a side effect of cancer treatment and is characterised by a swelling of body tissues as a result of a build-up of fluids.

MUSA’s robotic arms. Microsure BV, 2022

To carry out the surgery, the robot successfully sutured – or connected – tiny lymph vessels measuring 0.3 to 0.8 millimetres in diameter to nearby veins in the affected area.

‘Lymphatic vessels are below 1 mm in diameter, so it requires a lot of skill to do this,’ said Tom Konert, who leads MEETMUSA and is a clinical field specialist at robot-assisted medical technology company Microsure in Eindhoven, the Netherlands. ‘But with robots, you can more easily do it. So far, with regard to the clinical outcomes, we see really nice results.’

Steady hands

When such delicate operations are conducted manually, they are affected by slight shaking in the hands, even with highly skilled surgeons, according to Konert. With the robot, this problem can be avoided.

MUSA can also significantly scale down the surgeon’s general hand movements rather than simply repeating them one-to-one, allowing for even greater accuracy than with conventional surgery.

‘When a signal is created with the joystick, we have an algorithm that will filter out the tremor,’ said Konert. ‘It downscales the movement as well. This can be by a factor-10 or 20 difference and gives the surgeon a lot of precision.’
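The two steps Konert describes, filtering out tremor and downscaling the movement, can be sketched in a few lines. The article does not say which filter MUSA uses, so this example assumes a simple exponential moving average as the low-pass filter. The function name `filter_and_scale` and the parameters `alpha` and `scale` are hypothetical, with `scale=0.1` corresponding to the factor-10 reduction mentioned in the quote.

```python
def filter_and_scale(samples, alpha=0.2, scale=0.1):
    """Smooth joystick samples, then shrink them for the instrument.

    samples: joystick displacements along one axis (e.g. in mm).
    alpha:   smoothing weight in (0, 1]; smaller values filter harder.
    scale:   motion-scaling factor; 0.1 means 10 mm of hand motion
             becomes 1 mm of instrument motion.
    Assumption: an exponential moving average stands in for whatever
    tremor filter the real system uses.
    """
    if not samples:
        return []
    smoothed = samples[0]
    output = []
    for x in samples:
        # Low-pass step: blend the new sample with the running estimate.
        smoothed = alpha * x + (1 - alpha) * smoothed
        # Scaling step: shrink the filtered hand motion.
        output.append(smoothed * scale)
    return output


# A steady 10 mm displacement with a +/-1 mm tremor superimposed.
hand = [10.0 + (1.0 if i % 2 == 0 else -1.0) for i in range(60)]
tool = filter_and_scale(hand)
print(max(tool[-10:]) - min(tool[-10:]))  # residual ripple at the tool tip
```

Scaling alone would reduce the 2 mm peak-to-peak tremor to 0.2 mm; the filter reduces it further, at the cost of a small lag in following intentional movements.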

In addition to treating lymphedema, the current version of MUSA – the second, after a previous prototype – has been used for other procedures including nerve repair and soft-tissue reconstruction of the lower leg.

Next generation

Microsure is now developing a third version of the robot, MUSA-3, which Konert expects to become the first one available on a widespread commercial basis.

This new version will have various upgrades, such as better sensors to enhance precision and improved manoeuvrability of the robot’s arms. It will also be mounted on a cart with wheels rather than a fixed table to enable easy transport within and between operating theatres.

Furthermore, the robots will be used with exoscopes – a novel high-definition digital camera system. This will allow the surgeon to view a three-dimensional screen through goggles in order to perform ‘heads-up microsurgery’ rather than the less-comfortable process of looking through a microscope.

Konert is confident that MUSA-3 will be widely used across Europe and the US before a 2029 target date.

‘We are currently finalising product development and preparing for clinical trials of MUSA-3,’ he said. ‘These studies will start in 2024, with approvals and start of commercialisation scheduled for 2025 to 2026.’

MEETMUSA is also looking into the potential of artificial intelligence (AI) to further enhance robots. However, Konert believes that the aim of AI solutions may be to guide surgeons towards their goals and support them in excelling rather than achieving completely autonomous surgery.

‘I think the surgeon will always be there in the feedback loop, but these tools will definitely help the surgeon perform at the highest level in the future,’ he said.

Research in this article was funded via the EU’s European Innovation Council (EIC).

This article was originally published in Horizon, the EU Research and Innovation magazine.

Robot Talk Episode 44 – Kat Thiel

Claire chatted to Kat Thiel from Manchester Metropolitan University all about collaborative robots, micro-factories, and fashion manufacturing.

Kat Thiel is a Senior Research Associate at Manchester Metropolitan University’s Manchester Fashion Institute with a research focus on Fashion Practice Research and Industry 4.0, investigating agile cobotic tooling solutions for localised fashion manufacturing. Previously a researcher at the Royal College of Art, she worked on the Future Fashion Factory report ‘Benchmarking the Feasibility of the Micro-Factory Model for the UK Fashion Industry’ and co-produced the highly influential report ‘Reshoring UK Garment Manufacturing with Automation’ with Innovate UK KTN.