Source: OpenAI’s DALL·E 2 with prompt “a hyperrealistic picture of a robot reading the news on a laptop at a coffee shop”

Welcome to the second edition of Robo-Insight, a biweekly robotics news update! In this post, we share a range of recent advancements in the field, covering progress in hazard mapping, surface crawling, pump controls, adaptive gripping, surgery, health assistance, and mineral extraction. These developments exemplify the continuous evolution and potential of robotics technology.

Advancing hazard mapping through robot collaboration

In the domain of hazard mapping, researchers have developed a collaborative scheme in which ground and aerial robots jointly map contaminated areas. Using a heterogeneous coverage control technique, the team improved the quality of density maps and lowered estimation errors. Unlike homogeneous alternatives, the strategy deploys each robot according to its own characteristics, producing better estimates and shorter operation times. The study has important implications for hazard response, since it enables collaborative robot systems to map hazardous compounds more effectively and precisely.

An environment where the model is simulated. Source.

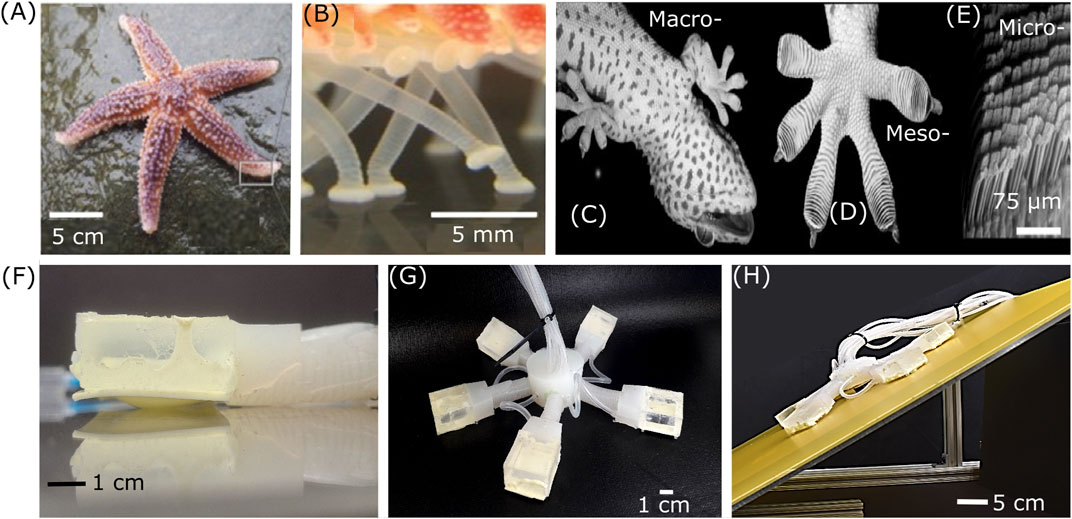
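To make the idea of heterogeneous coverage more concrete, here is a minimal sketch of one common formulation: a weighted Voronoi (power diagram) partition followed by a Lloyd-style move toward the density-weighted centroid of each robot's cell. This is not the authors' algorithm. The function name `weighted_lloyd_step`, the per-robot `weights`, the `gain`, and the example density are all assumptions made for illustration.

```python
import numpy as np

def weighted_lloyd_step(positions, weights, grid, density, gain=0.5):
    """One illustrative coverage update on a discretized area.

    positions: (n, 2) robot positions
    weights:   (n,) hypothetical capability weights (larger weight = larger cell)
    grid:      (m, 2) sample points covering the area
    density:   (m,) non-negative importance (e.g. estimated contamination)
    """
    positions = np.asarray(positions, dtype=float)
    weights = np.asarray(weights, dtype=float)

    # Power-diagram assignment: each sample point goes to the robot
    # that minimizes squared distance minus that robot's weight.
    sq_dist = ((grid[:, None, :] - positions[None, :, :]) ** 2).sum(axis=2)
    owner = np.argmin(sq_dist - weights[None, :], axis=1)

    new_positions = positions.copy()
    for i in range(len(positions)):
        mask = owner == i
        mass = density[mask].sum()
        if mass > 0:
            # Move part of the way toward the density-weighted centroid.
            centroid = (grid[mask] * density[mask, None]).sum(axis=0) / mass
            new_positions[i] += gain * (centroid - positions[i])
    return new_positions, owner

# Example: a unit square with a hazard hotspot near (0.7, 0.7).
xs, ys = np.meshgrid(np.linspace(0, 1, 20), np.linspace(0, 1, 20))
grid = np.column_stack([xs.ravel(), ys.ravel()])
density = np.exp(-((grid - np.array([0.7, 0.7])) ** 2).sum(axis=1) / 0.02)

positions = np.array([[0.2, 0.2], [0.8, 0.3], [0.5, 0.9]])
weights = np.array([0.0, 0.0, 0.05])  # third robot stands in for an aerial unit

for _ in range(30):
    positions, owner = weighted_lloyd_step(positions, weights, grid, density)
print(positions)
```

Giving one robot a larger weight enlarges its cell, which is one simple way to encode that an aerial robot can cover more ground than a ground robot. A homogeneous scheme corresponds to setting all weights equal.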

A new bioinspired crawler robot

And speaking of ground robots, researchers from Carnegie Mellon University have created a soft robot that draws on both sea stars and geckos. The robot crawls more effectively on different surfaces, including slopes, by using limb motion inspired by sea stars and adhesive patches inspired by geckos. Pneumatic actuators and specially designed gecko patches give it the ability to adhere to surfaces and navigate. This breakthrough in soft robotics holds potential for a wide range of applications, particularly in aquatic environments.

Bioinspiration images. Source.

New pumps for soft robots used for cocktails

Also in relation to soft robotics, Harvard University researchers have created a compact, soft peristaltic pump that addresses a major challenge in the field: bulky and rigid power components. Driven by electrically operated dielectric elastomer actuators, the pump can handle fluids of various viscosities and offers adjustable pressure and flow. It can be used to make cocktails, but its small size and adaptability also make it suitable for manufacturing, biological therapies, and food handling. The advancement creates new opportunities for soft robots to carry out delicate jobs and maneuver through challenging conditions.

The soft pump that can power the robots. Source: Harvard

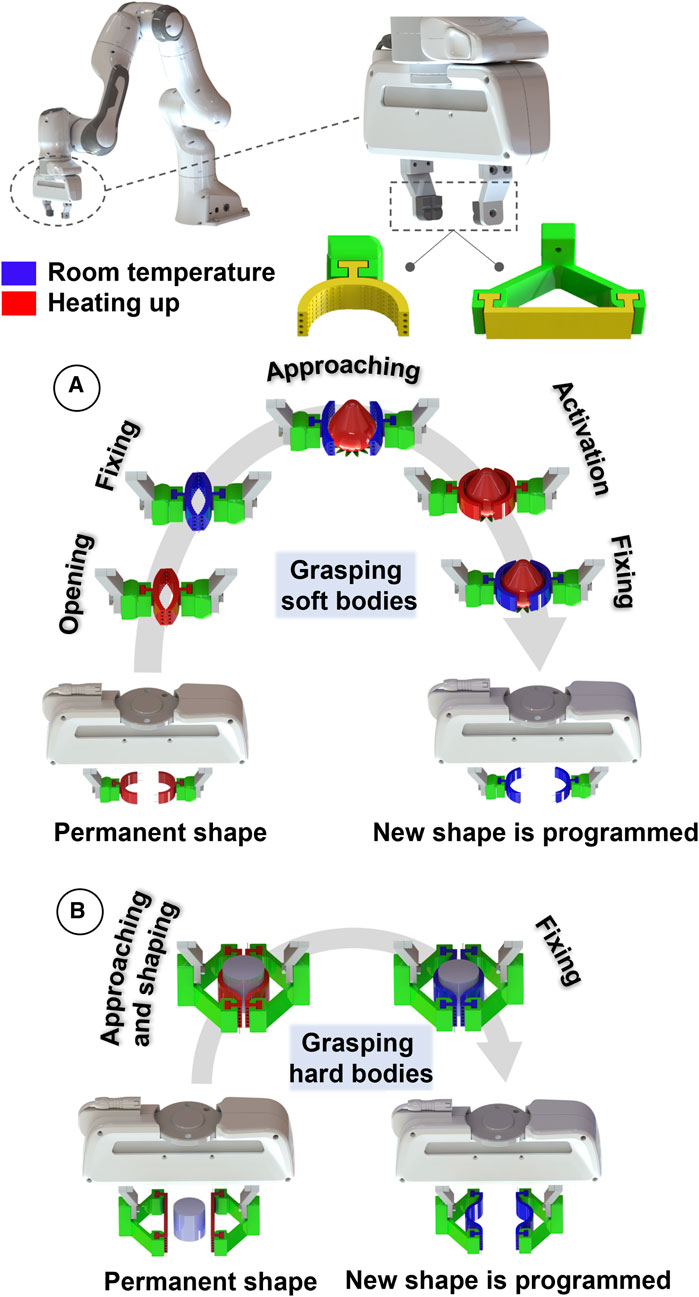

Robotic fingertips with shape-shifting capabilities

Shifting our focus to robotic gripping, researchers from Brubotics, Vrije Universiteit Brussel, and Imec have used vitrimeric shape memory polymers to create shape-adaptive fingertips for robotic grippers. Under particular conditions, these polymers can reversibly change their mechanical properties. The fingertips take a curled shape for delicate objects and a straight shape for rigid ones. They are programmed by heating them above their glass transition temperature and reshaping them with external forces. The researchers showed that the fingertips can grasp and move objects of various shapes, showing promise for adaptive sorting and production lines.

The shape adaptation process. Source

ChatGPT used as a key tool for advancing robotic surgery

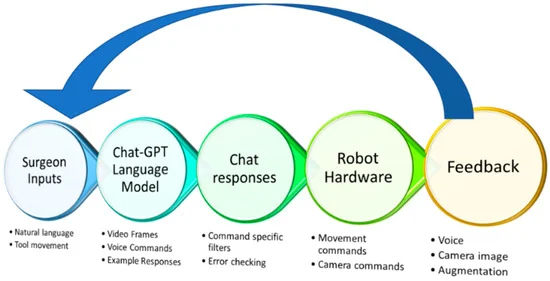

In the field of robotic surgery, researchers at Wayne State University recently developed a ChatGPT-enabled interface to improve the accessibility and functionality of the da Vinci Surgical Robot. The ChatGPT language model lets the system understand and respond to the surgeon’s natural language commands. The implementation supports commands such as tracking surgical tools, locating tools, taking photos, and starting or stopping video recording, giving the surgeon a straightforward way to interact with the robot. Although the system’s accuracy and usefulness showed promise, issues remain, including network latency, errors, and control over the model’s replies. Additional research and development is needed to assess the long-term effects and potential impact of natural language interfaces in surgical settings.

This shows the process the model goes through. Source
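One way to picture the "control over model replies" issue is a dispatcher that only acts on an allowlisted set of command labels. The sketch below is purely illustrative and is not the Wayne State implementation: `interpret` is a keyword-matching stand-in for the language model, and the label names and handler bodies are hypothetical, loosely based on the commands listed above.

```python
from typing import Callable, Dict, List

def make_handlers(log: List[str]) -> Dict[str, Callable[[], None]]:
    """Hypothetical handlers; a real system would call the robot's own API."""
    return {
        "track_tool": lambda: log.append("tracking tool"),
        "locate_tool": lambda: log.append("locating tool"),
        "take_photo": lambda: log.append("photo taken"),
        "start_recording": lambda: log.append("recording started"),
        "stop_recording": lambda: log.append("recording stopped"),
    }

def interpret(utterance: str) -> str:
    """Stand-in for the language model: maps text to a command label."""
    text = utterance.lower()
    rules = [
        (("stop", "record"), "stop_recording"),
        (("record",), "start_recording"),
        (("photo",), "take_photo"),
        (("picture",), "take_photo"),
        (("track",), "track_tool"),
        (("locate",), "locate_tool"),
        (("where",), "locate_tool"),
    ]
    for keywords, label in rules:
        if all(k in text for k in keywords):
            return label
    return "unknown"

def dispatch(label: str, handlers: Dict[str, Callable[[], None]]) -> bool:
    """Run the handler only if the label is allowlisted; otherwise do nothing."""
    handler = handlers.get(label)
    if handler is None:
        return False
    handler()
    return True

log: List[str] = []
handlers = make_handlers(log)
for spoken in ["Track the left tool", "Take a picture", "Open the gripper"]:
    label = interpret(spoken)
    print(spoken, "->", label, "| executed:", dispatch(label, handlers))
```

Keeping the interpretation step separate from the dispatch step means an unexpected model reply is rejected before it can reach the robot.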

Wearable robot that could act as a personal health assistant

And speaking of robots in healthcare, researchers from the University of Maryland have developed Calico, a small wearable robot that attaches to clothing and performs various health assistance tasks. Weighing just 18 grams, Calico can act as a stethoscope, monitor vital signs, and guide users through fitness routines. Neodymium magnets embedded in the clothing track let the robot determine its location and plan a path across the body. With a 20-gram payload capacity and speeds of up to 227 mm/s, Calico shows promise for future healthcare monitoring and assistance.

Calico over clothing. Source: University of Maryland

Swiss robots join forces for mineral exploration

Finally, in the realm of lunar material extraction, Swiss engineers from ETH Zurich are developing legged robots and preparing them for mineral prospecting missions to the moon. The researchers are teaching the robots to work as a team so that they remain useful even when faults occur. The team intends to maximize productivity and compensate for individual shortcomings by combining specialist robots with a generalist robot outfitted with a variety of measuring and analytical tools. The researchers also plan to improve the robots’ autonomy, allowing them to delegate tasks to one another while still preserving control and intervention options for operators.

An image of the trio of legged robots during a test in a Swiss gravel quarry. (Photograph: ETH Zurich / Takahiro Miki) Source: ETH Zurich

These recent advancements across different domains demonstrate the diverse and evolving nature of robotics technology, opening up new possibilities for applications in various industries. The continuous progress in robotics showcases the innovative efforts and potential impact that these technologies hold for the future.

Sources:

- Agung Nugroho Jati, Bambang Riyanto Trilaksono, Muhammad, E., & Widyawardana Adiprawita. (2023). Collaborative ground and aerial robots in hazard mapping based on heterogeneous coverage.

- Acharya, S., Roberts, P., Rane, T., Singhal, R., Hong, P., Ranade, V., Majidi, C., Webster-Wood, V., & Reeja-Jayan, B. (2023, June 16). Gecko adhesion based sea star crawler robot.

- Pump powers soft robots, makes cocktails. (n.d.). Seas.harvard.edu. Retrieved July 19, 2023.

- Kashef Tabrizian, S., Alabiso, W., Shaukat, U., Terryn, S., Rossegger, E., Brancart, J., Legrand, J., Schlögl, S., & Vanderborght, B. (2023, June 30). Vitrimeric shape memory polymer-based fingertips for adaptive grasping. Frontiers.

- Pandya, A. (2023). ChatGPT-Enabled daVinci Surgical Robot Prototype: Advancements and Limitations. Robotics, 12(4), 97.

- A Wearable Robotic Assistant That’s All Over You. (n.d.). Robotics.umd.edu. Retrieved July 19, 2023.

- Robot team on lunar exploration tour. (2023, July 12).