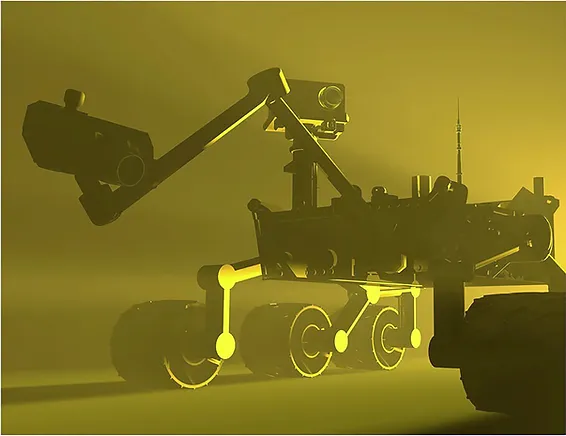

The Strange: Scifi Mars robots meet real-world bounded rationality

Even with the addition of a strange mineral, robots still obey the principle of bounded rationality in artificial intelligence set forth by Herb Simon.

I cover bounded rationality in my Science Robotics review (image courtesy of @SciRobotics) but I am adding some more details here.

Did you like the Western True Grit? Classic scifi like The Martian Chronicles? Scifi horror like Annihilation? Steampunk? How about robots? If you answered yes to any or all of the above, The Strange by Nathan Ballingrud is for you! It’s a captivating book. And as a bonus, it’s a great example of the real-world principle of bounded rationality.

First off, let’s talk about the book. The Strange is set in a counterfactual Confederate States of America colony on Mars circa the 1930s, evocative of Ray Bradbury’s The Martian Chronicles. The colony makes money by mining the Strange, a green mineral that amplifies the sapience and sentience of the steampunk robots called Engines. The planet can support human life, though conditions are tenuous. The colony is self-sufficient, but all communication with Earth has suddenly stopped without explanation, and its long-term survival is now in question. The novel’s protagonist is Annabel Crisp, a delightfully frank and unfiltered 13-year-old heroine straight out of Charles Portis’ classic Western novel, True Grit. She and Watson, the dishwashing Engine from her parents’ small restaurant, embark on a dangerous trek to recover stolen property and right a plethora of wrongs. Along the way, they deal with increasingly unfriendly humans and Engines.

It’s China Miéville’s New Weird meets the Wild West.

Really.

What makes The Strange different from other horror novels is that the Engines (and humans) don’t exceed their intrinsic capabilities; instead, the mineral focuses or concentrates their existing capabilities. In humans, it amplifies the deepest aspects of character: a coward becomes more clever at being a coward, and a person determined to return to Earth will go to unheard-of extremes. Yet no one does anything they weren’t already capable of. In robots, it adds a veneer of personality and the ability to converse in natural language, but Engines are still limited by their physical capabilities and intrinsic software functionality.

The novel indirectly illustrates important real world concepts in machine intelligence and bounded rationality:

- One is that intelligence in robotics is tailored to the task it is designed for. While the public imagines a general-purpose artificial intelligence that can be applied to any task or work domain, robotics focuses on developing the forms of intelligence needed to accomplish specific tasks. For example, a factory robot may need to learn to mimic (and improve on) how a person performs a function, but it doesn’t need to be like Sophia and speak to audiences about the role of artificial intelligence in society.

- Another important distinction is that the public often conflates four related but separate concepts: sapience (intelligence, expertise), sentience (feelings, self-awareness), autonomy (the ability to adapt how a task or mission is executed), and initiative (the ability to modify or discard the task or mission to meet the objectives). In sci-fi, a robot may have all four, but in real life robots typically have very narrow sapience, no sentience, autonomy limited to the tasks they are designed for, and no initiative.

These concepts fall under a larger idea first proposed in the 1950s by Herb Simon, a Nobel Prize winner in economics and a founder of the field of artificial intelligence: bounded rationality. Bounded rationality states that all decision-making agents, be they human or machine, are limited by their computational resources (IQ, compute hardware, etc.), by time, and by information (either too much or too little). For AI and robots, the limits also include the algorithms, that is, the core programming. Even humans with high IQs make dumb decisions when they are tired, hungry, misinformed, or stressed. And no matter how smart they are, they stay within the constraints of their innate abilities. Only in fiction do people suddenly exceed their innate capabilities, and usually that takes something like a radioactive spider bite.
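Simon paired bounded rationality with the idea of satisficing: accepting an option that is good enough instead of searching exhaustively for the best one. The Python sketch below is an illustrative toy, not code from the novel, the review, or any robot; the function name `satisfice`, the `aspiration` level, and the evaluation `budget` are hypothetical stand-ins for an agent’s limited time and compute.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


@dataclass
class Decision:
    choice: Optional[object]  # option the agent settled on (None if nothing was evaluated)
    value: float              # utility of that option
    evaluations: int          # how much of the budget was spent


def satisfice(options: Iterable[T], utility: Callable[[T], float],
              aspiration: float, budget: int) -> Decision:
    """Hypothetical bounded agent: stop at the first option that meets the
    aspiration level, or return the best option seen when the budget runs out."""
    best, best_value, used = None, float("-inf"), 0
    for option in options:
        if used >= budget:        # out of computational resources
            break
        value = utility(option)
        used += 1
        if value > best_value:
            best, best_value = option, value
        if value >= aspiration:   # good enough: stop searching
            break
    return Decision(best, best_value, used)
```

With options `[3, 7, 9, 10]` and an aspiration of 8, the agent stops at 9 after three evaluations and never looks at 10; cut the budget to two evaluations and it settles for 7. Nothing in the sketch lets the agent do better than its search procedure and budget allow, which is the point of bounded rationality.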

What would our real-world robots grow into if they were suddenly smarter? Would they become obsessive about a task, ignoring humans altogether and possibly injuring or even killing them as they went about their work, committing OSHA violations, so to speak? Would they hunt us down and kill us in one of the myriad ways detailed in Robopocalypse? Or would they deliver inventory, meals, and medicine with the kindly charm of an old-fashioned mailman? Would the social manipulation in healthcare and tutoring robots become real sentience, real caring?

Bounded rationality says it depends on us. The robots will simply do whatever we programmed them to do, within the limits of their hardware and the situation. Of course, that’s the problem: our programming is imperfect, and we have trouble anticipating consequences. But for now, even if there were the Strange on Mars, Curiosity and Perseverance would keep on keeping on. And a big shout-out to NASA: it’s hard to imagine how those rovers could work better than they already do.

Pick up a copy of The Strange; it’s a great read. Also pick up Herb Simon’s The Sciences of the Artificial. And don’t forget my books too! You can learn more about bounded rationality in science fiction here and in my textbook Introduction to AI Robotics, and find more science fiction that illustrates how robots work in Robotics Through Science Fiction and Learn AI and Human-Robot Interaction through Asimov’s I, Robot Stories.

3D display could soon bring touch to the digital world

Copyright: Brian Johnson

Researchers at the Max Planck Institute for Intelligent Systems and the University of Colorado Boulder have developed a soft shape display, a robot that can rapidly and precisely change its surface geometry to interact with objects and liquids, react to human touch, and display letters and numbers – all at the same time. The display demonstrates high-performance applications and could appear in the future on the factory floor, in medical laboratories, or in your own home.

Imagine an iPad that’s more than just an iPad—with a surface that can morph and deform, allowing you to draw 3D designs, create haiku that jump out from the screen and even hold your partner’s hand from an ocean away.

That’s the vision of a team of engineers from the University of Colorado Boulder (CU Boulder) and the Max Planck Institute for Intelligent Systems (MPI-IS) in Stuttgart, Germany. In a new study published in Nature Communications, they’ve created a one-of-a-kind shape-shifting display that fits on a card table. The device is made from a 10-by-10 grid of soft robotic “muscles” that can sense outside pressure and pop up to create patterns. It’s precise enough to generate scrolling text and fast enough to shake a chemistry beaker filled with fluid.
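To make “scrolling text” on a 10-by-10 grid concrete, here is a minimal Python sketch that treats the grid as a binary height map (1 = actuator raised, 0 = flat) and slides a bitmap message across it one column per frame. The glyph shapes, the choice of rows, and the `frame`/`scroll` helpers are hypothetical; the study’s actual control scheme is not described here, and the real actuators need not be strictly on or off.

```python
from typing import Dict, List

# Hypothetical 5-row glyphs (1 = raised pixel); the display's real font is not described.
GLYPHS: Dict[str, List[str]] = {
    "H": ["1001", "1001", "1111", "1001", "1001"],
    "I": ["111", "010", "010", "010", "111"],
    " ": ["0", "0", "0", "0", "0"],
}

ROWS, COLS = 10, 10  # the 10-by-10 actuator grid described in the article
TOP = 2              # first grid row used for text (assumed)


def text_to_columns(text: str) -> List[List[int]]:
    """Flatten a message into 5-pixel columns, with one blank column after each glyph."""
    columns: List[List[int]] = []
    for ch in text:
        glyph = GLYPHS[ch]
        for c in range(len(glyph[0])):
            columns.append([int(row[c]) for row in glyph])
        columns.append([0] * len(glyph))
    return columns


def frame(columns: List[List[int]], offset: int) -> List[List[int]]:
    """Height map for one frame; the message is shifted left by `offset` columns."""
    grid = [[0] * COLS for _ in range(ROWS)]
    for x in range(COLS):
        src = x + offset
        if 0 <= src < len(columns):
            for y, bit in enumerate(columns[src]):
                grid[TOP + y][x] = bit
    return grid


def scroll(text: str) -> List[List[List[int]]]:
    """All frames for the message to enter on the right and leave on the left."""
    columns = text_to_columns(text)
    return [frame(columns, off) for off in range(-COLS, len(columns) + 1)]
```

Each frame would then be sent to the actuators in turn; how fast the frames can change is bounded by how quickly each actuator can rise and fall.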

It may also deliver something even rarer: the sense of touch in a digital age.

“As technology has progressed, we started with sending text over long distances, then audio and later video,” said Brian Johnson, one of two lead authors of the new study who earned his doctorate in mechanical engineering at CU Boulder in 2022 and is now a postdoctoral researcher at the Max Planck Institute for Intelligent Systems. “But we’re still missing touch.”

The innovation builds off a class of soft robots pioneered by a team led by Christoph Keplinger, formerly an assistant professor of mechanical engineering at CU Boulder and now a director at MPI-IS. They’re called Hydraulically Amplified Self-Healing ELectrostatic (HASEL) actuators. The prototype display isn’t ready for the market yet. But the researchers envision that, one day, similar technologies could lead to sensory gloves for virtual gaming or a smart conveyor belt that can undulate to sort apples from bananas.

“You could imagine arranging these sensing and actuating cells into any number of different shapes and combinations,” said Mantas Naris, co-lead author of the paper and a doctoral student in the Paul M. Rady Department of Mechanical Engineering. “There’s really no limit to what these technologies could, ultimately, lead to.”

Playing the accordion

The project has its origins in the search for a different kind of technology: synthetic organs.

In 2017, researchers led by Mark Rentschler, professor of mechanical engineering and biomedical engineering, secured funding from the National Science Foundation to develop what they call sTISSUE—squishy organs that behave and feel like real human body parts but are made entirely out of plastic-like materials.

“You could use these artificial organs to help develop medical devices or surgical robotic tools for much less cost than using real animal tissue,” said Rentschler, a co-author of the new study.

In developing that technology, however, the team landed on the idea of a tabletop display.

The group’s design is about the size of a Scrabble game board and, like one of those boards, is composed of small squares arranged in a grid. In this case, each of the 100 squares is an individual HASEL actuator. The actuators are made of plastic pouches shaped like tiny accordions. When a voltage is applied to them, fluid shifts around inside the pouches, causing the accordion to expand and jump up.

The actuators also include soft, magnetic sensors that can detect when you poke them. That allows for some fun activities, said Johnson.

“Because the sensors are magnet-based, we can use a magnetic wand to draw on the surface of the display,” he said.
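One simple way such a grid could turn sensor readings into touches is to compare each cell’s reading with a resting baseline and flag cells whose change exceeds a threshold. The Python sketch below assumes exactly that; the baseline calibration, the `threshold` value, and the `detect_touches`/`draw` helpers are hypothetical, and the article does not say how the team processes its magnetic sensor signals.

```python
from typing import List, Set, Tuple

Cell = Tuple[int, int]


def detect_touches(baseline: List[List[float]], reading: List[List[float]],
                   threshold: float) -> Set[Cell]:
    """Return (row, col) cells whose reading differs from the resting baseline by
    more than `threshold`, e.g. a finger poke or a nearby magnetic wand."""
    touched: Set[Cell] = set()
    for r, (base_row, read_row) in enumerate(zip(baseline, reading)):
        for c, (b, v) in enumerate(zip(base_row, read_row)):
            if abs(v - b) > threshold:
                touched.add((r, c))
    return touched


def draw(canvas: List[List[int]], touched: Set[Cell]) -> None:
    """Latch touched cells as raised so a wand stroke accumulates over time."""
    for r, c in touched:
        canvas[r][c] = 1
```

Calling `detect_touches` on every new sensor frame and feeding the result to `draw` would leave a raised trail wherever the wand passes; using an absolute difference catches changes in either direction of the field.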

Hear that?

Other research teams have developed similar smart tablets, but the CU Boulder display is softer, takes up a lot less room and is much faster. Each of its robotic muscles can move up to 3,000 times per minute.
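For a sense of scale, here is the quoted rate expressed as a frequency, assuming that one “move” means one complete up-and-down cycle:

$$
f = \frac{3000\ \text{cycles}}{60\ \text{s}} = 50\ \text{Hz}, \qquad T = \frac{1}{f} = \frac{1}{50\ \text{Hz}} = 20\ \text{ms}
$$

Under that assumption, each actuator could complete a cycle roughly every 20 milliseconds.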

The researchers are focusing now on shrinking the actuators to increase the resolution of the display—almost like adding more pixels to a computer screen.

“Imagine if you could load an article onto your phone, and it renders as Braille on your screen,” Naris said.
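Naris’s Braille example shows why resolution matters: a standard Braille cell is a 3-by-2 arrangement of dots, so each character needs six independently raised pins. The Python sketch below maps the letters a through j to their standard six-dot patterns and lays them out as a pin map. Treating each pin as one actuator and leaving a one-pin gap between cells are assumptions for illustration, not the team’s design.

```python
from typing import Dict, List, Set

# Standard six-dot Braille patterns for a-j. Dots 1-3 run down the left column,
# dots 4-6 down the right column. Other letters are omitted to keep the sketch short.
BRAILLE: Dict[str, Set[int]] = {
    "a": {1}, "b": {1, 2}, "c": {1, 4}, "d": {1, 4, 5}, "e": {1, 5},
    "f": {1, 2, 4}, "g": {1, 2, 4, 5}, "h": {1, 2, 5}, "i": {2, 4}, "j": {2, 4, 5},
}

# (row, col) of each dot inside a 3-row by 2-column cell
DOT_POS = {1: (0, 0), 2: (1, 0), 3: (2, 0), 4: (0, 1), 5: (1, 1), 6: (2, 1)}


def render_braille(word: str) -> List[List[int]]:
    """Return a 3-row pin map (1 = pin raised), one flat column between cells."""
    width = 3 * len(word) - 1 if word else 0
    pins = [[0] * width for _ in range(3)]
    for i, ch in enumerate(word.lower()):
        for dot in BRAILLE[ch]:
            r, c = DOT_POS[dot]
            pins[r][3 * i + c] = 1
    return pins
```

Rendering “bad” already needs a strip 3 pins tall and 8 pins wide, which hints at how much the actuators would have to shrink before a phone-sized surface could show a useful amount of Braille.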

The group is also working to flip the display inside out. That way, engineers could design a glove that pokes your fingertips, allowing you to “feel” objects in virtual reality.

And, Rentschler said, the display can bring something else: a little peace and quiet. “Our system is, essentially, silent. The actuators make almost no noise.”

Other CU Boulder co-authors of the new study include Nikolaus Correll, associate professor in the Department of Computer Science; Sean Humbert, professor of mechanical engineering; mechanical engineering graduate students Vani Sundaram, Angella Volchko and Khoi Ly; and alumni Shane Mitchell, Eric Acome and Nick Kellaris. Christoph Keplinger also served as a co-author in both of his roles at CU Boulder and MPI-IS.

Can charismatic robots help teams be more creative?

Image/Shutterstock.com

By Angharad Brewer Gillham, Frontiers science writer

Increasingly, social robots are being used for support in educational contexts. But does the sound of a social robot affect how well they perform, especially when dealing with teams of humans? Teamwork is a key factor in human creativity, boosting collaboration and new ideas. Danish scientists set out to understand whether robots using a voice designed to sound charismatic would be more successful as team creativity facilitators.

“We had a robot instruct teams of students in a creativity task. The robot either used a confident, passionate (i.e., charismatic) tone of voice or a normal, matter-of-fact tone of voice,” said Dr Kerstin Fischer of the University of Southern Denmark, corresponding author of the study in Frontiers in Communication. “We found that when the robot spoke in a charismatic speaking style, students’ ideas were more original and more elaborate.”

Can a robot be charismatic?

We know that social robots acting as facilitators can boost creativity, and that the success of facilitators is at least partly dependent on charisma: people respond to charismatic speech by becoming more confident and engaged. Fischer and her colleagues aimed to see if this effect could be reproduced with the voices of social robots by using a text-to-speech function engineered for characteristics associated with charismatic speaking, such as a specific pitch range and way of stressing words. Two voices were developed, one charismatic and one less expressive, based on a range of parameters which correlate with perceived speaker charisma.
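The article describes the charismatic voice only in terms of features such as pitch range and word stress. One common way to request such features from a text-to-speech engine is W3C SSML markup, whose `<prosody>` element accepts `pitch`, `range`, and `rate` attributes and whose `<emphasis>` element marks stressed words. The Python sketch below builds SSML for two voice profiles. We don’t know whether the researchers used SSML at all; the percentages are invented placeholders rather than the parameters from the study, and engine support for attributes such as `range` varies.

```python
from typing import Sequence
from xml.sax.saxutils import escape

# Hypothetical prosody profiles; the percentages are placeholders, not the study's values.
VOICES = {
    "charismatic": {"pitch": "+10%", "range": "+40%", "rate": "105%"},
    "neutral": {"pitch": "+0%", "range": "-20%", "rate": "100%"},
}


def to_ssml(text: str, voice: str, stressed: Sequence[str] = ()) -> str:
    """Wrap text in a minimal SSML <prosody> element for the chosen voice profile.
    In the charismatic profile, words listed in `stressed` get strong <emphasis>."""
    p = VOICES[voice]
    words = []
    for word in text.split():
        token = escape(word)  # escape &, <, > so the markup stays well-formed
        if voice == "charismatic" and word.strip(".,!?") in stressed:
            token = f'<emphasis level="strong">{token}</emphasis>'
        words.append(token)
    return (
        f'<speak><prosody pitch="{p["pitch"]}" range="{p["range"]}" rate="{p["rate"]}">'
        + " ".join(words)
        + "</prosody></speak>"
    )
```

For example, `to_ssml("There are no bad ideas!", "charismatic", ("no",))` raises the pitch, widens the pitch range, and stresses “no”, while the neutral profile produces flatter, unstressed output for the same sentence.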

The scientists recruited five classes of university students, all taking courses which included an element of team creativity. The students were told that they were testing a creativity workshop, which involved brainstorming ideas based on images and then using those ideas to come up with a new chocolate product. The workshop was led by videos of a robot speaking: introducing the task, reassuring the teams of students that there were no bad ideas, and then congratulating them for completing the task and asking them to fill out a self-evaluation questionnaire. The questionnaire evaluated the robot’s performance, the students’ own views on how their teamwork went, and the success of the session. The researchers also measured the creativity of each session in terms of the number of original ideas produced and how elaborate those ideas were.
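The article does not spell out how originality and elaboration were scored. A widely used convention in creativity research counts an idea as original when few others also produce it (statistical infrequency) and scores elaboration by how much detail is added to each idea. The Python sketch below applies that convention to made-up team data; the data layout, the `rare_cutoff` parameter, and the `score_teams` helper are assumptions, not the study’s protocol.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TeamScore:
    fluency: int        # number of distinct ideas the team produced
    originality: int    # ideas produced by at most `rare_cutoff` teams
    elaboration: float  # mean number of details added per idea


def score_teams(ideas: Dict[str, Dict[str, List[str]]],
                rare_cutoff: int = 1) -> Dict[str, TeamScore]:
    """`ideas` maps team -> {idea label -> details the team added to that idea}."""
    # How many teams came up with each idea label (dict keys count once per team).
    counts = Counter(label for team_ideas in ideas.values() for label in team_ideas)
    scores: Dict[str, TeamScore] = {}
    for team, team_ideas in ideas.items():
        n = len(team_ideas)
        original = sum(1 for label in team_ideas if counts[label] <= rare_cutoff)
        detail = sum(len(d) for d in team_ideas.values()) / n if n else 0.0
        scores[team] = TeamScore(n, original, detail)
    return scores
```

With two teams that both propose a mint bar, only the idea one team came up with alone (say, a chili truffle) counts toward originality, so the score depends on the whole sample of teams rather than on any single session.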

Powering creativity with charisma

The group that heard the charismatic voice rated the robot more positively, finding it more charismatic and interactive. They rated their teamwork more highly and produced more original and elaborate ideas. However, the group that heard the non-charismatic voice perceived themselves as more resilient and efficient, even though they produced fewer ideas, possibly because a less charismatic leader prompted the team members to organize themselves better.

“I had suspected that charismatic speech has very important effects, but our study provides clear evidence for the effect of charismatic speech on listener creativity,” said Dr Oliver Niebuhr of the University of Southern Denmark, co-author of the study. “This is the first time that such a link between charismatic voices, artificial speakers, and creativity outputs has been found.”

The scientists pointed out that although the sessions with the charismatic voice were generally more successful, not all the teams responded identically to the different voices: previous experiences in their different classes may have affected their response. Larger studies will be needed to understand how these external factors affected team performance.

“The robot was present only in videos, but one could suspect that more exposure or repeated exposure to the charismatic speaking style would have even stronger effects,” said Fischer. “Moreover, we have only varied a few features between the two robot conditions. We don’t know how the effect size would change if other or more features were varied. Finally, since charismatic speaking patterns differ between cultures, we would expect that the same stimuli will not yield the same results in all languages and cultures.”