Paper power: Origami technology makes its way into quadcopters

Trotting robots reveal emergence of animal gait transitions

Talking AUTOMATE 2024 with TM Robotics

Talking AUTOMATE 2024 with TECH RIM Standards

Researchers use ChatGPT for choreographies with flying robots

An affordable miniature car-like robot to test control and estimation algorithms

Advancing AI’s Cognitive Horizons: 8 Significant Research Papers on LLM Reasoning

Simple next-token generation, the foundational technique of large language models (LLMs), is usually insufficient for tackling complex reasoning tasks. To address this limitation, various research teams have explored innovative methodologies aimed at enhancing the reasoning capabilities of LLMs. These enhancements are crucial for enabling these models to handle more intricate problems, thus significantly broadening their applicability and effectiveness.

In this article, we summarize some of the most prominent approaches developed to improve the reasoning of LLMs, thereby enhancing their ability to solve complex tasks. But before diving into these specific approaches, we suggest reviewing a few survey papers on the topic to gain a broader perspective and foundational understanding of the current research landscape.

Overview Papers on Reasoning in LLMs

Several research papers provide a comprehensive survey of cutting-edge research on reasoning with large language models. Here are a few that might be worth your attention:

- Reasoning with Language Model Prompting: A Survey. This paper, first published in December 2022, may not cover the most recent developments in LLM reasoning but still offers a comprehensive survey of available approaches. It identifies and details various methods, organizing them into categories such as strategic enhancements and knowledge enhancements. The authors describe multiple reasoning strategies, including chain-of-thought prompting and more sophisticated techniques that combine human-like reasoning processes with external computation engines to enhance performance.

- Towards Reasoning in Large Language Models: A Survey. This paper, also from December 2022, provides a comprehensive survey of reasoning in LLMs, discussing the current understanding, challenges, and methodologies for eliciting reasoning from LLMs, as well as evaluating their reasoning capabilities. The authors present a detailed analysis of various approaches to enhance reasoning, the development of benchmarks to measure reasoning abilities, and a discussion on the implications of these findings. They also explore the potential future directions in the field, aiming to bridge the gap between LLM capabilities and human-like reasoning.

- Large Language Models Cannot Self-Correct Reasoning Yet. In this more recent paper from October 2023, researchers from Google DeepMind critically examine the capability of LLMs to perform intrinsic self-correction, a process where an LLM corrects its initial responses without external feedback. They find that LLMs generally struggle to self-correct their reasoning, often performing worse after attempting to self-correct. This paper, soon to be presented at ICLR 2024, provides a detailed analysis of self-correction methods, demonstrating through various tests that improvements seen in previous studies typically rely on external feedback mechanisms, such as oracle labels, which are not always available or practical in real-world applications. The findings prompt a reevaluation of the practical applications of self-correction in LLMs and suggest directions for future research to address these challenges.

Now, let’s explore some specific strategies designed to enhance the reasoning capabilities of large language models.

Frameworks for Improving Reasoning in LLMs

1. Tree of Thoughts: Deliberate Problem Solving with Large Language Models

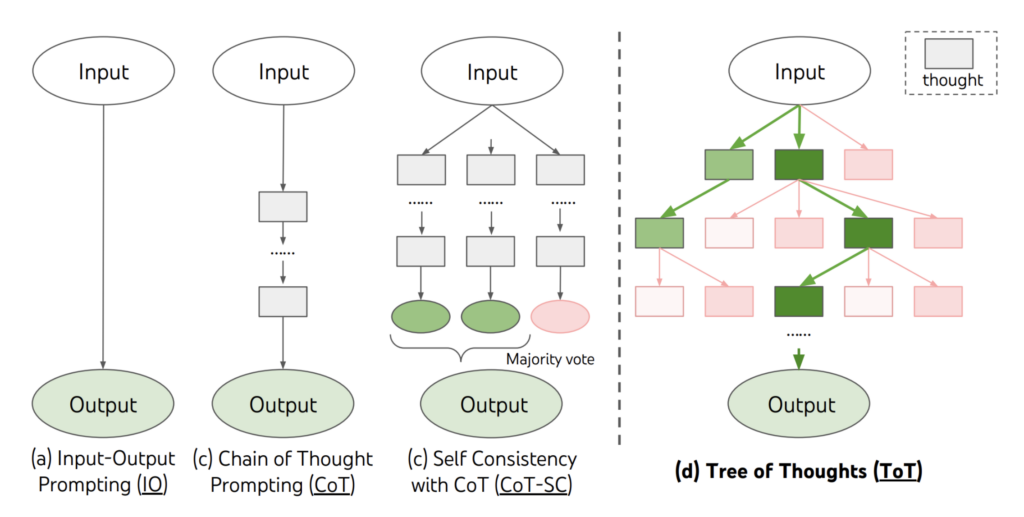

Researchers from Princeton University and Google DeepMind proposed a framework for language model inference called Tree of Thoughts (ToT). It extends the well-known chain-of-thought method by letting the model explore coherent text units, referred to as “thoughts,” which function as intermediate steps in problem solving. The paper was presented at NeurIPS 2023.

Key Ideas

- Problem Solving with Language Models. Plain left-to-right autoregressive text generation is not sufficient to turn a language model into a general problem solver. Instead, the authors suggest a Tree of Thoughts framework in which each thought is a coherent language sequence that serves as an intermediate step toward solving the problem.

- Self-evaluation. Using a high-level semantic unit such as a thought allows models to evaluate and backtrack their decisions, fostering a more comprehensive decision-making process.

- Breadth-first search or depth-first search. Ultimately, they integrate the language model’s ability to generate and assess varied thoughts with search algorithms like breadth-first search (BFS) and depth-first search (DFS). This integration facilitates a structured exploration of the tree of thoughts, incorporating both forward planning and the option to backtrack as necessary.

- New evaluation tasks. The authors also propose three new problems, Game of 24, Creative Writing, and Crosswords, that require deductive, mathematical, commonsense, and lexical reasoning abilities.
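
The breadth-first variant described above can be sketched on the Game of 24 as follows. This is a minimal illustration, not the authors' implementation: `propose`, `evaluate`, `tot_bfs`, and `beam_width` are hypothetical names, and both the thought generator and the state evaluator are plain Python stand-ins for what would be LLM prompts in ToT. In particular, the evaluator here checks reachability exhaustively, which an LLM-based evaluator would only approximate.

```python
from fractions import Fraction
from itertools import combinations

TARGET = 24

def propose(nums):
    """Thought generator: every way to merge two numbers into one.

    Stand-in for an LLM "propose" prompt; yields (step_text, new_nums).
    """
    for i, j in combinations(range(len(nums)), 2):
        a, b = nums[i], nums[j]
        rest = tuple(n for k, n in enumerate(nums) if k not in (i, j))
        options = [(a + b, f"{a} + {b}"), (a * b, f"{a} * {b}"),
                   (a - b, f"{a} - {b}"), (b - a, f"{b} - {a}")]
        if b != 0:
            options.append((a / b, f"{a} / {b}"))
        if a != 0:
            options.append((b / a, f"{b} / {a}"))
        for value, text in options:
            yield f"{text} = {value}", rest + (value,)

def evaluate(nums):
    """State evaluator: 1.0 if TARGET is still reachable, else 0.0.

    Stand-in for an LLM "value" prompt; this version searches exhaustively.
    """
    if len(nums) == 1:
        return 1.0 if nums[0] == TARGET else 0.0
    return max(evaluate(nxt) for _, nxt in propose(nums))

def tot_bfs(numbers, beam_width=5):
    """Breadth-first search over thoughts with pruning and a bounded frontier."""
    frontier = [((), tuple(Fraction(n) for n in numbers))]  # (steps, nums)
    for _ in range(len(numbers) - 1):  # each thought removes one number
        children = []
        for steps, nums in frontier:
            for text, nxt in propose(nums):
                if evaluate(nxt) > 0:  # drop states judged impossible
                    children.append((steps + (text,), nxt))
        # All survivors score 1.0 here, so keeping the first few is enough;
        # with a graded evaluator you would sort by score before truncating.
        frontier = children[:beam_width]
        if not frontier:
            return None
    return list(frontier[0][0])

print(tot_bfs((4, 9, 10, 13)))
```

Pruning at every level is what gives the search its backtracking flavor: a partial solution that can no longer reach 24 is abandoned in favor of its siblings instead of being extended.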

Key Results

- ToT demonstrated substantial improvements over existing methods on tasks requiring non-trivial planning or search.

- For instance, in the newly introduced Game of 24 task, ToT achieved a 74% success rate, a significant increase from the 4% success rate of GPT-4 using a chain-of-thought prompting method.

Implementation

- Code repository with all prompts is available on GitHub.

2. Least-to-Most Prompting Enables Complex Reasoning in Large Language Models

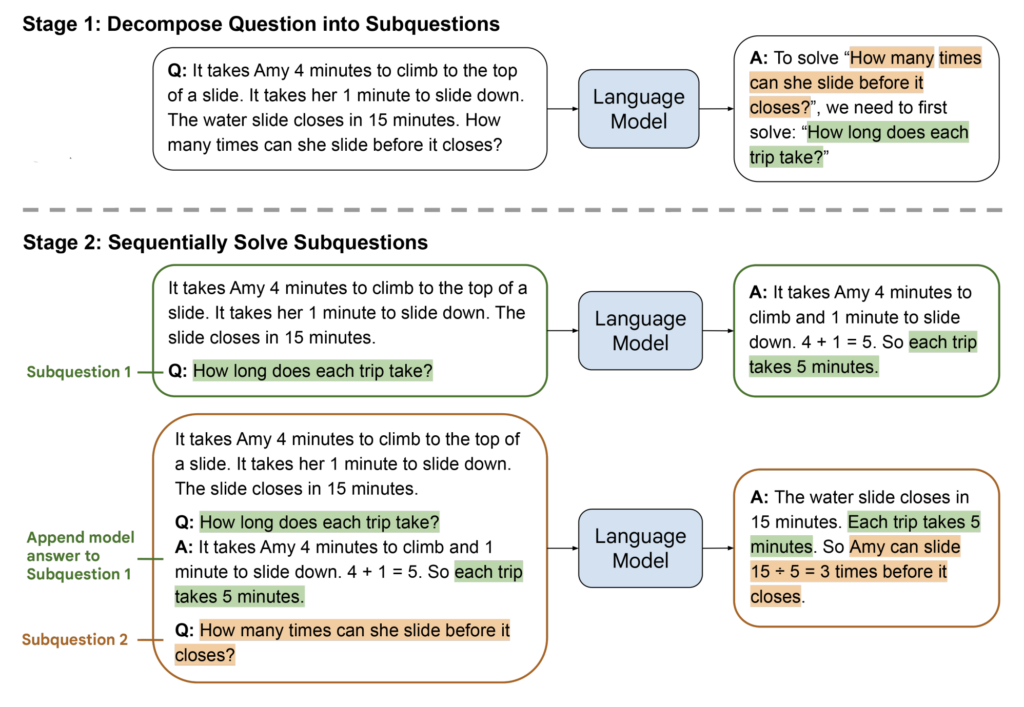

This paper from the Google Brain team presents a strategy for improving the reasoning capabilities of large language models through least-to-most prompting. The method decomposes a complex problem into simpler subproblems that are solved sequentially, using the solutions of earlier subproblems to help solve later ones. It aims to address the shortcomings of chain-of-thought prompting by enhancing the model’s ability to generalize from easy to more challenging problems. The paper was presented at ICLR 2023.

Key Ideas

- Tackling easy-to-hard generalization problems. Considering that chain-of-thought prompting often falls short in tasks that require generalizing to solve problems more difficult than the provided examples, researchers propose tackling these easy-to-hard generalization issues with least-to-most prompting.

- Least-to-most prompting strategy. This new approach involves decomposing a problem into simpler subproblems, then solving each sequentially with the help of the answers to previously solved subproblems. Both stages use few-shot prompting, eliminating the need for training or fine-tuning in either phase.

- Combining with other prompting techniques. If necessary, the least-to-most prompting strategy can be combined with other techniques, like chain-of-thought or self-consistency.
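
The two stages can be sketched as a short loop. This is a minimal sketch under stated assumptions: `least_to_most` and its parameters are hypothetical names, `llm` is any callable that maps a prompt string to a reply string, and `fake_llm` returns canned replies so the example runs without a model. The prompt wording is illustrative and is not taken from the paper's appendix.

```python
def least_to_most(question, llm, decompose_examples="", solve_examples=""):
    """Two-stage least-to-most prompting with a caller-supplied llm callable."""
    # Stage 1 (decomposition): ask for subquestions, easiest first, one per line.
    reply = llm(f"{decompose_examples}Q: {question}\nSubquestions:")
    subquestions = [s.strip() for s in reply.splitlines() if s.strip()]

    # Stage 2 (sequential solving): each prompt carries all earlier Q/A pairs.
    context = f"{solve_examples}{question}"
    answer = None
    for sub in subquestions:
        prompt = f"{context}\nQ: {sub}\nA:"
        answer = llm(prompt).strip()
        context = f"{prompt} {answer}"  # later subquestions see this answer
    return answer

def fake_llm(prompt):
    """Canned replies standing in for a real model, for demonstration only."""
    if prompt.endswith("Subquestions:"):
        return ("How many apples does Anna have?\n"
                "How many apples do Elsa and Anna have together?")
    if prompt.endswith("Q: How many apples does Anna have?\nA:"):
        return "7"
    if prompt.endswith("together?\nA:") and "A: 7" in prompt:
        return "12"  # only answerable once the earlier answer is in context
    return "unknown"

question = "Elsa has 5 apples. Anna has 2 more apples than Elsa."
print(least_to_most(question, fake_llm))
```

The canned model answers the second subquestion only when the first answer is already in the prompt, which mirrors the point of the method: harder subproblems are solved by building on easier ones.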

Key Results

- Least-to-most prompting markedly outperforms both standard and chain-of-thought prompting in areas like symbolic manipulation, compositional generalization, and mathematical reasoning.

- For instance, using the least-to-most prompting technique, the GPT-3 code-davinci-002 model achieved at least 99% accuracy on the compositional generalization benchmark SCAN with only 14 exemplars, significantly higher than the 16% accuracy achieved with chain-of-thought prompting.

Implementation

- The prompts for all tasks are available in the Appendix of the research paper.

3. Multimodal Chain-of-Thought Reasoning in Language Models

This research paper introduces Multimodal-CoT, a novel approach for enhancing chain-of-thought (CoT) reasoning by integrating both language and vision modalities into a two-stage reasoning framework that separates rationale generation and answer inference. The study was conducted by a team affiliated with Shanghai Jiao Tong University and Amazon Web Services.

Key Ideas

- Integration of multimodal information. The proposed Multimodal-CoT framework uniquely combines text and image modalities in the chain-of-thought reasoning process.

- Two-stage reasoning framework. The framework separates the process into rationale generation and answer inference stages so that answer inference can leverage better generated rationales that are based on multimodal information.

- Addressing hallucinations in smaller models. To mitigate the frequent issue of hallucinations in language models with fewer than 100B parameters, the authors suggest fusing vision features with encoded language representations before inputting them into the decoder.

Key Results

- The Multimodal-CoT model with under 1B parameters significantly outperformed the existing state-of-the-art on the ScienceQA benchmark, achieving accuracy 16 percentage points higher than GPT-3.5 and even surpassing human performance.

- In the error analysis, the researchers demonstrated that future studies could further enhance chain-of-thought reasoning by leveraging more effective vision features, incorporating commonsense knowledge, and implementing filtering mechanisms.

Implementation

- The code implementation is publicly available on GitHub.

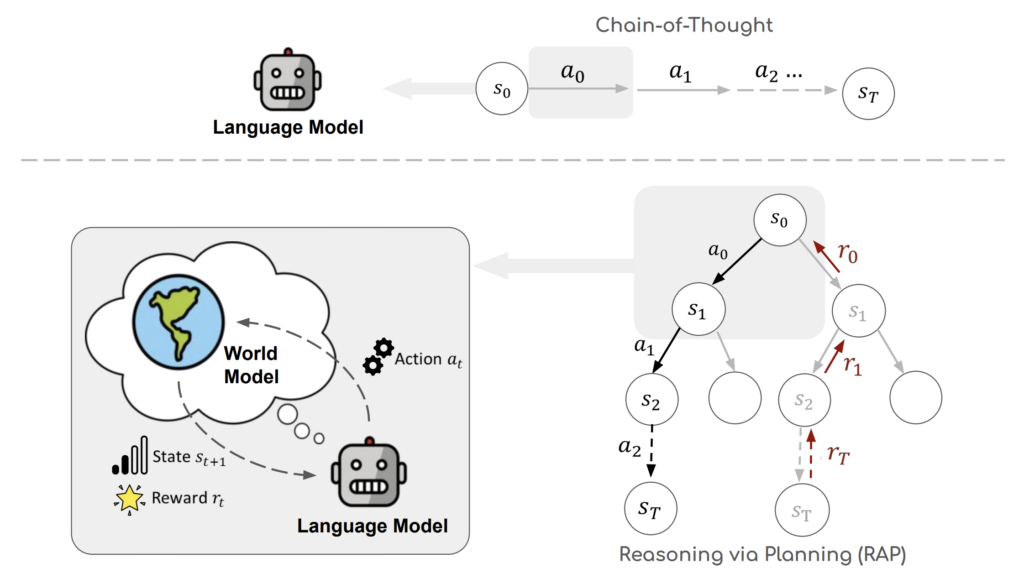

4. Reasoning with Language Model is Planning with World Model

In this paper, researchers from UC San Diego and the University of Florida contend that the inadequate reasoning abilities of LLMs originate from their lack of an internal world model to predict states and simulate long-term outcomes. To tackle this issue, they introduce a new framework called Reasoning via Planning (RAP), which redefines the LLM as both a world model and a reasoning agent. Presented at EMNLP 2023, the paper challenges the conventional application of LLMs by framing reasoning as a strategic planning task, similar to human cognitive processes.

Key Ideas

- Limitations of current reasoning with LLMs. The authors argue that LLMs fail at simple tasks, like creating action plans to move blocks to a target state, because they (1) lack an internal world model to simulate the state of the world, (2) don’t have a reward mechanism to assess and guide the reasoning towards the desired state, and as a result, (3) are incapable of balancing exploration vs. exploitation to efficiently explore the vast reasoning space.

- Reasoning via Planning (RAP). To address the above limitations, the research team suggests augmenting an LLM with a world model and enhancing its reasoning skills with principled planning through Monte Carlo Tree Search (MCTS). Interestingly, a world model is acquired by repurposing the LLM itself with appropriate prompts.

- Reasoning process. In the reasoning process introduced in the paper, the LLM strategically constructs a reasoning tree. It iteratively selects the most promising steps and uses its world model to anticipate future outcomes. Future rewards are then backpropagated to update the LLM’s current beliefs about these steps, guiding it to explore and refine better reasoning alternatives.
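
The select, expand, simulate, and backpropagate loop behind this process can be sketched with a generic Monte Carlo Tree Search. This is an illustrative sketch, not the RAP codebase: `plan_with_mcts`, `Node`, `uct`, and the `toy_*` functions are hypothetical names, the selection rule is the standard UCT formula, and the world model and reward are simple Python functions where RAP would prompt an LLM.

```python
import math
import random

class Node:
    def __init__(self, state, parent=None, action=None):
        self.state, self.parent, self.action = state, parent, action
        self.children = []
        self.visits = 0
        self.value = 0.0  # running mean of backed-up rewards

def uct(child, parent_visits, w=1.0):
    """Upper-confidence score: exploit high value, explore rarely tried steps."""
    if child.visits == 0:
        return float("inf")
    return child.value + w * math.sqrt(math.log(parent_visits) / child.visits)

def plan_with_mcts(start, actions, world_model, reward, is_terminal,
                   depth_limit, iterations=200, seed=0):
    rng = random.Random(seed)
    root = Node(start)
    for _ in range(iterations):
        node, depth = root, 0
        # 1. Selection: descend through the most promising known steps.
        while node.children:
            parent = node
            node = max(parent.children, key=lambda c: uct(c, parent.visits))
            depth += 1
        # 2. Expansion: ask the world model what each action leads to.
        if depth < depth_limit and not is_terminal(node.state):
            node.children = [Node(world_model(node.state, a), node, a)
                             for a in actions(node.state)]
            node = rng.choice(node.children)
            depth += 1
        # 3. Simulation: roll the world model forward with random actions.
        state = node.state
        while depth < depth_limit and not is_terminal(state):
            state = world_model(state, rng.choice(actions(state)))
            depth += 1
        outcome = reward(state)
        # 4. Backpropagation: update value estimates along the path.
        while node is not None:
            node.visits += 1
            node.value += (outcome - node.value) / node.visits
            node = node.parent
    # Read off a plan by following the most visited child at each level.
    plan, node = [], root
    while node.children:
        node = max(node.children, key=lambda c: c.visits)
        plan.append(node.action)
    return plan

# Toy task (an assumption for illustration): turn 1 into 4 in two steps.
def toy_actions(state):
    return ["inc", "double"]

def toy_world_model(state, action):
    return state + 1 if action == "inc" else state * 2

def toy_reward(state):
    return 1.0 if state == 4 else 0.0

def toy_is_terminal(state):
    return state == 4

print(plan_with_mcts(1, toy_actions, toy_world_model, toy_reward,
                     toy_is_terminal, depth_limit=2))
```

The exploration weight `w` controls the balance the authors highlight: a larger value spends more iterations on rarely visited steps, while a smaller value concentrates on steps that already look rewarding.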

Key Results

- RAP is shown to be a versatile framework, capable of handling a wide array of complex reasoning tasks, consistently outperforming traditional LLM reasoning methods.

- In the Blocksworld task, RAP achieved a notable 64% success rate in 2/4/6-step problems, dramatically outperforming the CoT method. Additionally, LLaMA-33B equipped with RAP showed a 33% relative improvement over GPT-4 using CoT.

- RAP demonstrated superior results in mathematical reasoning tasks like GSM8K and logical inference tasks such as PrOntoQA, significantly surpassing baselines including CoT, least-to-most prompting, and self-consistency methods.

Implementation

- The code implementation is publicly available on GitHub.

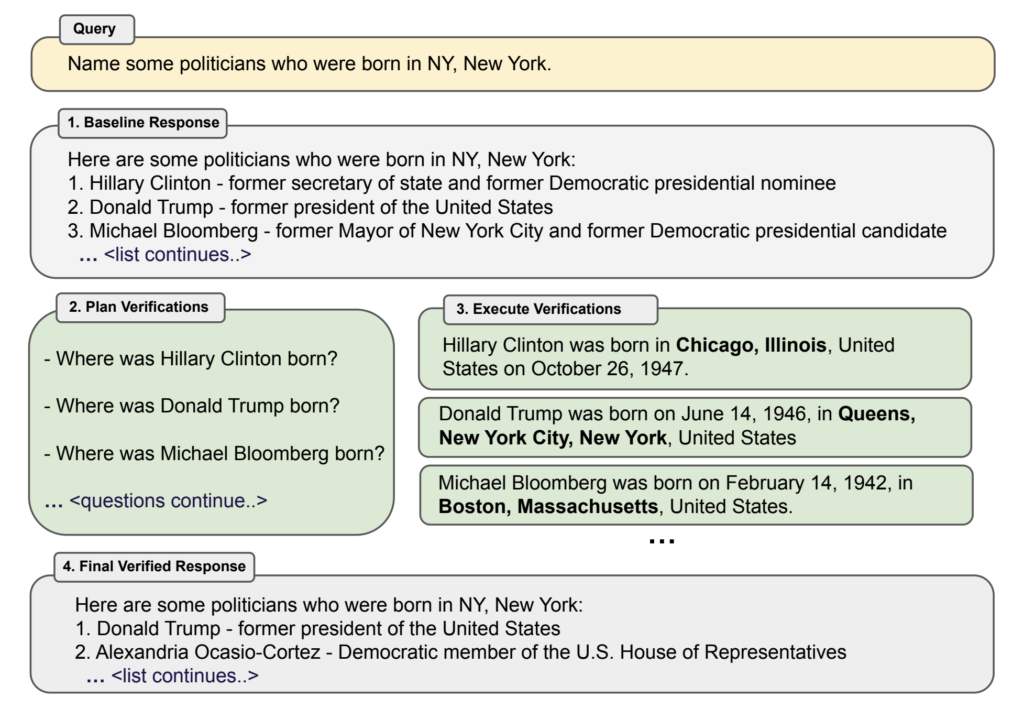

5. Chain-of-Verification Reduces Hallucination in Large Language Models

This research paper from the Meta AI team introduces the Chain-of-Verification (CoVe) method, aimed at reducing the occurrence of hallucinations – factually incorrect but plausible responses – by large language models. The paper presents a structured approach where the model generates an initial response, formulates verification questions, answers these independently, and integrates the verified information into a final response.

Key Ideas

- Chain-of-Verification (CoVe) method. CoVe first prompts the LLM to draft an initial response and then to generate verification questions that help to check the accuracy of this draft. The model answers these questions independently, avoiding biases from the initial response, and refines its final output based on these verifications.

- Factored variants. To address persistent hallucinations where models repeat inaccuracies from their own generated context, the authors propose enhancing the method with factored variants. These variants improve the system by segregating the steps in the verification chain. Specifically, they modify the CoVe process to answer verification questions independently of the original response. This separation prevents the conditioning on prior inaccuracies, thereby reducing repetition and enhancing overall performance.
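
The four CoVe steps, with factored execution of the verification questions, can be sketched as below. This is a minimal sketch assuming a generic `llm` callable that maps a prompt string to a reply string: `chain_of_verification`, `fake_llm`, and the prompt wording are hypothetical and are not the templates from the paper. The canned replies exist only so the example runs without a model.

```python
calls = []  # every prompt sent to the stand-in model, for inspection

def chain_of_verification(query, llm):
    # 1. Draft an initial response.
    draft = llm(f"Answer the question.\nQ: {query}\nA:")
    # 2. Plan verification questions that probe the facts in the draft.
    plan = llm("List verification questions, one per line, that check the "
               f"facts in this answer.\nQ: {query}\nA: {draft}\n"
               "Verification questions:")
    questions = [q.strip() for q in plan.splitlines() if q.strip()]
    # 3. Factored execution: each question gets a fresh prompt that does not
    #    contain the draft, so the model cannot simply repeat its own errors.
    checks = [(q, llm(f"Q: {q}\nA:")) for q in questions]
    # 4. Revise the draft in light of the independent answers.
    evidence = "\n".join(f"- {q} {a}" for q, a in checks)
    final = llm("Revise the draft so it agrees with the verified facts.\n"
                f"Q: {query}\nDraft: {draft}\nVerified facts:\n{evidence}\n"
                "Final answer:")
    return final, checks

def fake_llm(prompt):
    """Canned replies standing in for a real model, for demonstration only."""
    calls.append(prompt)
    if prompt.endswith("Verification questions:"):
        return "Where was Hillary Clinton born?\nWhere was Donald Trump born?"
    if prompt.endswith("Final answer:"):
        return "Donald Trump"
    if "Where was Hillary Clinton born?" in prompt:
        return "Chicago, Illinois"
    if "Where was Donald Trump born?" in prompt:
        return "New York City, New York"
    return "Hillary Clinton, Donald Trump"  # draft containing one error

final, checks = chain_of_verification(
    "Name some politicians who were born in New York City.", fake_llm)
print(final)
for question, answer in checks:
    print(question, "->", answer)
```

Keeping the draft out of the step 3 prompts is the design choice that distinguishes the factored variant from a joint one, where questions and answers share a single context with the original response.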

Key Results

- The experiments demonstrated that CoVe reduced hallucinations across a variety of tasks, including list-based questions from Wikidata, closed-book MultiSpanQA, and longform text generation.

- CoVe significantly enhances precision in list-based tasks, more than doubling the precision from the Llama 65B few-shot baseline in the Wikidata task, increasing from 0.17 to 0.36.

- In general QA challenges, such as those measured on MultiSpanQA, CoVe achieves a 23% improvement in F1 score, rising from 0.39 to 0.48.

- CoVe also boosts precision in longform text generation, with a 28% increase in FactScore from the few-shot baseline (from 55.9 to 71.4), accompanied by only a minor decrease in the average number of facts provided (from 16.6 to 12.3).

Implementation

- Prompt templates for the CoVe method are provided at the end of the research paper.

Advancing Reasoning in LLMs: Concluding Insights

The burgeoning field of enhancing the reasoning capabilities of LLMs is marked by a variety of innovative approaches and methodologies. The three overview papers provided a comprehensive exploration of the general principles and challenges associated with LLM reasoning. Further, the five specific papers we discussed illustrate that there can be a variety of strategies employed to push the boundaries of what LLMs can achieve. Each approach offers unique insights and methodologies that contribute to the evolving capabilities of LLMs, pointing towards a future where these models can perform sophisticated cognitive tasks, potentially transforming numerous industries and disciplines. As research continues to progress, it will be exciting to see how these models evolve and how they are integrated into practical applications, promising a new era of intelligent systems equipped with advanced reasoning abilities.

The post Advancing AI’s Cognitive Horizons: 8 Significant Research Papers on LLM Reasoning appeared first on TOPBOTS.

Automate 2024 Product Preview

A six-armed robot for precision pollination

The Rise of Discount AI

Microsoft has decided to offer AI-on-the-cheap for businesses willing to settle for a little less than cutting-edge.

Specifically, the tech titan is peddling three new AI engines, from a new family of AI offerings dubbed Phi-3, that are significantly less powerful than, say, GPT-4 Turbo.

Even so, they often still get the job done.

Observes lead writer Karen Weise: “The smallest Phi-3 model can fit on a smartphone, so it can be used even if it’s not connected to the Internet.

“And it can run on the kinds of chips that power regular computers, rather than more expensive processors made by Nvidia.”

Adds Eric Boyd, a vice president at Microsoft: “I want my doctor to get things right.

“Other situations — where I am summarizing online user reviews — if it’s a little bit off, it’s not the end of the world.”

In other news and analysis on AI writing:

*In-Depth Guide: Ooh La La: France’s Answer to ChatGPT: This piece offers an in-depth look into yet another AI engine looking to compete with ChatGPT — Mistral AI, based in France.

While somewhat less impressive than ChatGPT, the AI engine has still earned high marks for its “transparent, portable, customizable and cost-effective models that require fewer computational resources than other popular LLMs (AI engines),” according to writer Ellen Glover.

“With substantial backing from prominent investors like Microsoft and Andreessen Horowitz — and a reported valuation of $5 billion — Mistral is positioning itself to be a formidable competitor in the increasingly crowded generative AI market,” Glover adds.

*Less Popular Than Your Average Cat Video: Only 23% of U.S. Adults Have Tried ChatGPT: Nearly a year-and-a-half since ChatGPT first stunned the world, only 23% of U.S. adults have actually used it, according to a new study from Pew.

For many who track the tech closely — and see the emergence of ChatGPT and similar AI as a pivotal moment in the history of humanity — the meager adoption rate is tough to understand.

Not surprisingly, young adults under 30 are most enthusiastic about ChatGPT — 43% have tried ChatGPT.

Oldest adults, 65-and-up, are least interested in the tech — only 6% have tried the AI, according to Pew.

*Microsoft’s Sweet Deal: Unlimited Access to GPT-4 Turbo: Subscribers to Microsoft 365 — its office productivity suite — are enjoying unlimited access to AI engine GPT-4 Turbo.

It’s currently considered by many as the most advanced AI engine on the planet.

That pricing — at $6.66/month for a Microsoft 365 subscription — is a significant perk from Microsoft.

In comparison, ChatGPT Plus customers pay $20/month for limited access to GPT-4 Turbo.

Essentially, ChatGPT customers are only allowed to query the AI 50 times at-a-clip. Then they’re forced to wait three hours before they can query the AI engine again.

*Top AI Grammar Tools: Because Commas Are Hard!: Orbis Research has released its list of the top grammar and spell checkers on the market.

Here’s the rundown, with links to each tool’s pricing page:

*Hubspot Morphs With New AI Makeover: Popular marketing tool Hubspot has gone all-in on AI.

The tool now offers AI-powered content creation and auto-repurposing of content to multiple social media and similar networks.

Users can also take advantage of an AI-powered feature that ensures everything you create with Hubspot is rendered in your brand voice.

Plus, Hubspot AI will also transform your text content into spoken-word podcasts.

*Zoom’s AI Makeover: Now Summarizing The Meetings That You Slept Through: Add Zoom to the list of major software companies revamping with AI.

With the remade Zoom, you’ll find:

~AI summaries of meetings

~AI message thread summaries

~AI sentence completion

*Newsweek Goes Full AI: Reporters That Boot-up in Seconds: Brushing aside fears of job loss, Newsweek has fully embraced AI and is looking to integrate the tech as deeply as possible into the magazine’s operations.

Says Jennifer Cunningham, executive editor, Newsweek: “I think that the difference between newsrooms that embrace AI and newsrooms that shun AI is really going to prove itself over the next several months and years.

“We have really embraced AI as an opportunity — and not some sort of boogeyman that’s lurking in the newsroom.”

We’ll see.

*Forget One-Size-Fits-All: Amazon Hosts Virtually Any AI Engine: Businesses looking to work with their favorite AI engine may want to check out Amazon Web Services’ Bedrock, which is happy to host virtually any AI engine on the market.

Amazon’s approach is markedly different from that of proprietary companies like ChatGPT-maker OpenAI, which only offers its own AI engine to customers.

Essentially: Giving companies the ability to add do-it-yourself models (AI engines) to Bedrock makes it easier for enterprise developers and data scientists to work together, according to Swami Sivasubramanian, vice president of AI and data, Amazon Web Services.

*AI Big Picture: PCs Souped-Up With AI Chips Arrive: Businesses looking to save money by doing AI on a local PC — rather than in the cloud — now have a number of options.

Observes writer Isabelle Bousquette: “The biggest innovation in years has come for personal computers, as manufacturers integrate chips that enable them to run large-scale AI models (AI engines) directly on the device.

“But some CIOs remain unconvinced on whether the new devices are worth the cost.

“Some say they will make the investment first for just technical roles — like data scientists.

“Others say they will make the investment for even regular business users—but only when it’s already time for a refresh in the regular device replacement cycle.”

–Joe Dysart is editor of RobotWritersAI.com and a tech journalist with 20+ years of experience. His work has appeared in 150+ publications, including The New York Times and the Financial Times of London.

The post The Rise of Discount AI appeared first on Robot Writers AI.