Improving AGV efficiencies with updated battery technologies

Robot Talk Episode 128 – Making microrobots move, with Ali K. Hoshiar

Claire chatted to Ali K. Hoshiar from the University of Essex about how microrobots move and work together.

Ali Hoshiar is a Senior Lecturer in Robotics at the University of Essex and Director of the Robotics for Under Millimetre Innovation (RUMI) Lab. He leads the EPSRC-funded ‘In-Target’ project and was awarded the university’s Best Interdisciplinary Research Award. His research focuses on microrobotics, soft robotics, and data-driven mechatronic systems for medical and agri-tech applications. He also holds an MBA, adding strategic and commercial insight to his technical work.

Robotic programming brings increased productivity and faster return on investment

Why GPS fails in cities. And how it was brilliantly fixed

Scientists suggest the brain may work best with 7 senses, not just 5

How Collaborative Robots Improve Flexibility, Safety, and ROI

Introducing the Gemini 2.5 Computer Use model

SoftBank buys $5.4 bn robotics firm to advance ‘physical AI’

Interview with Zahra Ghorrati: developing frameworks for human activity recognition using wearable sensors

In this interview series, we’re meeting some of the AAAI/SIGAI Doctoral Consortium participants to find out more about their research. Zahra Ghorrati is developing frameworks for human activity recognition using wearable sensors. We caught up with Zahra to find out more about this research, the aspects she has found most interesting, and her advice for prospective PhD students.

Tell us a bit about your PhD – where are you studying, and what is the topic of your research?

I am pursuing my PhD at Purdue University, where my dissertation focuses on developing scalable and adaptive deep learning frameworks for human activity recognition (HAR) using wearable sensors. I was drawn to this topic because wearables have the potential to transform fields like healthcare, elderly care, and long-term activity tracking. Unlike video-based recognition, which can raise privacy concerns and requires fixed camera setups, wearables are portable, non-intrusive, and capable of continuous monitoring, making them ideal for capturing activity data in natural, real-world settings.

The central challenge my dissertation addresses is that wearable data is often noisy, inconsistent, and uncertain, depending on sensor placement, movement artifacts, and device limitations. My goal is to design deep learning models that are not only computationally efficient and interpretable but also robust to the variability of real-world data. In doing so, I aim to ensure that wearable HAR systems are both practical and trustworthy for deployment outside controlled lab environments.

This research has been supported by the Polytechnic Summer Research Grant at Purdue. Beyond my dissertation work, I contribute to the research community as a reviewer for conferences such as CoDIT, CTDIAC, and IRC, and I have been invited to review for AAAI 2026. I was also involved in community building, serving as Local Organizer and Safety Chair for the 24th International Conference on Autonomous Agents and Multiagent Systems (AAMAS 2025), and continuing as Safety Chair for AAMAS 2026.

Could you give us an overview of the research you’ve carried out so far during your PhD?

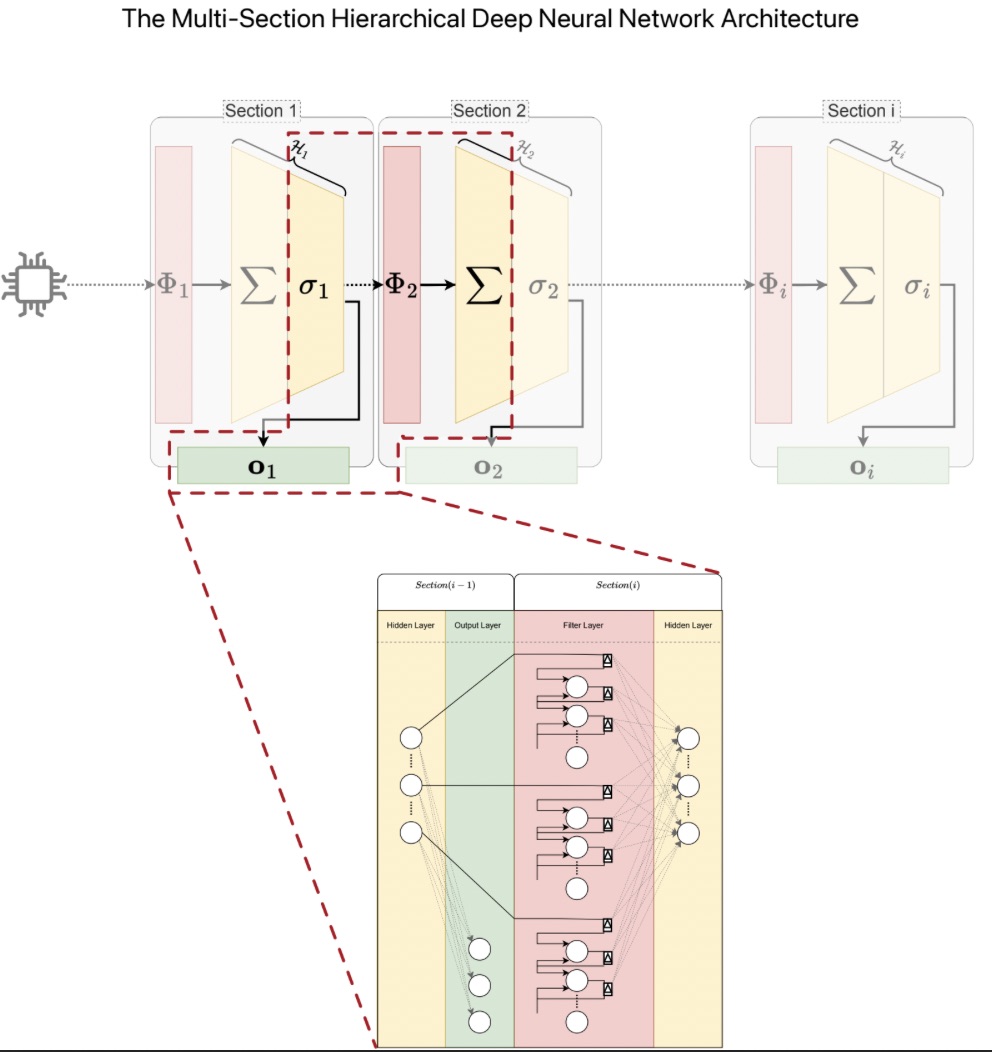

So far, my research has focused on developing a hierarchical fuzzy deep neural network that can adapt to diverse human activity recognition datasets. In my initial work, I explored a hierarchical recognition approach, where simpler activities are detected at earlier levels of the model and more complex activities are recognized at higher levels. To enhance both robustness and interpretability, I integrated fuzzy logic principles into deep learning, allowing the model to better handle uncertainty in real-world sensor data.

A key strength of this model is its simplicity and low computational cost, which makes it particularly well suited for real-time activity recognition on wearable devices. I have rigorously evaluated the framework on multiple benchmark datasets of multivariate time series and systematically compared its performance against state-of-the-art methods, where it has demonstrated both competitive accuracy and improved interpretability.

Is there an aspect of your research that has been particularly interesting?

Yes, what excites me most is discovering how different approaches can make human activity recognition both smarter and more practical. For instance, integrating fuzzy logic has been fascinating, because it allows the model to capture the natural uncertainty and variability of human movement. Instead of forcing rigid classifications, the system can reason in terms of degrees of confidence, making it more interpretable and closer to how humans actually think.

I also find the hierarchical design of my model particularly interesting. Recognizing simple activities first, and then building toward more complex behaviors, mirrors the way humans often understand actions in layers. This structure not only makes the model efficient but also provides insights into how different activities relate to one another.

Beyond methodology, what motivates me is the real-world potential. The fact that these models can run efficiently on wearables means they could eventually support personalized healthcare, elderly care, and long-term activity monitoring in people’s everyday lives. And since the techniques I’m developing apply broadly to time series data, their impact could extend well beyond HAR, into areas like medical diagnostics, IoT monitoring, or even audio recognition. That sense of both depth and versatility is what makes the research especially rewarding for me.
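As an illustration of the "degrees of confidence" idea Zahra describes, here is a minimal Python sketch of fuzzy membership. It is not taken from her model: the `triangular` function, the `ACTIVITY_SETS` labels, and the break points are all hypothetical, chosen only to show how one sensor reading can belong partly to more than one activity instead of being forced into a single class.

```python
def triangular(x, left, peak, right):
    """Triangular membership: 0 at or beyond the edges, rising to 1 at the peak."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


# Hypothetical fuzzy sets over a movement-intensity feature (arbitrary units).
# Labels and break points are illustrative assumptions, not values from the research.
ACTIVITY_SETS = {
    "resting": (-0.5, 0.0, 1.0),
    "walking": (0.5, 1.5, 2.5),
    "running": (2.0, 3.5, 5.0),
}


def memberships(intensity):
    """Return a degree of membership in [0, 1] for each activity label."""
    return {
        label: triangular(intensity, *edges)
        for label, edges in ACTIVITY_SETS.items()
    }


if __name__ == "__main__":
    # A reading between "resting" and "walking" belongs partly to both.
    for label, degree in memberships(0.8).items():
        print(f"{label}: {degree:.2f}")
```

With these made-up sets, a reading of 0.8 is roughly 0.2 "resting" and 0.3 "walking", with no membership in "running". A crisp classifier would have to pick one label and discard that ambiguity.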

What are your plans for building on your research so far during the PhD – what aspects will you be investigating next?

Moving forward, I plan to further enhance the scalability and adaptability of my framework so that it can effectively handle large-scale datasets and support real-time applications. A major focus will be on improving both the computational efficiency and interpretability of the model, ensuring it is not only powerful but also practical for deployment in real-world scenarios.

While my current research has focused on human activity recognition, I am excited to broaden the scope to the wider domain of time series classification. I see great potential in applying my framework to areas such as sound classification, physiological signal analysis, and other time-dependent domains. This will allow me to demonstrate the generalizability and robustness of my approach across diverse applications where time-based data is critical.

In the longer term, my goal is to develop a unified, scalable model for time series analysis that balances adaptability, interpretability, and efficiency. I hope such a framework can serve as a foundation for advancing not only HAR but also a broad range of healthcare, environmental, and AI-driven applications that require real-time, data-driven decision-making.

What made you want to study AI, and in particular the area of wearables?

My interest in wearables began during my time in Paris, where I was first introduced to the potential of sensor-based monitoring in healthcare. I was immediately drawn to how discreet and non-invasive wearables are compared to video-based methods, especially for applications like elderly care and patient monitoring.

More broadly, I have always been fascinated by AI’s ability to interpret complex data and uncover meaningful patterns that can enhance human well-being. Wearables offered the perfect intersection of my interests, combining cutting-edge AI techniques with practical, real-world impact, which naturally led me to focus my research on this area.

What advice would you give to someone thinking of doing a PhD in the field?

A PhD in AI demands both technical expertise and resilience. My advice would be:

- Stay curious and adaptable, because research directions evolve quickly, and the ability to pivot or explore new ideas is invaluable.

- Investigate combining disciplines. AI benefits greatly from insights in fields like psychology, healthcare, and human-computer interaction.

- Most importantly, choose a problem you are truly passionate about. That passion will sustain you through the inevitable challenges and setbacks of the PhD journey.

Approaching your research with curiosity, openness, and genuine interest can make the PhD not just a challenge, but a deeply rewarding experience.

Could you tell us an interesting (non-AI related) fact about you?

Outside of research, I’m passionate about leadership and community building. As president of the Purdue Tango Club, I grew the group from just 2 students to over 40 active members, organized weekly classes, and hosted large events with internationally recognized instructors. More importantly, I focused on creating a welcoming community where students feel connected and supported. For me, tango is more than dance: it’s a way to bring people together, bridge cultures, and balance the intensity of research with creativity and joy.

I also apply these skills in academic leadership. For example, I serve as Local Organizer and Safety Chair for the AAMAS 2025 and 2026 conferences, which has given me hands-on experience managing events, coordinating teams, and creating inclusive spaces for researchers worldwide.

About Zahra

Zahra Ghorrati is a PhD candidate and teaching assistant at Purdue University, specializing in artificial intelligence and time series classification with applications in human activity recognition. She earned her undergraduate degree in Computer Software Engineering and her master’s degree in Artificial Intelligence. Her research focuses on developing scalable and interpretable fuzzy deep learning models for wearable sensor data. She has presented her work at leading international conferences and journals, including AAMAS, PAAMS, FUZZ-IEEE, IEEE Access, System and Applied Soft Computing. She has served as a reviewer for CoDIT, CTDIAC, and IRC, and has been invited to review for AAAI 2026. Zahra also contributes to community building as Local Organizer and Safety Chair for AAMAS 2025 and 2026.

Friction-based landing gear enables drones to safely land on fast-moving vehicles

The agentic AI shift: From static products to dynamic systems

Agents are here. And they are challenging many of the assumptions software teams have relied on for decades, including the very idea of what a “product” is.

There is a scene in Interstellar where the characters are on a remote, water-covered planet. In the distance, what looks like a mountain range turns out to be enormous waves steadily building and towering over them. With AI, it has felt much the same. A massive wave has been building on the horizon for years.

Generative AI and vibe coding have already shifted how design and development happen. Now, another seismic shift is underway: agentic AI.

The question isn’t if this wave will hit — it already has. The question is how it will reshape the landscape enterprises thought they knew. From the vantage point of the production design team at DataRobot, these changes are reshaping not just how design is done, but also long-held assumptions about what products are and how they are built.

What makes agentic AI different from generative AI

Unlike predictive or generative AI, agents are autonomous. They make decisions, take action, and adapt to new information without constant human prompts. That autonomy is powerful, but it also clashes with the deterministic infrastructure most enterprises rely on.

Deterministic systems expect the same input to deliver the same output every time. Agents are probabilistic: the same input might trigger different paths, decisions, or outcomes. That mismatch creates new challenges around governance, monitoring, and trust.
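A minimal sketch of this mismatch, assuming a hypothetical ticket-routing task: `deterministic_route` is a fixed rule, while `agent_route` is only a stand-in that samples from weighted actions to mimic probabilistic behavior. Neither function reflects a real agent framework or any DataRobot API, and the action names and weights are invented for illustration.

```python
import random


def deterministic_route(ticket):
    """Rule-based routing: the same ticket always lands in the same queue."""
    return "billing" if "invoice" in ticket.lower() else "general"


def agent_route(ticket, rng):
    """Stand-in for an agent: samples one of several plausible actions."""
    actions = ["billing", "general", "escalate"]
    # Hypothetical weights; a real agent's behavior is not a fixed table.
    if "invoice" in ticket.lower():
        weights = [0.6, 0.3, 0.1]
    else:
        weights = [0.1, 0.7, 0.2]
    return rng.choices(actions, weights=weights, k=1)[0]


ticket = "Question about my invoice"

# One hundred runs of the rule produce exactly one distinct outcome.
fixed_outcomes = {deterministic_route(ticket) for _ in range(100)}

# One hundred runs of the sampled stand-in produce several distinct outcomes.
rng = random.Random(0)  # seeded only so the demo is repeatable
sampled_outcomes = {agent_route(ticket, rng) for _ in range(100)}

print("rule-based:", fixed_outcomes)
print("agent-like:", sampled_outcomes)
```

Monitoring built for the first function can assert on a single expected output. Monitoring for the second has to reason about a set of acceptable outcomes, which is one reason governance and trust become harder.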

These aren’t just theoretical concerns; they’re already playing out in enterprise environments.

To help enterprises run agentic systems securely and at scale, DataRobot co-engineered the Agent Workforce Platform with NVIDIA, building on their AI Factory design. In parallel, we co-developed business agents embedded directly into SAP environments.

Together, these efforts enable organizations to operationalize agents securely, at scale, and within the systems they already rely on.

Moving from pilots to production

Enterprises continue to struggle with the gap between experimentation and impact. MIT research recently found that 95% of generative AI pilots fail to deliver measurable results — often stalling when teams try to scale beyond proofs of concept.

Moving from experimentation to production involves significant technical complexity. Rather than expecting customers to build everything from the ground up, DataRobot shifted its approach.

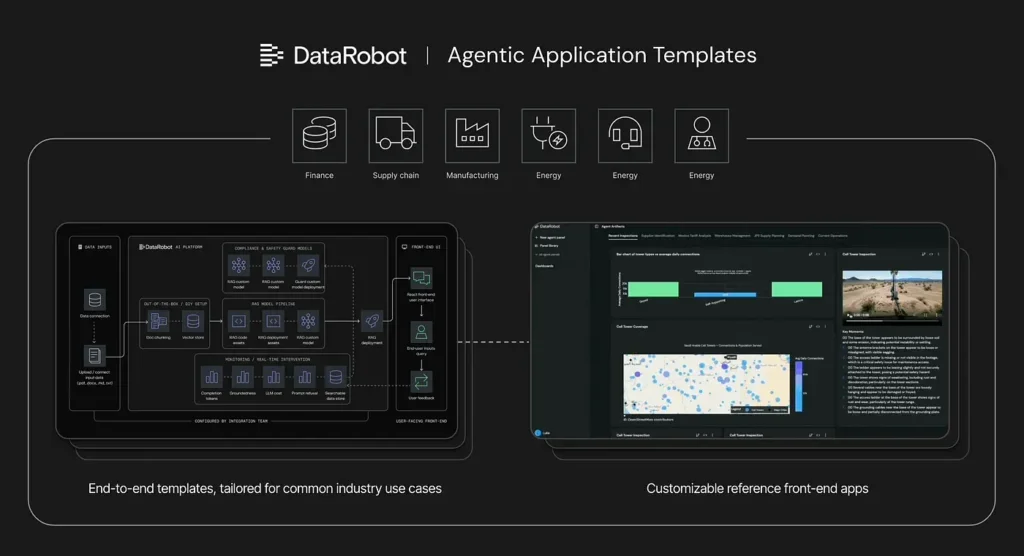

To use a food analogy: instead of handing customers a pantry of raw ingredients like components and frameworks, the company now delivers meal kits: agent and application templates with prepped components and proven recipes that work out of the box.

These templates codify best practices across common enterprise use cases. Practitioners can clone them, then swap or extend components using the platform or their preferred tools via API.

The impact: production-ready dashboards and applications in days, not months.

Changing how practitioners use the platform

This approach is also reshaping how AI practitioners interact with the platform. One of the biggest hurdles is creating front-end interfaces that consume the agents and models: apps for forecasting demand, generating content, retrieving knowledge, or exploring data.

Larger enterprises with dedicated development teams can handle this. But smaller organizations often rely on IT teams or AI experts, and app development is not their core skill.

To bridge that gap, DataRobot provides customizable reference apps as starting points. These work well when the use case is a close match, but they can be difficult to adapt for more complex or unique requirements.

Practitioners sometimes turn to open-source frameworks like Streamlit, but those often fall short of enterprise requirements for scale, security, and user experience.

To address this, DataRobot is exploring agent-driven approaches, such as supply chain dashboards that use agents to generate dynamic applications. These dashboards include rich visualizations and advanced interface components tailored to specific customer needs, powered by the Agent Workforce Platform on the back end.

The result is not just faster builds, but interfaces that practitioners without deep app-dev skills can create – while still meeting enterprise standards for scale, security, and user experience.

Agent-driven dashboards bring enterprise-grade design within reach for every team

Balancing control and automation

Agentic AI raises a paradox familiar from the AutoML era. When automation handles the “fun” parts of the work, practitioners can feel sidelined. When it tackles the tedious parts, it unlocks massive value.

DataRobot has seen this tension before. In the AutoML era, automating algorithm selection and feature engineering helped democratize access, but it also left experienced practitioners feeling that control had been taken away.

The lesson: automation succeeds when it accelerates expertise by removing tedious tasks, while preserving practitioner control over business logic and workflow design.

This experience shaped how we approach agentic AI: automation should accelerate expertise, not replace it.

Control in practice

This shift towards autonomous systems raises a fundamental question: how much control should be handed to agents, and how much should users retain? At the product level, this plays out in two layers:

- The infrastructure practitioners use to create and govern workflows

- The front-end applications people use to consume them.

Increasingly, customers are building both layers simultaneously, configuring the platform scaffolding while generative agents assemble the React-based applications on top.

Different user expectations

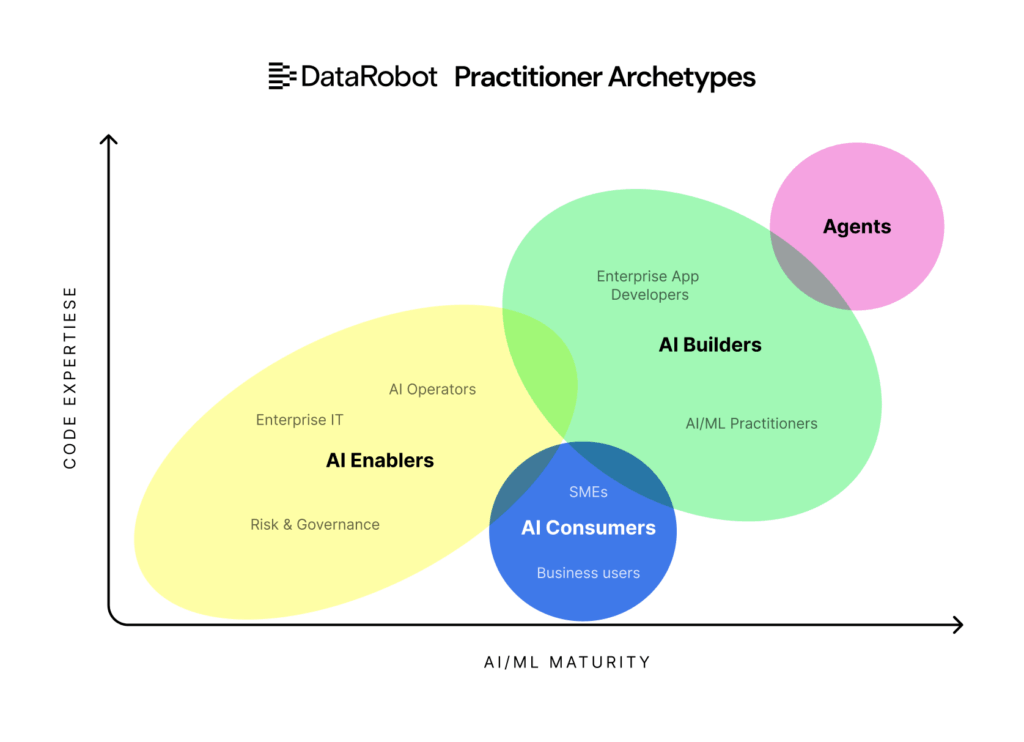

This tension plays out differently for each group:

- App developers are comfortable with abstraction layers, but still expect to debug and extend when needed.

- Data scientists want transparency and intervention.

- Enterprise IT teams want security, scalability, and systems that integrate with existing infrastructure.

- Business users just want results.

Now a new user type has emerged: the agents themselves.

They act as collaborators in APIs and workflows, forcing a rethink of feedback, error handling, and communication. Designing for all five user types (app developers, data scientists, enterprise IT teams, business users, and now agents) means governance and UX standards must serve both humans and machines.

Reality and risks

These are not prototypes; they are production applications already serving enterprise customers. Practitioners who may not be expert app developers can now create customer-facing software that handles complex workflows, visualizations, and business logic.

Agents manage React components, layout, and responsive design, while practitioners focus on domain logic and user workflows.

The same trend is showing up across organizations. Field teams and other non-designers are building demos and prototypes with tools like v0, while designers are starting to contribute production code. This democratization expands who can build, but it also raises new challenges.

Now that anyone can ship production software, enterprises need new mechanisms to safeguard quality, scalability, user experience, brand, and accessibility. Traditional checkpoint-based reviews won’t keep up; quality systems themselves must scale to match the new pace of development.

Designing systems, not just products

Agentic AI doesn’t just change how products are built; it changes what a “product” is. Instead of static tools designed for broad use cases, enterprises can now create adaptive systems that generate specific solutions for specific contexts on demand.

This shifts the role of product and design teams. Instead of delivering single products, they architect the systems, constraints, and design standards that agents use to generate experiences.

To maintain quality at scale, enterprises must prevent design debt from compounding as more teams and agents generate applications.

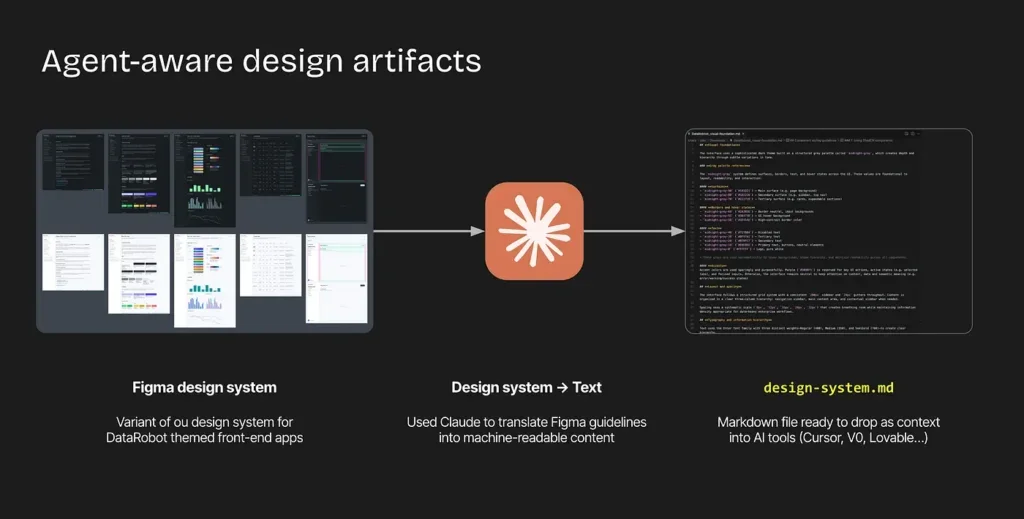

At DataRobot, the design system has been translated into machine-readable artifacts, including Figma guidelines, component specifications, and interaction principles expressed in markdown.

By encoding design standards upstream, agents can generate interfaces that remain consistent, accessible, and on-brand with fewer manual reviews that slow innovation.
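To make the idea of encoding standards upstream concrete, here is a minimal sketch of an automated check that a generated component stays within a design system. Everything in it is an assumption for illustration: the `DESIGN_TOKENS` dictionary, the spec fields, and `check_component` are hypothetical and do not represent DataRobot's actual artifacts, which the post describes as Figma guidelines, component specifications, and markdown.

```python
# Hypothetical machine-readable design standards (illustrative values only).
DESIGN_TOKENS = {
    "components": {"Button", "Card", "Chart"},
    "colors": {"primary", "surface", "danger"},
    "spacing": {4, 8, 16, 24},
}


def check_component(spec):
    """Return a list of human-readable violations for one generated component."""
    problems = []
    if spec.get("type") not in DESIGN_TOKENS["components"]:
        problems.append(f"unknown component type: {spec.get('type')!r}")
    if spec.get("color") not in DESIGN_TOKENS["colors"]:
        problems.append(f"off-brand color: {spec.get('color')!r}")
    if spec.get("padding") not in DESIGN_TOKENS["spacing"]:
        problems.append(f"padding not on the spacing scale: {spec.get('padding')!r}")
    # Simple accessibility rule: every button needs an accessible label.
    if spec.get("type") == "Button" and not spec.get("aria_label"):
        problems.append("button is missing an accessible label")
    return problems


# Two hypothetical agent-generated specs: one compliant, one not.
compliant = {"type": "Button", "color": "primary", "padding": 8, "aria_label": "Save"}
non_compliant = {"type": "Button", "color": "hotpink", "padding": 5}

print(check_component(compliant))
for problem in check_component(non_compliant):
    print("violation:", problem)
```

A check like this can run on every generated interface, so a manual review is needed only when the list of violations is not empty.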

Designing for agents as users

Another shift: agents themselves are now users. They interact with platforms, APIs, and workflows, sometimes more directly than humans. This changes how feedback, error handling, and collaboration are designed. Future-ready platforms will not only optimize for human-computer interaction, but also for human–agent collaboration.

Lessons for design leaders

As boundaries blur, one truth remains: the hard problems are still hard. Agentic AI does not erase these challenges — it makes them more urgent. And it raises the stakes for design quality. When anyone can spin up an app, user experience, quality, governance, and brand alignment become the real differentiators.

The enduring hard problems

- Understand context: What unmet needs are really being solved?

- Design for constraints: Will it work with existing architectures?

- Tie tech to value: Does this address problems that matter to the business?

Principles for navigating the shift

- Build systems, not just products: Focus on the foundations, constraints, and contexts that allow good experiences to emerge.

- Exercise judgment: Use AI for speed and execution, but rely on human expertise and craft to decide what’s right.

Riding the wave

As in Interstellar, what once looked like distant mountains turned out to be massive waves. Agentic AI is no longer on the horizon; it is here. The enterprises that learn to harness it will not just ride the wave. They will shape what comes next.

Learn more about the Agent Workforce Platform and how DataRobot helps enterprises move from AI pilots to production-ready agentic systems.

The post The agentic AI shift: From static products to dynamic systems appeared first on DataRobot.