DOLPHIN AI uncovers hundreds of invisible cancer markers

Princeton’s AI reveals what fusion sensors can’t see

Conveyor Application Challenges to Consider: Scalability, Expansion, Automation, and Integration

Rethinking how robots move: Light and AI drive precise motion in soft robotic arm

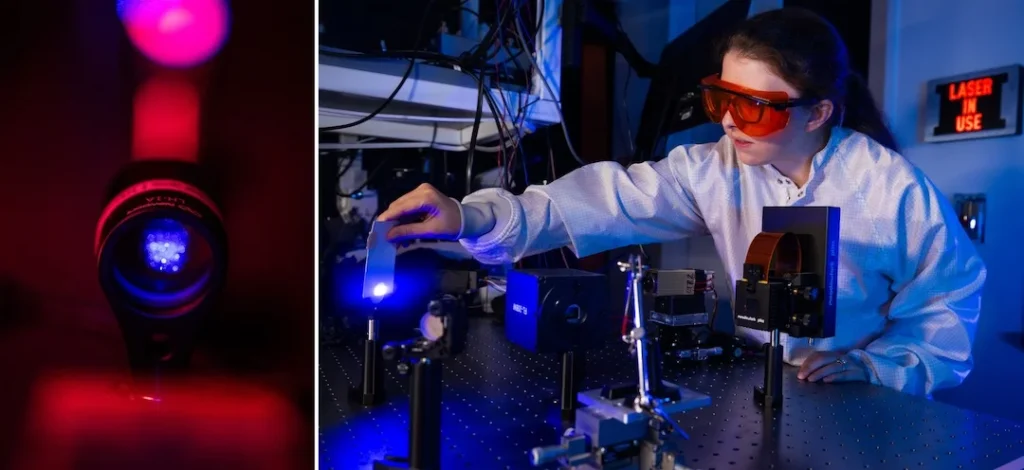

Photo credit: Jeff Fitlow/Rice University

Photo credit: Jeff Fitlow/Rice University

By Silvia Cernea Clark

Researchers at Rice University have developed a soft robotic arm capable of performing complex tasks such as navigating around an obstacle or hitting a ball, guided and powered remotely by laser beams without any onboard electronics or wiring. The research could inform new ways to control implantable surgical devices or industrial machines that need to handle delicate objects.

In a proof-of-concept study that integrates smart materials, machine learning and an optical control system, a team of Rice researchers led by materials scientist Hanyu Zhu used a light-patterning device to precisely induce motion in a robotic arm made from azobenzene liquid crystal elastomer ⎯ a type of polymer that responds to light.

According to the study published in Advanced Intelligent Systems, the new robotic system incorporates a neural network trained to predict the exact light pattern needed to create specific arm movements. This makes it easier for the robot to execute complex tasks without needing similarly complex input from an operator.

“This was the first demonstration of real-time, reconfigurable, automated control over a light-responsive material for a soft robotic arm,” said Elizabeth Blackert, a Rice doctoral alumna who is the first author on the study.

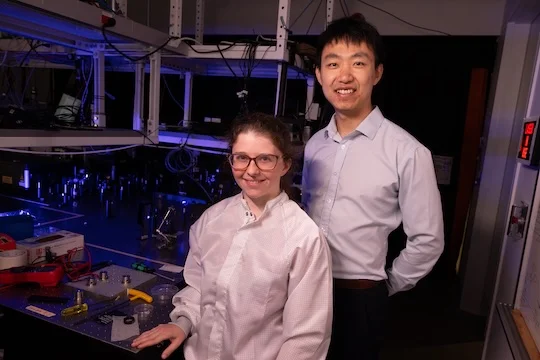

Elizabeth Blackert and Hanyu Zhu (Photo credit: Jeff Fitlow/Rice University).

Elizabeth Blackert and Hanyu Zhu (Photo credit: Jeff Fitlow/Rice University).

Conventional robots typically involve rigid structures with mobile elements like hinges, wheels or grippers to enable a predefined, relatively constrained range of motion. Soft robots have opened up new areas of application in contexts like medicine, where safely interacting with delicate objects is required. So-called continuum robots are a type of soft robot that forgoes mobility constraints, enabling adaptive motion with a vastly expanded degree of freedom.

“A major challenge in using soft materials for robots is they are either tethered or have very simple, predetermined functionality,” said Zhu, assistant professor of materials science and nanoengineering. “Building remotely and arbitrarily programmable soft robots requires a unique blend of expertise involving materials development, optical system design and machine learning capabilities. Our research team was uniquely suited to take on this interdisciplinary work.”

The team created a new variation of an elastomer that shrinks under blue laser light then relaxes and regrows in the dark ⎯ a feature known as fast relaxation time that makes real-time control possible. Unlike other light-sensitive materials that require harmful ultraviolet light or take minutes to reset, this one works with safer, longer wavelengths and responds within seconds.

“When we shine a laser on one side of the material, the shrinking causes the material to bend in that direction,” Blackert said. “Our material bends toward laser light like a flower stem does toward sunlight.”

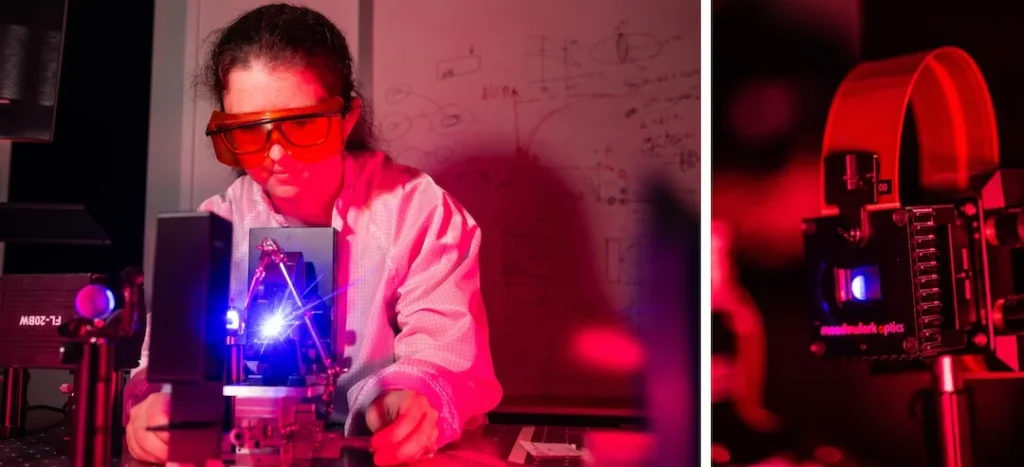

To control the material, the researchers used a spatial light modulator to split a single laser beam into multiple beamlets, each directed to a different part of the robotic arm. The beamlets can be turned on or off and adjusted in intensity, allowing the arm to bend or contract at any given point, much like the tentacles of an octopus. This technique can in principle create a robot with virtually infinite degrees of freedom ⎯ far beyond the capabilities of traditional robots with fixed joints.

“What is new here is using the light pattern to achieve complex changes in shape,” said Rafael Verduzco, professor and associate chair of chemical and biomolecular engineering and professor of materials science and nanoengineering. “In prior work, the material itself was patterned or programmed to change shape in one way, but here the material can change in multiple ways, depending on the laser beamlet pattern.”

To train such a multiparameter arm, the team ran a small number of combinations of light settings and recorded how the robot arm deformed in each case, using the data to train a convolutional neural network ⎯ a type of artificial intelligence used in image recognition. The model was then able to output the exact light pattern needed to create a desired shape such as flexing or a reach-around motion.

The current prototype is flat and moves in 2D, but future versions could bend in three dimensions with additional sensors and cameras.

Photo credit: Jeff Fitlow/Rice University

Photo credit: Jeff Fitlow/Rice University

“This is a step towards having safer, more capable robotics for various applications ranging from implantable biomedical devices to industrial robots that handle soft goods,” Blackert said.