Robot Talk Episode 138 – Robots in the environment, with Stefano Mintchev

Claire chatted to Stefano Mintchev from ETH Zürich about robots to explore and monitor the natural environment.

Stefano Mintchev is an Assistant Professor of Environmental Robotics at ETH Zürich in Switzerland. He has a Ph.D. in Bioinspired Robotics from Scuola Superiore Sant’Anna in Italy, and conducted postdoctoral research at EPFL in Switzerland, focused on bioinspired design principles for versatile aerial robots. At ETH Zürich, Stefano leads a research group working at the intersection of robotics and environmental science, developing robust and scalable bioinspired robotic technologies for monitoring and promoting the sustainable use of natural resources.

ReBeLMove Pro: modular robot platform for logistics, assembly and handling

At a Silicon Valley summit, robots fold laundry—and investors open their wallets

CASE STUDY STT SYSTEMS and STEMMER IMAGING: AUTOMOTIVE BOLT INSPECTION SYSTEM WITH GOCATOR SMART 3D LASER PROFILERS

A Quick Look at Multirotor Drone Maneuverability

Artificial tendons give muscle-powered robots a boost

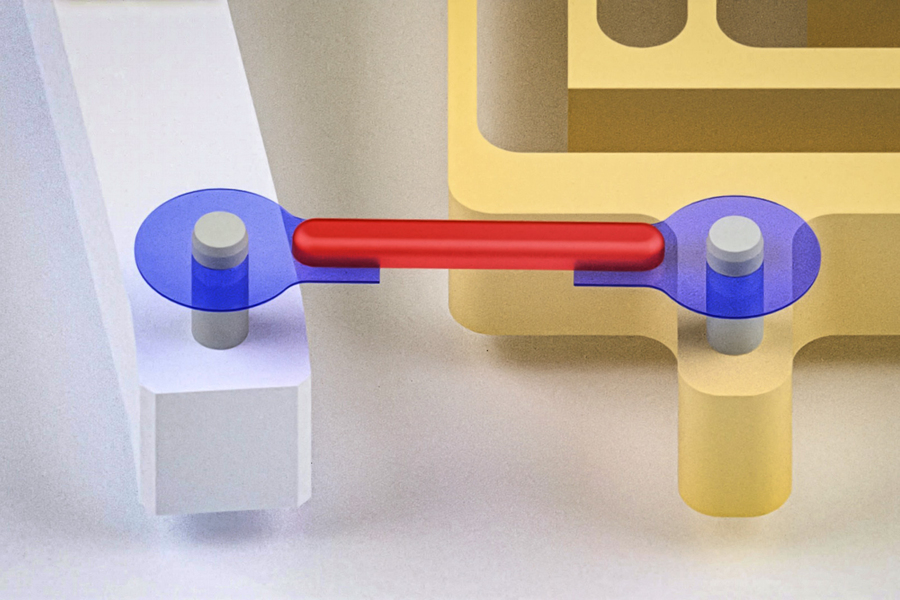

Researchers have developed artificial tendons for muscle-powered robots. They attached the rubber band-like tendons (blue) to either end of a small piece of lab-grown muscle (red), forming a “muscle-tendon unit.” Credit: Courtesy of the researchers; edited by MIT News.

Our muscles are nature’s actuators. The sinewy tissue is what generates the forces that make our bodies move. In recent years, engineers have used real muscle tissue to actuate “biohybrid robots” made from both living tissue and synthetic parts. By pairing lab-grown muscles with synthetic skeletons, researchers are engineering a menagerie of muscle-powered crawlers, walkers, swimmers, and grippers.

But for the most part, these designs are limited in the amount of motion and power they can produce. Now, MIT engineers are aiming to give bio-bots a power lift with artificial tendons.

In a study which recently appeared in the journal Advanced Science, the researchers developed artificial tendons made from tough and flexible hydrogel. They attached the rubber band-like tendons to either end of a small piece of lab-grown muscle, forming a “muscle-tendon unit.” Then they connected the ends of each artificial tendon to the fingers of a robotic gripper.

When they stimulated the central muscle to contract, the tendons pulled the gripper’s fingers together. The robot pinched its fingers together three times faster, and with 30 times greater force, compared with the same design without the connecting tendons.

The researchers envision that the new muscle-tendon unit can be fitted to a wide range of biohybrid robot designs, much like a universal engineering element.

“We are introducing artificial tendons as interchangeable connectors between muscle actuators and robotic skeletons,” says lead author Ritu Raman, an assistant professor of mechanical engineering (MechE) at MIT. “Such modularity could make it easier to design a wide range of robotic applications, from microscale surgical tools to adaptive, autonomous exploratory machines.”

The study’s MIT co-authors include graduate students Nicolas Castro, Maheera Bawa, Bastien Aymon, Sonika Kohli, and Angel Bu; undergraduate Annika Marschner; postdoc Ronald Heisser; alumni Sarah J. Wu and Laura Rosado; and MechE professors Martin Culpepper and Xuanhe Zhao.

Muscle’s gains

Raman and her colleagues at MIT are at the forefront of biohybrid robotics, a relatively new field that has emerged in the last decade. They focus on combining synthetic, structural robotic parts with living muscle tissue as natural actuators.

“Most actuators that engineers typically work with are really hard to make small,” Raman says. “Past a certain size, the basic physics doesn’t work. The nice thing about muscle is, each cell is an independent actuator that generates force and produces motion. So you could, in principle, make robots that are really small.”

Muscle actuators also come with other advantages, which Raman’s team has already demonstrated: The tissue can grow stronger as it works out, and can naturally heal when injured. For these reasons, Raman and others envision that muscly droids could one day be sent out to explore environments that are too remote or dangerous for humans. Such muscle-bound bots could build up their strength for unforeseen traverses or heal themselves when help is unavailable. Biohybrid bots could also serve as small, surgical assistants that perform delicate, microscale procedures inside the body.

All these future scenarios are motivating Raman and others to find ways to pair living muscles with synthetic skeletons. Designs to date have involved growing a band of muscle and attaching either end to a synthetic skeleton, similar to looping a rubber band around two posts. When the muscle is stimulated to contract, it can pull the parts of a skeleton together to generate a desired motion.

But Raman says this method produces a lot of wasted muscle that is used to attach the tissue to the skeleton rather than to make it move. And that connection isn’t always secure. Muscle is quite soft compared with skeletal structures, and the difference can cause muscle to tear or detach. What’s more, it is often only the contractions in the central part of the muscle that end up doing any work — an amount that’s relatively small and generates little force.

“We thought, how do we stop wasting muscle material, make it more modular so it can attach to anything, and make it work more efficiently?” Raman says. “The solution the body has come up with is to have tendons that are halfway in stiffness between muscle and bone, that allow you to bridge this mechanical mismatch between soft muscle and rigid skeleton. They’re like thin cables that wrap around joints efficiently.”

“Smartly connected”

In their new work, Raman and her colleagues designed artificial tendons to connect natural muscle tissue with a synthetic gripper skeleton. Their material of choice was hydrogel — a squishy yet sturdy polymer-based gel. Raman obtained hydrogel samples from her colleague and co-author Xuanhe Zhao, who has pioneered the development of hydrogels at MIT. Zhao’s group has derived recipes for hydrogels of varying toughness and stretch that can stick to many surfaces, including synthetic and biological materials.

To figure out how tough and stretchy artificial tendons should be in order to work in their gripper design, Raman’s team first modeled the design as a simple system of three types of springs, representing the central muscle, the two connecting tendons, and the gripper skeleton, respectively. They assigned stiffness values to the muscle and skeleton, which were previously known, and used these to calculate the tendon stiffness required to move the gripper by a desired amount.
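As a rough illustration of this kind of calculation, the sketch below treats the muscle as a linear actuator (its force drops linearly as it shortens) pulling through two identical tendon springs in series with the skeleton spring. The linear muscle model, the function names, and all numbers are assumptions made for illustration; they are not taken from the study.

```python
def skeleton_deflection(f0, k_muscle, k_tendon, k_skeleton):
    """Deflection of the skeleton spring for a given tendon stiffness.

    Assumed model (not from the study): muscle force F = f0 - k_muscle * x,
    where x is muscle shortening; two tendons (each k_tendon) and the
    skeleton (k_skeleton) are springs in series carrying the same force F.
    """
    # Series combination of two tendons and the skeleton.
    k_load = 1.0 / (2.0 / k_tendon + 1.0 / k_skeleton)
    # Force balance: f0 - k_muscle * x = k_load * x.
    force = f0 * k_load / (k_muscle + k_load)
    return force / k_skeleton


def required_tendon_stiffness(f0, k_muscle, k_skeleton, target_deflection):
    """Invert the model: tendon stiffness needed to reach a target deflection."""
    force = k_skeleton * target_deflection
    # Upper bound on transmitted force, reached with infinitely stiff tendons.
    max_force = f0 * k_skeleton / (k_muscle + k_skeleton)
    if not 0.0 < force < max_force:
        raise ValueError("target deflection is not reachable in this model")
    k_load = force * k_muscle / (f0 - force)
    return 2.0 / (1.0 / k_load - 1.0 / k_skeleton)


if __name__ == "__main__":
    # Illustrative, unitless numbers only.
    k_t = required_tendon_stiffness(f0=1.0, k_muscle=2.0, k_skeleton=4.0,
                                    target_deflection=0.1)
    print(f"required tendon stiffness: {k_t:.3f}")
    print(f"check deflection: {skeleton_deflection(1.0, 2.0, k_t, 4.0):.3f}")
```

In this simplified model a stiffer tendon always transmits more force, so it captures only the force-transmission side of the design problem, not the tearing and attachment constraints described in the article.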

From this modeling, the team derived a recipe for hydrogel of a certain stiffness. Once the gel was made, the researchers carefully etched it into thin cables to form artificial tendons. They attached one tendon to each end of a small sample of muscle tissue, which they grew using lab-standard techniques. They then wrapped each tendon around a small post at the end of each finger of the robotic gripper, a skeleton design that was developed by MechE professor Martin Culpepper, an expert in designing and building precision machines.

When the team stimulated the muscle to contract, the tendons in turn pulled on the gripper to pinch its fingers together. Over multiple experiments, the researchers found that the muscle-tendon gripper worked three times faster and produced 30 times more force compared with the same gripper actuated by just a band of muscle tissue (without any artificial tendons). The new tendon-based design was also able to sustain this performance over 7,000 cycles, or muscle contractions.

Overall, Raman saw that the addition of artificial tendons increased the robot’s power-to-weight ratio by 11 times, meaning that the system required far less muscle to do just as much work.

“You just need a small piece of actuator that’s smartly connected to the skeleton,” Raman says. “Normally, if a muscle is really soft and attached to something with high resistance, it will just tear itself before moving anything. But if you attach it to something like a tendon that can resist tearing, it can really transmit its force through the tendon, and it can move a skeleton that it wouldn’t have been able to move otherwise.”

The team’s new muscle-tendon design successfully merges biology with robotics, says biomedical engineer Simone Schürle-Finke, associate professor of health sciences and technology at ETH Zürich.

“The tough-hydrogel tendons create a more physiological muscle–tendon–bone architecture, which greatly improves force transmission, durability, and modularity,” says Schürle-Finke, who was not involved with the study. “This moves the field toward biohybrid systems that can operate repeatably and eventually function outside the lab.”

With the new artificial tendons in place, Raman’s group is moving forward to develop other elements, such as skin-like protective casings, to enable muscle-powered robots in practical, real-world settings.

This research was supported, in part, by the U.S. Department of Defense Army Research Office, the MIT Research Support Committee, and the National Science Foundation.

AI detects cancer but it’s also reading who you are

Plumbing the AI Revolution: Lenovo’s Strategic Pivot to Modernize the Enterprise Backbone

While the headlines of the ongoing AI revolution are often dominated by large language models and generative software, the silent war is being fought in the data center. The hardware required to feed, train, and infer upon these models is […]

The post Plumbing the AI Revolution: Lenovo’s Strategic Pivot to Modernize the Enterprise Backbone appeared first on TechSpective.

Ground robots teaming with soldiers in the battlefield

The brewing GenAI data science revolution

If you lead an enterprise data science team or a quantitative research unit today, you likely feel like you are living in two parallel universes.

In one universe, you have the “GenAI” explosion. Chatbots now write code and create art, and boardrooms are obsessed with how large language models (LLMs) will change the world. In the other universe, you have your day job: the “serious” work of predicting churn, forecasting demand, and detecting fraud using structured, tabular data.

For years, these two universes have felt completely separate. You might even feel that the GenAI hype rocketship has left your core business data standing on the platform.

But that separation is an illusion, and it is disappearing fast.

From chatbots to forecasts: GenAI arrives at tabular and time-series modeling

Whether you are a skeptic or a true believer, you have most certainly interacted with a transformer model to draft an email or a diffusion model to generate an image. But while the world was focused on text and pixels, the same underlying architectures have been quietly learning a different language: the language of numbers, time, and tabular patterns.

Take for instance SAP-RPT-1 and LaTable. The first uses a transformer architecture, and the second is a diffusion model; both are used for tabular data prediction.

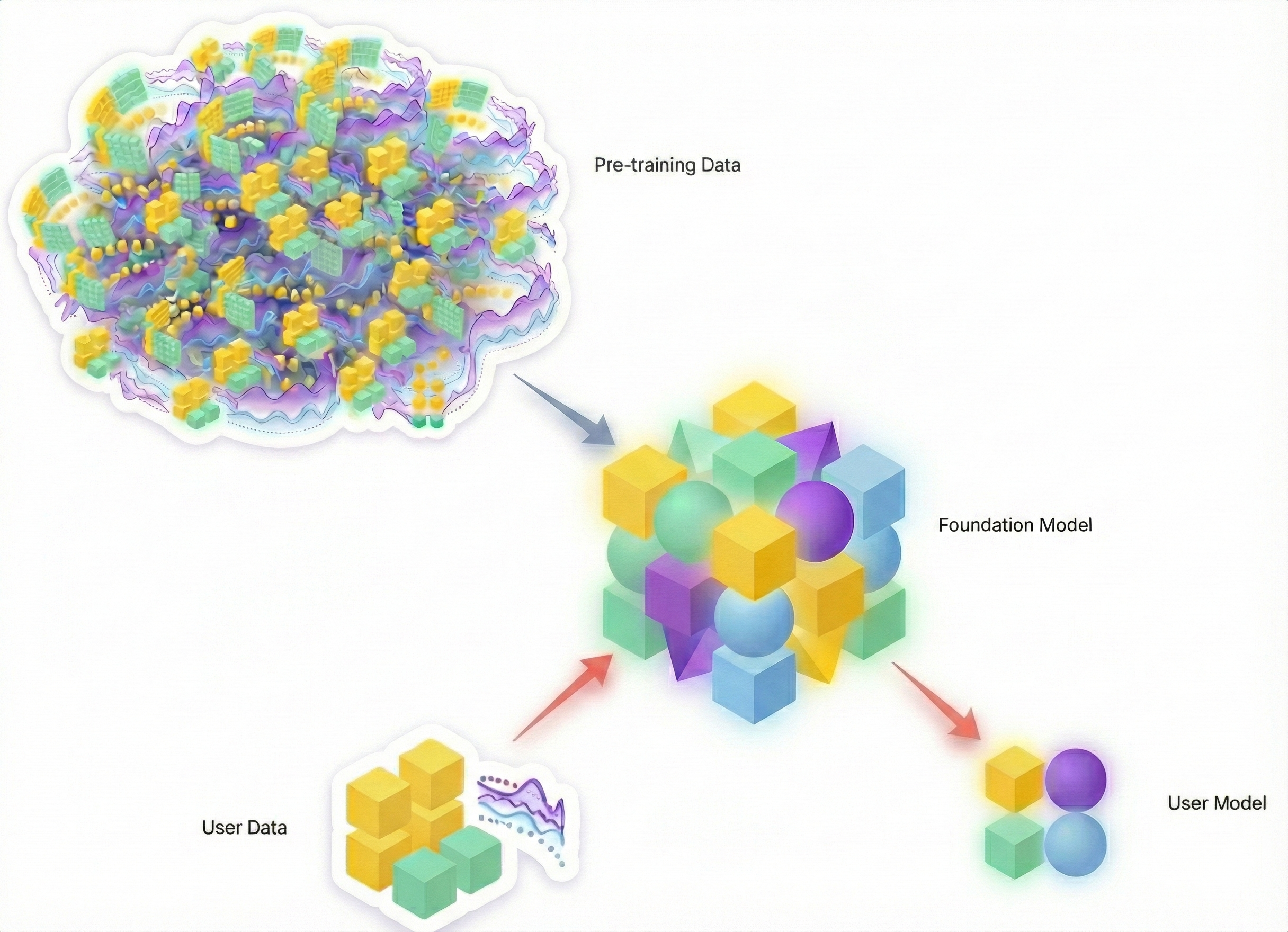

We are witnessing the emergence of data science foundation models.

These are not just incremental improvements to the predictive models you know. They represent a paradigm shift. Just as LLMs can “zero-shot” a translation task they weren’t explicitly trained for, these new models can look at a sequence of data, for example, sales figures or server logs, and generate forecasts without the traditional, labor-intensive training pipeline.

The pace of innovation here is staggering. By our count, since the beginning of 2025 alone, we have seen at least 14 major releases of foundation models specifically designed for tabular and time-series data. This includes impressive work from the teams behind Chronos-2, TiRex, Moirai-2, TabPFN-2.5, and TempoPFN (using SDEs for data generation), to name just a few frontier models.

Models have become model-producing factories

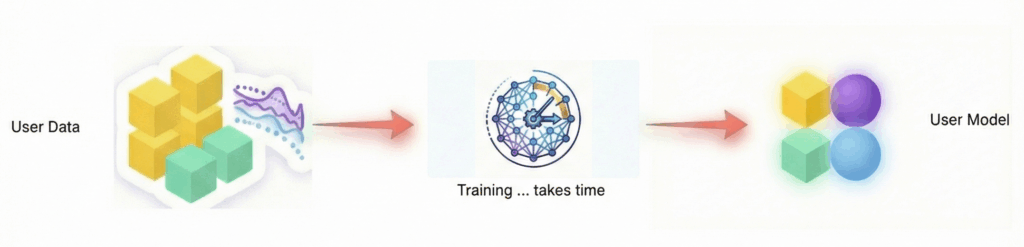

Traditionally, machine learning models were treated as static artifacts: trained once on historical data and then deployed to produce predictions.

That framing no longer holds. Increasingly, modern models behave less like predictors and more like model-generating systems, capable of producing new, situation-specific representations on demand.

We are moving toward a future where you won’t just ask a model for a single point prediction; you will ask a foundation model to generate a bespoke statistical representation—effectively a mini-model—tailored to the specific situation at hand.

The revolution isn’t coming; it’s already brewing in the research labs. The question now is: why isn’t it in your production pipeline yet?

The reality check: hallucinations and trend lines

If you’ve scrolled through the endless examples of grotesque LLM hallucinations online, including lawyers citing fake cases and chatbots inventing historical events, the thought of that chaotic energy infiltrating your pristine corporate forecasts is enough to keep you awake at night.

Your concerns are entirely justified.

Classical machine learning is the conservative choice for now

While the new wave of data science foundation models (our collective term for tabular and time-series foundation models) is promising, it is still very much in the early days.

Yes, model providers can currently claim top positions on academic benchmarks: all top-performing models on the time-series forecasting leaderboard GIFT-Eval and the tabular data leaderboard TabArena are now foundation models or agentic wrappers of foundation models. But in practice? The reality is that some of these “top-notch” models currently struggle to identify even the most basic trend lines in raw data.

They can handle complexity, but they sometimes trip over basics that a simple regression would nail. Check out the honest ablation studies in the TabPFN v2 paper, for instance.

Why we remain confident: the case for foundation models

While these models still face early limitations, there are compelling reasons to believe in their long-term potential. We have already discussed their ability to react instantly to user input, a core requirement for any system operating in the age of agentic AI. More fundamentally, they can draw on a practically limitless reservoir of prior information.

Think about it: who has a better chance at solving a complex prediction problem?

- Option A: A classical model that knows your data, but only your data. It starts from zero every time, blind to the rest of the world.

- Option B: A foundation model that has been trained on a mind-boggling number of relevant problems across industries, decades, and modalities—often augmented by vast amounts of synthetic data—and is then exposed to your specific situation.

Classical machine learning models (like XGBoost or ARIMA) do not suffer from the “hallucinations” of early-stage GenAI, but they also do not come with a “helping prior.” They cannot transfer wisdom from one domain to another.

The bet we are making, and the bet the industry is moving toward, is that eventually, the model with the “world’s experience” (the prior) will outperform the model that is learning in isolation.

The missing link: solving for reality, not leaderboards

Data science foundation models have a shot at becoming the next massive shift in AI. But for that to happen, we need to move the goalposts. Right now, what researchers are building and what businesses actually need remains disconnected.

Leading tech companies and academic labs are currently locked in an arms race for numerical precision, laser-focused on topping prediction leaderboards just in time for the next major AI conference. Meanwhile, they are paying relatively little attention to solving complex, real-world problems, which, ironically, pose the toughest scientific challenges.

The blind spot: interconnected complexity

Here is the crux of the problem: none of the current top-tier foundation models are designed to predict the joint probability distributions of several dependent targets.

That sounds technical, but the business implication is massive. In the real world, variables rarely move in isolation.

- City Planning: You cannot predict traffic flow on Main Street without understanding how it impacts (and is impacted by) the flow on 5th Avenue.

- Supply Chain: Demand for Product A often cannibalizes demand for Product B.

- Finance: Take portfolio risk. To understand true market exposure, a portfolio manager doesn’t simply calculate the worst-case scenario for every instrument in isolation. Instead, they run joint simulations. You cannot just sum up individual risks; you need a model that understands how assets move together.
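A two-asset toy example makes the portfolio point concrete. The sketch below uses the standard closed-form portfolio variance formula rather than a full joint simulation, and the weights, volatilities, and correlations are made-up numbers chosen only for illustration.

```python
import math


def portfolio_volatility(weights, vols, corr):
    """Volatility of a portfolio: sqrt(sum_ij w_i w_j s_i s_j rho_ij)."""
    n = len(weights)
    variance = 0.0
    for i in range(n):
        for j in range(n):
            variance += weights[i] * weights[j] * vols[i] * vols[j] * corr[i][j]
    # Guard against tiny negative values caused by floating-point rounding.
    return math.sqrt(max(variance, 0.0))


def summed_individual_risk(weights, vols):
    """Naive figure: weighted sum of stand-alone volatilities."""
    return sum(w * s for w, s in zip(weights, vols))


if __name__ == "__main__":
    weights = [0.5, 0.5]   # hypothetical allocation
    vols = [0.2, 0.3]      # hypothetical stand-alone volatilities
    print(f"summed individual risk: {summed_individual_risk(weights, vols):.3f}")
    for rho in (1.0, 0.0, -1.0):
        corr = [[1.0, rho], [rho, 1.0]]
        print(f"rho={rho:+.1f}: joint risk {portfolio_volatility(weights, vols, corr):.3f}")
```

The naive sum matches the joint figure only when the two assets are perfectly correlated. For any lower correlation the joint risk is smaller, which is why a model that predicts each target in isolation cannot recover the true exposure.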

The world is a messy, tangled web of dependencies. Current foundation models tend to treat it like a series of isolated textbook problems. Until these models can grasp that complexity, outputting a model that captures how variables dance together, they won’t replace existing solutions.

So, for the moment, your manual workflows are safe. But mistaking this temporary gap for a permanent safety net could be a grave mistake.

Today’s deep learning limits are tomorrow’s solved engineering problems

The missing pieces, such as modeling complex joint distributions, are not impossible laws of physics; they are simply the next engineering hurdles on the roadmap.

If the speed of 2025 has taught us anything, it is that “impossible” engineering hurdles have a habit of vanishing overnight. The moment these specific issues are addressed, the capability curve won’t just inch upward. It will spike.

Conclusion: the tipping point is closer than it appears

Despite the current gaps, the trajectory is clear and the clock is ticking. The wall between “predictive” and “generative” AI is actively crumbling.

We are rapidly moving toward a future where we don’t just train models on historical data; we consult foundation models that possess the “priors” of a thousand industries. We are heading toward a unified data science landscape where the output isn’t just a number, but a bespoke, sophisticated model generated on the fly.

The revolution is not waiting for perfection. It is iterating toward it at breakneck speed. The leaders who recognize this shift and begin treating GenAI as a serious tool for structured data before a perfect model reaches the market will be the ones who define the next decade of data science. The rest will be playing catch-up in a game that has already changed.

We are actively researching these frontiers at DataRobot to bridge the gap between generative capabilities and predictive precision. This is just the start of the conversation. Stay tuned—we look forward to sharing our insights and progress with you soon.

In the meantime, you can learn more about DataRobot and explore the platform with a free trial.

The post The brewing GenAI data science revolution appeared first on DataRobot.

Robotic arm successfully learns 1,000 manipulation tasks in one day

DataRobot Q4 update: driving success across the full agentic AI lifecycle

The shift from prototyping to running agents in production is the challenge for AI teams as we look toward 2026 and beyond. Building a cool prototype is easy: hook up an LLM, give it some tools, see if it looks like it’s working. The production system, now that’s hard. Brittle integrations. Governance nightmares. Infrastructure that wasn’t built for the complexities and nuances of agents.

For AI developers, the challenge has shifted from building an agent to orchestrating, governing, and scaling it in a production environment. DataRobot’s latest release introduces a robust suite of tools designed to streamline this lifecycle, offering granular control without sacrificing speed.

New capabilities accelerating AI agent production with DataRobot

New features in DataRobot 11.2 and 11.3 help you close the gap with dozens of updates spanning observability, developer experience, and infrastructure integrations.

Together, these updates focus on one goal: reducing the friction between building AI agents and running them reliably in production.

The most impactful areas of these updates include:

- Standardized connectivity through MCP on DataRobot

- Secure agentic retrieval through Talk to My Docs (TTMDocs)

- Streamlined agent build and deploy through CLI tooling

- Prompt version control through Prompt Management Studio

- Enterprise governance and observability through resource monitoring

- Multi-model access through the expanded LLM Gateway

- Expanded ecosystem integrations for enterprise agents

The sections that follow focus on these capabilities in detail, starting with standardized connectivity, which underpins every production-grade agent system.

MCP on DataRobot: standardizing agent connectivity

Agents break when tools change. Custom integrations become technical debt. The Model Context Protocol (MCP) is emerging as the standard to solve this, and we’re making it production-ready.

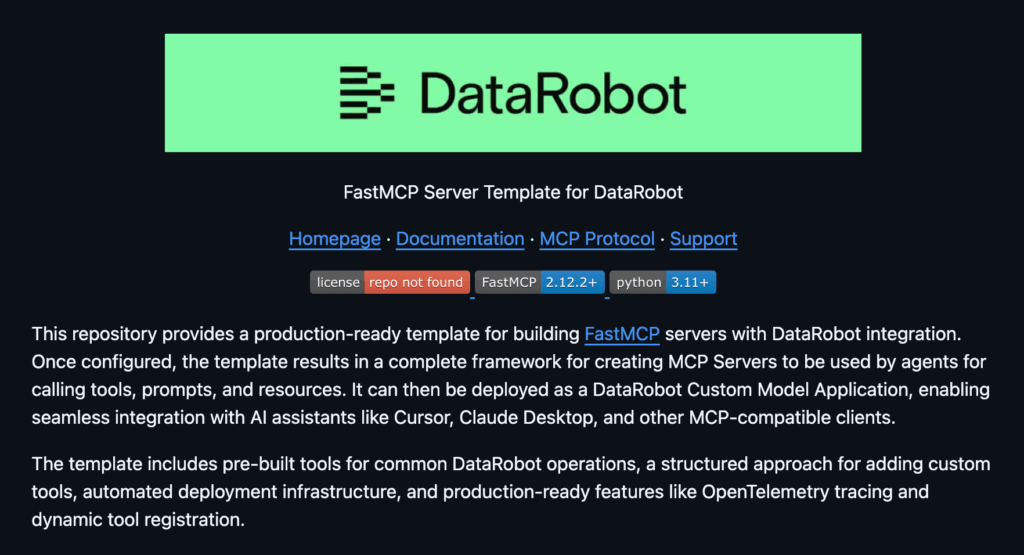

We’ve added an MCP server template to the DataRobot community GitHub.

- What’s new: An MCP server template you can clone, test locally, and deploy directly to your DataRobot cluster. Your agents get reliable access to tools, prompts, and resources without reinventing the integration layer every time. You can also easily convert your predictive models into tools that are discoverable by agents.

- Why it matters: With our MCP template, we’re giving you the open standard with enterprise guardrails already built in. Test on your laptop in the morning, deploy to production by afternoon.

Talk to My Docs: Secure, agentic knowledge retrieval

Everyone is building RAG. Almost nobody is building RAG with RBAC, audit trails, and the ability to swap models without rewriting code.

The “Talk to My Docs” application template brings natural language chat-style productivity across all your documents and is secured and governed for the enterprise.

- What’s new: A secure, governed chat interface that connects to Google Drive, Box, SharePoint, and local files. Unlike basic RAG, it handles complex formats such as tables and spreadsheets, as well as multi-doc synthesis, while maintaining enterprise-grade access control.

- Why it matters: Your team needs ChatGPT-style productivity. Your security team needs proof that sensitive documents stay restricted. This does both, out of the box.

Agentic application starter template and CLI: Streamlined build and deployment

Getting an agent into production should not require days of scaffolding, wiring services together, or rebuilding containers for every small change. Setup friction slows experimentation and turns simple iterations into heavyweight engineering work.

To address this, DataRobot is introducing an agentic application starter template and CLI, both designed to reduce setup overhead across both code-first and low-code workflows.

- What’s new: An agentic application starter template and CLI that let developers configure agent components through a single interactive command. Out-of-the-box components include an MCP server, a FastAPI backend, and a React frontend. For teams that prefer a low-code approach, integration with NVIDIA’s NeMo Agent Toolkit enables agent logic and tools to be defined entirely through YAML. Runtime dependencies can now be added dynamically, eliminating the need to rebuild Docker images during iteration.

- Why it matters: By minimizing setup and rebuild friction, teams can iterate faster and move agents into production more reliably. Developers can focus on agent logic rather than infrastructure, while platform teams maintain consistent, production-ready deployment patterns.

Prompt management studio: DevOps for prompts

As prompts move from experiments to production assets, ad hoc editing quickly becomes a liability. Without versioning and traceability, teams struggle to reproduce results or safely iterate.

To address this, DataRobot introduces the Prompt Management Studio, bringing software-style discipline to prompt engineering.

- What’s new: A centralized registry that treats prompts as version-controlled assets. Teams can track changes, compare implementations, and revert to stable versions as prompts move through development and deployment.

- Why it matters: By applying DevOps practices to prompts, teams gain reproducibility and control, making it easier to transition from prototyping to production without introducing hidden risk.
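To make the idea of prompts as version-controlled assets more tangible, here is a minimal in-memory sketch of the three operations named above: tracking changes, comparing versions, and reverting. The `PromptRegistry` class and its methods are hypothetical and written only for illustration; they are not the Prompt Management Studio API.

```python
import difflib


class PromptRegistry:
    """Hypothetical in-memory prompt registry (illustration only)."""

    def __init__(self):
        # Maps prompt name -> list of prompt texts, oldest first.
        self._history = {}

    def save(self, name, text):
        """Store a new version and return its 1-based version number."""
        versions = self._history.setdefault(name, [])
        versions.append(text)
        return len(versions)

    def get(self, name, version=None):
        """Return a specific version, or the latest if version is None."""
        versions = self._history[name]
        return versions[-1] if version is None else versions[version - 1]

    def diff(self, name, old, new):
        """Return unified-diff lines between two stored versions."""
        return list(difflib.unified_diff(
            self.get(name, old).splitlines(),
            self.get(name, new).splitlines(),
            lineterm="",
        ))

    def revert(self, name, version):
        """Re-save an older version as the newest one, keeping full history."""
        return self.save(name, self.get(name, version))


if __name__ == "__main__":
    registry = PromptRegistry()
    registry.save("summarize", "Summarize the text.")
    registry.save("summarize", "Summarize the text in three bullets.")
    print("\n".join(registry.diff("summarize", 1, 2)))
    registry.revert("summarize", 1)
    print(registry.get("summarize"))
```

Reverting by appending a copy, rather than deleting newer versions, keeps the full audit trail intact, which is one common design choice for this kind of registry.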

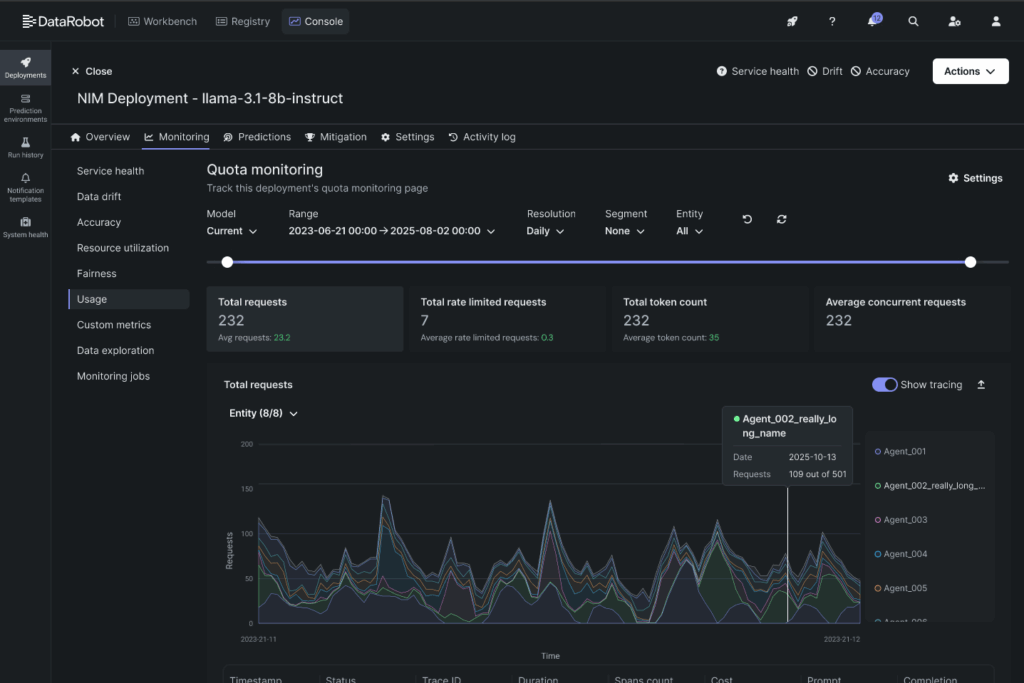

Multi-tenant governance and resource monitoring: Operational control at scale

As AI agents scale across teams and workloads, visibility and control become non-negotiable. Without clear insight into resource usage and enforceable limits, performance bottlenecks and cost overruns quickly follow.

- What’s new: The enhanced Resource Monitoring tab provides detailed visibility into CPU and memory utilization, helping teams identify bottlenecks and manage trade-offs between performance and cost. In parallel, Multi-tenant AI Governance introduces token-based access with configurable rate limits to ensure fair resource consumption across users and agents.

- Why it matters: Developers gain clear insight into how agent workloads behave in production, while platform teams can enforce guardrails that prevent noisy neighbors and uncontrolled resource usage as systems scale.

Expanded LLM Gateway: Multi-model access without credential sprawl

As teams experiment with agent behavior and reasoning, access to multiple foundation models becomes essential. Managing separate credentials, rate limits, and integrations across providers quickly introduces operational overhead.

- What’s new: The expanded LLM Gateway adds support for Cerebras and Together AI alongside Anthropic, providing access to models such as Gemma, Mistral, Qwen, and others through a single, governed interface. All models are accessed using DataRobot-managed credentials, eliminating the need to manage individual API keys.

- Why it matters: Teams can evaluate and deploy agents across multiple model providers without increasing security risk or operational complexity. Platform teams maintain centralized control, while developers gain flexibility to choose the right model for each workload.

New supporting ecosystem integrations

Jira and Confluence connectors: To power your vector databases, DataRobot provides a cohesive ecosystem for building enterprise-ready, knowledge-aware agents.

NVIDIA NIM Integration: Deploy Llama 4, Nemotron, GPT-OSS, and 50+ GPU-optimized models without the MLOps complexity. Pre-built containers, production-ready from day one.

Milvus Vector Database: Direct integration with the leading open-source VDB, plus the ability to select distance metrics that actually matter for your classification and clustering tasks.

Azure Repos & Git Integration: Seamless version control for Codespaces development with Azure Repos or self-hosted Git providers. No manual authentication required. Your code stays centralized where your team already works.

Get hands-on with DataRobot’s Agentic AI

If you’re already a customer, you can spin up the GenAI Test Drive in seconds. No new account. No sales call. Just 14 days of full access inside your existing SaaS environment to test these features with your actual data.

Not a customer yet? Start a 14-day free trial and explore the full platform.

For more information, please visit our Version 11.2 and Version 11.3 release notes in the DataRobot docs.

The post DataRobot Q4 update: driving success across the full agentic AI lifecycle appeared first on DataRobot.