Bill Smart, Professor of Mechanical Engineering and Robotics at Oregon State University, helped start competitions as part of ICRA. In this episode, Bill dives into the high-level decisions involved in creating a meaningful competition. The conversation explores how competitions showcase research, potential ideas for future competitions, the exciting phase robotics is currently in, and the intersection of robotics, ethics, and law.

Bill Smart

Dr. Smart does research in the areas of robotics and machine learning. In robotics, Smart is particularly interested in improving the interactions between people and robots; enabling robots to be self-sufficient for weeks and months at a time; and determining how they can be used as personal assistants for people with severe motor disabilities. In machine learning, Smart is interested in developing strategies for teaching robots to act effectively (or even optimally), based on long-term interactions with the world and given intermittent and at times incorrect feedback on their performance.

Links

- Download mp3 (29.1 MB)

- Subscribe to Robohub using iTunes, RSS, or Spotify

- Support us on Patreon

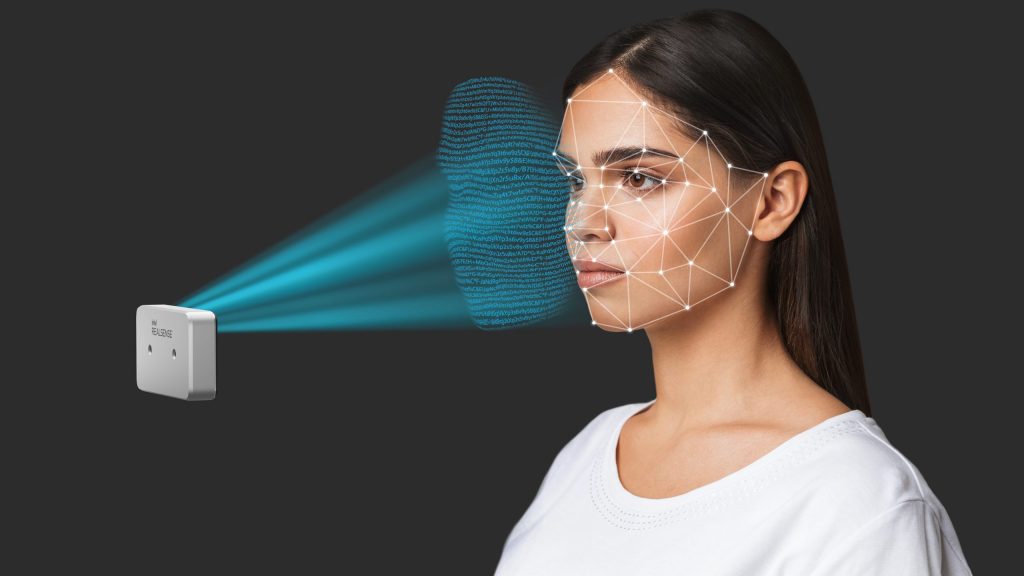



