A core objective of RoboCup is to promote and advance robotics and AI research through the challenges offered by its various leagues. The ultimate goal of the soccer competition is that, by 2050, a team of fully autonomous humanoid robots will defeat the most recent winner of the FIFA World Cup. To bring this vision closer to reality, the RoboCup Federation has announced several changes to the leagues. We spoke with Alessandra Rossi, a trustee who has been involved in the humanoid soccer league for many years, to learn more.

Could you start by introducing yourself and telling us how you’ve been involved in RoboCup throughout the years, because you’ve been involved in so many aspects of the competition!

I am Alessandra Rossi from the University of Naples “Federico II”, where I am an Assistant Professor of Computer Science. I began working with and collaborating in RoboCup in 2016, when I started my PhD at the University of Hertfordshire in the UK. I am still affiliated with the University of Hertfordshire, as I remain a member of the humanoid KidSize team Bold Hearts, the longest continuously active team in the UK. After a few years, I became the team leader of Bold Hearts.

In 2019, I became a member of both the Technical Committee and the Organizing Committee of the Humanoid League. After serving on the Technical Committee for two years, I was elected to the Executive Committee of the Humanoid League. In 2025, I was elected to the Board of Trustees for the first time.

Over the years, I have steadily increased my involvement and commitment to RoboCup. I have always sought to actively engage the RoboCup community, both during competitions and outside of competition periods. I also work to encourage engagement between the major and junior leagues and to participate in regional RoboCup events.

While I was working at the University of Hertfordshire as a Visiting Lecturer, we launched an online module that uses RoboCup as a benchmark for teaching robotics to undergraduate students. The module is still running. I initially served as the module leader, and this role has since been taken over by our Bold Hearts teammate, Bente Riegler.

Last year, Maike Paetzel-Prüsmann, Merel Keijsers, and I (as lead authors), in collaboration with several trustees and many members from different leagues, published a paper on the current and future challenges in humanoid robotics. The paper was published in Autonomous Robots and is, to the best of my knowledge, the first to involve such a large and diverse group of contributors from across the RoboCup leagues. It discusses research within RoboCup and the collaboration and synergies between the leagues.

Group photo of the humanoid league teams at RoboCup 2025.


I understand that there are some changes planned for the leagues. Could you say something about that, and specifically about the changes that affect the soccer and the humanoid side?

The 2050 goal of the RoboCup Federation, as many people are probably aware, is for a team of humanoid robots to play against the winners of the FIFA World Cup. To achieve this, it is necessary to push further in that direction. One of the key changes, therefore, will be a stronger focus on humanoid robots.

Another major change will be the merger of the Standard Platform League (SPL) and the KidSize Humanoid League. This merged league will have the freedom to define its exact format and to develop a new roadmap that aligns the entire league toward a shared objective. While the 2050 goal itself remains unchanged, the path toward achieving it will need to be adjusted.

It is crucial to continue fostering the engagement of teams in the leagues that will be affected by these changes. At the same time, we must recognize that technology is advancing rapidly. Over the past year, in particular, we have seen significant progress in both hardware platforms and large language models. As RoboCup serves as a global benchmark for robotics research, we should continuously strive to advance technology and research—while still having fun.

Soccer is the complex task and behavior we are studying, and it is complex in many dimensions: from physical control and robot motion, to communication and strategy, and even human-like interactions. These include responding to the referee’s whistle, verbal and non-verbal communication among team members, interactions with the coach, and communication with the referee. All of these aspects will ultimately need to be incorporated into the humanoid league.

The RoboCup Federation has agreed some new partnerships with Unitree, Fourier and Booster. What impact will this have on the humanoid league? Will there be a standard platform element with teams using a specific humanoid robot?

I believe we will see a mix of different robots. With the three companies currently sponsoring RoboCup, we have already seen that their robots can achieve a wide range of behaviors, and there have been significant improvements in robot control. Some of these robots can walk very quickly—almost to the point of running.

Initially, there may be the possibility of multiple teams using the same platform. However, we must keep in mind that both hardware and software can become obsolete very quickly, so we need to remain open to multiple options. A robot that is state of the art today may no longer be so in a year or two. As a result, committing to a single standard platform could limit future progress.

For this reason, the current idea is to remain open to multiple platforms. Many teams already have excellent custom-built robots, and further improvements to these platforms should be encouraged. That said, the exact structure has not yet been decided, and these decisions will be made in consultation with the teams. It is important to give the RoboCup community the time it needs to adapt and move forward.

There have been some big advances in the humanoid adult-size league in the past couple of years. What improvements stood out to you at RoboCup2025 in Brazil?

One major change is that we have added extra robots to each team. Previously, teams played with just two robots per side, but matches are now played three versus three.

Another important improvement is the reduced presence of humans on the field. There is no longer a handler assigned to each robot. In the past, a team member had to walk behind the robots in case they fell and risked being damaged.

I have actually played in a match against the winning humanoid team. Naturally, the human team won, but it was an enjoyable and very interesting game, as the robots were surprisingly fast.

Action from the human vs humanoid match at RoboCup 2025.

Further action from the human vs humanoid match at RoboCup 2025.

What has been the general reaction from the RoboCup community to the changes? I guess it depends on which league you’re in as to how much it affects you.

Yes, it depends on which league you are part of. The reactions have been a mix of excitement and passion. Of course, everyone is keen to see improvements, and participants have always been prepared for changes to the rules and the league structures. However, there are still some open questions, and teams are waiting to see how things will evolve. Tomorrow, there will be a meeting with the President and several trustees to address questions raised by the leagues.

The overall direction of RoboCup, guided by the 2050 goal, has not changed. Each league has been extremely valuable and has contributed in different ways toward achieving that goal. RoboCup has also been immensely valuable for robotics research more broadly. Beyond being fun, the challenges involved in making robots play soccer are extraordinarily complex. The research and solutions developed within RoboCup can be applied to many other fields and applications.

About Alessandra Rossi

Alessandra is Assistant Professor at the University of Naples Federico II, Italy. Her PhD thesis was part of the Marie Sklodowska-Curie Research ETN SECURE project at the University of Hertfordshire (UK). Her research interests include Human–(Multi) Robot Interaction, social robotics, trust, XAI, multi-agent systems and user profiling. She is Project Manager and co-supervisor of the MSCA projects PERSEO (955778), TRAIL (101072488) and SWEET (101168792). She is also co-PI of the project ERROR (FA8655-23-1-7060), and part of several national and international projects. Alessandra is also a trustee of the RoboCup Federation and a member of the Humanoid League team Bold Hearts. She is Chair of the IEEE P3108 “Study Design” group and a member of the “Appendix” group. She is Program Chair of IEEE RO-MAN 2027, and has been Robotic Challenge Chair at ICSR 2025, Special Session Chair of IEEE RO-MAN 2024, Publicity Chair of IEEE RO-MAN 2022 and 2023, and Organising Chair of the 26th RoboCup International Symposium 2023. She is on the program committee of several international conferences on human–robot interaction and artificial intelligence.

The Industrial League arena at RoboCup2025.

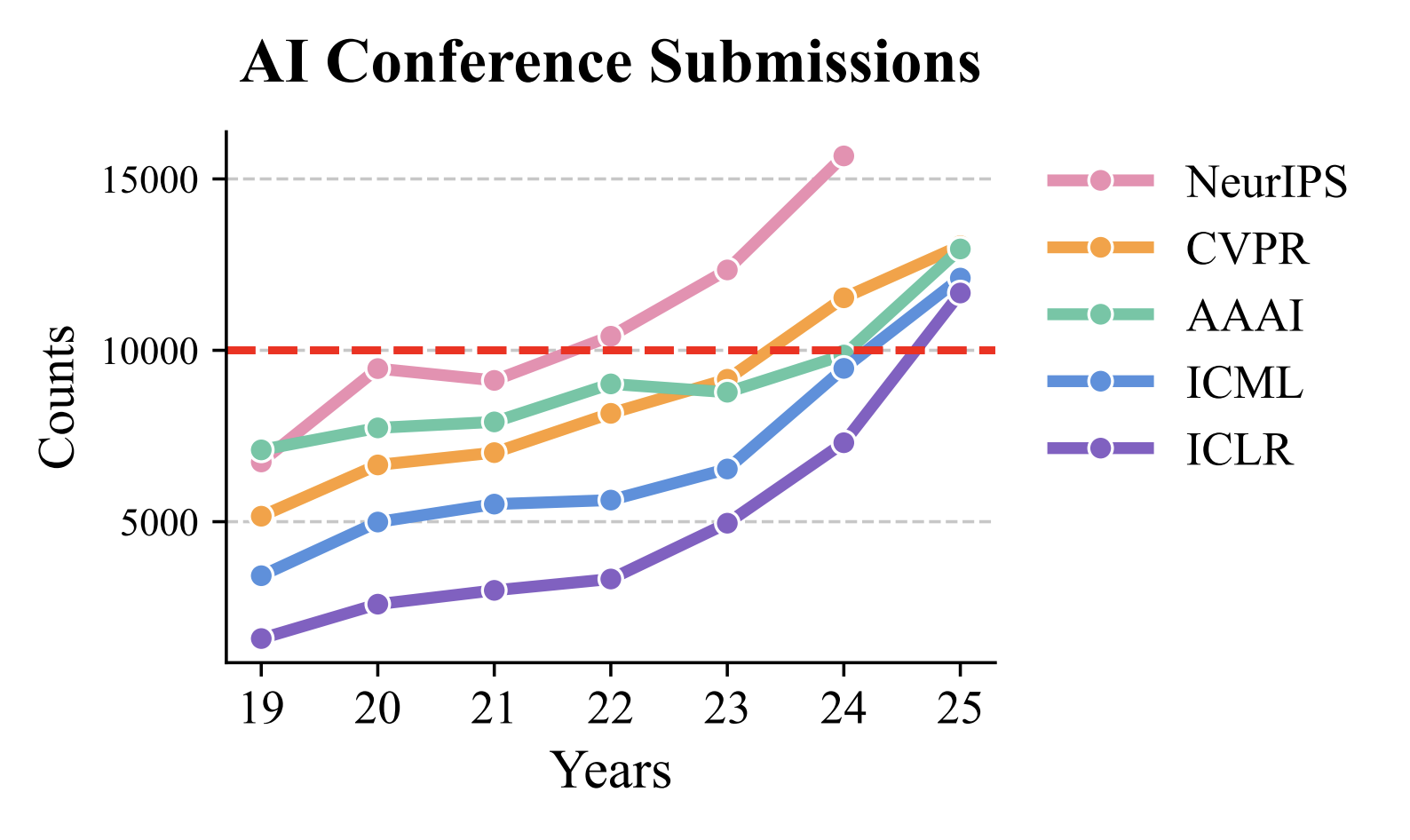

Submissions to some of the major AI conferences over the past few years.

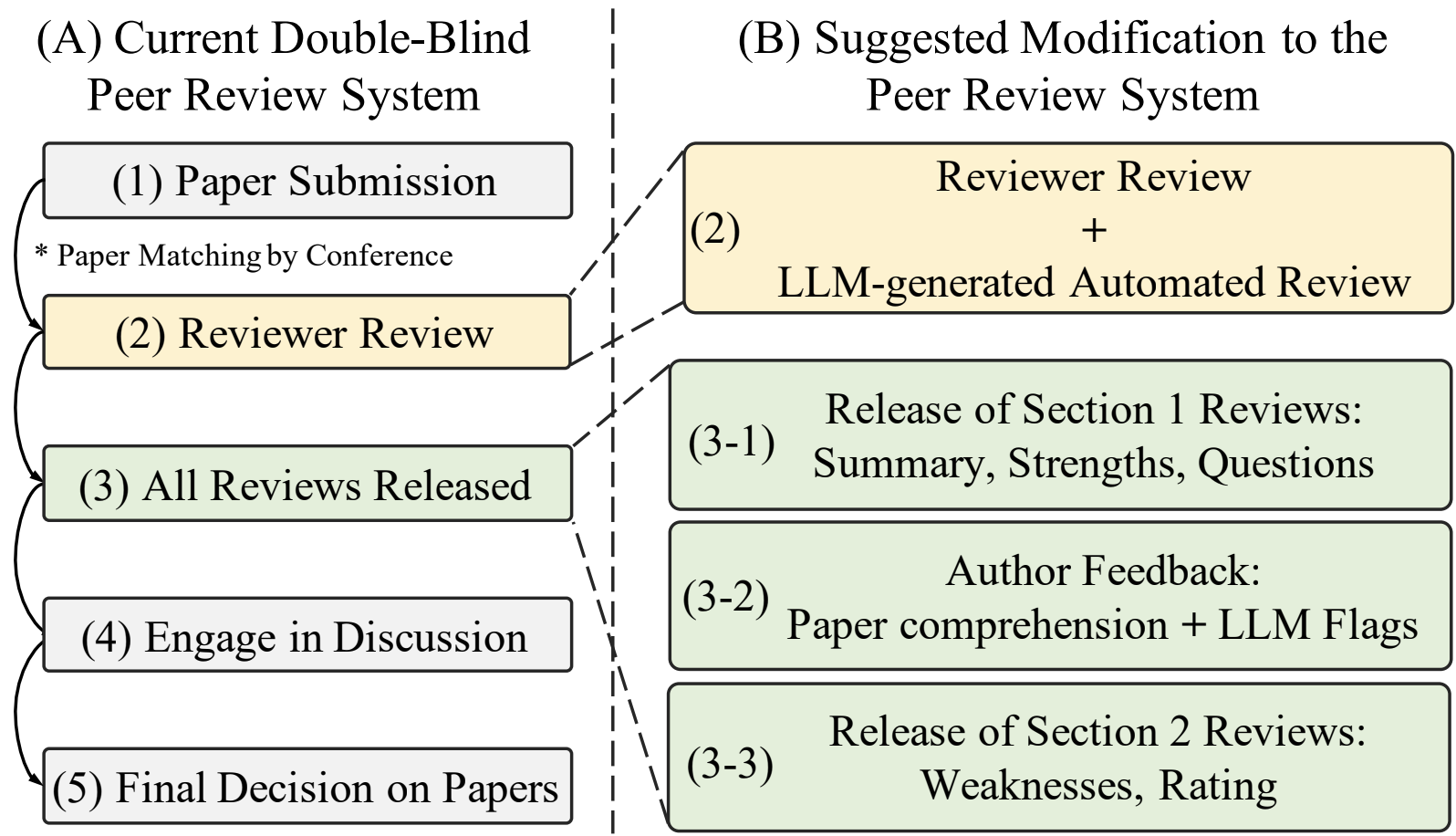

Submissions to some of the major AI conferences over the past few years. The authors’ proposed modification to the peer-review system.

The authors’ proposed modification to the peer-review system.

RoboCup@Work League teams at the event in Brazil.

RoboCup@Work League teams at the event in Brazil. Three robots from the @Work competition in Brazil.

Three robots from the @Work competition in Brazil.

RoboCup 2025 – Salvador, Brazil (@robocup2025)

RoboCup 2025 – Salvador, Brazil (@robocup2025)

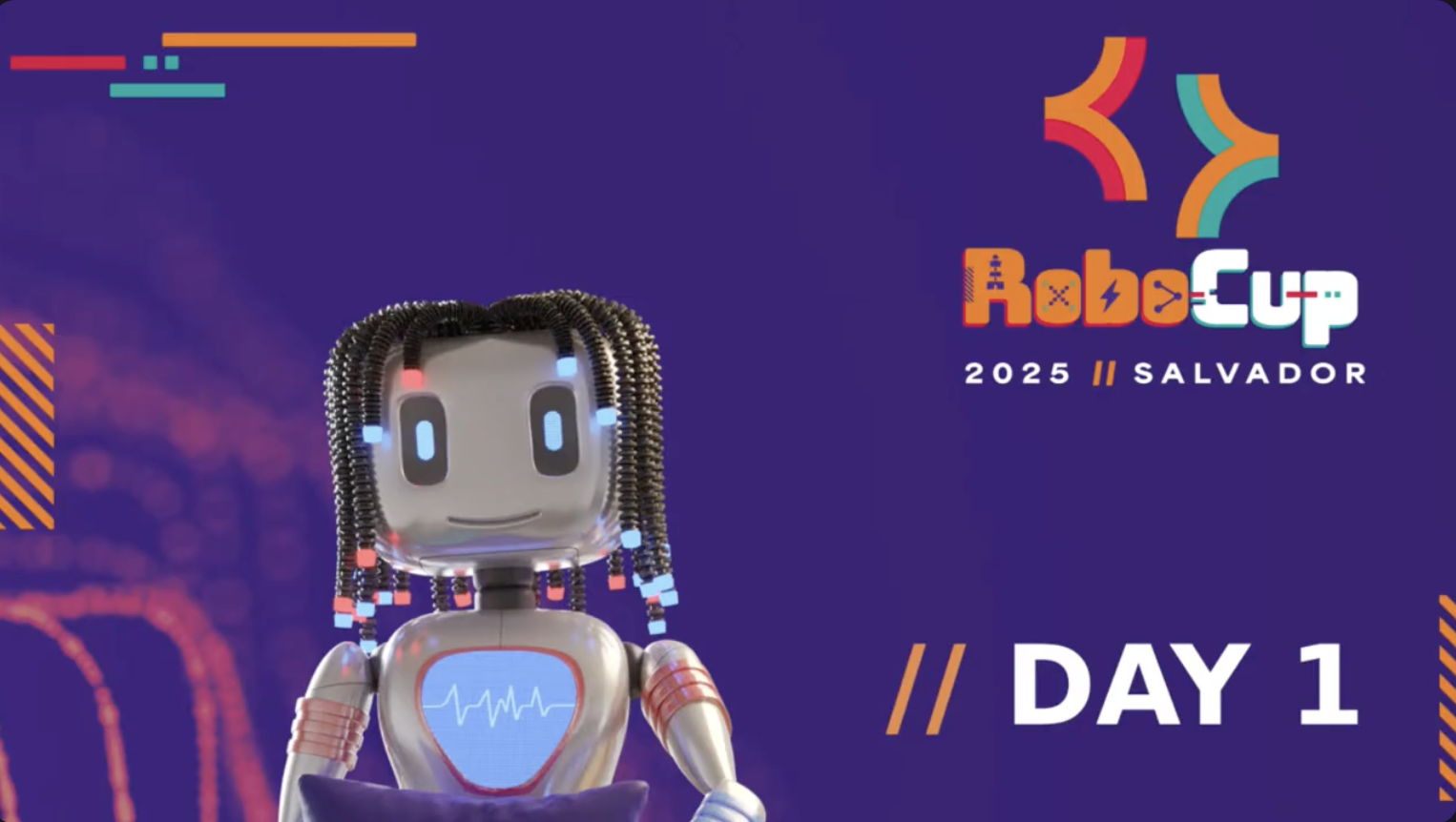

A screenshot from the new simulator that will be trialled for a special challenge at RoboCup2025.

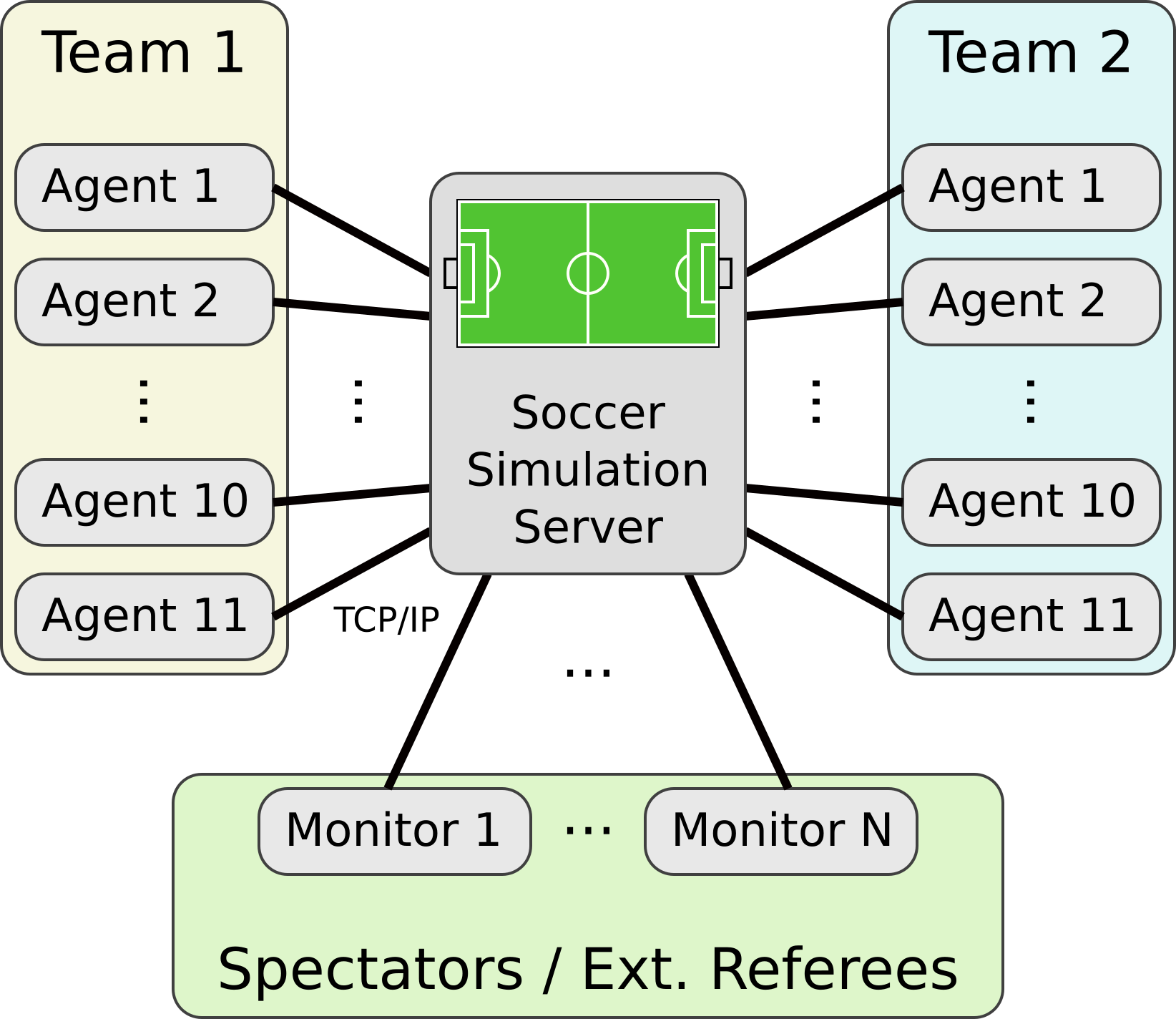

A screenshot from the new simulator that will be trialled for a special challenge at RoboCup2025. An illustration of the simulator set-up.

An illustration of the simulator set-up.

Kick-off in a Small Size League match. Image credit: Nicolai Ommer.

Kick-off in a Small Size League match. Image credit: Nicolai Ommer.

The RoboCupRescue arena at RoboCup2024, Eindhoven.

The RoboCupRescue arena at RoboCup2024, Eindhoven. The RoboCupRescue arena at ICRA2025 (one of the qualifying events for RoboCup2025). Credit: Adam Jacoff.

The RoboCupRescue arena at ICRA2025 (one of the qualifying events for RoboCup2025). Credit: Adam Jacoff. An example of one of the lanes (challenges) that teams tackle during competition. Credit: Adam Jacoff.

An example of one of the lanes (challenges) that teams tackle during competition. Credit: Adam Jacoff. One of the four-legged robots with wheels as feet. Image from video taken at ICRA 2025, of the robot tackling one of the test lanes. Credit: Adam Jacoff.

One of the four-legged robots with wheels as feet. Image from video taken at ICRA 2025, of the robot tackling one of the test lanes. Credit: Adam Jacoff.

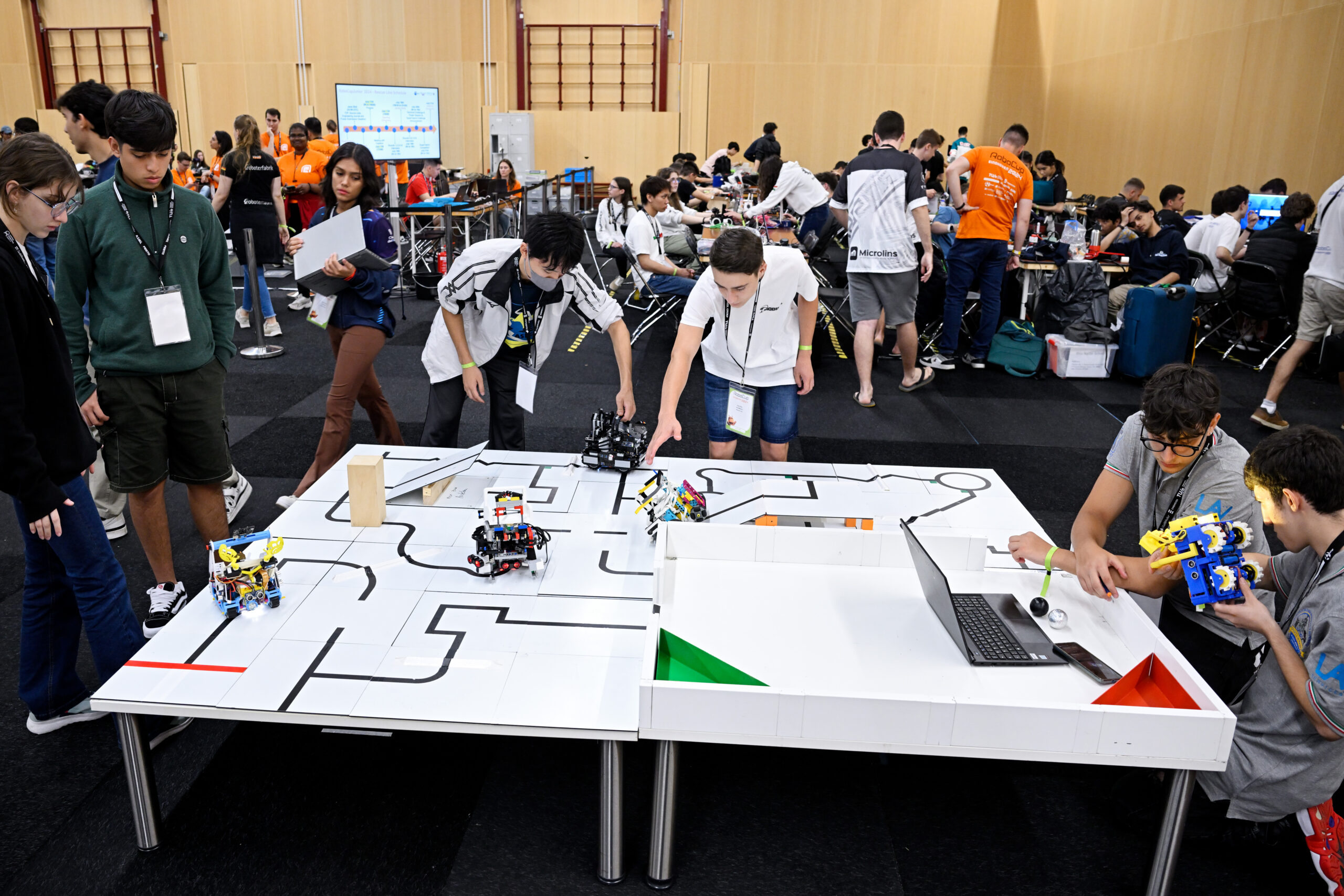

Action from RoboCupJunior Rescue at RoboCup 2024. Photo: RoboCup/Bart van Overbeeke.

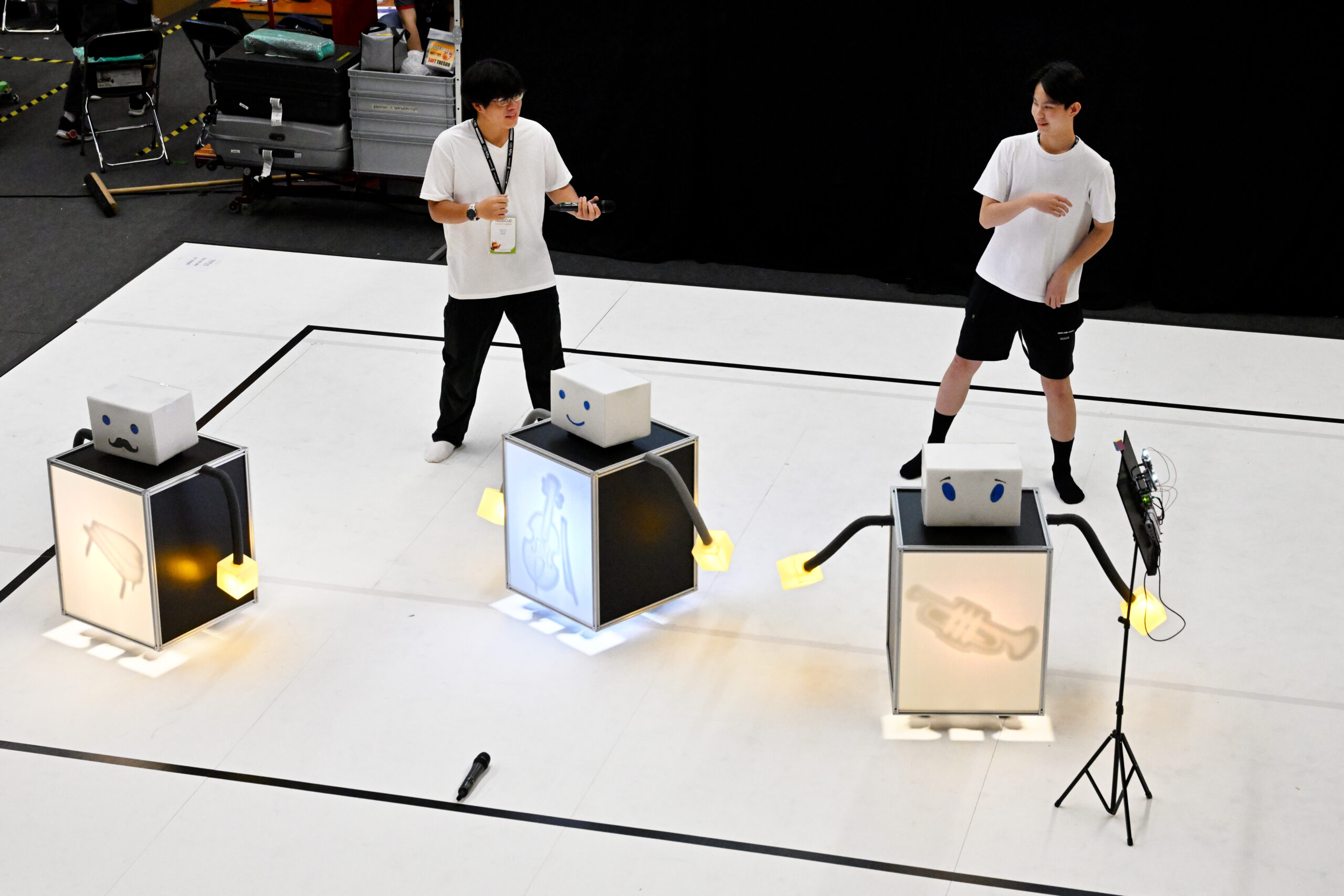

Action from RoboCupJunior Rescue at RoboCup 2024. Photo: RoboCup/Bart van Overbeeke. RoboCupJunior On Stage at RoboCup 2024. Photo: RoboCup/Bart van Overbeeke.

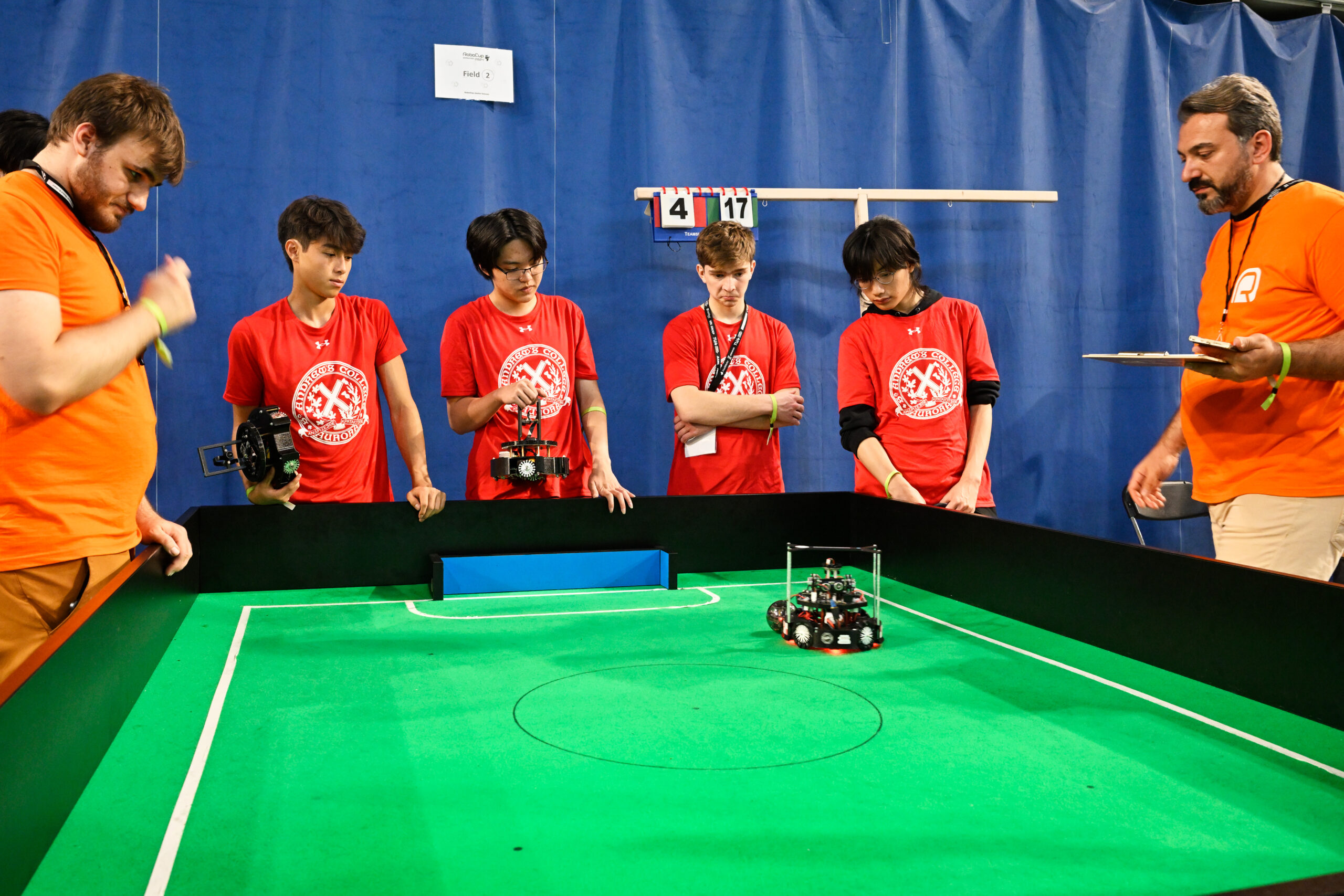

RoboCupJunior On Stage at RoboCup 2024. Photo: RoboCup/Bart van Overbeeke. RoboCupJunior Soccer at RoboCup 2024. Photo: RoboCup/Bart van Overbeeke.

RoboCupJunior Soccer at RoboCup 2024. Photo: RoboCup/Bart van Overbeeke. RoboCupJunior Rescue at RoboCup 2024. Photo: RoboCup/Bart van Overbeeke.

RoboCupJunior Rescue at RoboCup 2024. Photo: RoboCup/Bart van Overbeeke. RoboCupJunior Rescue at RoboCup 2024. Photo: RoboCup/Bart van Overbeeke.

RoboCupJunior Rescue at RoboCup 2024. Photo: RoboCup/Bart van Overbeeke.