Agentic AI is moving fast. In post one of this series, we looked at why agentic AI will fail without an AI gateway — the risks of cost sprawl, brittle workflows, and runaway complexity when there’s no unifying layer in place. In post two, we showed you how to tell whether a platform qualifies as a true AI gateway that brings abstraction, control, and agility together so enterprises can scale without breaking.

This post takes the next step, giving you a readiness check to avoid painful missteps or costly rework.

The risk is clear: The more progress you make without a gateway, the harder it becomes to retrofit one — and the more exposure you carry.

A true AI gateway needs to be customizable and future-proof by design, adapting as your architecture, policies, and budget evolve. The key is starting fast with a gateway that scales and adjusts with you rather than wasting effort on brittle builds that can’t keep up.

Let’s walk through the essential questions to help you assess where you stand and what it will take to support an AI gateway.

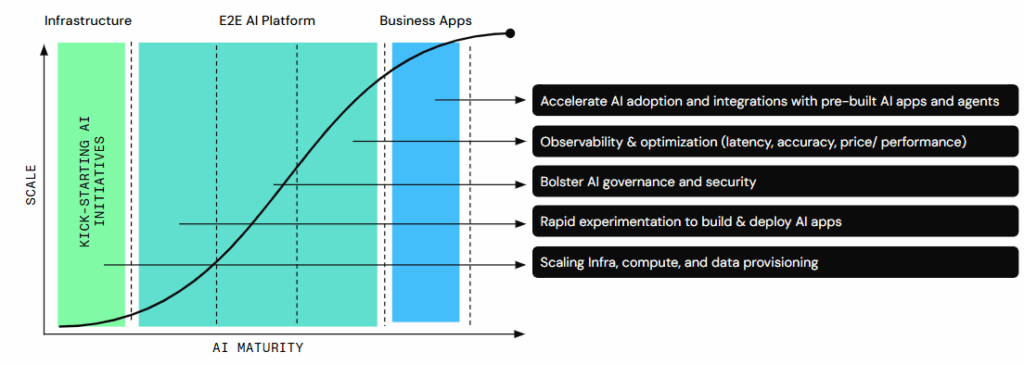

Where are you on the agentic AI maturity curve?

Before you decide whether you’re ready for an AI gateway, you need to know where your organization stands. Most AI leaders aren’t starting from zero, but aren’t exactly at the finish line, either.

Here’s a simple framework to pinpoint your AI maturity level:

- Stage 1: Infrastructure readiness: You’ve provisioned compute and environments. You can run early experiments, but nothing’s deployed yet. If this describes you, you’re still in the foundational phase where progress is more about setup than outcomes.

- Stage 2: Initial experimentation: You’ve deployed one or two agentic AI use cases into production. Teams are experimenting rapidly, and the business is starting to see value. This stage is marked by visible momentum, but your AI efforts remain limited in scope and maturity.

- Stage 3: Governance in place: Your AI is in production and actively maintained. You've implemented enterprise-grade security, compliance, and performance monitoring. You have real AI governance, not just experimentation. Reaching this point signals you've moved from ad hoc adoption to structured, enterprise-level operations.

- Stage 4: Optimization and observability: You’re scaling AI across more use cases. Dashboards, diagnostics, and optimization tools are helping you fine-tune performance, cost, and reliability. You’re pushing for efficiency and clarity. Here, maturity shows up in your ability to measure impact, compare trade-offs, and refine outcomes systematically.

- Stage 5: Full business integration: Agentic AI is embedded across your organization, threaded into business processes via apps and automations. At this stage, AI is no longer a project or program but part of the fabric of how the business runs day to day.

Most enterprises today sit between Stage 2 and Stage 3 of their agentic AI journey. Pinpointing your current stage will help you determine what to focus on to reach the next level of maturity while protecting the progress already achieved.

When should you start thinking about an AI gateway?

Waiting until “later” is what gets teams in trouble. By the time you feel the pain of not having one, you may already be facing rework, compliance risk, or ballooning costs. Here’s how your readiness maps to the maturity curve:

Stage 1: Infrastructure readiness

Gateway thinking should begin toward the end of this stage when your infrastructure is ready and early experiments are underway. This is where you’ll want to start identifying the control, abstraction, and agility you’ll need as you scale, because without that early alignment, each new experiment adds complexity that becomes harder to untangle later. A gateway lens helps you design for growth instead of patching over gaps down the road.

Stage 2: Initial experimentation

This is the ideal window of opportunity. You’ve got one or two use cases in production, which means complexity and risk are about to ramp up as more teams adopt AI, integrations multiply, and governance demands increase. Use this stage to assess readiness and shape gateway requirements before chaos multiplies.

That means looking closely at how your pilots are performing, where handoffs break down, and which controls you’ll need as adoption spreads. It’s also the time to define baseline requirements, like policy enforcement, monitoring, and tool interoperability, so the gateway reflects real needs rather than guesswork.

Stage 3: Governance in place

Ideally, you should already have a gateway by this stage. Without one, you’re likely duplicating effort, losing visibility, or struggling to enforce policies consistently. Implementing governance without a gateway makes scaling difficult because every new use case adds another layer of manual oversight and inconsistent enforcement.

That opens hidden gaps in security and compliance as teams create their own workarounds or bypass approval steps, leaving you vulnerable to issues like untracked data access, audit failures, or even regulatory fines.

At this point, risks stop being theoretical and surface as operational bottlenecks, mounting liability, and roadblocks that prevent you from moving beyond controlled experimentation into enterprise-scale adoption.

Stage 4: Optimization and observability

It’s not too late for an AI gateway at this point, but you’re in the danger zone. Most workflows are live and the number of tools you’re using has multiplied, which means complexity and scale are increasing rapidly. A gateway can still help optimize cost and observability, but implementation will be harder, rework will be inevitable, and overhead will be higher because every policy, integration, and workflow has to be shoehorned into systems already in motion.

The real risk here is runaway inefficiency: The more you scale without central control, the more your growing AI footprint turns from an asset into a burden.

Stage 5: Full business integration

This is the point where rolling out an AI gateway gets painful. Retrofitting at this stage means ripping out redundancies like duplicate data pipelines and overlapping automations, untangling a sprawl of disconnected tools that don't talk to each other, and trying to enforce consistent policies across teams that have built their own rules for access, security, and approvals. Costs spike, and efficiency gains come slowly because every fix requires unlearning and rebuilding what's already in use.

At this level, not having a gateway becomes a systemic drag where AI is deeply embedded organization-wide, but hidden inefficiencies prevent it from reaching its full potential.

TL;DR: Stage 2 is the sweet spot for standing up an AI gateway, Stage 3 is the last safe window, Stage 4 is a scramble, and Stage 5 is a headache (and a liability).

What should you already have in place?

Even if you’re early in your maturity journey, an AI gateway only delivers value if it’s set up on the right foundation. Think of it like building a highway: You can’t manage traffic at scale until the lanes are paved, the signals are working, and the on-ramps are in place.

Without the basics, adding a central control system just creates bottlenecks. So, if you’re missing the essentials, it’s too soon for a gateway. With the basics under your belt, the gateway becomes the load-bearing structure that keeps everything aligned, enforceable, and scalable.

At minimum, here’s what you should have in place before you’re ready for an AI gateway:

A few AI use cases in production

You don’t need dozens — just enough to prove AI is delivering real value. For example, your support team might use an AI assistant to triage tickets. Or finance could run a workflow that extracts data from invoices and reconciles it with purchase orders.

Why?: A gateway is about scaling and governing what already exists. Without real, active use cases, you have nothing to abstract or optimize. Think about the highway example above: If there’s no live traffic on the road, there’s nothing for signals to manage.

Core agentic components

Your environment should already include some mix of:

- LLMs: The engine that powers reasoning and generation.

- Data processing and orchestration: Unstructured data pipelines, pre-processing for video, images, or RAG, and the orchestration logic that sequences these steps. Together they bridge messy data and usable inputs.

- Vector databases: The memory layer that makes retrieval fast and relevant.

- APIs in active use: The connectors that let everything talk and work together.

Why?: A gateway is most effective when it can connect and coordinate across components. These components are your lanes, signals, and interchanges. They may not be fancy, but they keep traffic moving. If your architecture is still theoretical, the gateway has nothing to route, secure, or govern.

At least one defined workflow

A defined workflow should illustrate the path from raw input to real output, showing how your AI moves beyond theory into practice. It could be as simple as: LLM pulls from a vector DB → processes data → outputs results to a dashboard.

Why?: Gateways work best when they wrap around real flows, not isolated tools. Without at least one production workflow, you can't yet show that a critical system needs governance or observability.
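To make "wrapping a real flow" concrete, here is a minimal Python sketch of the example workflow above (vector DB, then LLM, then dashboard) routed through a single control point. Everything in it is hypothetical: the `Gateway` class, the stub functions standing in for a vector database, an LLM, and a dashboard, and the workflow name `invoice-recon` are illustrations, not any specific product's API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Gateway:
    """Hypothetical control point that every workflow step is routed through."""
    allowed_tools: dict[str, set[str]]  # workflow name -> tools it is approved to call
    audit_log: list[dict[str, Any]] = field(default_factory=list)

    def call(self, workflow: str, tool: str, fn: Callable[..., Any], *args: Any) -> Any:
        # Policy check before the call: only pre-approved tools for this workflow.
        if tool not in self.allowed_tools.get(workflow, set()):
            self.audit_log.append({"workflow": workflow, "tool": tool, "status": "denied"})
            raise PermissionError(f"{tool!r} is not approved for workflow {workflow!r}")
        result = fn(*args)
        # Observability: record each successful step of the flow.
        self.audit_log.append({"workflow": workflow, "tool": tool, "status": "ok"})
        return result


# Stubs standing in for real components (no vendor APIs implied).
def vector_search(query: str) -> list[str]:
    return [f"doc about {query}"]

def llm_summarize(docs: list[str]) -> str:
    return f"summary of {len(docs)} doc(s)"

def push_to_dashboard(text: str) -> str:
    return f"dashboard <- {text}"


def run_invoice_workflow(gw: Gateway, query: str) -> str:
    # LLM pulls from a vector DB -> processes data -> outputs results to a dashboard.
    docs = gw.call("invoice-recon", "vector_db", vector_search, query)
    summary = gw.call("invoice-recon", "llm", llm_summarize, docs)
    return gw.call("invoice-recon", "dashboard", push_to_dashboard, summary)


gw = Gateway(allowed_tools={"invoice-recon": {"vector_db", "llm", "dashboard"}})
print(run_invoice_workflow(gw, "PO-1234"))        # dashboard <- summary of 1 doc(s)
print([entry["tool"] for entry in gw.audit_log])  # ['vector_db', 'llm', 'dashboard']
```

Because every step goes through `gw.call`, the approval check and the audit log live in one place instead of being reimplemented inside each tool integration.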

Regulatory or operational mandates

Regulations and internal mandates shape how AI should be designed, deployed, and monitored in your organization. From GDPR and HIPAA to enterprise audit requirements, these rules dictate data handling, access control, and accountability. An AI gateway becomes the natural enforcement point, embedding compliance and auditability into the workflow so that growth doesn’t come at the expense of security or trust.

Why?: Because the control layer of an AI gateway is what helps you meet those requirements at scale. These are your traffic laws and safety codes. As AI adoption expands, mandates multiply by use case, region, and department.

For example, a healthcare workflow may need HIPAA compliance, while a customer support bot handling EU data must follow GDPR. A gateway scales with that complexity, providing policy enforcement and auditability without manual effort.
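The four prerequisites above can be turned into a simple self-assessment. The sketch below is a hypothetical checklist in Python, not a standard scoring method; the thresholds (`min_use_cases`, `min_components`) and the component labels are assumptions you would tune to your own environment.

```python
from dataclasses import dataclass, field

# Shorthand labels (our own, hypothetical) for the four component families listed above.
CORE_COMPONENTS = {"llm", "data_processing", "vector_db", "apis"}


@dataclass
class ReadinessProfile:
    use_cases_in_production: int
    components: set[str] = field(default_factory=set)
    production_workflows: int = 0
    mandates: list[str] = field(default_factory=list)  # e.g. ["GDPR", "internal audit"]


def readiness_gaps(p: ReadinessProfile, min_use_cases: int = 1,
                   min_components: int = 2) -> list[str]:
    """Return unmet prerequisites; an empty list means the foundation looks in place."""
    gaps = []
    if p.use_cases_in_production < min_use_cases:
        gaps.append(f"fewer than {min_use_cases} AI use case(s) in production")
    present = p.components & CORE_COMPONENTS
    if len(present) < min_components:
        missing = ", ".join(sorted(CORE_COMPONENTS - present))
        gaps.append(f"too few core components in use; candidates: {missing}")
    if p.production_workflows < 1:
        gaps.append("no defined end-to-end workflow in production")
    if not p.mandates:
        gaps.append("regulatory or operational mandates not documented")
    return gaps


early = ReadinessProfile(use_cases_in_production=0, components={"llm"})
for gap in readiness_gaps(early):
    print("-", gap)
```

An empty result doesn't mean a gateway is guaranteed to succeed; it only means none of the basics this checklist looks for are obviously missing.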

Do you have a documented agentic AI strategy?

A gateway can’t enforce what isn’t defined.

If your team hasn't articulated the constraints agentic AI needs to operate under, the success criteria it should meet, and the growth phases it will move through, your gateway has nothing to optimize, secure, or scale.

A well-documented agentic AI strategy gives the gateway a clear mission and should spell out:

- Where agentic AI will be used: Identify where agentic AI will operate (e.g., marketing analytics, customer operations) so the gateway can apply guardrails, permissions, and visibility by domain.

- An adoption and growth plan: Map how AI will expand (from pilots to enterprise scale) so the gateway can orchestrate rollout, provisioning, and monitoring consistently.

- Success criteria: Establish measurable outcomes (ROI, cycle-time reduction, cost efficiency) the gateway can track through observability and reporting.

- Governance and security mandates: Specify frameworks (GDPR, SOC 2, HIPAA) and review cadences so the gateway can automate enforcement and auditing.

- Budget alignment and resourcing plans: Clarify ownership of gateway operations, covering who approves, maintains, and funds control systems, to build in accountability from day one.

- Best practices for scale: Define universal policies (data access, API usage, prompt management) that the gateway can standardize across teams to prevent drift and duplication.

Do you have regulatory or operational mandates to fulfill?

Every enterprise operates under mandates that define how AI is implemented and secured. The real question is whether your systems can enforce them automatically at scale.

An AI gateway makes at-scale enforcement possible. It embeds policy controls, access management, logging, and auditability into every agentic workflow, turning compliance from a manual burden into a continuous safeguard. Without that unified layer, enforcement breaks down and risks (including possible fines) multiply.

Consider the mandates your gateway needs to operationalize:

- Legal and regulatory requirements by region or sector: For example, healthcare teams must maintain HIPAA compliance, while global enterprises face GDPR and cross-border data transfer rules — all of which the gateway enforces through policy and access control.

- Internal compliance rules: These often include model approval workflows, data retention policies, and audit trails to prove accountability. Without a central control layer, these processes quickly become inconsistent across departments.

- Documentation needs: AI explainability and traceability aren’t just “nice to have” — they’re often mandatory for internal audits or external regulators. Finance teams, for example, may need to demonstrate how automated credit models reach decisions. The gateway embeds these into workflows, automatically logging activity and decisions for regulators or internal review.
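One way to picture a gateway "enforcing through policy and access control" is a rule table that maps the context of each request to the frameworks and controls it triggers. This Python sketch is illustrative only: the rule predicates, control names such as `phi_access_control`, and the `RequestContext` fields are hypothetical, and none of it is legal guidance on what HIPAA or GDPR actually require.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class RequestContext:
    sector: str                      # e.g. "healthcare", "finance", "support"
    data_region: str                 # where the data subjects are, e.g. "EU", "US"
    automated_decision: bool = False


# Illustrative rule table: (does it apply?, framework label, controls to enforce).
POLICY_RULES: list[tuple[Callable[[RequestContext], bool], str, set[str]]] = [
    (lambda c: c.sector == "healthcare", "HIPAA", {"phi_access_control", "audit_log"}),
    (lambda c: c.data_region == "EU", "GDPR", {"cross_border_transfer_check", "audit_log"}),
    (lambda c: c.sector == "finance" and c.automated_decision,
     "decision traceability", {"decision_trace", "audit_log"}),
]


def required_controls(ctx: RequestContext) -> tuple[list[str], set[str]]:
    """Collect every framework that applies to a request and the union of its controls."""
    frameworks: list[str] = []
    controls: set[str] = set()
    for applies, framework, rule_controls in POLICY_RULES:
        if applies(ctx):
            frameworks.append(framework)
            controls |= rule_controls
    return frameworks, controls


print(required_controls(RequestContext(sector="healthcare", data_region="EU")))
```

A healthcare request touching EU data picks up both sets of controls at once, which is the kind of overlap that is hard to keep consistent when each team hand-codes its own checks.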

Are your governance, security, and approval inputs ready?

Governance and security are how you translate compliance intent into operational reality, and what keeps audit fire drills and access loopholes from derailing scale. Building on your regulatory mandates, your gateway should automate enforcement, consistently applying approvals, permissions, and audit trails across every workflow.

But your gateway can’t enforce rules you haven’t set. That means having:

- Defined roles, responsibilities, and permission hierarchies (RBAC, approvals): Clarify who can build, approve, or deploy AI workflows.

- Internal policies for responsible AI, data ethics, and usage boundaries: Set guidelines like requiring human-in-the-loop review or restricting model access to sensitive data.

- Security protocols aligned to each use case’s sensitivity: Maintain stronger safeguards for financial or healthcare data, lighter ones for internal knowledge bots.

- Infrastructure support for audit trails and enforcement: Use automated logs and version histories that make compliance reviews seamless.

A gateway doesn’t invent rules. It executes on the ones you’ve set. If you haven’t mapped who can do what — and under what conditions — you can’t scale agentic AI safely.
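To show what mapping "who can do what, and under what conditions" can look like once it's written down, here is a minimal role-based access check that records every decision in an audit trail. The roles, actions, and the rule that high-sensitivity workflows need a prior approval before deployment are hypothetical examples, not a prescribed policy.

```python
from datetime import datetime, timezone

# Hypothetical role -> permitted actions mapping (who can build, approve, or deploy).
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "builder": {"build"},
    "approver": {"approve"},
    "release_manager": {"deploy"},
    "admin": {"build", "approve", "deploy"},
}

audit_trail: list[dict] = []  # append-only record of every authorization decision


def authorize(user: str, role: str, action: str, workflow: str,
              sensitivity: str, approved: bool = False) -> bool:
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    # Assumed rule: deploying a high-sensitivity workflow (e.g. financial or
    # healthcare data) also requires a recorded prior approval.
    if allowed and action == "deploy" and sensitivity == "high" and not approved:
        allowed = False
    audit_trail.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "user": user, "role": role, "action": action,
        "workflow": workflow, "allowed": allowed,
    })
    return allowed


print(authorize("raj", "release_manager", "deploy", "claims-triage", "high"))  # False
print(authorize("raj", "release_manager", "deploy", "claims-triage", "high", approved=True))  # True
```

Denied requests are logged alongside granted ones, so a compliance review can see attempted actions, not just successful ones.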

Measuring ROI from your gateway

Every AI program reaches a point where cost control becomes strategy. A gateway helps you reach that point sooner, turning unpredictable, hidden costs into measurable efficiency gains. The setup investment pays for itself quickly once governance, observability, and scale are unified.

Without a gateway, costs are higher and harder to see: Teams lose time to manual reviews, DevOps hours pile up, and brittle architectures lock you into tools you’ve outgrown.

Multiply that across every use case, and missed savings compound into real financial strain.

A gateway eliminates those drains across several areas:

- Operational load: Automating governance and monitoring cuts DevOps overhead and rework time, freeing teams to focus on delivery instead of repair.

- Financial exposure: Continuous enforcement and auditability reduce compliance risk, regulatory penalties, and remediation costs.

- Technical debt: Standardized orchestration prevents overbuilding, compute overuse, and vendor lock-in, which reduces the need for expensive rebuilds later.

- Opportunity cost: With consistent controls in place, you can test new tools, scale proven use cases faster, and capture competitive advantage sooner.

Think about two companies starting their agentic AI journey. Company A invests in a gateway early, while Company B tries to scale without it.

Company A's return on investment (ROI) compounds over time. The upfront investment pays off through lower operating costs, faster innovation cycles, and reduced risk exposure. Company B may save upfront by skipping setup, but those savings are eroded later by rework, downtime, and missed growth opportunities.
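The compounding effect can be illustrated with a toy cost model. The inputs below are invented, in arbitrary units, purely to show the shape of the curves: Company A pays a one-time setup cost and a lower running cost per live use case, while Company B pays nothing up front but carries extra manual review and DevOps overhead on every use case it adds. The function name and parameters are hypothetical.

```python
def cumulative_cost(quarters: int, setup: float, per_use_case: float,
                    new_use_cases_per_quarter: int = 1) -> list[float]:
    """Cumulative spend at the end of each quarter (arbitrary units, toy model)."""
    total = setup
    history = []
    for q in range(1, quarters + 1):
        live_use_cases = q * new_use_cases_per_quarter  # portfolio grows each quarter
        total += live_use_cases * per_use_case          # every live use case costs something to run
        history.append(total)
    return history


# Hypothetical inputs, chosen only for illustration.
company_a = cumulative_cost(8, setup=100, per_use_case=10)  # gateway: upfront cost, lower overhead
company_b = cumulative_cost(8, setup=0, per_use_case=15)    # no gateway: extra review/DevOps per use case

breakeven = next(q for q, (a, b) in enumerate(zip(company_a, company_b), start=1) if a < b)
print(f"Company A pulls ahead in quarter {breakeven}")  # Company A pulls ahead in quarter 6
```

With these made-up inputs, Company A stays ahead on cumulative cost from the sixth quarter onward, and the gap widens as use cases accumulate; real crossover points depend entirely on your own setup and operating costs.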

Ultimately, the outcome is cost discipline that scales with your AI ecosystem, keeping spend in check while turning compliance and agility into continuous ROI.

Take the next step

This readiness check is designed to help you avoid the missteps that slow AI maturity, from costly rework to mounting risk. The further you advance without an AI gateway, the more complicated it becomes to stand one up.

The best time to act is when early pilots start proving value. That’s the stage when oversight and scalability begin to intersect. By pinpointing where you sit on the maturity curve and confirming you have core use cases, foundational workflows, and clear policies in place, you can stand up a gateway that strengthens what’s already working instead of rebuilding later.

Whether you build or buy doesn’t matter. What matters is whether or not you’re prepared to support a gateway designed to match your architecture and enforce your policies while evolving with your budget.

If you’re ready to turn assessment into action, start with our Enterprise Guide to Agentic AI. It’s your roadmap for designing a gateway strategy that scales safely, efficiently, and without compromise.

The post How do you know if you’re ready to stand up an AI gateway? appeared first on DataRobot.