“Learning about the social implications of the technology you’re working on is really important,” says senior Austen Roberson. Photo: Jodi Hilton

By Laura Rosado | MIT News correspondent

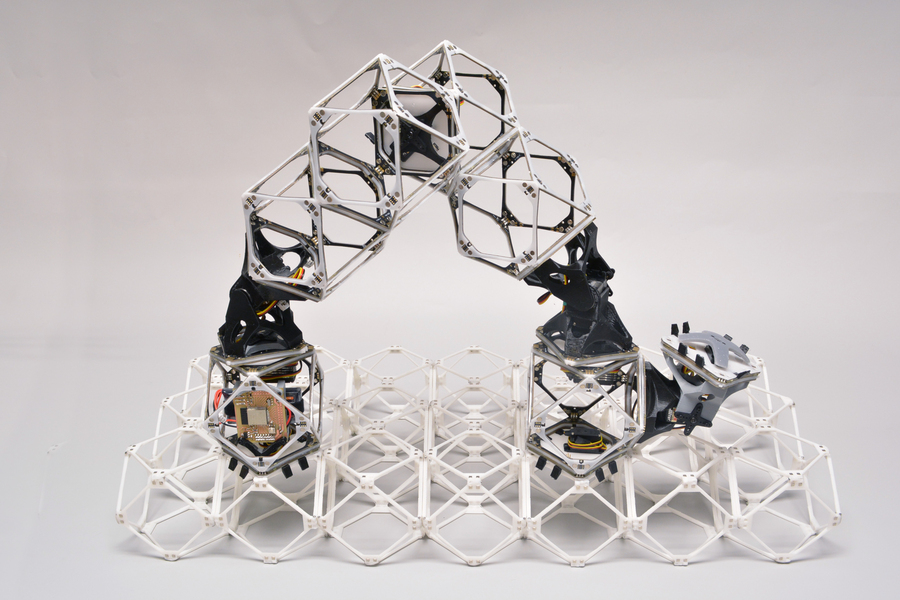

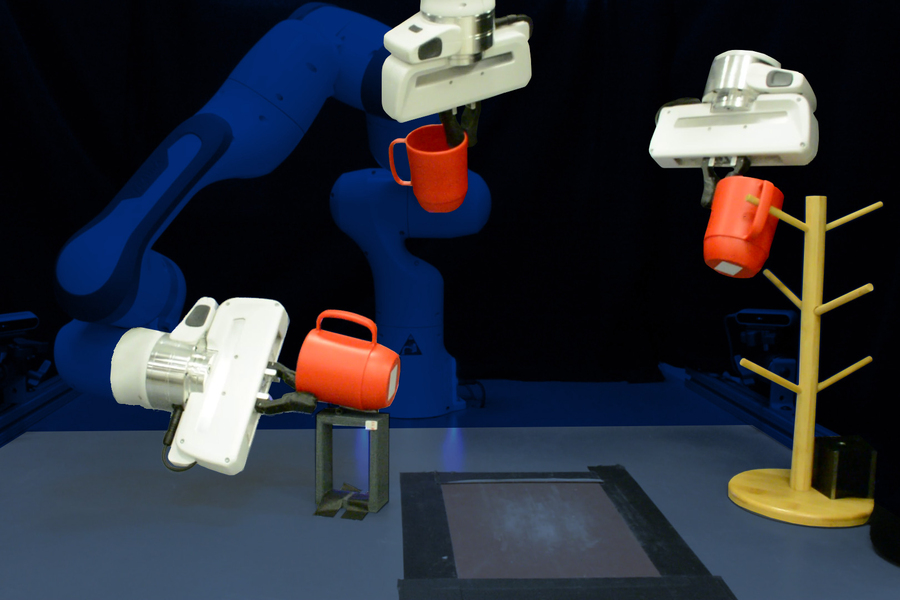

Austen Roberson’s favorite class at MIT is 2.S007 (Design and Manufacturing I-Autonomous Machines), in which students design, build, and program a fully autonomous robot to accomplish tasks laid out on a themed game board.

“The best thing about that class is everyone had a different idea,” says Roberson. “We all had the same game board and the same instructions given to us, but the robots that came out of people’s minds were so different.”

The game board was Mars-themed, with a model shuttle that could be lifted to score points. Roberson’s robot, nicknamed Tank Evans after a character from the movie “Surf’s Up,” used a clever strategy to accomplish this task. Instead of spinning the gears that would raise the entire mechanism, Roberson realized a claw gripper could wrap around the outside of the shuttle and lift it directly.

“That wasn’t the intended way,” says Roberson, but his outside-the-box strategy ended up winning him the competition at the conclusion of the class, which was part of the New Engineering Education Transformation (NEET) program. “It was a really great class for me. I get a lot of gratification out of building something with my hands and then using my programming and problem-solving skills to make it move.”

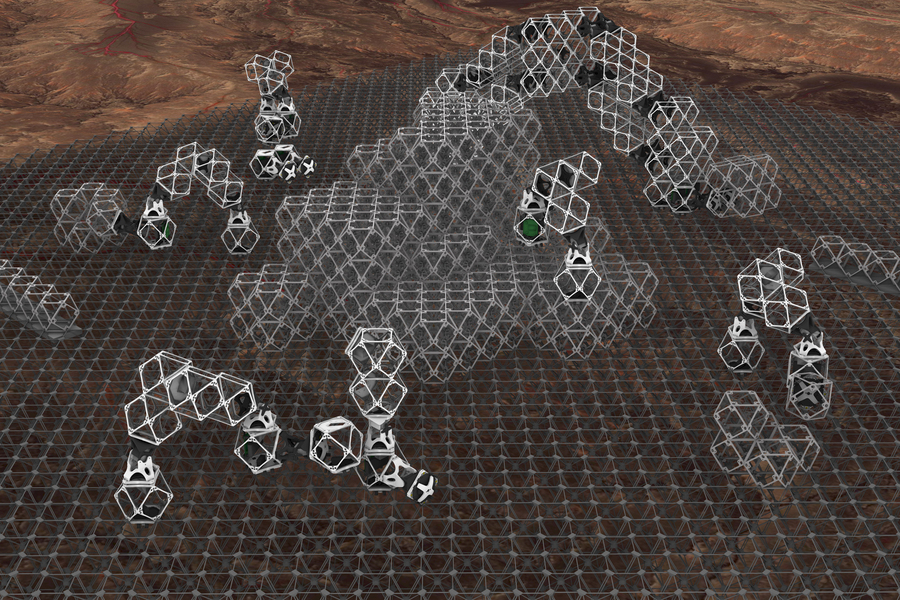

Roberson, a senior, is majoring in aerospace engineering with a minor in computer science. As his winning robot demonstrates, he thrives at the intersection of both fields. He references the Mars Curiosity Rover as the type of project that inspires him; he even keeps a Lego model of Curiosity on his desk.

“You really have to trust that the hardware you’ve made is up to the task, but you also have to trust your software equally as much,” says Roberson, referring to the challenges of operating a rover from millions of miles away. “Is the robot going to continue to function after we’ve put it into space? Both of those things have to come together in such a perfect way to make this stuff work.”

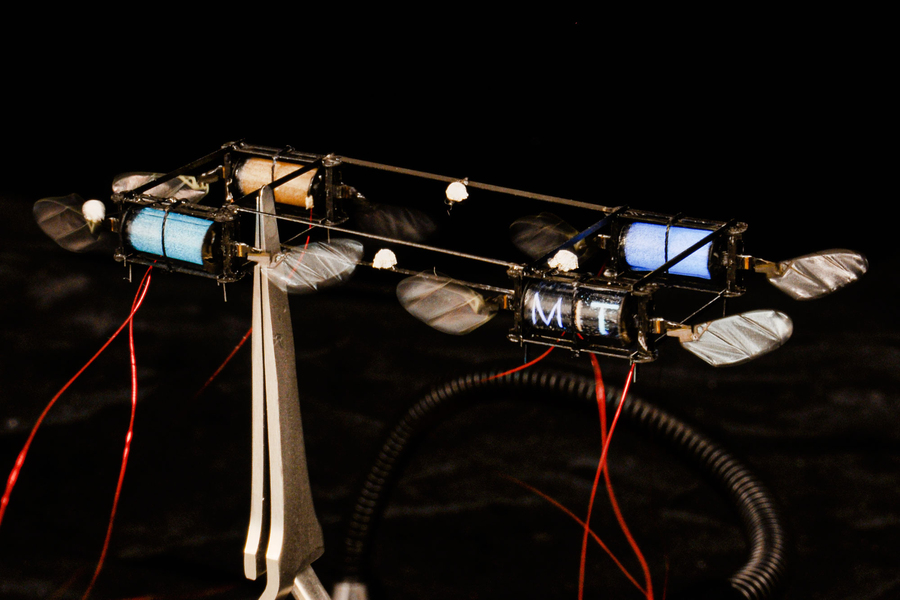

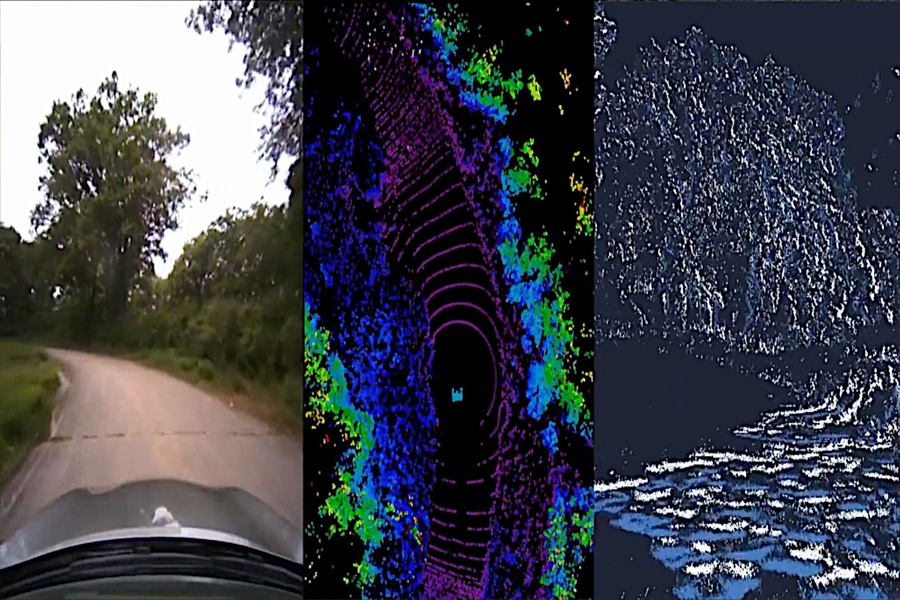

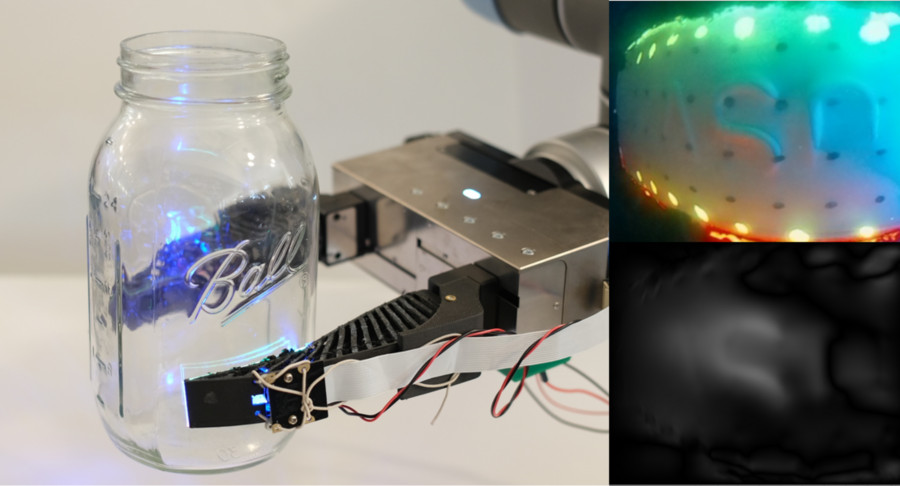

Outside of formal classwork, Roberson has pursued multiple research opportunities at MIT that blend his academic interests. He’s worked on satellite situational awareness with the Space Systems Laboratory, tested drone flight in different environments with the Aerospace Controls Laboratory, and is currently working on zero-shot machine learning for anomaly detection in big datasets with the Mechatronics Research Laboratory.

“Whether that be space exploration or something else, all I can hope for is that I’m making an impact, and that I’m making a difference in people’s lives,” says Roberson. Photo: Jodi Hilton

Even while tackling these challenging technical problems head-on, Roberson is also actively thinking about the social impact of his work. He takes classes in the Program on Science, Technology, and Society, which has taught him not only how societal change throughout history has been driven by technological advancements, but also how to be a thoughtful engineer in his own career.

“Learning about the social implications of the technology you’re working on is really important,” says Roberson, acknowledging that his work in automation and machine learning needs to address these questions. “Sometimes, we get caught up in technology for technology’s sake. How can we take these same concepts and bring them to people to help in a tangible, physical way? How have we come together as a scientific community to really effect social change, and what can we do in the future to continue effecting that social change?”

Roberson is already working through what these questions mean for him personally. He’s been a member of the National Society of Black Engineers (NSBE) throughout his entire college experience, which includes serving on the executive board for two years. He’s helped to organize workshops focused on everything from interview preparation to financial literacy, as well as social events to build community among members.

“The mission of the organization is to increase the number of culturally responsible Black engineers that excel academically, succeed professionally, and positively impact the community,” says Roberson. “My goal with NSBE was to be able to provide a resource to help everybody get to where they wanted to be, to be the vehicle to really push people to be their best, and to provide the resources that people needed and wanted to advance themselves professionally.”

In fact, one of his most memorable MIT experiences is the first conference he attended as a member of NSBE.

“Being able to see all these different people from all of these different schools come together as a family and just talk to each other, it’s a very rewarding experience,” Roberson says. “It’s important to be able to surround yourself with people who have similar professional goals and share similar backgrounds and experiences with you. It’s definitely the proudest I’ve been of any club at MIT.”

Looking toward his own career, Roberson wants to find a way to work on fast-paced, cutting-edge technologies that move society forward in a positive way.

“Whether that be space exploration or something else, all I can hope for is that I’m making an impact, and that I’m making a difference in people’s lives,” says Roberson. “I think learning about space is learning about ourselves as well. The more you can learn about the stuff that’s out there, you can take those lessons to reflect on what’s down here as well.”