Photo by Will Langford

By David L. Chandler

Years ago, MIT Professor Neil Gershenfeld had an audacious thought. Struck by the fact that all the world’s living things are built out of combinations of just 20 amino acids, he wondered: Might it be possible to create a kit of just 20 fundamental parts that could be used to assemble all of the different technological products in the world?

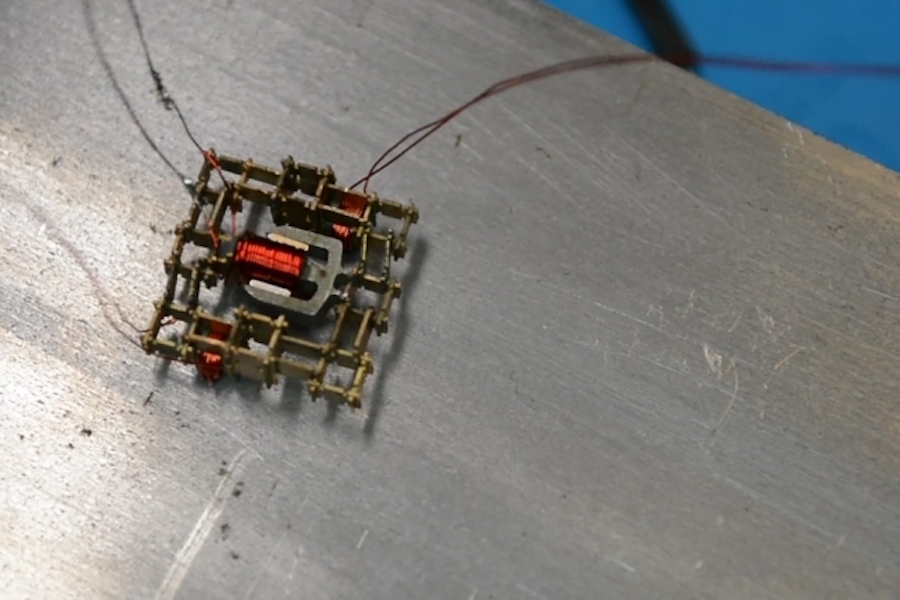

Gershenfeld and his students have been making steady progress in that direction ever since. Their latest achievement, presented this week at an international robotics conference, consists of a set of five tiny fundamental parts that can be assembled into a wide variety of functional devices, including a tiny “walking” motor that can move back and forth across a surface or turn the gears of a machine.

Previously, Gershenfeld and his students showed that structures assembled from many small, identical subunits can have numerous mechanical properties. Next, they demonstrated that a combination of rigid and flexible part types can be used to create morphing airplane wings, a longstanding goal in aerospace engineering. Their latest work adds components for movement and logic, and will be presented at the International Conference on Manipulation, Automation and Robotics at Small Scales (MARSS) in Helsinki, Finland, in a paper by Gershenfeld and MIT graduate student Will Langford.

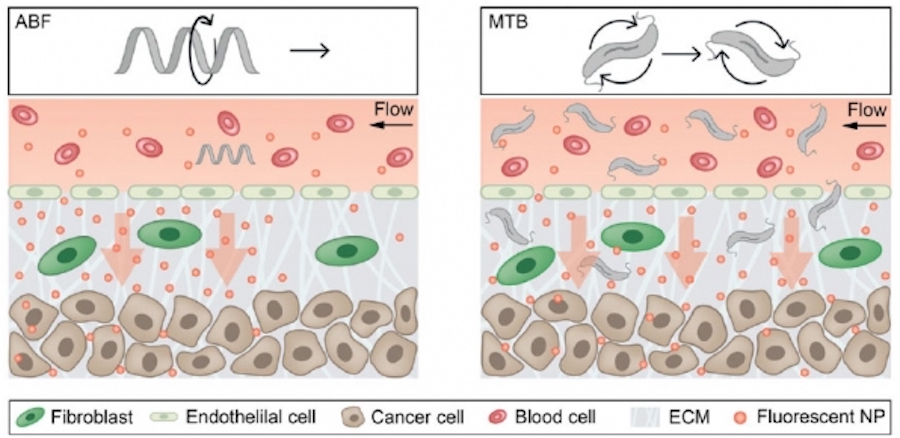

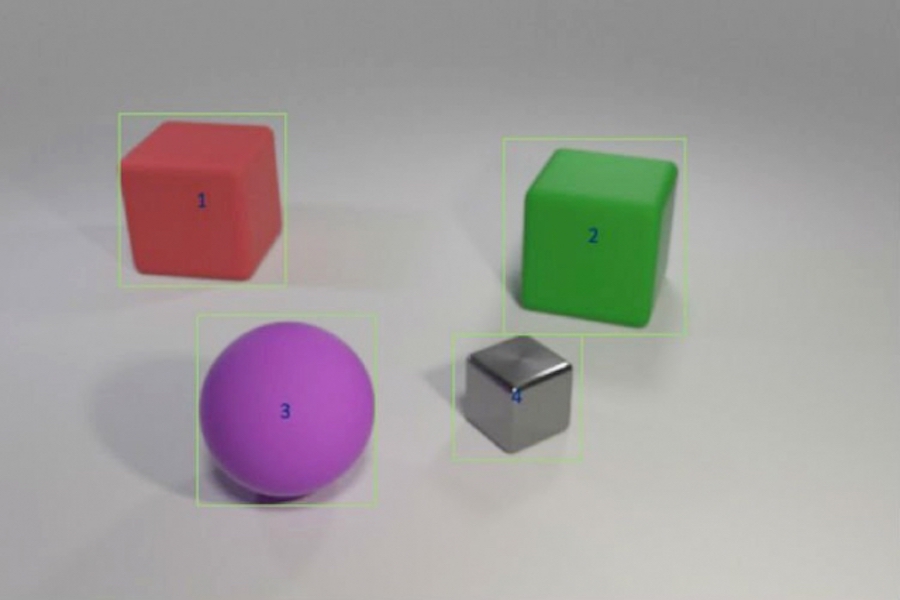

Their work offers an alternative to today’s approaches to constructing robots, which largely fall into one of two types: custom machines that work well but are relatively expensive and inflexible, and reconfigurable ones that sacrifice performance for versatility. In the new approach, Langford came up with a set of five millimeter-scale components, all of which can be attached to each other by a standard connector. These parts include the previous rigid and flexible types, along with electromagnetic parts, a coil, and a magnet. In the future, the team plans to make these out of still smaller basic part types.

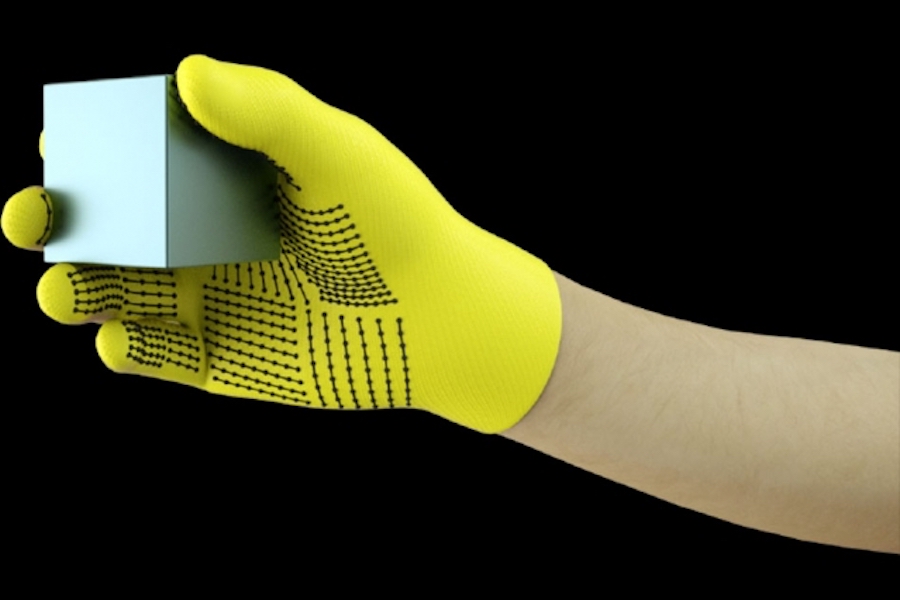

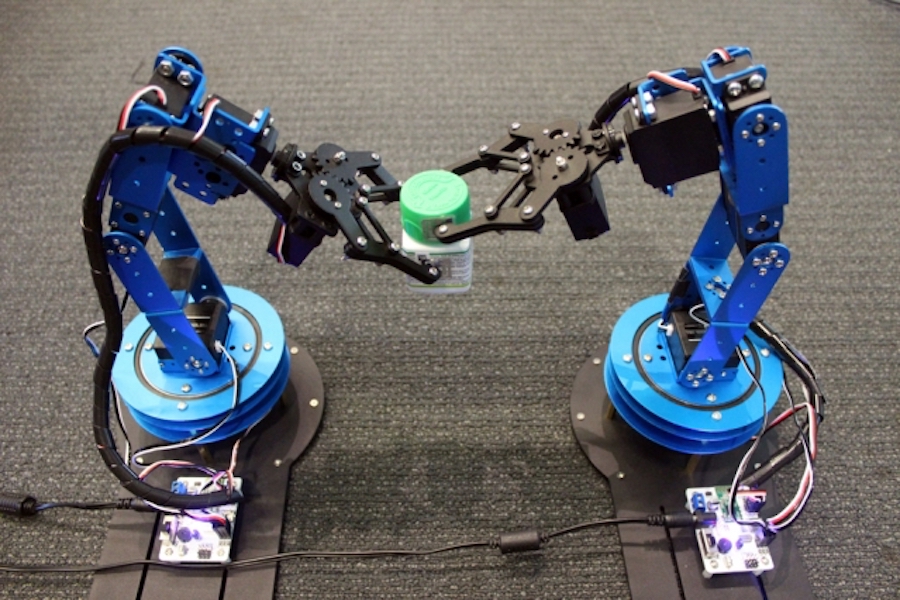

Using this simple kit of tiny parts, Langford assembled a novel kind of motor that moves an appendage in discrete mechanical steps, which can be used to turn a gear wheel. He also built a mobile form of the motor that turns those steps into locomotion, allowing it to “walk” across a surface in a way that is reminiscent of the molecular motors that move muscles. These parts could also be assembled into hands for gripping, or legs for walking, as needed for a particular task, and then later reassembled as those needs change. Gershenfeld refers to them as “digital materials,” discrete parts that can be reversibly joined, forming a kind of functional micro-LEGO.

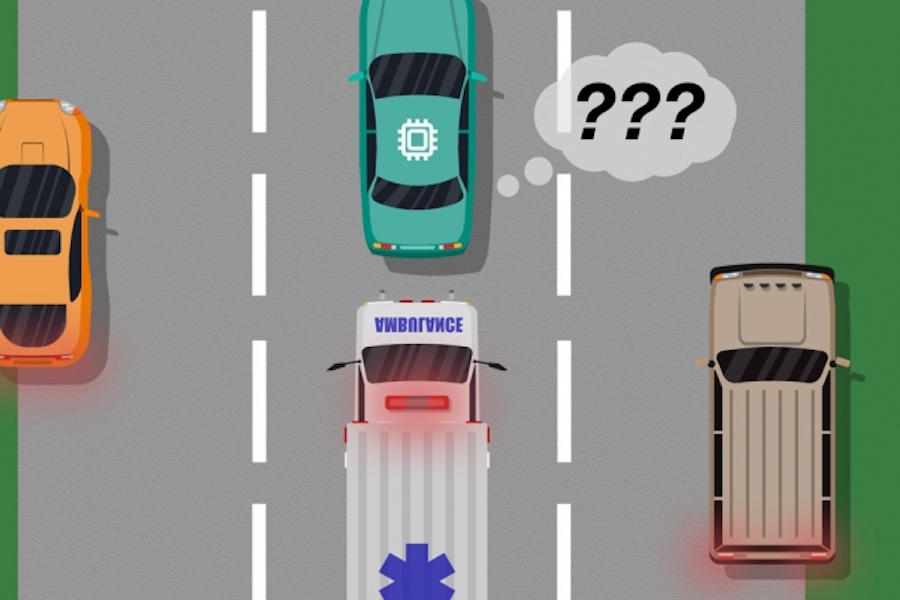

The new system is a significant step toward creating a standardized kit of parts that could be used to assemble robots with specific capabilities adapted to a particular task or set of tasks. Such purpose-built robots could then be disassembled and reassembled as needed in a variety of forms, without the need to design and manufacture new robots from scratch for each application.

Langford’s initial motor has an ant-like ability to lift seven times its own weight. But if greater forces are required, many of these parts can be added to provide more oomph. Or if the robot needs to move in more complex ways, these parts could be distributed throughout the structure. The size of the building blocks can be chosen to match their application; the team has made nanometer-sized parts to make nanorobots, and meter-sized parts to make megarobots. Previously, specialized techniques were needed at each of these length scale extremes.

“One emerging application is to make tiny robots that can work in confined spaces,” Gershenfeld says. Some of the devices assembled in this project, for example, are smaller than a penny yet can carry out useful tasks.

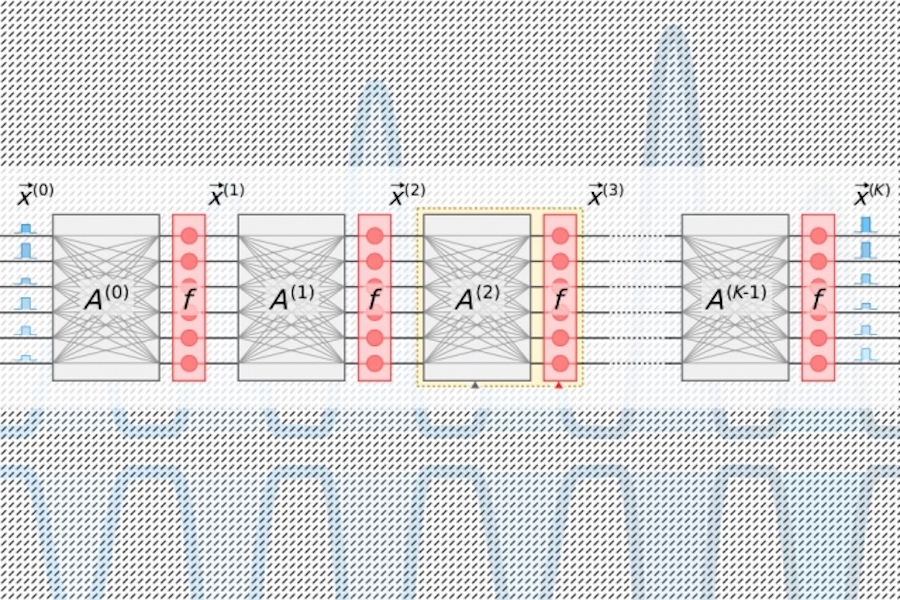

To build in the “brains,” Langford has added part types that contain millimeter-sized integrated circuits, along with a few other part types to take care of connecting electrical signals in three dimensions.

The simplicity and regularity of these structures make it relatively easy for their assembly to be automated. To do that, Langford has developed a novel machine that’s like a cross between a 3-D printer and the pick-and-place machines that manufacture electronic circuits, but unlike either of those, this one can produce complete robotic systems directly from digital designs. Gershenfeld says this machine is a first step toward the project’s ultimate goal of “making an assembler that can assemble itself out of the parts that it’s assembling.”

