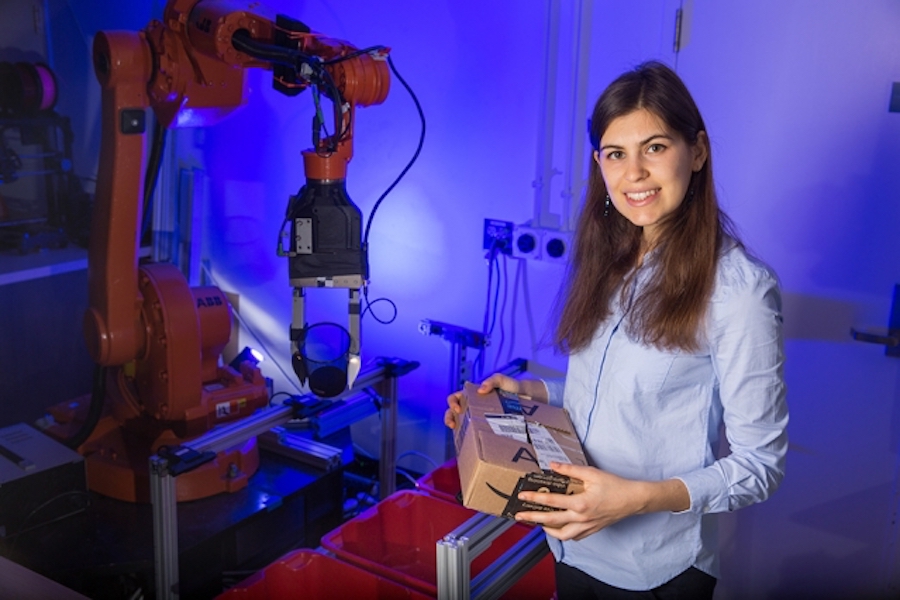

Photo: Joseph DelPreto/MIT CSAIL

Getting robots to do things isn’t easy: Usually, scientists have to either explicitly program them or get them to understand how humans communicate via language.

But what if we could control robots more intuitively, using just hand gestures and brainwaves?

A new system spearheaded by researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) aims to do exactly that, allowing users to instantly correct robot mistakes with nothing more than brain signals and the flick of a finger.

Building on the team’s past work, which focused on simple binary-choice activities, the new work expands the scope to multiple-choice tasks, opening up new possibilities for how human workers could manage teams of robots.

By monitoring brain activity, the system can detect in real time whether a person notices an error as a robot performs a task. Using an interface that measures muscle activity, the person can then make hand gestures to scroll through the options and select the correct one for the robot to execute.
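To make the scroll-and-select idea concrete, here is a minimal sketch in Python. It is an illustration only, not the team's implementation: the gesture labels (`"left"`, `"right"`, `"confirm"`), the target names, and the wrap-around scrolling are all assumptions made for the example.

```python
from typing import Iterable, Sequence


def select_target(targets: Sequence[str], gestures: Iterable[str], start: int = 0) -> str:
    """Scroll through `targets` with gestures and return the highlighted one.

    Hypothetical gesture labels: "left" and "right" move the highlight,
    "confirm" ends the selection. Unknown gestures are ignored.
    """
    index = start
    for gesture in gestures:
        if gesture == "left":
            index = (index - 1) % len(targets)  # wrap-around is an assumption
        elif gesture == "right":
            index = (index + 1) % len(targets)
        elif gesture == "confirm":
            break
    return targets[index]


# Three candidate targets, as in the drill task described in the article.
targets = ["target_a", "target_b", "target_c"]
chosen = select_target(targets, ["right", "right", "confirm"])
```

In this sketch, two "right" gestures followed by "confirm" move the highlight from the first target to the third.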

The team demonstrated the system on a task in which a robot moves a power drill to one of three possible targets on the body of a mock plane. Importantly, they showed that the system works on people it’s never seen before, meaning that organizations could deploy it in real-world settings without needing to train it on users.

“This work combining EEG and EMG feedback enables natural human-robot interactions for a broader set of applications than we’ve been able to do before using only EEG feedback,” says CSAIL Director Daniela Rus, who supervised the work. “By including muscle feedback, we can use gestures to command the robot spatially, with much more nuance and specificity.”

PhD candidate Joseph DelPreto was lead author on a paper about the project alongside Rus, former CSAIL postdoc Andres F. Salazar-Gomez, former CSAIL research scientist Stephanie Gil, research scholar Ramin M. Hasani, and Boston University Professor Frank H. Guenther. The paper will be presented at the Robotics: Science and Systems (RSS) conference taking place in Pittsburgh next week.

In most previous work, systems could generally only recognize brain signals when people trained themselves to “think” in very specific but arbitrary ways and when the system was trained on such signals. For instance, a human operator might have to look at different light displays that correspond to different robot tasks during a training session.

Not surprisingly, such approaches are difficult for people to handle reliably, especially if they work in fields like construction or navigation that already require intense concentration.

In contrast, Rus’ team relied on brain signals called “error-related potentials” (ErrPs), which researchers have found occur naturally when people notice mistakes. If the system detects an ErrP, it stops so the user can correct the robot; if not, it carries on.
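The stop-or-carry-on logic can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the function name, the idea of a single classifier score, and the 0.5 threshold are hypothetical and do not come from the team's paper.

```python
from typing import Callable


def supervise_trial(
    robot_choice: str,
    errp_score: float,
    ask_user: Callable[[str], str],
    threshold: float = 0.5,  # hypothetical decision threshold
) -> str:
    """Return the target the robot should act on for one trial.

    `errp_score` stands in for the output of an ErrP detector (assumed
    here to be a number between 0 and 1). If it crosses the threshold,
    the robot stops and the user supplies a correction; otherwise the
    robot carries on with its original choice.
    """
    if errp_score >= threshold:
        return ask_user(robot_choice)
    return robot_choice


# Example: the user always redirects the robot to "target_b".
final_with_error = supervise_trial("target_a", 0.9, lambda choice: "target_b")
final_without_error = supervise_trial("target_a", 0.1, lambda choice: "target_b")
```

Here the correction step is passed in as a callback, so the same loop would work whether the correction comes from gestures or from any other input.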

“What’s great about this approach is that there’s no need to train users to think in a prescribed way,” says DelPreto. “The machine adapts to you, and not the other way around.”

For the project, the team used “Baxter,” a humanoid robot from Rethink Robotics. With human supervision, the robot went from choosing the correct target 70 percent of the time to more than 97 percent of the time.

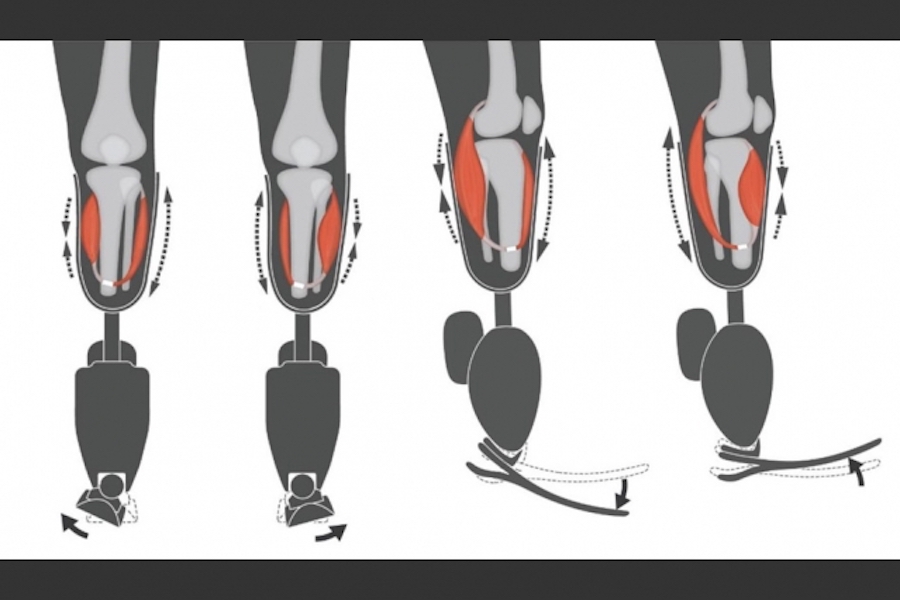

To create the system, the team used electroencephalography (EEG) to monitor brain activity and electromyography (EMG) to monitor muscle activity, placing a series of electrodes on the users’ scalp and forearm.

Each signal has its own shortcomings: EEG signals are not always reliably detectable, while EMG signals can sometimes be difficult to map to motions that are any more specific than “move left or right.” Merging the two, however, allows for more robust bio-sensing and makes it possible for the system to work on new users without training.

“By looking at both muscle and brain signals, we can start to pick up on a person’s natural gestures along with their snap decisions about whether something is going wrong,” says DelPreto. “This helps make communicating with a robot more like communicating with another person.”

The team says that they could imagine the system one day being useful for the elderly, or workers with language disorders or limited mobility.

“We’d like to move away from a world where people have to adapt to the constraints of machines,” says Rus. “Approaches like this show that it’s very much possible to develop robotic systems that are a more natural and intuitive extension of us.”