Robot Talk Episode 147 – Miniature living robots, with Maria Guix

Claire chatted to Maria Guix from the University of Barcelona about combining electronics and biology to create biohybrid robots with emergent properties.

Maria Guix is a chemist and nanotechnology researcher in the University of Barcelona’s ChemInFlow lab, developing miniaturised living robots and integrating flexible sensors into microfluidic platforms to better understand biohybrid robotic platforms. Her PhD research at the Autonomous University of Barcelona focussed on nanomaterials for biosensing. She has held postdoctoral positions at IFW Dresden, Purdue University, and the Institute for Bioengineering of Catalonia, advancing biocompatible micromotors, magnetic microrobot automation, and functional living robots.

Broadcom Inc. (AVGO) — AI Equity Research Update | March 2026

This analysis was produced by an AI financial research system. All data is sourced exclusively from publicly available filings, earnings transcripts, government data, and free financial aggregators — no proprietary data, paid research, or institutional tools are used. Every figure cited can be independently verified by the reader using the sources listed at the end...

The post Broadcom Inc. (AVGO) — AI Equity Research Update | March 2026 appeared first on 1redDrop.

Researchers are combining drones and AI to make removing land mines faster and safer

Graphene-based ‘artificial skin’ brings human-like touch closer to robots

Reachy 2: The Open-Source Humanoid Robot Redefining Human-Machine Interaction

Humanoid robots master parkour and acquire human-like agility

Developing an optical tactile sensor for tracking head motion during radiotherapy: an interview with Bhoomika Gandhi

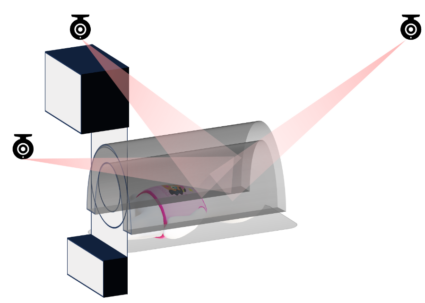

Illustration of the radiotherapy room and the occlusion problem faced by ceiling-mounted cameras in this application.

What was the topic of your PhD research and why was it an interesting area?

My topic of research was developing an optical tactile sensor to track head motion during radiotherapy. I worked on both the hardware and software development of this sensor, though my focus was mostly on the software side. Its importance comes from the fact that during radiotherapy, patients undergoing head and neck cancer treatment are typically immobilised. This is usually done using a thermoplastic mask, which can feel very claustrophobic, or a stereotactic frame. Frames are more common for brain cancers, but they have to be surgically inserted into the patient’s skull using pins. Either of these immobilisation tools may be used depending on the situation. When patients are uncomfortable, they are more likely to move, which affects the accuracy of treatment, especially with thermoplastic masks.

Another major issue is that current systems use ceiling-mounted cameras to record patient motion. These cameras cannot be placed too close to the patient because of the electromagnetic environment around the equipment. Their view is also frequently occluded because the patient moves into a tunnel to receive the ionising beams, which makes it difficult to capture rotational motion.

One alternative is an infrared camera with a nose marker, but this only captures translational motion. Currently, when a nose tracker detects movement beyond a certain threshold, treatment is paused, the patient is repositioned, and treatment resumes. It is difficult to adapt this system to reliably measure the rotational motion of the patient’s head in the radiotherapy environment.
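The pause-and-reposition rule described above can be sketched as a simple threshold check. This is only an illustration: the interview does not state the threshold, the units, or how the offset is measured, so the 1.0 mm value, the 3D offset tuples, and the function names here are all hypothetical.

```python
import math


def exceeds_threshold(offset_mm, threshold_mm):
    """Return True when the magnitude of a translational offset exceeds the threshold."""
    magnitude = math.sqrt(sum(component ** 2 for component in offset_mm))
    return magnitude > threshold_mm


def monitor(offsets_mm, threshold_mm):
    """Label each sampled nose-marker offset as 'treat' or 'pause'."""
    return [
        "pause" if exceeds_threshold(offset, threshold_mm) else "treat"
        for offset in offsets_mm
    ]


# Hypothetical (x, y, z) offsets in millimetres and a hypothetical 1.0 mm threshold.
samples = [(0.2, 0.1, 0.0), (1.5, 1.0, 0.5), (0.3, 0.0, 0.1)]
decisions = monitor(samples, threshold_mm=1.0)
```

Note that a check like this only sees translation, which is the limitation the interview points out: a head rotation that leaves the nose marker roughly in place would not trigger a pause.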

This is where the Motion Capture Pillow (MCP), which contains the optical tactile sensor I developed, comes in. Its goal is similar to that of the nose tracker, but it gives the radiographer more accurate rotational feedback. It is placed beneath the patient’s head and attached to the treatment bed, where it estimates how much the patient’s neck is rotating while improving patient comfort. Radiographers can receive real-time feedback on both translational and rotational movement. The advantages of this system are that there are no occlusions, because the pillow is in direct contact with the patient’s head, and that it is more compatible with radiotherapy environments because the sensor is non-ferromagnetic. The premise is to maintain patient comfort and stay compact and easy to integrate into existing radiotherapy systems, whilst improving the accuracy of treatment through real-time head tracking.

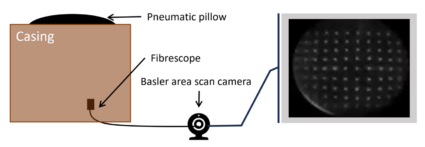

Labelled diagram of the Motion Capture Pillow – Optical tactile sensor for head tracking during radiotherapy. The pneumatic pillow is a deformable rubber-like sheet with embedded white markers, held in its convex shape using air pressure. The fibrescope represents a non-ferromagnetic fibre optic bundle used as a lens extension to an area scan camera. The camera is ferromagnetic and will require safe positioning and fixation.

What were the main contributions of your work?

My work made four main contributions. The first focused on reducing the system’s reliance on ferromagnetic components and improving the imaging and tracking approach. Previous work used a webcam and binary image processing within the optical tactile sensor to track marker displacement. I ultimately decided to use a fibrescope, an optical flow tracking algorithm, and grayscale imaging instead, which improved the sensor’s tracking ability.
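To give a sense of how optical flow can recover marker displacement from grayscale frames, here is a minimal single-window Lucas-Kanade sketch. The interview does not say which optical flow algorithm was used, so Lucas-Kanade is an assumption, as are the synthetic Gaussian "marker", the 21-pixel window, and every function name below.

```python
import math


def gaussian_marker(size, cx, cy, sigma=3.0):
    """Render one bright marker as a grayscale blob with values between 0 and 1."""
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
         for x in range(size)]
        for y in range(size)
    ]


def lucas_kanade_shift(prev, curr):
    """Estimate a single (dx, dy) shift in pixels for the whole window."""
    height, width = len(prev), len(prev[0])
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0  # spatial gradient in x
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0  # spatial gradient in y
            it = curr[y][x] - prev[y][x]                  # change between frames
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("window has too little texture to track")
    # Solve the 2x2 least-squares system of the brightness-constancy equations.
    dx = (-syy * sxt + sxy * syt) / det
    dy = (sxy * sxt - sxx * syt) / det
    return dx, dy


# Synthetic test: the marker moves by (0.5, 0.3) pixels between two frames.
frame_a = gaussian_marker(21, 10.0, 10.0)
frame_b = gaussian_marker(21, 10.5, 10.3)
dx, dy = lucas_kanade_shift(frame_a, frame_b)
```

Because the method works on intensity gradients, it can resolve sub-pixel motion that would be lost once an image is thresholded to binary, which is one plausible reason grayscale imaging and optical flow pair well. A single-step estimate like this is only approximate and only suits small shifts; practical trackers usually iterate and use image pyramids.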

The second contribution focused on optimising marker density. The optical tactile sensor consists of an array of markers on a deformable rubber-like sheet, resembling a pillow. The deformation of these markers is captured by the camera. I investigated how dense the marker array needed to be by adjusting the spacing between markers to determine what worked best for this application.

The third contribution involved sensor fusion to improve reliability and robustness. To do this, I integrated a gyroscope and used Kalman filtering to fuse data from the gyroscope and the MCP. This was important for Gamma Knife systems, which are radiosurgery platforms used for brain cancers. Gamma Knife systems tend to have higher accuracy requirements than linear accelerators, which are commonly used for head and neck cancers, and fewer constraints on the use of ferromagnetic components.
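As a rough illustration of this kind of fusion, the sketch below runs a one-dimensional Kalman filter in which the gyroscope rate drives the prediction and an MCP angle reading corrects it. The interview does not describe the actual state model or noise settings, so the scalar angle state, the variances, the biased-gyroscope scenario, and all names are assumptions chosen for the example.

```python
class AngleKalmanFilter:
    """Scalar Kalman filter tracking one head rotation angle in degrees."""

    def __init__(self, angle=0.0, variance=1.0,
                 process_var=0.01, measurement_var=0.25):
        self.angle = angle
        self.variance = variance
        self.process_var = process_var          # assumed gyro integration noise
        self.measurement_var = measurement_var  # assumed MCP measurement noise

    def predict(self, gyro_rate, dt):
        """Integrate the gyroscope rate and grow the uncertainty."""
        self.angle += gyro_rate * dt
        self.variance += self.process_var

    def update(self, mcp_angle):
        """Blend in an MCP angle reading, weighted by the Kalman gain."""
        gain = self.variance / (self.variance + self.measurement_var)
        self.angle += gain * (mcp_angle - self.angle)
        self.variance *= (1.0 - gain)
        return self.angle


def simulate(steps=50, dt=0.1, true_rate=2.0, gyro_bias=0.5):
    """Compare gyro-only integration with the fused estimate."""
    kf = AngleKalmanFilter()
    truth = 0.0
    gyro_only = 0.0
    for _ in range(steps):
        truth += true_rate * dt
        gyro_rate = true_rate + gyro_bias  # hypothetical constant gyro bias
        gyro_only += gyro_rate * dt
        kf.predict(gyro_rate, dt)
        kf.update(truth)  # noise-free MCP reading keeps the demo deterministic
    return truth, gyro_only, kf.angle


truth, gyro_only, fused = simulate()
```

In this toy scenario the gyro-only estimate drifts steadily because of its bias, while the fused estimate stays close to the true angle. That is the general motivation for fusing a fast but drifting sensor with a slower absolute one.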

The final contribution was a participatory design study conducted in collaboration with clinicians and the social sciences department. We explored how the MCP could be integrated into hospital workflows and assessed its feasibility.

How feasible is it to integrate this sensor into hospital workflows?

Clinicians did seem to be very on board with it, but the study was more qualitative than quantitative. While they felt the idea had merit, there were reservations about adopting new technology and the associated learning curve.

They were also concerned about accuracy. Improving accuracy and reliability is essential for clinicians to feel confident using the system. At present, further development is needed before it can be widely implemented.

What future work is planned in this area?

One area to investigate is the difference between the mannequin and participant data. The pillow shape is controlled by a pneumatic system with a pressure sensor and air pump. When the patient or mannequin moves, pressure changes occur. The system compensates to maintain a set pressure, but this introduces errors in the motion readings. The mannequin data contained more errors than the participant data. The mannequin may not accurately simulate human motion on the pillow, and the testing setup may introduce discrepancies that do not reflect real-world behaviour.

So, future work includes stabilising and refining the pressure control system to improve reliability. If necessary, reconsidering the use of gel on the sensors could be an option. Gel had been used previously but was abandoned due to clinician concerns about attenuation of ionising beams. However, if avoiding gel significantly compromises sensor performance, revisiting this approach may be worthwhile.

In addition, more participant data collection is needed. Not all previously collected data could be used due to ground-truth measurements being partially occluded in the experimental setup. Additional participant studies would provide a clearer understanding of performance across different individuals. Another priority is improving the fibrescope’s resolution and angle to better visualise high-density marker arrays. Hardware upgrades would help ensure a clearer field of view and improve overall system performance.

About Bhoomika

Bhoomika Gandhi is a recent PhD graduate from the University of Sheffield Medical Robotics group. Her undergraduate degree was in Bioengineering – Medical Devices and Instruments, with control engineering and robotics being the key themes.

Bio-inspired methods help guide coordination in underwater robot swarms

Will AI drones, robots and wearable sensors revolutionize workplace safety?

A simple hand photo may be the key to detecting a serious disease

Broadcom Inc. (AVGO) — AI Equity Research | March 2026

This analysis was produced by an AI financial research system. All data is sourced exclusively from publicly available filings, earnings transcripts, government data, and free financial aggregators — no proprietary data, paid research, or institutional tools are used. Every figure cited can be independently verified by the reader using the sources listed at the end...

The post Broadcom Inc. (AVGO) — AI Equity Research | March 2026 appeared first on 1redDrop.

Why the Hybrid SOC Is Your Next Use of AI

Human-only SOCs are unsustainable, but AI-only SOCs are still well out of reach of current technology. The industry has answered by increasingly adopting hybrid approaches. Today, hybrid SOCs are the method of choice for teams looking to leverage the capabilities […]

The post Why the Hybrid SOC Is Your Next Use of AI appeared first on TechSpective.
