Smarting from the wild popularity of NanoBanana – the new image maker from Google – ChatGPT’s maker has released a major upgrade of its own.

The verdict from AI enthusiast Grant Harvey, lead writer for The Neuron newsletter: OpenAI has grabbed back the picture-making crown.

OpenAI’s tool is once again the best overall AI image editor/generator on the market.

For Harvey’s shoot-out between NanoBanana and OpenAI’s GPT Image 1.5, check out this excellent once-over.

In other news and analysis on AI writing:

*AI Earns Dubious Distinction for the ‘Word of the Year’: AI ‘slop’ – a label for the torrent of substandard content that is sometimes auto-generated by AI – is now the Word of the Year.

Observes writer Lucas Ropek: “These new tools have even led to what has been dubbed a ‘slop economy,’ in which gluts of AI-generated content can be milked for advertising money.”

Presenters of the award: Publishers of the Merriam-Webster Dictionary.

*Google Gemini Adds a Key AI Research Tool: Google is currently integrating a key research tool into its Gemini chatbot. The tool collates up to 50 PDFs or other research docs for you – and then unleashes AI on them to help you analyze everything.

Dubbed NotebookLM, the tool has been extremely popular with researchers and other thinkers – and will be even more useful once its integration with the Gemini chatbot is fully rolled out.

Observes writer Alexey Shabanov: “The update supports multiple notebook attachments, making it possible to bring substantial datasets into Gemini.”

*AI Fables for Kids – Complete With Values: Neo-Aesop has released a new AI app designed to create hyper-personalized Aesop-like fables for kids.

Using the app, users can choose their own characters, settings and virtues for each story. In the process, the child reader and his or her favorite animals can become the heroes of each tale.

Observes Lindsay Hiebert, founder, Neo-Aesop: “There are no ads, no doom-scrolling and no engagement traps. Just stories that invite real conversation between a parent and a child.”

*Star in Your Own AI-Generated Fiction: Ever wish you could auto-generate fiction that features you and your friends as the main characters?

Vivibook has you covered.

Designed as the AI platform for people who want to be the story, Vivibook takes care of the narrative, the story arc, the chapter breakdowns and the plot twists – as well as the psychological evolution of the characters.

*Major Keyword Generator Integrates Seamlessly With ChatGPT: Writers who spend a great deal of time making sure their content ranks high in search engine results (search engine optimization, or SEO) just got a big break.

Semrush – a market leader in helping writers generate content keywords designed to attract the search engines – has been fully integrated into ChatGPT.

The integration enables users to access live Semrush data and intelligence without ever needing to leave the ChatGPT interface.

*Turnkey AI Marketing for Small Businesses – At Your Service: Small businesses looking for an all-in-one solution for AI-driven marketing may want to check out PoshListings.

It’s a turnkey system that offers:

–Web site analysis, along with strategies for improvement

–AI content for articles, ads and social posts

–Multi-channel publishing to Google, social media and local directories

–Automated email and SMS promotion

–Predictive AI analytics

*Daily Summaries of Your Gmail and Calendar – Courtesy of AI: Google is out with a new AI tool – dubbed CC – that serves up daily summaries of everything that pops up in your Gmail and Google Calendar.

Observes writer Lance Whitney: “By connecting to your Gmail and Google Calendar content, CC can see what awaits you in your inbox and calendar.

“The tool then boils it all down into a game plan for you to follow for the day.”

*Copilot’s Latest Upgrade: A Video Tour: Key ChatGPT competitor Microsoft Copilot is packing more of a punch these days and sporting a host of new features, including:

–Deep, day-to-day knowledge of who you are, what you do and what your company, team or group does

–Voice summaries of your upcoming workday

–Voice-driven content creation

–Voice-driven email creation

–Agent-driven Web research, in the background

–Integration with Word, Excel and PowerPoint AI agents

–Written financial reports auto-generated from Excel

–Auto-generated, written reports sourced from other Microsoft apps

The video is an extremely helpful walk-through from The Neuron’s editor, Corey Noles, featuring Callie August, director, Microsoft 365 Copilot.

*AI BIG PICTURE: Free AI from China Keeps U.S. Tech Titans on Their Toes: While still holding a slim lead, major AI players like ChatGPT, Gemini and Claude are feeling ‘nearly as good’ – and free – AI alternatives from China nipping at their heels.

Key Chinese players like DeepSeek and Qwen, for example, are within chomping distance of the U.S. market leaders – and are open source, or freely available for download and tinkering.

One caveat: Researchers have found code embedded in some Chinese AI software that can be activated to forward your data to the Chinese Communist Party.

Share a Link: Please consider sharing a link to https://RobotWritersAI.com from your blog, social media post, publication or emails. More links leading to RobotWritersAI.com help everyone interested in AI-generated writing.

–Joe Dysart is editor of RobotWritersAI.com and a tech journalist with 20+ years of experience. His work has appeared in 150+ publications, including The New York Times and the Financial Times of London.

The post ChatGPT’s New AI Image Maker: Number One appeared first on Robot Writers AI.

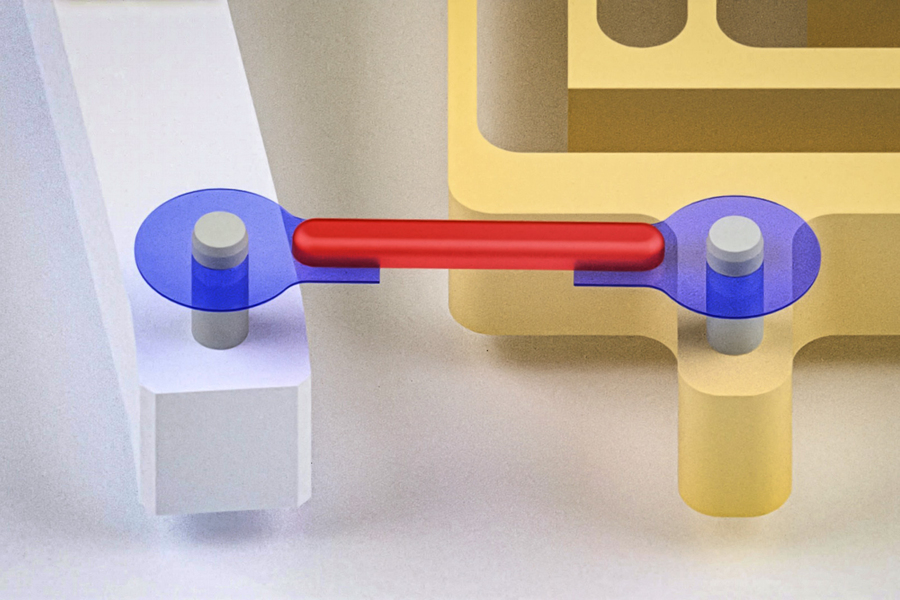

Researchers have developed artificial tendons for muscle-powered robots. They attached the rubber-band-like tendons (blue) to either end of a small piece of lab-grown muscle (red), forming a “muscle-tendon unit.” Credit: Courtesy of the researchers; edited by MIT News.