By Lindsay Brownell

Most people associate the term “wearable” with a fitness tracker, smartwatch, or wireless earbuds. But what if cutting-edge biotechnology were integrated into your clothing, and could warn you when you were exposed to something dangerous?

A team of researchers from the Wyss Institute for Biologically Inspired Engineering at Harvard University and the Massachusetts Institute of Technology has found a way to embed synthetic biology reactions into fabrics, creating wearable biosensors that can be customized to detect pathogens and toxins and alert the wearer.

The team has integrated this technology into standard face masks to detect the presence of the SARS-CoV-2 virus in a patient’s breath. The button-activated mask gives results within 90 minutes at levels of accuracy comparable to standard nucleic acid-based diagnostic tests like polymerase chain reactions (PCR). The achievement is reported in Nature Biotechnology.

“We have essentially shrunk an entire diagnostic laboratory down into a small, synthetic biology-based sensor that works with any face mask, and combines the high accuracy of PCR tests with the speed and low cost of antigen tests,” said co-first author Peter Nguyen, Ph.D., a Research Scientist at the Wyss Institute. “In addition to face masks, our programmable biosensors can be integrated into other garments to provide on-the-go detection of dangerous substances including viruses, bacteria, toxins, and chemical agents.”

Taking cells out of the equation

The SARS-CoV-2 biosensor is the culmination of three years of work on what the team calls their wearable freeze-dried cell-free (wFDCF) technology, which is built upon earlier iterations created in the lab of Wyss Core Faculty member and senior author Jim Collins. The technique involves extracting and freeze-drying the molecular machinery that cells use to read DNA and produce RNA and proteins. These biological elements are shelf-stable for long periods of time and activating them is simple: just add water. Synthetic genetic circuits can be added to create biosensors that can produce a detectable signal in response of the presence of a target molecule.

The researchers first applied this technology to diagnostics by integrating it into a tool to address the Zika virus outbreak in 2015. They created biosensors that can detect pathogen-derived RNA molecules and coupled them with a colored or fluorescent indicator protein, then embedded the genetic circuit into paper to create a cheap, accurate, portable diagnostic. Following their success embedding their biosensors into paper, they next set their sights on making them wearable.

“Other groups have created wearables that can sense biomolecules, but those techniques have all required putting living cells into the wearable itself, as if the user were wearing a tiny aquarium. If that aquarium ever broke, then the engineered bugs could leak out onto the wearer, and nobody likes that idea,” said Nguyen. He and his teammates started investigating whether their wFDCF technology could solve this problem, methodically testing it in more than 100 different kinds of fabrics.

Then, the COVID-19 pandemic struck.

Pivoting from wearables to face masks

“We wanted to contribute to the global effort to fight the virus, and we came up with the idea of integrating wFDCF into face masks to detect SARS-CoV-2. The entire project was done under quarantine or strict social distancing starting in May 2020. We worked hard, sometimes bringing non-biological equipment home and assembling devices manually. It was definitely different from the usual lab infrastructure we’re used to working under, but everything we did has helped us ensure that the sensors would work in real-world pandemic conditions,” said co-first author Luis Soenksen, Ph.D., a Postdoctoral Fellow at the Wyss Institute.

The team called upon every resource they had available to them at the Wyss Institute to create their COVID-19-detecting face masks, including toehold switches developed in Core Faculty member Peng Yin’s lab and SHERLOCK sensors developed in the Collins lab. The final product consists of three different freeze-dried biological reactions that are sequentially activated by the release of water from a reservoir via the single push of a button.

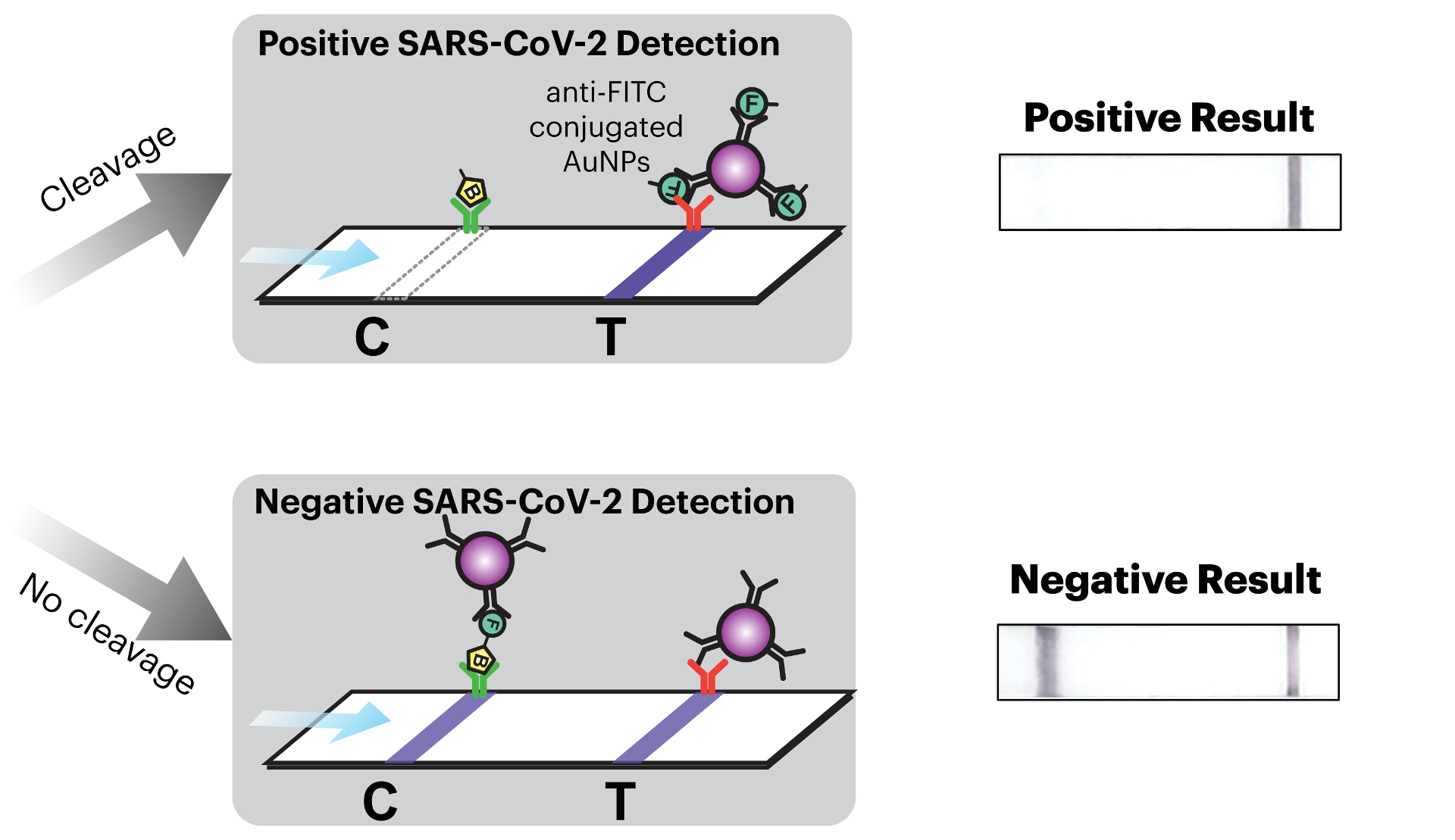

The first reaction cuts open the SARS-CoV-2 virus’ membrane to expose its RNA. The second reaction is an amplification step that makes numerous double-stranded copies of the Spike-coding gene from the viral RNA. The final reaction uses CRISPR-based SHERLOCK technology to detect any Spike gene fragments, and in response cut a probe molecule into two smaller pieces that are then reported via a lateral flow assay strip. Whether or not there are any Spike fragments available to cut depends on whether the patient has SARS-CoV-2 in their breath. This difference is reflected in changes in a simple pattern of lines that appears on the readout portion of the device, similar to an at-home pregnancy test.

The wFDCF face mask is the first SARS-CoV-2 nucleic acid test that achieves high accuracy rates comparable to current gold standard RT-PCR tests while operating fully at room temperature, eliminating the need for heating or cooling instruments and allowing the rapid screening of patient samples outside of labs.

“This work shows that our freeze-dried, cell-free synthetic biology technology can be extended to wearables and harnessed for novel diagnostic applications, including the development of a face mask diagnostic. I am particularly proud of how our team came together during the pandemic to create deployable solutions for addressing some of the world’s testing challenges,” said Collins, Ph.D., who is also the Termeer Professor of Medical Engineering & Science at MIT.

Beyond the COVID-19 pandemic

The Wyss Institute’s wearable freeze-dried cell-free (wFDCF) technology can quickly diagnose COVID-19 from virus in patients’ breath, and can also be integrated into clothing to detect a wide variety of pathogens and other dangerous substances. Credit: Wyss Institute at Harvard University

The face mask diagnostic is in some ways the icing on the cake for the team, which had to overcome numerous challenges in order to make their technology truly wearable, including capturing droplets of a liquid substance within a flexible, unobtrusive device and preventing evaporation. The face mask diagnostic omits electronic components in favor of ease of manufacturing and low cost, but integrating more permanent elements into the system opens up a wide range of other possible applications.

In their paper, the researchers demonstrate that a network of fiber optic cables can be integrated into their wFCDF technology to detect fluorescent light generated by the biological reactions, indicating detection of the target molecule with a high level of accuracy. This digital signal can be sent to a smartphone app that allows the wearer to monitor their exposure to a vast array of substances.

“This technology could be incorporated into lab coats for scientists working with hazardous materials or pathogens, scrubs for doctors and nurses, or the uniforms of first responders and military personnel who could be exposed to dangerous pathogens or toxins, such as nerve gas,” said co-author Nina Donghia, a Staff Scientist at the Wyss Institute.

The team is actively searching for manufacturing partners who are interested in helping to enable the mass production of the face mask diagnostic for use during the COVID-19 pandemic, as well as for detecting other biological and environmental hazards.

“This team’s ingenuity and dedication to creating a useful tool to combat a deadly pandemic while working under unprecedented conditions is impressive in and of itself. But even more impressive is that these wearable biosensors can be applied to a wide variety of health threats beyond SARS-CoV-2, and we at the Wyss Institute are eager to collaborate with commercial manufacturers to realize that potential,” said Don Ingber, M.D., Ph.D., the Wyss Institute’s Founding Director. Ingber is also the Judah Folkman Professor of Vascular Biology at Harvard Medical School and Boston Children’s Hospital, and Professor of Bioengineering at the Harvard John A. Paulson School of Engineering and Applied Sciences.

Additional authors of the paper include Nicolaas M. Angenent-Mari and Helena de Puig from the Wyss Institute and MIT; former Wyss and MIT member Ally Huang who is now at Ampylus; Rose Lee, Shimyn Slomovic, Geoffrey Lansberry, Hani Sallum, Evan Zhao, and James Niemi from the Wyss Institute; and Tommaso Galbersanini from Dreamlux.

This research was supported by the Defense Threat Reduction Agency under grant HDTRA1-14-1-0006, the Paul G. Allen Frontiers Group, the Wyss Institute for Biologically Inspired Engineering, Harvard University, Johnson & Johnson through the J&J Lab Coat of the Future QuickFire Challenge award, CONACyT grant 342369 / 408970, and MIT-692 TATA Center fellowship 2748460.