Call for keynote speakers at the first Reddit Robotics Showcase (RRS2021)

The Reddit r/robotics subreddit is a global online community of 138,000 users, ranging from hobbyists and students to academics and industry professionals. This year, we have invited our community to share their work as part of an online showcase. No matter how big or small, all projects are welcome, and a work in progress is valid. The showcase is as much about people sharing their robotics experiences as their projects, hence this is not a formal conference or symposium.

The showcase is planned for the weekend of July 31st. On the day, successful applicants will join a video call on the official Discord to give a brief presentation (5 or 10 minutes) about their work, followed by a short question and answer session. Presentations will be livestreamed to Robohub’s YouTube channel to allow for larger audience participation, and to create a publication (arXiv) of the showcase, available for everyone. If you would like to find out more about the event, click here.

With that being said, we are looking for potential keynote speakers to give a 20-40 minute, public-friendly presentation, followed by a Q&A. It could be about your own research, or an overview of the research lab or business that you work for.

If you are interested in giving a keynote presentation, please email Olly Smith at olly.smith1994@gmail.com


One robot on Mars is robotics, ten robots are automation

The difference between robotics and automation is almost nonexistent, yet it makes a huge difference in everything from trade shows, marketing and publications to academic conferences and journals. This week, the difference was expressed as an opportunity in the Dear Colleague Letter below from Professor Ken Goldberg, CITRIS CPAR and UC Berkeley, who suggested that students whose papers were rejected from ICRA revise them for CASE, the Conference on Automation Science and Engineering. This opportunity was expressed beautifully in the title quote from Professor Raja Chatila, former President of IEEE Robotics and Automation Society and current President of IEEE Global Society on Ethics of Autonomous and Intelligent Systems: “One robot on Mars is robotics, ten robots on Mars is automation.”

Dear Colleagues,

Over 2000 papers were declined by ICRA today, including many that can be effectively revised for another conference such as IEEE CASE (deadline 15 March).

IEEE CASE, the annual Conference on Automation Science and Engineering, is a major IEEE conference that is one of three fully-supported IEEE conferences in our field (with ICRA and IROS).

In 2021 CASE will be held 23-27 August. It will be hybrid, with a live component in Lyon France and an online component: https://case2021.sciencesconf.

IEEE CASE was founded in 2006 so is smaller but growing quickly. The acceptance rate for the last CASE was about 56%, higher than ICRA 2021 (48%), IROS, or RSS. I consider this a feature not a bug: it is an excellent venue for exploratory and novel projects.

IEEE CASE continues the classic conference model of featuring a 10-15 min oral presentation of each paper in contrast to poster sessions. This is particularly exciting for students, who get the valuable experience of lecturing and fielding questions in front of an audience of peers.

IEEE CASE also has a tradition of spotlighting papers nominated for awards such as Best Paper, Best Student Paper, etc. Each nominated paper is presented in special single session track on Day 1, where everyone at the conference attends and there is a lively Q&A led by judges.

IEEE CASE emphasizes Automation. Automation is very closely related to Robotics. There is substantial overlap, but Automation emphasizes efficiency, robustness, durability, safety, cost effectiveness. Automation also includes topics such as optimization and applications such as transportation and mfg. I like how RAS President Raj Chatila summed up the relationship 10 years ago: “One robot on Mars is robotics, ten robots on Mars is automation.”

In China there are over 100 university departments focused on Automation. The impact factor for the IEEE Transactions on Automation Science and Engineering (T-ASE) this year is on par with T-RO and higher than IJRR. Automation is important to put robotics into practice.

Professor, Industrial Engineering and Operations Research

William S. Floyd Jr. Distinguished Chair in Engineering, UC Berkeley

Director, CITRIS People and Robots Lab


Researchers introduce a new generation of tiny, agile drones

By Daniel Ackerman

If you’ve ever swatted a mosquito away from your face, only to have it return again (and again and again), you know that insects can be remarkably acrobatic and resilient in flight. Those traits help them navigate the aerial world, with all of its wind gusts, obstacles, and general uncertainty. Such traits are also hard to build into flying robots, but MIT Assistant Professor Kevin Yufeng Chen has built a system that approaches insects’ agility.

Chen, a member of the Department of Electrical Engineering and Computer Science and the Research Laboratory of Electronics, has developed insect-sized drones with unprecedented dexterity and resilience. The aerial robots are powered by a new class of soft actuator, which allows them to withstand the physical travails of real-world flight. Chen hopes the robots could one day aid humans by pollinating crops or performing machinery inspections in cramped spaces.

Chen’s work appears this month in the journal IEEE Transactions on Robotics. His co-authors include MIT PhD student Zhijian Ren, Harvard University PhD student Siyi Xu, and City University of Hong Kong roboticist Pakpong Chirarattananon.

Typically, drones require wide open spaces because they’re neither nimble enough to navigate confined spaces nor robust enough to withstand collisions in a crowd. “If we look at most drones today, they’re usually quite big,” says Chen. “Most of their applications involve flying outdoors. The question is: Can you create insect-scale robots that can move around in very complex, cluttered spaces?”

According to Chen, “The challenge of building small aerial robots is immense.” Pint-sized drones require a fundamentally different construction from larger ones. Large drones are usually powered by motors, but motors lose efficiency as you shrink them. So, Chen says, for insect-like robots “you need to look for alternatives.”

The principal alternative until now has been employing a small, rigid actuator built from piezoelectric ceramic materials. While piezoelectric ceramics allowed the first generation of tiny robots to take flight, they’re quite fragile. And that’s a problem when you’re building a robot to mimic an insect — foraging bumblebees endure a collision about once every second.

Chen designed a more resilient tiny drone using soft actuators instead of hard, fragile ones. The soft actuators are made of thin rubber cylinders coated in carbon nanotubes. When voltage is applied to the carbon nanotubes, they produce an electrostatic force that squeezes and elongates the rubber cylinder. Repeated elongation and contraction causes the drone’s wings to beat — fast.

Chen’s actuators can flap nearly 500 times per second, giving the drone insect-like resilience. “You can hit it when it’s flying, and it can recover,” says Chen. “It can also do aggressive maneuvers like somersaults in the air.” And it weighs in at just 0.6 grams, approximately the mass of a large bumble bee. The drone looks a bit like a tiny cassette tape with wings, though Chen is working on a new prototype shaped like a dragonfly.

“Achieving flight with a centimeter-scale robot is always an impressive feat,” says Farrell Helbling, an assistant professor of electrical and computer engineering at Cornell University, who was not involved in the research. “Because of the soft actuators’ inherent compliance, the robot can safely run into obstacles without greatly inhibiting flight. This feature is well-suited for flight in cluttered, dynamic environments and could be very useful for any number of real-world applications.”

Helbling adds that a key step toward those applications will be untethering the robots from a wired power source, which is currently required by the actuators’ high operating voltage. “I’m excited to see how the authors will reduce operating voltage so that they may one day be able to achieve untethered flight in real-world environments.”

Building insect-like robots can provide a window into the biology and physics of insect flight, a longstanding avenue of inquiry for researchers. Chen’s work addresses these questions through a kind of reverse engineering. “If you want to learn how insects fly, it is very instructive to build a scale robot model,” he says. “You can perturb a few things and see how it affects the kinematics or how the fluid forces change. That will help you understand how those things fly.” But Chen aims to do more than add to entomology textbooks. His drones can also be useful in industry and agriculture.

Chen says his mini-aerialists could navigate complex machinery to ensure safety and functionality. “Think about the inspection of a turbine engine. You’d want a drone to move around [an enclosed space] with a small camera to check for cracks on the turbine plates.”

Other potential applications include artificial pollination of crops or completing search-and-rescue missions following a disaster. “All those things can be very challenging for existing large-scale robots,” says Chen. Sometimes, bigger isn’t better.


Rapid robotics for operator safety: what a bottle picker can do

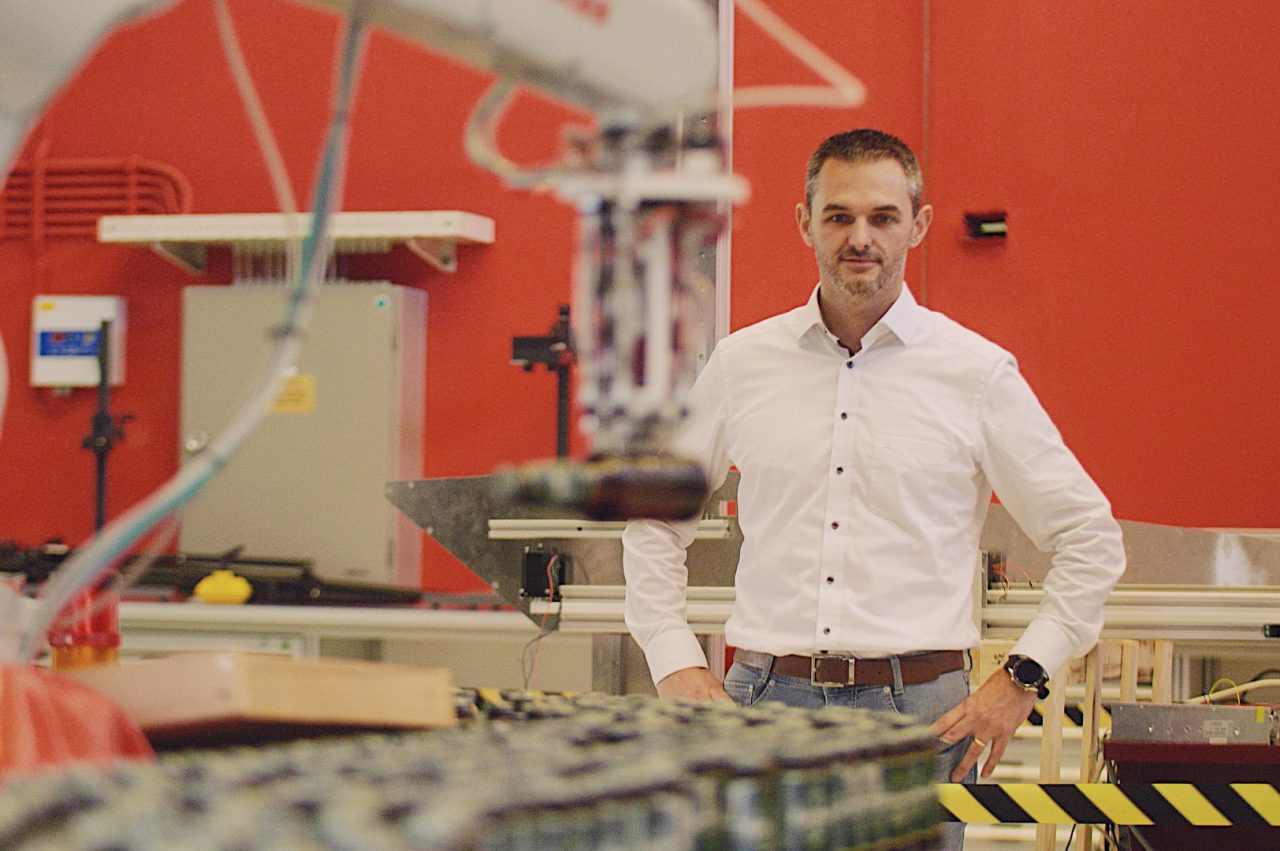

Dutch brewing company Heineken is one of the largest beer producers in the world with more than 70 production facilities globally. From small breweries to mega-plants, its logistics and production processes are increasingly complex and its machinery ever more advanced. The global beer giant therefore began looking for robotics solutions to make its breweries safer and more attractive for employees while enabling a more flexible organisation.

The environment is constantly changing and the robot has to be able to respond immediately.

Shobhit Yadav, mechatronics engineer smart industries and robotics at TNO

Automatically adapting to the situation

Dennis van der Plas, senior global lead packaging lines at Heineken, says, “We are becoming a high-tech company and attracting more and more technically trained staff. Repetitive tasks, like picking up fallen bottles from the conveyor belt, will not provide them with job satisfaction.” As part of the SMITZH innovation programme, Heineken and RoboHouse fieldlab, with support from the Netherlands Organisation for Applied Scientific Research (TNO), have developed a solution on the basis of flexible manufacturing: automated handling of unexpected situations.

According to Shobhit Yadav of TNO, flexible manufacturing is one of the most important developments in smart industry. “Today, manufacturing companies mainly produce small series on demand. It means that manufacturers have to be able to make many different products. This can be achieved either with a large number of production lines or with a small number that are flexible enough to adapt.” The Heineken project fell into the second category and involved developing a robot that could recognise different kinds of beer bottles that had fallen over on the conveyor. The robot had to pick them up while the belt was still moving. “The environment is constantly changing and the robot has to be able to respond immediately”, explains Shobhit. “This is a typical example of a flexible production line that automatically adapts to the situation.”

Robotics for a safe and enjoyable working environment

Industrial robots have obviously been around for a while. “The automotive industry deploys robots for welding car parts, whereas our sector uses them for automatically palletising products”, says Dennis. “But with this project we took a different approach. Our starting point was not a question of which robots exist and how they could be used. Instead, we focused on the needs and wishes of the people in the breweries, the operators who control and maintain the machines and how robots could support them in their work.”

The solution, in other words, had to lead not only to process optimisation but also – especially – to improved safety and greater job satisfaction. In addition, it would result in Heineken becoming a better employer. It is why Dennis and his colleague Wessel Reurslag, global lead packaging engineering & robotics, asked the operators what they would need to make their work safer and more interesting. One of the use cases that emerged was picking up bottles that had fallen over on the conveyor belt: repetitive but also unsafe as the glass bottles could break.


Experimenting without a business case

Heineken initially made contact with RoboHouse field lab through a sponsorship project with X!Delft, an initiative that strengthens corporate innovation and closes the gap between industry and Delft University of Technology. “The lab is the place to meet for anyone involved in robotics”, says Wessel. “It is also linked to SMITZH and thus connected to TNO.”

The parties soon realised that their ambitions overlapped. Heineken was seeking independent advice and both TNO and RoboHouse were looking for an applied research project that focused on flexible manufacturing. “This kind of partnership is very valuable to all involved”, says Shobhit. “SMITZH allows us at TNO to work with current issues in the industry and establish valuable contacts, which makes our research more relevant. In turn, manufacturers have somewhere to go with their questions and problems regarding smart technologies.”

Accessible way to do research and experiment

The guys at Heineken have nothing but praise for the innovative collaboration with TNO and RoboHouse. Dennis says, “The great thing is that all project partners were in it to learn something. At RoboHouse, we had access to the expertise of robotics engineers and state-of-the-art technologies like robotic arms. We supplied the bottle conveyor ourselves and TNO also added knowledge to the mix. It is an accessible way to do research and experiment. This would be a lot more difficult with a business case, which must involve an operational advantage from the start.”

Through close cooperation, we really developed a joint product.

Bas van Mil, mechanical engineer and robot gripper expert at RoboHouse

A joint product by TNO and RoboHouse

TNO and RoboHouse distilled two research goals from the use cases presented by Heineken: enabling real-time robot control and using vision technology to direct the robots with cameras. The main challenge involved devising a solution that could be applied to Heineken’s high-speed packaging lines. TNO worked on the control and movements of the robot, while RoboHouse took on the vision technology aspect. This entailed recognising the fallen bottles, developing the system’s software-based control and building the ‘gripper’ to pick up the bottles.


“Communication between the robot and the computer is very important”, explains Bas van Mil, mechanical engineer at RoboHouse. “Our input and TNO’s work were complementary. For example, RoboHouse did not possess Shobhit’s knowledge of control technology, which is indispensable for controlling the robot. Through close cooperation, we really developed a joint product.”

Every millisecond counts

The biggest challenge in detecting and tracking fallen beer bottles is that they never stop moving. As Bas explains, “They do not just move in the direction of the bottle conveyor but can also roll around on the belt itself. In many existing robotic systems, the camera takes a single photo that informs the movements of the robot. The robot will do a ‘blind pick’ with no way of knowing whether anything has changed since the photo was taken. This only works if the environment stays the same – but in this case, it doesn’t.”

The solution involves a system in which the camera and the movements of the robot are constantly connected to each other. Bas says, “Every millisecond counts as the bottle will disappear from view and the robot will still try to pick it up from the spot where it was half a second ago.” A RoboHouse programmer developed the camera software to be as fast and efficient as possible. The field lab even purchased a powerful computer running an advanced AI system especially for the project.


TNO and RoboHouse then wrote a programme together that determines the speed of the robot from the moment a fallen bottle is detected. This enables the robot to move with the bottle based on its calculated speed. It is what makes this robot so different from existing ones. “The robot responds immediately to changes”, says Shobhit. “In fact, it is 30 per cent faster than the current top speed of Heineken’s bottle conveyors. As a result, it has a wide range of applications and can be used in a variety of environments with different production speeds.”
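The idea of moving with the bottle based on its calculated speed can be illustrated with a minimal sketch. This is not the TNO and RoboHouse programme, whose details the article does not give. It assumes a hypothetical `Detection` record holding a timestamp and a belt position, estimates velocity by a simple finite difference between two camera detections, and extrapolates where the bottle will be after a given delay. All names and numbers here are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Detection:
    """One camera observation of a fallen bottle (hypothetical structure)."""
    t: float  # timestamp in seconds
    x: float  # position along the belt in metres
    y: float  # position across the belt in metres


def estimate_velocity(prev: Detection, curr: Detection) -> Tuple[float, float]:
    """Finite-difference velocity between two detections, in m/s."""
    dt = curr.t - prev.t
    if dt <= 0:
        raise ValueError("detections must be in increasing time order")
    return (curr.x - prev.x) / dt, (curr.y - prev.y) / dt


def predict_position(curr: Detection,
                     velocity: Tuple[float, float],
                     delay: float) -> Tuple[float, float]:
    """Extrapolate the bottle position `delay` seconds after `curr`."""
    vx, vy = velocity
    return curr.x + vx * delay, curr.y + vy * delay


# Illustrative values only: two detections 50 ms apart.
prev = Detection(t=0.00, x=0.100, y=0.050)
curr = Detection(t=0.05, x=0.125, y=0.052)

velocity = estimate_velocity(prev, curr)
# Aim where the bottle will be in half a second, not where it was seen.
target = predict_position(curr, velocity, delay=0.5)
print(velocity, target)
```

A "blind pick" corresponds to using `curr.x, curr.y` directly as the target. In this sketch, re-running the estimate on every new camera frame is what keeps the target tied to the moving bottle.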

Smarter thanks to independent partners

Heineken valued not just the successful innovation but also the independent character of TNO and RoboHouse during the development process. Dennis says, “We now have a much better idea of what is technically feasible, what the challenges are and what we can realistically ask of our technical suppliers. Thanks to this project, we can act much more like a smart buyer and make smarter demands of our suppliers. This information is relevant to have, especially as we operate in such an innovative field where we do not just buy parts off the shelf. After all, if I ask too little, I will not get the best out of my project. But if I ask too much, it affects our relationship with the supplier.”

The project has also served as a source of inspiration for Dennis’s colleagues worldwide. “We have been sharing videos and reports from SMITZH on the intranet, building a kind of community within Heineken. We are receiving feedback as well as requests for the new robotic systems from breweries all over the globe.” To meet the demand, Dennis and Wessel want to supply breweries with a ready-to-use version. “We are currently looking for parties that can make the technology available and provide support services.”

Meanwhile, RoboHouse and TNO aim to continue optimising the robot. “This is only the pilot version”, says Bas. “We can still improve its flexibility, for example by installing a different vision module, thereby making the technology even more widely applicable.” Both organisations are therefore looking for use cases in which they can use the same technology to solve other problems. “We are looking at the bigger picture”, explains Shobhit. “This project could serve as a model for similar challenges in other industries.”

Platform for valuable connections

All parties emphasise the benefits of working together and sharing knowledge to achieve a successful and relevant innovation. “There are very few places like SMITZH where manufacturing companies can go with these kinds of questions”, says Shobhit. “SMITZH is quite unique in that sense and is vitally important because it offers a specific platform for appropriate collaborations.” Wessel agrees: “If there is one thing this project has made clear, it is that producers like Heineken, tech companies and knowledge institutions should collaborate more intensively to substantiate these kinds of projects. You won’t find a solution in a PDF or a presentation. There can be no genuine solution until it is made real and put into practice.”

Do you have a similar use case and are you, like Heineken, searching for a practical solution? Or would you first like to learn more about RoboHouse or this specific project? Feel free to contact our lead engineer Bas van Mil, email SMITZH with questions about its collaboration programme, or contact Shobhit Yadav at TNO for more information.

SMITZH and future of work fieldlab RoboHouse

SMITZH is an innovation programme focused on smart manufacturing solutions in West Holland. It brings together supply and demand to stimulate the industrial application of smart manufacturing technologies and help regional companies innovate. Each SMITZH project consists of at least one manufacturing company and a fieldlab. RoboHouse served as the fieldlab in this project.

The post Rapid Robotics for Operator Safety: What a Bottle Picker Can Do appeared first on RoboValley.