#IJCAI invited talk: engineering social and collaborative agents with Ana Paiva

Anton Grabolle / Better Images of AI / Human-AI collaboration / Licenced by CC-BY 4.0

The 31st International Joint Conference on Artificial Intelligence and the 25th European Conference on Artificial Intelligence (IJCAI-ECAI 2022) took place from 23-29 July in Vienna. In this post, we summarise the presentation by Ana Paiva, University of Lisbon and INESC-ID. The title of her talk was “Engineering sociality and collaboration in AI systems”.

Robots are widely used in industrial settings, but what happens when they enter our everyday world and, specifically, social situations? Ana believes that social robots, chatbots and social agents have the potential to change the way we interact with technology. She envisages a hybrid society where humans and AI systems work in tandem. For this to be realised, however, we need to consider carefully how such robots will interact with us socially and collaboratively. In essence, our world is social, so machines that enter it need some capability to interact with that social world.

Ana took us through the theory of what it means to be social. There are three aspects to this:

- Social understanding: the capacity to perceive others, exhibit theory of mind and respond appropriately.

- Interpersonal competencies: the capability to communicate socially, establish relationships and adapt to others.

- Social responsibility: the capability to take actions towards the social environment, follow norms and adopt morally appropriate actions.

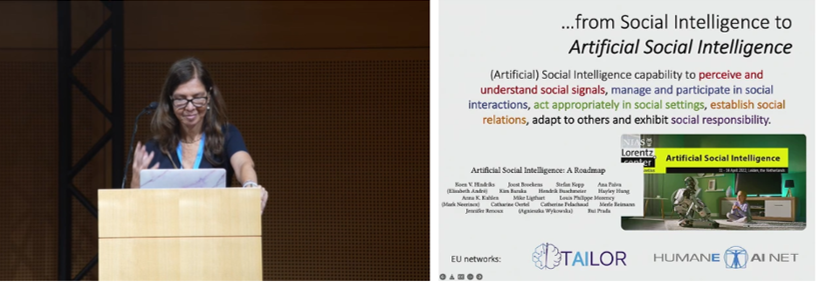

Screenshot from Ana’s talk.

Ana wants to go from this notion of social intelligence to what is called artificial social intelligence, which can be defined as: “the capability to perceive and understand social signals, manage and participate in social interactions, act appropriately in social settings, establish social relations, adapt to others, and exhibit social responsibility.”

As an engineer, she likes to build things, and, on seeing the definition above, wonders how to move from that definition to a model that will allow her to build social machines. This means looking at social perception, social modelling and decision making, and social acting. Much of Ana’s work centres on designing, studying and developing this kind of architecture.
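To make the three stages more concrete, here is a minimal Python sketch of a perceive, model/decide and act loop. It is not the architecture presented in the talk: the `SocialAgent` class, the cue names and the single "rapport" score are hypothetical simplifications, chosen only to show how each stage hands its output to the next.

```python
from dataclasses import dataclass, field

# Hypothetical cue values: positive cues raise rapport, negative ones lower it.
CUE_VALUES = {"smile": 1, "greeting": 1, "frown": -1}


@dataclass
class SocialSignal:
    """A perceived social cue from one person (hypothetical schema)."""
    person: str
    cue: str


@dataclass
class SocialAgent:
    """Toy agent with separate perception, modelling/decision and acting stages."""
    rapport: dict = field(default_factory=dict)  # person -> running rapport score

    def perceive(self, raw_events):
        """Social perception: wrap raw (person, cue) pairs as signals."""
        return [SocialSignal(person, cue) for person, cue in raw_events]

    def update_model(self, signals):
        """Social modelling: accumulate a per-person score; unknown cues count as 0."""
        for s in signals:
            self.rapport[s.person] = self.rapport.get(s.person, 0) + CUE_VALUES.get(s.cue, 0)

    def decide(self, person):
        """Decision making: pick a behaviour from the current rapport score."""
        score = self.rapport.get(person, 0)
        if score > 0:
            return "engage"
        if score < 0:
            return "apologise"
        return "introduce_self"

    def act(self, raw_events):
        """One perceive -> model -> decide cycle; returns the chosen action per person seen."""
        signals = self.perceive(raw_events)
        self.update_model(signals)
        return {s.person: self.decide(s.person) for s in signals}
```

For example, after perceiving a smile from one person and a frown from another, the agent chooses to engage the first and apologise to the second. Keeping the stages separate means each can be replaced on its own, for instance swapping the rapport score for a richer model of other people.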

Ana gave us a flavour of some of the projects that she and her groups have carried out with the aim of engineering sociality and collaboration in robots and other agents.

One of these projects was called “Teach me how to write”, and it centres on using robots to improve children’s handwriting. The team wanted to create a robot that kids could teach to write, hypothesising that, by teaching the robot, the children would in turn improve their own skills.

The first step was to create and train a robot that could learn how to write. They used learning from demonstration to train a robotic arm to draw characters. The team realised that, if they wanted to teach the kids to write this way, the robot had to learn and improve, and that it had to make mistakes in order to improve. They studied a taxonomy of the handwriting mistakes that children make, so that they could build those mistakes into the system and the robot could then learn from the kids how to fix them.
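The idea of a robot that starts out with a known mistake and improves from the children’s demonstrations can be illustrated with a toy model. In this sketch, which is an assumption and not the team’s actual learning method, a letter is a list of sampled (x, y) points, the injected mistake is a hypothetical “letter drawn too tall” error, and each demonstration pulls the robot’s shape a fraction `rate` of the way towards the child’s version. Setting `rate` to 0 gives a robot that never improves.

```python
import math


def stretch_vertically(shape, factor):
    """Inject a hypothetical 'letter drawn too tall' mistake by scaling y-coordinates."""
    return [(x, y * factor) for x, y in shape]


def shape_error(shape, target):
    """Mean distance between corresponding points of two equally sampled strokes."""
    return sum(math.dist(p, q) for p, q in zip(shape, target)) / len(target)


def learn_from_demonstration(shape, demo, rate):
    """Move every point a fraction `rate` of the way towards the child's demonstration.

    rate=0 reproduces a non-learning robot; rate=1 copies the demonstration outright.
    """
    return [
        (sx + rate * (dx - sx), sy + rate * (dy - sy))
        for (sx, sy), (dx, dy) in zip(shape, demo)
    ]


if __name__ == "__main__":
    letter_l = [(0.0, 2.0), (0.0, 1.0), (0.0, 0.0), (1.0, 0.0)]  # a simple "L"
    for rate in (0.5, 0.0):  # learning vs non-learning robot
        robot = stretch_vertically(letter_l, 1.5)  # start with the injected mistake
        errors = []
        for _ in range(4):  # one demonstration per session
            robot = learn_from_demonstration(robot, letter_l, rate)
            errors.append(shape_error(robot, letter_l))
        print(rate, errors)
```

With this update rule the gap between the robot’s letter and the demonstration shrinks by a factor of (1 - `rate`) after each demonstration, so at `rate=0.5` the error halves every session, while at `rate=0` it stays where it started.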

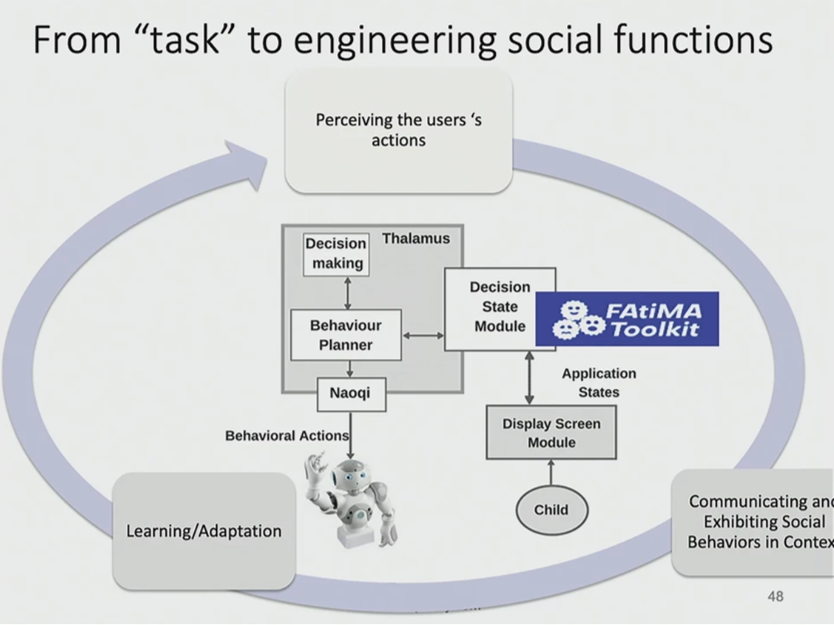

The system architecture, shown in the figure, includes the handwriting task element and social behaviours. To add these social behaviours, they used a toolkit developed in Ana’s lab called FAtiMA, an affective agent architecture for creating autonomous characters that can evoke empathic responses, which can be integrated into a larger framework.

Screenshot from Ana’s talk. System architecture.

When it came to evaluating the robot in practice, the team could not put the robot arm in the classroom, as it was too big, unwieldy and dangerous. Instead, they used a Nao robot, which moved its arms as if it were writing but did not actually write.

Twenty-four Portuguese-speaking children took part in the study, participating in four sessions over the course of a few weeks. The robot was given one of two contrasting competencies: “learning” (where the robot improved over the course of the sessions) or “non-learning” (where the robot’s abilities remained constant). The team measured the kids’ writing ability and improvement, and used questionnaires to find out what the children thought about the robot’s friendliness and about their own teaching abilities.

They found that the children who worked with the learning robot significantly improved their own abilities. They also found that the robot’s poor writing abilities did not affect the children’s fondness for it.

You can find out more about this project, and others, on Ana’s website.

Spotlights on Three Exhibitors from CVPR 2022

Retrocausal:

The team at Retrocausal built a computer vision platform that allows any manufacturer to rapidly set up an activity recognition pipeline to assist with manual assemblies. Using any standard camera pointed at an assembly station, the system can learn the assembly procedure and give real-time feedback.
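As a rough illustration of the feedback step only, the sketch below assumes that an activity recognition model has already turned the camera feed into a sequence of step labels, and compares that sequence with a learned procedure. The step names and the `check_assembly` function are hypothetical; this is not a description of how Retrocausal’s platform works internally.

```python
def check_assembly(procedure, observed):
    """Compare recognised actions with the learned step order and return feedback messages.

    Assumes step names in `procedure` are unique.
    """
    feedback = []
    expected = 0  # index of the next step we expect to see
    for action in observed:
        if action not in procedure:
            feedback.append(f"unknown action: {action}")
            continue
        idx = procedure.index(action)
        if idx == expected:
            feedback.append(f"ok: {action}")
            expected += 1
        elif idx > expected:
            # The worker jumped ahead: report every step that was passed over.
            skipped = procedure[expected:idx]
            feedback.append(f"skipped: {', '.join(skipped)} before {action}")
            expected = idx + 1
        else:
            # A step that was already done (or should have come earlier).
            feedback.append(f"out of order: {action}")
    if expected < len(procedure):
        feedback.append(f"incomplete: missing {', '.join(procedure[expected:])}")
    return feedback
```

Running it after each newly recognised action would give the kind of real-time prompt described above, for example flagging a skipped screw before the cover is attached.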

Deci:

Deci is tackling the problem of taking a machine learning model optimized for one hardware platform and adapting it to work on a different one.

Developing an ML model to run on the edge involves a time-consuming development cycle of optimizing the model to run at peak capacity. Over time, however, hardware platforms get updated, and companies must decide whether to update their hardware and dedicate engineering resources to manually retuning a multitude of settings.

Deci allows companies to upload their models to the cloud and then automatically optimizes them to run on the variety of hardware platforms they own in their facilities.
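One generic way to frame per-hardware optimization is as a search: profile candidate configurations on each target and keep the best one that meets a latency budget. The sketch below does this over two hypothetical knobs (numeric precision and batch size), with a toy cost model standing in for real on-device profiling. It is not Deci’s method, and the hardware profiles and numbers are invented for illustration.

```python
from itertools import product

# Hypothetical hardware profiles: supported precisions, a fixed per-call
# overhead, and a relative throughput factor.
HW_A = {"supported": {"fp32", "fp16"}, "overhead_ms": 2.0, "throughput": 1.0}
HW_B = {"supported": {"fp32", "fp16", "int8"}, "overhead_ms": 1.0, "throughput": 2.0}

PRECISIONS = ["fp32", "fp16", "int8"]
BATCH_SIZES = [1, 2, 4, 8]


def toy_latency_ms(hw, precision, batch):
    """Toy stand-in for profiling one inference call on a device (not a real measurement)."""
    if precision not in hw["supported"]:
        return float("inf")  # this configuration cannot run on the device
    per_sample_cost = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0}[precision]
    return hw["overhead_ms"] + per_sample_cost * batch / hw["throughput"]


def pick_config(hw, latency_fn, precisions, batch_sizes, budget_ms):
    """Grid-search (precision, batch) for the lowest per-sample latency within the call budget.

    Returns None if no configuration fits the budget.
    """
    feasible = [
        (p, b) for p, b in product(precisions, batch_sizes)
        if latency_fn(hw, p, b) <= budget_ms
    ]
    if not feasible:
        return None
    return min(feasible, key=lambda cfg: latency_fn(hw, *cfg) / cfg[1])
```

With a 10 ms budget and these toy profiles, the device without int8 support settles on fp16 with a batch of 4, while the faster device picks int8 with a batch of 8. The same model ends up with different settings on different hardware, which is exactly the retuning effort that has to be repeated whenever the hardware changes.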

StegAI:

Dr. Eric Wengrowski co-founded StegAI after publishing a paper at CVPR 2019 on embedding “fingerprints” in images and videos that are invisible to the human eye but detectable by their software.

Their product modulates the pixels in an image in such a way that, even after the image is compressed, resized or printed out, their deep learning algorithms can still detect its origin.

This powerful tool is used by social media firms to protect against potential copyright infringement when users upload content to their platforms.
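To show the basic principle of an invisible fingerprint that can be detected with a key, here is a classical spread-spectrum watermark in plain Python. This is a swapped-in textbook technique, not StegAI’s deep learning method: a faint pseudorandom ±1 pattern derived from a secret key is added to the pixels, and detection correlates the image with the same pattern. It tolerates additive noise and 8-bit rounding (used here as a crude stand-in for compression), but unlike the learned approach described above it is not designed to survive resizing or printing. The function names and the `strength` value are illustrative assumptions.

```python
import random


def keyed_pattern(key, n):
    """Pseudorandom +/-1 pattern of length n derived deterministically from a secret key."""
    rng = random.Random(key)
    return [rng.choice((-1, 1)) for _ in range(n)]


def embed(pixels, key, strength=3.0):
    """Add a faint keyed pattern to a flattened grayscale image, clamped to [0, 255]."""
    pattern = keyed_pattern(key, len(pixels))
    return [min(255.0, max(0.0, p + strength * w)) for p, w in zip(pixels, pattern)]


def detect(pixels, key):
    """Correlate the mean-removed image with the keyed pattern.

    The score is close to `strength` for a marked image and close to 0 otherwise.
    """
    pattern = keyed_pattern(key, len(pixels))
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) * w for p, w in zip(pixels, pattern)) / len(pixels)


def degrade(pixels, noise_std=5.0, seed=0):
    """Crude stand-in for lossy processing: add Gaussian noise and round to 8-bit integers."""
    rng = random.Random(seed)
    return [min(255, max(0, round(p + rng.gauss(0.0, noise_std)))) for p in pixels]
```

A per-pixel change of about 3 grey levels is hard to see, but averaged over tens of thousands of pixels the correlation with the right key stands well clear of the near-zero score produced by a wrong key or an unmarked image, so a simple threshold (say 1.5) separates the two cases.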