Exoskeletons with personalize-your-own settings

Silicone raspberry used to train harvesting robots

Kicks, pranks, dog pee: The hard life of food delivery robots

Who’s driving that food delivery bot? It might be a Gen Z gamer

Automation for the Pharmaceutical Industry, Laboratories and Analysis.

Mining garbage and the circular economy

Developing a crowd-friendly robotic wheelchair

How Computers Deliver the Ability to See

Helen Greiner: Solar Powered Robotic Weeding | Sense Think Act Podcast #16

In this episode, Audrow Nash speaks to Helen Greiner, CEO at Tertill, which makes a small solar powered weeding robot for vegetable gardens. The conversation begins with an overview of Helen’s previous robotics experience, including as a student at MIT, Co-founder at iRobot, Founder and CEO at CyPhyWorks, and in advising government research in robotics, AI, and machine learning. From there, Helen explains the design of the Tertill robot, how it works, and her high hopes for this simple robot: to help reduce the environmental impact of the agriculture industry by helping people to grow their own food. In the last part of the conversation, Helen speaks broadly about her experience in robotics startups, the robotics industry, and the future of robotics.


Gain a Competitive Edge with Cold Chain Automation

Handheld surgical robot can help stem fatal blood loss

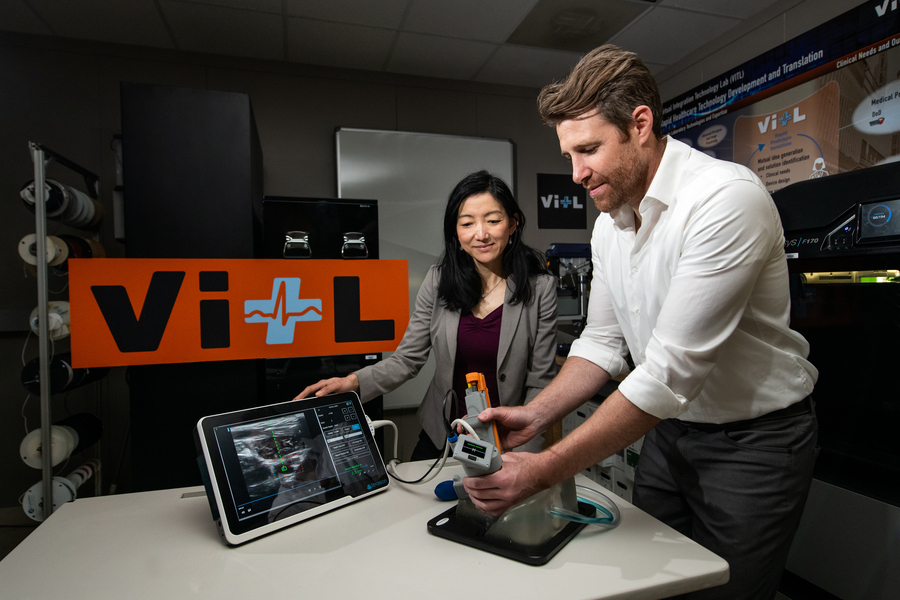

Matt Johnson (right) and Laura Brattain (left) test a new medical device on an artificial model of human tissue and blood vessels. The device helps users to insert a needle and guidewire quickly and accurately into a vessel, a crucial first step to halting rapid blood loss. Photo: Nicole Fandel.

By Anne McGovern | MIT Lincoln Laboratory

After a traumatic accident, there is a small window of time when medical professionals can apply lifesaving treatment to victims with severe internal bleeding. Delivering this type of care is complex, and key interventions require inserting a needle and catheter into a central blood vessel, through which fluids, medications, or other aids can be given. First responders, such as ambulance emergency medical technicians, are not trained to perform this procedure, so treatment can only be given after the victim is transported to a hospital. In some instances, by the time the victim arrives to receive care, it may already be too late.

A team of researchers at MIT Lincoln Laboratory, led by Laura Brattain and Brian Telfer from the Human Health and Performance Systems Group, together with physicians from the Center for Ultrasound Research and Translation (CURT) at Massachusetts General Hospital, led by Anthony Samir, have developed a solution to this problem. The Artificial Intelligence–Guided Ultrasound Intervention Device (AI-GUIDE) is a handheld platform technology that has the potential to help personnel with simple training to quickly install a catheter into a common femoral vessel, enabling rapid treatment at the point of injury.

“Simplistically, it’s like a highly intelligent stud-finder married to a precision nail gun,” says Matt Johnson, a research team member from the laboratory’s Human Health and Performance Systems Group.

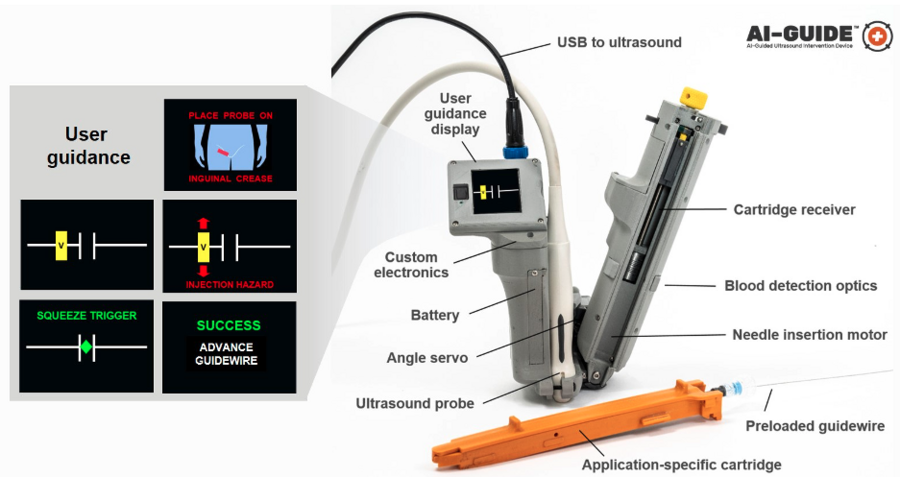

AI-GUIDE is a platform device made of custom-built algorithms and integrated robotics that could pair with most commercial portable ultrasound devices. To operate AI-GUIDE, a user first places it on the patient’s body, near where the thigh meets the abdomen. A simple targeting display guides the user to the correct location and then instructs them to pull a trigger, which precisely inserts the needle into the vessel. The device verifies that the needle has penetrated the blood vessel, and then prompts the user to advance an integrated guidewire, a thin wire inserted into the body to guide a larger instrument, such as a catheter, into a vessel. The user then manually advances a catheter. Once the catheter is securely in the blood vessel, the device withdraws the needle and the user can remove the device.

With the catheter safely inside the vessel, responders can then deliver fluid, medicine, or other interventions.
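The sequence of steps described above can be sketched as a simple ordered workflow. This is only an illustration of the procedure as the article describes it, not the team's software: the `Step` names, the `advance` helper, and the choice to stay on the current step when a check fails are all assumptions made for this example.

```python
from enum import Enum


class Step(Enum):
    # Hypothetical step names; the descriptions paraphrase the article.
    POSITION = "user centers the target vessel on the display"
    INSERT_NEEDLE = "user pulls the trigger; device inserts the needle"
    VERIFY = "device checks that the needle has entered the vessel"
    GUIDEWIRE = "user advances the integrated guidewire"
    CATHETER = "user manually advances the catheter"
    RETRACT = "device withdraws the needle"
    REMOVE = "user removes the device"


# Enum members iterate in definition order, which matches the procedure.
ORDER = list(Step)


def advance(step, ok=True):
    """Return the step that follows `step`, or None once the procedure ends.

    Assumption for this sketch: if the current step did not succeed
    (ok=False), the workflow stays on the same step.
    """
    if not ok:
        return step
    i = ORDER.index(step)
    return ORDER[i + 1] if i + 1 < len(ORDER) else None


if __name__ == "__main__":
    step = Step.POSITION
    while step is not None:
        print(f"{step.name}: {step.value}")
        step = advance(step)
```

Keeping the steps in one ordered list makes it easy to see which ones are still manual (guidewire and catheter advancement) and which the device handles itself.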

AI-GUIDE automates nearly every step of the process to locate and insert a needle, guidewire, and catheter into a blood vessel to facilitate lifesaving treatment. The version of the device shown here is optimized to locate the femoral blood vessels, which are in the upper thigh. Image courtesy of the researchers.

As easy as pressing a button

The Lincoln Laboratory team developed the AI in the device by leveraging technology used for real-time object detection in images.

“Using transfer learning, we trained the algorithms on a large dataset of ultrasound scans acquired by our clinical collaborators at MGH,” says Lars Gjesteby, a member of the laboratory’s research team. “The images contain key landmarks of the vascular anatomy, including the common femoral artery and vein.”

These algorithms interpret the visual data coming in from the ultrasound that is paired with AI-GUIDE and then indicate the correct blood vessel location to the user on the display.

“The beauty of the on-device display is that the user never needs to interpret, or even see, the ultrasound imagery,” says Mohit Joshi, the team member who designed the display. “They are simply directed to move the device until a rectangle, representing the target vessel, is in the center of the screen.”
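The centering guidance Joshi describes can be illustrated with a short sketch. It assumes the detector returns a bounding box in pixel coordinates and that "centered" means the box center lies within a small tolerance of the screen center. The `Box` type, the `guidance` function, and the 5% tolerance are hypothetical, chosen for illustration only; the article does not describe the actual implementation.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Hypothetical detection result: a rectangle in pixel coordinates."""
    x: float  # left edge
    y: float  # top edge
    w: float  # width
    h: float  # height


def guidance(box, frame_w, frame_h, tol=0.05):
    """Return (dx, dy, centered) for a detected vessel box.

    dx and dy are the offsets of the box center from the screen center,
    as fractions of the frame width and height. `centered` is True when
    both offsets are within `tol` (an assumed tolerance).
    """
    cx = box.x + box.w / 2
    cy = box.y + box.h / 2
    dx = (cx - frame_w / 2) / frame_w
    dy = (cy - frame_h / 2) / frame_h
    centered = abs(dx) <= tol and abs(dy) <= tol
    return dx, dy, centered


if __name__ == "__main__":
    # A box whose center coincides with the center of a 640x480 frame.
    print(guidance(Box(270, 190, 100, 100), 640, 480))
    # A box far to the left: the user would be told to keep moving.
    print(guidance(Box(0, 190, 100, 100), 640, 480))
```

Reducing the display to a single offset and a yes/no flag mirrors the design goal in the quote: the user follows a rectangle and never has to read the ultrasound image.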

For the user, the device may seem as easy to use as pressing a button to advance a needle, but to ensure rapid and reliable success, a lot is happening behind the scenes. For example, when a patient has lost a large volume of blood and becomes hypotensive, veins that would typically be round and full of blood become flat. When the needle tip reaches the center of the vein, the wall of the vein is likely to “tent” inward, rather than being punctured by the needle. As a result, though the needle was injected to the proper location, it fails to enter the vessel.

To ensure that the needle reliably punctures the vessel, the team engineered the device to be able to check its own work.

“When AI-GUIDE injects the needle toward the center of the vessel, it searches for the presence of blood by creating suction,” says Josh Werblin, the program’s mechanical engineer. “Optics in the device’s handle trigger when blood is present, indicating that the insertion was successful.” This technique is part of why AI-GUIDE has shown very high injection success rates, even in hypotensive scenarios where veins are likely to tent.

Lincoln Laboratory researchers and physicians from the Massachusetts General Hospital Center for Ultrasound Research and Translation collaborated to build the AI-GUIDE system. Photo courtesy of Massachusetts General Hospital.

Recently, the team published a paper in the journal Biosensors that reports on AI-GUIDE’s needle insertion success rates. Users with medical experience ranging from zero to greater than 15 years tested AI-GUIDE on an artificial model of human tissue and blood vessels, and one expert user tested it on a series of live, sedated pigs. The team reported that after only two minutes of verbal training, all users of the device on the artificial human tissue were successful in placing a needle, with all but one completing the task in less than one minute. The expert user was also successful in quickly placing both the needle and the integrated guidewire and catheter in about a minute. The needle insertion speed and accuracy were comparable to those of experienced clinicians operating in hospital environments on human patients.

Theodore Pierce, a radiologist and collaborator from MGH, says AI-GUIDE’s design, which makes it stable and easy to use, directly translates to low training requirements and effective performance. “AI-GUIDE has the potential to be faster, more precise, safer, and require less training than current manual image-guided needle placement procedures,” he says. “The modular design also permits easy adaptation to a variety of clinical scenarios beyond vascular access, including minimally invasive surgery, image-guided biopsy, and imaging-directed cancer therapy.”

In 2021, the team received an R&D 100 Award for AI-GUIDE, recognizing it among the year’s most innovative new technologies available for license or on the market.

What’s next?

Right now, the team is continuing to test the device and work on fully automating every step of its operation. In particular, they want to automate the guidewire and catheter insertion steps to further reduce risk of user error or potential for infection.

“Retraction of the needle after catheter placement reduces the chance of an inadvertent needle injury, a serious complication in practice which can result in the transmission of diseases such as HIV and hepatitis,” says Pierce. “We hope that a reduction in manual manipulation of procedural components, resulting from complete needle, guidewire, and catheter integration, will reduce the risk of central line infection.”

AI-GUIDE was built and tested within Lincoln Laboratory’s new Virtual Integration Technology Lab (VITL). VITL was built in order to bring a medical device prototyping capability to the laboratory.

“Our vision is to rapidly prototype intelligent medical devices that integrate AI, sensing — particularly portable ultrasound — and miniature robotics to address critical unmet needs for both military and civilian care,” says Laura Brattain, who is the AI-GUIDE project co-lead and also holds a visiting scientist position at MGH. “In working closely with our clinical collaborators, we aim to develop capabilities that can be quickly translated to the clinical setting. We expect that VITL’s role will continue to grow.”

AutonomUS, a startup company founded by AI-GUIDE’s MGH co-inventors, recently secured an option for the intellectual property rights for the device. AutonomUS is actively seeking investors and strategic partners.

“We see the AI-GUIDE platform technology becoming ubiquitous throughout the health-care system,” says Johnson, “enabling faster and more accurate treatment by users with a broad range of expertise, for both pre-hospital emergency interventions and routine image-guided procedures.”

This work was supported by the U.S. Army Combat Casualty Care Research Program and Joint Program Committee – 6. Nancy DeLosa, Forrest Kuhlmann, Jay Gupta, Brian Telfer, David Maurer, Wes Hill, Andres Chamorro, and Allison Cheng provided technical contributions, and Arinc Ozturk, Xiaohong Wang, and Qian Li provided guidance on clinical use.

Careers in robotics: What is a robotics PhD?

This relatively general post focuses on robotics-related PhD programs in the American educational system. Much of this will not apply to universities in other countries, or to other departments in American universities. This post will take you through the overall life cycle of a PhD and is intended as a basic overview for anyone unfamiliar with the process, whether they are considering a PhD or have a loved one who is currently in a PhD program and just want to learn more about what they are doing.

The basics

A PhD (doctoral degree) in engineering or a DEng (Doctorate of Engineering) is the highest degree that you can earn in engineering. This is generally a degree that people only earn one of, if they earn one at all. Unlike a bachelor’s degree or a master’s degree, a PhD studying a topic relevant to robotics should be free and students should receive a modest stipend for their living expenses. There are very few stand-alone robotics PhD programs, so people generally join robotics labs through PhD programs in electrical engineering, computer science, or mechanical engineering.

Joining a lab

In some programs, students are matched with a lab when they are accepted to the university. This matching is not random: If a university works this way, a professor has to have a space in their lab, see the application, and decide that the student would be a good fit for their lab. Essentially, the professor “hires” the student to join their lab.

Other programs accept cohorts of students who take courses in the first few years and pick professors to work with by some deadline in the program. The mechanism through which students and professors pair up is usually rotations: Students perform a small research project in each of several labs and then join one of the labs they rotated in.

The advisor

Regardless of how a student gets matched up with their advisor, the advisor has a lot of power to make their graduate school experience a positive one or a negative one. Someone who is a great advisor for one student may not be a great advisor for another. If you are choosing an advisor, it pays to pay attention to the culture in a lab, and whether you personally feel supported by that environment and the type of mentorship that your advisor offers. In almost every case, this is more important for your success in the PhD program than the specifics of the project you will work on or the prestige of the project, collaborators, or lab.

Qualifiers

PhD programs typically have qualifiers at some point in the first three years. Some programs use a test-based qualifier system, either creating a specific qualifier test or using tests from final exams of required courses. In some programs, the student is tested by a panel of faculty who ask questions about course material that the student is expected to have learned by this point. In other programs, the student performs a research project and then presents it to a panel of faculty.

Some universities view the qualifiers as a hurdle that almost all of the admitted PhD students should be able to pass, and some universities view them as a method to weed out students from the PhD program. If you are considering applying to PhD programs, it is worth paying attention to this cultural difference between programs, and not taking it too personally if you do not pass the qualifiers at a school that weeds out half of their students. After all, you were qualified enough to be accepted. It is also important to remember, if you join either kind of program, that if you do not pass your qualifiers, usually what happens is that you leave the program with a free master’s degree. Your time in the program will not be wasted!

The author testing a robot on a steep dune face on a research field trip at Oceano Dunes.

Research

Some advisors will start students on a research project as soon as they join the lab, typically by attaching them to an existing project so that they can get a little mentorship before starting their own project. Some advisors will wait until the student is finished with qualifiers. Either way, it is worth knowing that a PhD student’s relationship to their PhD project is likely different from any project they have ever been involved with before.

For any other research project, there is another person – the advisor, an older graduate student, a post doc – who has designed the project or at least worked with the student to come up with parameters for success. The scope of previous research projects would typically be one semester or one summer, resulting in one or two papers at most. In contrast, a PhD student’s research project is expected to span multiple years (at least three), should result in multiple publications, and is designed primarily by the student. It is not just that the student has ownership over their project, but that they are responsible for it in a way that they have never been responsible for such a large open-ended project before. It is also their primary responsibility – not one project alongside many others. This can be overwhelming for a lot of students, which is why it is impolite to ask a PhD student how much longer they expect their PhD to take.

The committee

The “committee” is a group of professors that work in a related area to the student’s. Their advisor is on the committee, but it must include other professors as well. Typically, students need to have a mix of professors from their school and at least one other institution. These professors provide ongoing mentorship on the thesis project. They are the primary audience for the thesis proposal and defense, and will ultimately decide what is sufficient for the student to do in order to graduate. If you are a student choosing your committee, keep in mind that you will benefit greatly from having supportive professors on your committee, just like you will benefit from having a supportive advisor.

Proposing and defending the thesis

When students are expected to propose a thesis project varies widely by program. In some programs, students propose a topic as part of their qualifier process. In others, students have years after finishing their qualifiers to propose a topic – and can propose as little as a semester before they defend!

The proposal and defense both typically take the form of a presentation followed by questions from the committee and the audience. In the proposal, the student outlines the project they plan to do, and presents criteria that they and their committee should agree on as the required minimum for them to do in order to graduate. The defense makes the case that the student has hit those requirements.

After the student presents, the committee will ask them some questions, confer, and then tell the student whether they passed or failed. It is very uncommon for a PhD student to fail their defense, and it is generally considered a failure on the part of the advisor rather than the student if this happens, because the advisor shouldn’t have let the student present an unfinished thesis. After the defense, there may be some corrections to the written thesis document or even a few extra experiments, but typically the student does not need to present their thesis again in order to graduate.

The bottom line

A PhD is a long training process to teach students how to become independent researchers. Students will take classes and perform research, and will also likely teach or develop coursework. If this is something you’re thinking about, it’s important to learn about what you might be getting yourself into – and if it’s a journey one of your loved ones is starting on, you should know that it’s not just more school!