Fabricating fully functional drones

By Rachel Gordon | MIT CSAIL

From Star Trek’s replicators to Richie Rich’s wishing machine, popular culture has a long history of parading flashy machines that can instantly output any item to a user’s delight.

While 3D printers have now made it possible to produce a range of objects that include product models, jewelry, and novelty toys, we still lack the ability to fabricate more complex devices that are essentially ready-to-go right out of the printer.

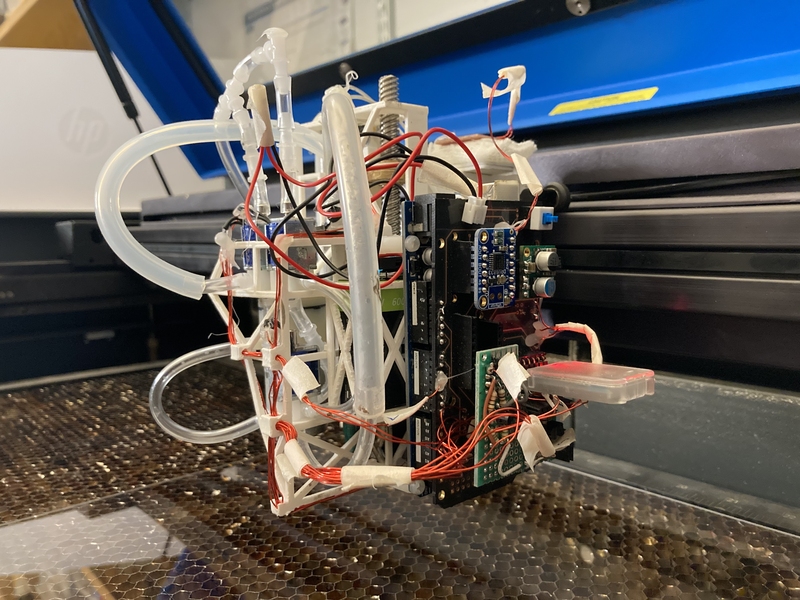

A group from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) recently developed a new system to print functional, custom-made devices and robots, without human intervention. Their single system uses a three-ingredient recipe that lets users create structural geometry, print traces, and assemble electronic components like sensors and actuators.

“LaserFactory” has two parts that work in harmony: a software toolkit that allows users to design custom devices, and a hardware platform that fabricates them.

CSAIL PhD student Martin Nisser says that this type of “one-stop shop” could be beneficial for product developers, makers, researchers, and educators looking to rapidly prototype things like wearables, robots, and printed electronics.

“Making fabrication inexpensive, fast, and accessible to a layman remains a challenge,” says Nisser, lead author on a paper about LaserFactory that will appear in the ACM Conference on Human Factors in Computing Systems in May. “By leveraging widely available manufacturing platforms like 3D printers and laser cutters, LaserFactory is the first system that integrates these capabilities and automates the full pipeline for making functional devices in one system.”

Inside LaserFactory

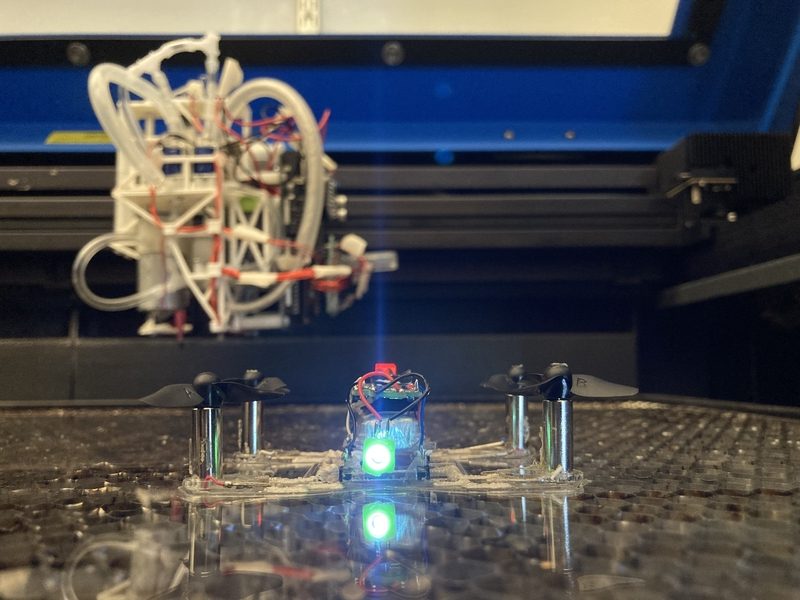

Let’s say a user has aspirations to create their own drone. They’d first design their device by placing components on it from a parts library, and then draw on circuit traces, which are the copper or aluminum lines on a printed circuit board that allow electricity to flow between electronic components. They’d then finalize the drone’s geometry in the 2D editor. In this case, they’d place propellers and batteries on the canvas, wire them up to make electrical connections, and draw the perimeter to define the quadcopter’s shape.

The user can then preview their design before the software translates their custom blueprint into machine instructions. The commands are embedded into a single fabrication file for LaserFactory to make the device in one go, aided by the standard laser cutter software. On the hardware side, an add-on that prints circuit traces and assembles components is clipped onto the laser cutter.

Similar to a chef, LaserFactory automatically cuts the geometry, dispenses silver for circuit traces, picks and places components, and finally cures the silver to make the traces conductive, securing the components in place to complete fabrication.
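The sequence described above can be sketched as a small planning routine. This is only an illustration of the ordering the article gives (cut, dispense, pick and place, cure); the `Design` class, the `plan_fabrication` function, and the step names are hypothetical and are not taken from the LaserFactory software.

```python
from dataclasses import dataclass, field


@dataclass
class Design:
    """Hypothetical stand-in for a design drawn in the 2D editor."""
    outline: list                                    # perimeter points of the device
    traces: list = field(default_factory=list)       # (start, end) point pairs
    components: list = field(default_factory=list)   # (name, position) pairs


def plan_fabrication(design):
    """Return fabrication steps in the order the article describes."""
    steps = [("cut", design.outline)]
    steps += [("dispense_silver", trace) for trace in design.traces]
    steps += [("pick_and_place", part) for part in design.components]
    # Curing comes last: it makes the traces conductive and fixes the parts.
    steps += [("cure_silver", trace) for trace in design.traces]
    return steps


# Assumed example values: a square outline with one trace and two parts.
drone = Design(
    outline=[(0, 0), (40, 0), (40, 40), (0, 40)],
    traces=[((10, 10), (30, 10))],
    components=[("battery", (10, 10)), ("motor", (30, 10))],
)
plan = plan_fabrication(drone)
for step in plan:
    print(step)
```

Keeping the plan as one ordered list mirrors the idea of a single fabrication file that the machine runs in one go.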

The device is then fully functional, and in the case of the drone, it can immediately take off to begin a task — a feature that could in theory be used for diverse jobs such as delivery or search-and-rescue operations.

As a future avenue, the team hopes to increase the quality and resolution of the circuit traces, which would allow for denser and more complex electronics.

As well as fine-tuning the current system, the researchers hope to build on this technology by exploring how to create a fuller range of 3D geometries, potentially through integrating traditional 3D printing into the process.

“Beyond engineering, we’re also thinking about how this kind of one-stop shop for fabrication devices could be optimally integrated into today’s existing supply chains for manufacturing, and what challenges we may need to solve to allow for that to happen,” says Nisser. “In the future, people shouldn’t be expected to have an engineering degree to build robots, any more than they should have a computer science degree to install software.”

This research is based upon work supported by the National Science Foundation. The work was also supported by a Microsoft Research Faculty Fellowship and The Royal Swedish Academy of Sciences.

Soft robots for ocean exploration and offshore operations: A perspective

Most of the ocean is unknown. Yet we know that the most challenging environments on the planet reside in it. Understanding the ocean in its totality is a key component for the sustainable development of human activities and for the mitigation of climate change, as proclaimed by the United Nations. We are glad to share our perspective about the role of soft robots in ocean exploration and offshore operations at the outset of the ocean decade (2021-2030).

In this study by the Soft Systems Group (part of The School of Engineering at The University of Edinburgh), we focus on the two ends of the water column: the abyss and the surface. The former is mostly unexplored, containing unknown physical, biological and chemical properties; the latter is where industrial offshore activities take place, and where human operators face dangerous environmental conditions.

The analysis of recent developments in soft robotics brought to light their potential in solving some of the challenges that industry and scientists are facing at sea. The paper offers a discussion about how we can use the latest technological advances in soft robotics to overcome the limitations of existing technology. We synthesise the crucial characteristics that future marine robots should include.

Today we know that industrial growth needs to be supported by deep environmental and technological knowledge in order to develop human activities in a sustainable manner. Therefore, the remotest areas of the ocean constitute a common frontier for oceanography, robotics and offshore industry. The first challenge then is to create an interdisciplinary space where marine scientists, ocean engineers, roboticists and industry can communicate.

The offshore renewable energy sector is growing fast. By definition, wind and wave energy are better sourced from areas of the sea with a high energy content, which translates into wave-dominated environments. In such conditions, operating in the vicinity of an offshore platform is dangerous for personnel and potentially destructive for traditional robots.

Recent developments in soft robotics have unveiled novel, bio-inspired maneuvering techniques, fluidic logic data logging capabilities and unprecedented dexterity. These characteristics would enable data collection, maintenance and repair interventions that are unfeasible with rigid robots.

Traditional devices, often tethered, rigid and with limited activity range, cannot tackle the environmental conditions where ocean exploration is needed the most. The devices needed for ocean exploration require an innovative payload and delicate sampling capabilities. Soft sensors and soft gripping techniques are optimum candidates to push ocean exploration into fragile and unknown areas that require delicate sampling and gentle navigation. The inherent compliance of soft robots also protects the environment and the payload from damage. More importantly, recent studies have highlighted the potential for the manufacturing of soft robots to be entirely biocompatible and, hence, to minimise the impact on the natural environment in case of loss or mass deployment.

In a nutshell, soft robots for marine exploration and offshore deployment offer the advantage, over traditional devices, of novel navigation and manipulation techniques, unprecedented sensing and sampling capabilities, biocompatibility and novel memory and data logging methods. With this study we wish to invite a multidisciplinary approach in order to create novel sustainable soft systems.

Wielding a laser beam deep inside the body

A microrobotic opto-electro-mechanical device able to steer a laser beam with high speed and a large range of motion could enhance the possibilities of minimally invasive surgeries

By Benjamin Boettner

Minimally invasive surgeries in which surgeons gain access to internal tissues through natural orifices or small external incisions are common practice in medicine. They are performed for problems as diverse as delivering stents through catheters, treating abdominal complications, and performing transnasal operations at the skull base in patients with neurological conditions.

The ends of devices for such surgeries are highly flexible (or “articulated”) to enable the visualization and specific manipulation of the surgical site in the target tissue. In the case of energy-delivering devices that allow surgeons to cut or dry (desiccate) tissues, and stop internal bleeds (coagulate) deep inside the body, a heat-generating energy source is added to the end of the device. However, presently available energy sources delivered via a fiber or electrode, such as radio frequency currents, have to be brought close to the target site, which limits surgical precision and can cause unwanted burns in adjacent tissue sections and smoke development.

Laser technology, which already is widely used in a number of external surgeries, such as those performed in the eye or skin, would be an attractive solution. For internal surgeries, the laser beam needs to be precisely steered, positioned and quickly repositioned at the distal end of an endoscope, which cannot be accomplished with the currently available relatively bulky technology.

Responding to an unmet need for a robotic surgical device that is flexible enough to access hard-to-reach areas of the G.I. tract while causing minimal peripheral tissue damage, researchers at the Wyss Institute and Harvard SEAS have developed a laser steering device that has the potential to improve surgical outcomes for patients. Credit: Wyss Institute at Harvard University

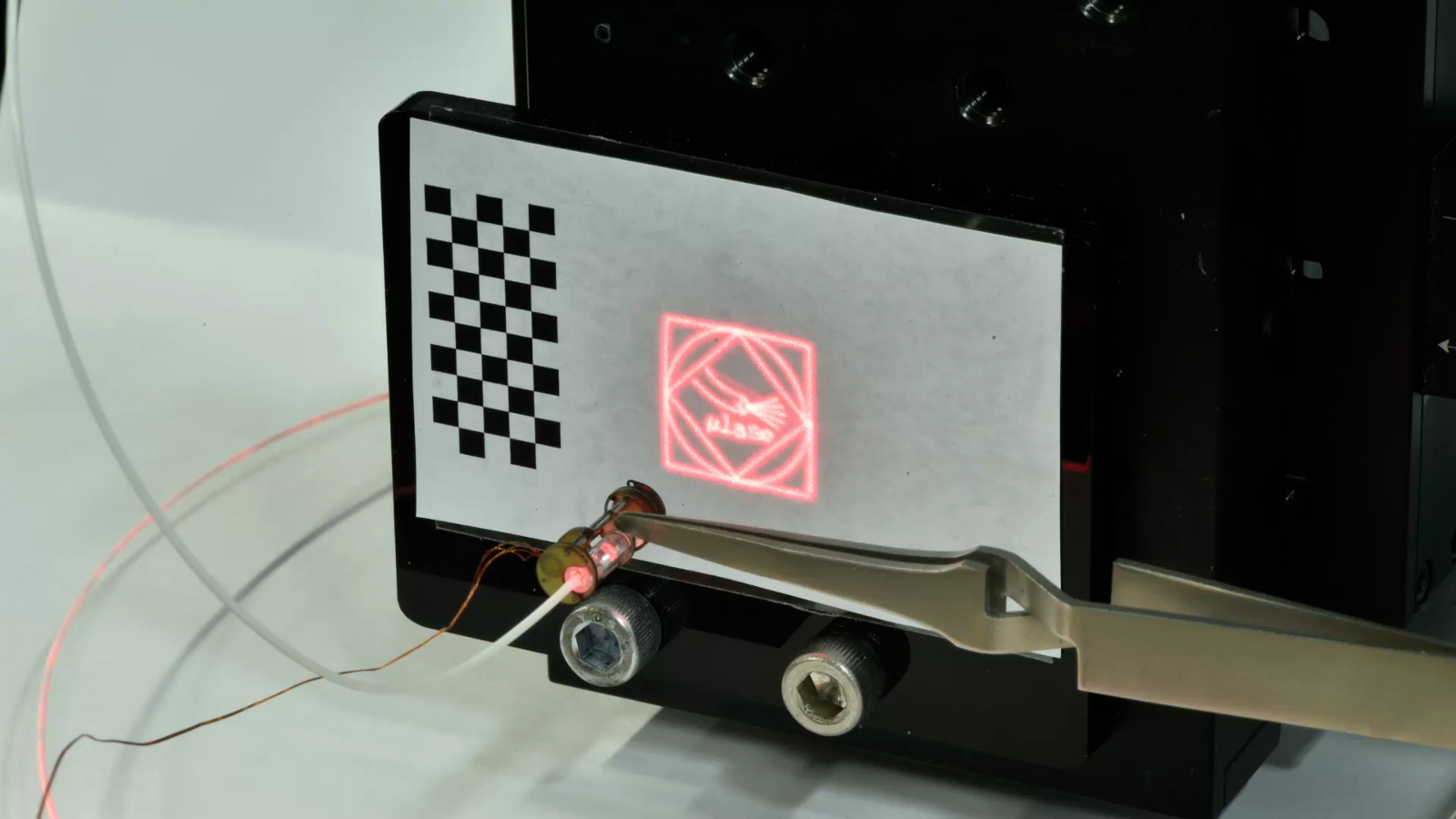

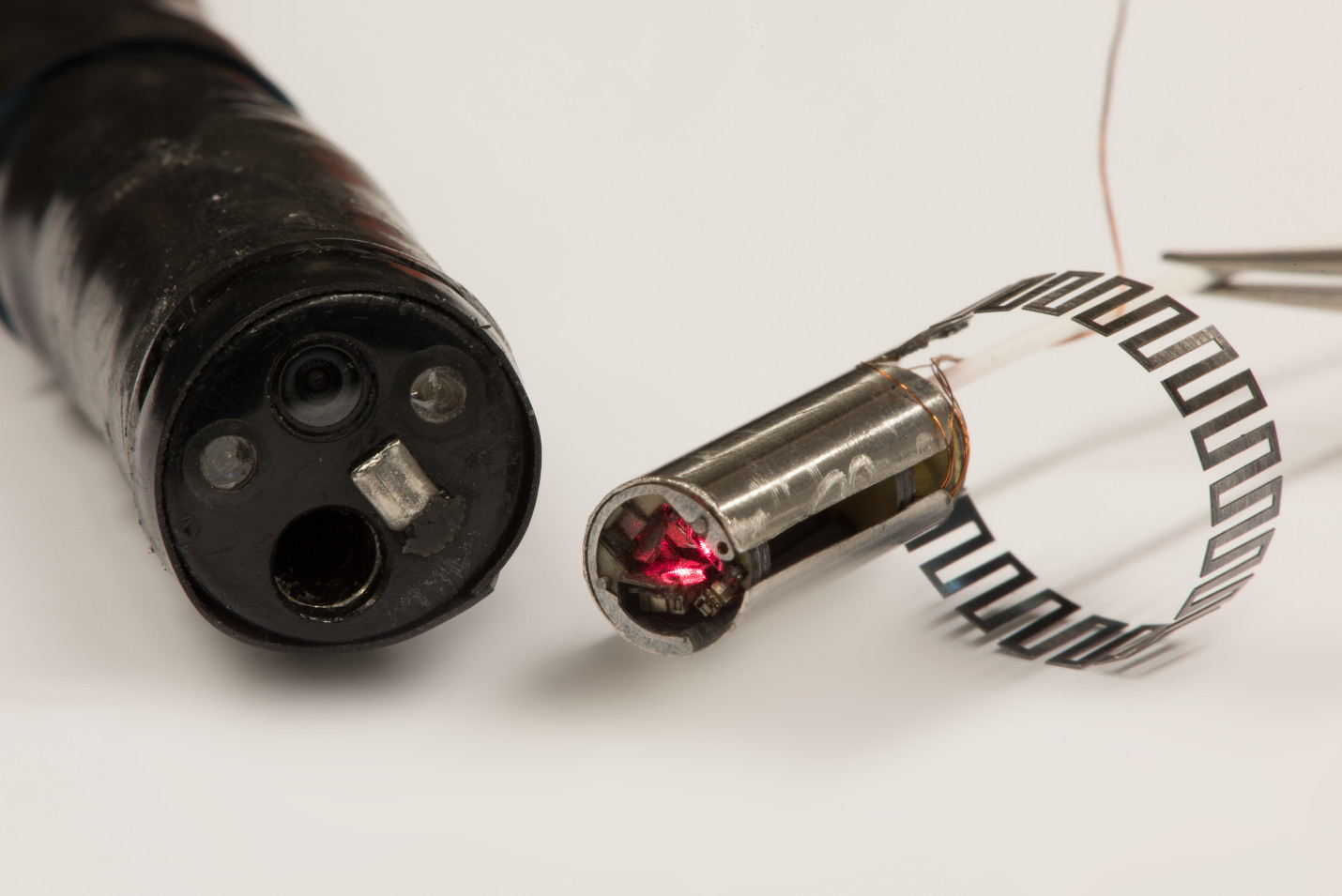

Now, robotic engineers led by Wyss Associate Faculty member Robert Wood, Ph.D., and postdoctoral fellow Peter York, Ph.D., at Harvard University’s Wyss Institute for Biologically Inspired Engineering and John A. Paulson School of Engineering and Applied Sciences (SEAS) have developed a laser-steering microrobot in a miniaturized 6×16 millimeter package that operates with high speed and precision, and can be integrated with existing endoscopic tools. Their approach, reported in Science Robotics, could help significantly enhance the capabilities of numerous minimally invasive surgeries.

“To enable minimally invasive laser surgery inside the body, we devised a microrobotic approach that allows us to precisely direct a laser beam at small target sites in complex patterns within an anatomical area of interest,” said York, the first and corresponding author on the study and a postdoctoral fellow on Wood’s microrobotics team. “With its large range of articulation, minimal footprint, and fast and precise action, this laser-steering end-effector has great potential to enhance surgical capabilities simply by being added to existing endoscopic devices in a plug-and-play fashion.”

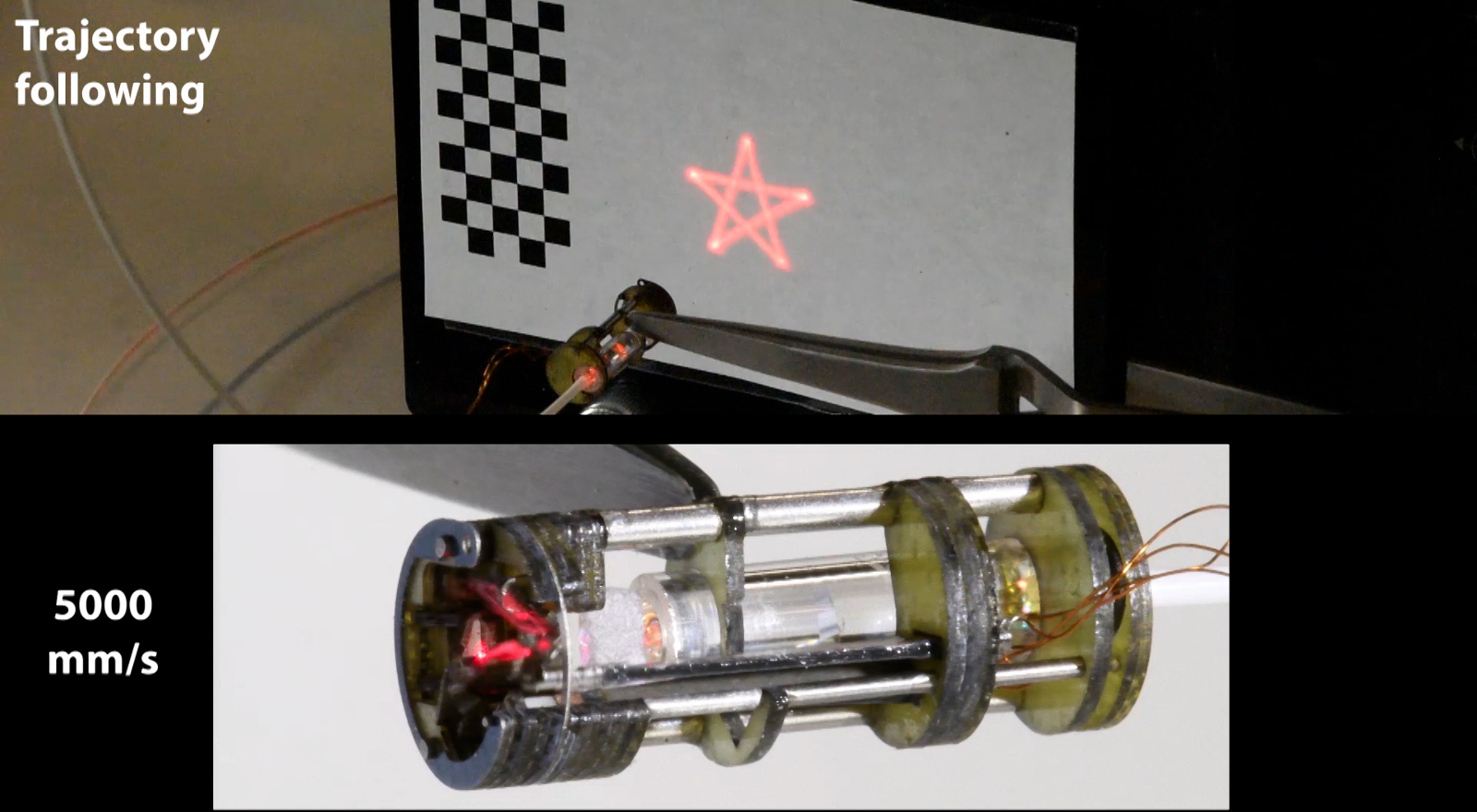

The team needed to overcome the basic challenges in design, actuation, and microfabrication of the optical steering mechanism that enables tight control over the laser beam after it has exited from an optical fiber. These challenges, along with the need for speed and precision, were exacerbated by the size constraints – the entire mechanism had to be housed in a cylindrical structure with roughly the diameter of a drinking straw to be useful for endoscopic procedures.

“We found that for steering and re-directing the laser beam, a configuration of three small mirrors that can rapidly rotate with respect to one another in a small ‘galvanometer’ design provided a sweet spot for our miniaturization effort,” said second author Rut Peña, a mechanical engineer with micro-manufacturing expertise in Wood’s group. “To get there, we leveraged methods from our microfabrication arsenal in which modular components are laminated step-wise onto a superstructure on the millimeter scale – a highly effective fabrication process when it comes to iterating on designs quickly in search of an optimum, and delivering a robust strategy for mass-manufacturing a successful product.”
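Why rotating small mirrors is an effective way to steer a beam can be seen from the law of reflection: tilting a mirror by an angle θ turns the reflected beam by 2θ. The sketch below shows this for a single flat mirror in two dimensions. It is a textbook simplification and not the team's three-mirror mechanism; the function names and the angles used are assumptions chosen for illustration.

```python
import math


def reflect(direction, normal):
    """Law of reflection: r = d - 2 (d . n) n, with n a unit normal."""
    dot = direction[0] * normal[0] + direction[1] * normal[1]
    return (direction[0] - 2 * dot * normal[0],
            direction[1] - 2 * dot * normal[1])


def mirror_normal(angle_deg):
    """Unit normal of a mirror whose normal makes angle_deg with the x axis."""
    a = math.radians(angle_deg)
    return (math.cos(a), math.sin(a))


def beam_angle_deg(vector):
    """Direction of a 2D vector, in degrees from the x axis."""
    return math.degrees(math.atan2(vector[1], vector[0]))


incoming = (-1.0, 0.0)  # beam travelling along -x toward the mirror

# Assumed example angles: a 45 degree mirror, then tilted by 5 degrees.
base = beam_angle_deg(reflect(incoming, mirror_normal(45.0)))
tilted = beam_angle_deg(reflect(incoming, mirror_normal(50.0)))
deflection = tilted - base

print(f"mirror tilt: 5.0 deg, beam deflection: {deflection:.1f} deg")
```

Because the beam turns twice as far as the mirror does, small and fast mirror rotations can sweep the laser over a comparatively large angular range.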

The team demonstrated that their laser-steering end-effector, miniaturized to a cylinder measuring merely 6 mm in diameter and 16 mm in length, was able to map out and follow complex trajectories in which multiple laser ablations could be performed with high speed, over a large range, and be repeated with high accuracy.

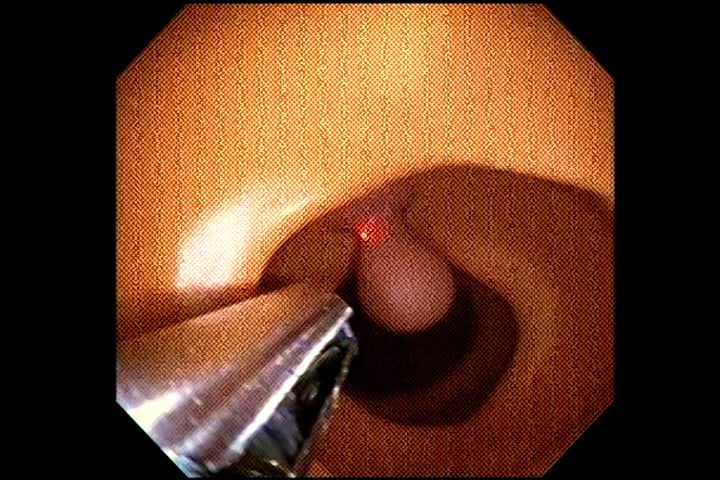

To further show that the device, when attached to the end of a common colonoscope, could be applied to a life-like endoscopic task, York and Peña, advised by Wyss Clinical Fellow Daniel Kent, M.D., successfully simulated the resection of polyps by navigating their device via tele-operation in a benchtop phantom tissue made of rubber. Kent also is a resident physician in general surgery at the Beth Israel Deaconess Medical Center.

“In this multi-disciplinary approach, we managed to harness our ability to rapidly prototype complex microrobotic mechanisms that we have developed over the past decade to provide clinicians with a non-disruptive solution that could allow them to advance the possibilities of minimally invasive surgeries in the human body with life-altering or potentially life-saving impact,” said senior author Wood, Ph.D., who also is the Charles River Professor of Engineering and Applied Sciences at SEAS.

Wood’s microrobotics team together with technology translation experts at the Wyss Institute have patented their approach and are now further de-risking their medical technology (MedTech) as an add-on for surgical endoscopes.

“The Wyss Institute’s focus on microrobotic devices and this new laser-steering device developed by Robert Wood’s team working across disciplines with clinicians and experts in translation will hopefully revolutionize how minimally invasive surgical procedures are carried out in a number of disease areas,” said Wyss Founding Director Donald Ingber, M.D., Ph.D., who is also the Judah Folkman Professor of Vascular Biology at Harvard Medical School and Boston Children’s Hospital, and Professor of Bioengineering at SEAS.

The study was funded by the National Science Foundation under award #CMMI-1830291, and the Wyss Institute for Biologically Inspired Engineering.