RealAnt: A low-cost quadruped robot that can learn via reinforcement learning

Coval introduces a vacuum chamber for gripping protective masks

Robot hands one step closer to human thanks to AI algorithms

Improved remote control of robots

Responding to the Market Need for Quality

Why soft skills could power the rise of robot leaders

How to use Docker containers and Docker Compose for Deep Learning applications

Robots Partnering With Humans: at FPT Industrial Factory 4.0 is Already a Reality Thanks to Collaboration With Comau

Ultra-sensitive and resilient sensor for soft robotic systems

By Leah Burrows / SEAS communications

Newly engineered slinky-like strain sensors for textiles and soft robotic systems survive the washing machine, cars and hammers.

Think about your favorite t-shirt, the one you’ve worn a hundred times, and all the abuse you’ve put it through. You’ve washed it more times than you can remember, spilled on it, stretched it, crumpled it up, maybe even singed it leaning over the stove once. We put our clothes through a lot, and if the smart textiles of the future are going to survive all that we throw at them, their components are going to need to be resilient.

Now, researchers from the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) and the Wyss Institute for Biologically Inspired Engineering have developed an ultra-sensitive, seriously resilient strain sensor that can be embedded in textiles and soft robotic systems. The research is published in Nature.

“Current soft strain gauges are really sensitive but also really fragile,” said Oluwaseun Araromi, Ph.D., a Research Associate in Materials Science and Mechanical Engineering at SEAS and the Wyss Institute and first author of the paper. “The problem is that we’re working in an oxymoronic paradigm: highly sensitive sensors are usually very fragile and very strong sensors aren’t usually very sensitive. So, we needed to find mechanisms that could give us enough of each property.”

In the end, the researchers created a design that looks and behaves very much like a Slinky.

“A Slinky is a solid cylinder of rigid metal but if you pattern it into this spiral shape, it becomes stretchable,” said Araromi. “That is essentially what we did here. We started with a rigid bulk material, in this case carbon fiber, and patterned it in such a way that the material becomes stretchable.”

The pattern is known as a serpentine meander, because its sharp ups and downs resemble the slithering of a snake. The patterned conductive carbon fibers are then sandwiched between two pre-strained elastic substrates. The overall electrical conductivity of the sensor changes as the edges of the patterned carbon fiber come out of contact with each other, similar to the way the individual spirals of a Slinky come out of contact with each other when you pull both ends. This process happens even with small amounts of strain, which is the key to the sensor’s high sensitivity.
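A strain sensor's sensitivity is commonly summarized by its gauge factor: the relative change in electrical resistance divided by the applied strain. The article gives no figures, so the minimal sketch below uses hypothetical readings, and the function name `gauge_factor` is an illustrative choice rather than anything from the paper.

```python
def gauge_factor(r0_ohm: float, r_ohm: float, strain: float) -> float:
    """Return the gauge factor: (R - R0) / R0 divided by the applied strain.

    r0_ohm -- resistance of the unstretched sensor, in ohms
    r_ohm  -- resistance while stretched, in ohms
    strain -- applied strain as a fraction (0.01 means 1% elongation)
    """
    if r0_ohm <= 0:
        raise ValueError("resting resistance must be positive")
    if strain == 0:
        raise ValueError("strain must be non-zero")
    relative_change = (r_ohm - r0_ohm) / r0_ohm
    return relative_change / strain


# Hypothetical readings, not measurements from the study:
# a sensor that goes from 100 ohms to 150 ohms at 1% strain.
example = gauge_factor(r0_ohm=100.0, r_ohm=150.0, strain=0.01)
print(f"gauge factor: {example:.1f}")
```

A larger gauge factor means a bigger resistance change for the same stretch, which is the sense in which losing contact between adjacent fiber edges at small strains makes the sensor highly sensitive.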

Unlike current highly sensitive stretchable sensors, which rely on exotic materials such as silicon or gold nanowires, this sensor doesn’t require special manufacturing techniques or even a clean room. It could be made using any conductive material.

The researchers tested the resiliency of the sensor by stabbing it with a scalpel, hitting it with a hammer, running it over with a car, and throwing it in a washing machine ten times. The sensor emerged from each test unscathed. To demonstrate its sensitivity, the researchers embedded the sensor in a fabric arm sleeve and asked a participant to make different gestures with their hand, including a fist, open palm, and pinching motion. The sensors detected the small changes in the subject’s forearm muscle through the fabric and a machine learning algorithm was able to successfully classify these gestures.
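The article does not say which machine learning algorithm was used. As a rough illustration of the idea only, the sketch below swaps in a nearest-centroid classifier over made-up three-channel sleeve readings; the feature values, gesture labels, and function names are all hypothetical.

```python
from math import dist
from statistics import mean


def fit_centroids(samples):
    """Average the training vectors for each gesture label.

    samples -- dict mapping a label to a list of equal-length feature tuples
    """
    return {
        label: tuple(mean(channel) for channel in zip(*vectors))
        for label, vectors in samples.items()
    }


def classify(centroids, reading):
    """Return the label whose centroid is closest (Euclidean) to the reading."""
    return min(centroids, key=lambda label: dist(centroids[label], reading))


# Hypothetical normalized readings from three sensor channels per gesture.
training = {
    "fist": [(0.9, 0.8, 0.7), (1.0, 0.9, 0.6)],
    "open_palm": [(0.1, 0.2, 0.1), (0.2, 0.1, 0.2)],
    "pinch": [(0.5, 0.1, 0.8), (0.6, 0.2, 0.9)],
}

centroids = fit_centroids(training)
print(classify(centroids, (0.95, 0.85, 0.65)))
```

Nearest-centroid is chosen here only because it fits in a few lines; a real system would need far more data and a properly validated model.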

“These features of resilience and the mechanical robustness put this sensor in a whole new camp,” said Araromi.

Such a sleeve could be used in everything from virtual reality simulations and sportswear to clinical diagnostics for neurodegenerative diseases like Parkinson’s Disease. Harvard’s Office of Technology Development has filed to protect the intellectual property associated with this project.

“The combination of high sensitivity and resilience are clear benefits of this type of sensor,” said senior author Robert Wood, Ph.D., Associate Faculty member at the Wyss Institute, and the Charles River Professor of Engineering and Applied Sciences at SEAS. “But another aspect that differentiates this technology is the low cost of the constituent materials and assembly methods. This will hopefully reduce the barriers to get this technology widespread in smart textiles and beyond.”

“We are currently exploring how this sensor can be integrated into apparel due to the intimate interface to the human body it provides,” said co-author and Wyss Associate Faculty member Conor Walsh, Ph.D., who is also the Paul A. Maeder Professor of Engineering and Applied Sciences at SEAS. “This will enable exciting new applications by being able to make biomechanical and physiological measurements throughout a person’s day, not possible with current approaches.”

The research was co-authored by Moritz A. Graule, Kristen L. Dorsey, Sam Castellanos, Jonathan R. Foster, Wen-Hao Hsu, Arthur E. Passy, James C. Weaver, Senior Staff Scientist at SEAS, and Joost J. Vlassak, the Abbott and James Lawrence Professor of Materials Engineering at SEAS. It was funded through the university’s strategic research alliance with Tata. The 6-year, $8.4M alliance was established in 2016 to advance Harvard innovation in fields including robotics, wearable technologies, and the internet of things (IoT).

#324: Embodied Interactions: from Robotics to Dance, with Kim Baraka

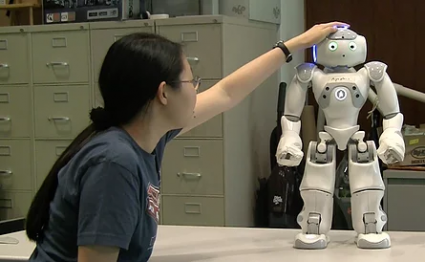

In this episode, our interviewer Lauren Klein speaks with Kim Baraka about his PhD research to enable robots to engage in social interactions, including interactions with children with Autism Spectrum Disorder. Baraka discusses how robots can plan their actions across multiple modalities when interacting with humans, and how models from psychology can inform this process. He also tells us about his passion for dance, and how dance may serve as a testbed for embodied intelligence within Human-Robot Interaction.

Kim Baraka

Kim Baraka is a postdoctoral researcher in the Socially Intelligent Machines Lab at the University of Texas at Austin, and an upcoming Assistant Professor in the Department of Computer Science at Vrije Universiteit Amsterdam, where he will be part of the Social Artificial Intelligence Group. Baraka recently graduated with a dual PhD in Robotics from Carnegie Mellon University (CMU) in Pittsburgh, USA, and the Instituto Superior Técnico (IST) in Lisbon, Portugal. At CMU, Baraka was part of the Robotics Institute and was advised by Prof. Manuela Veloso. At IST, he was part of the Group on AI for People and Society (GAIPS), and was advised by Prof. Francisco Melo.

Dr. Baraka’s research focuses on computational methods that inform artificial intelligence within Human-Robot Interaction. He develops approaches for knowledge transfer between humans and robots in order to support mutually beneficial relationships between the robot and human. Specifically, he has conducted research in assistive interactions, where the robot or human helps their partner to achieve a goal, and in teaching interactions. Baraka is also a contemporary dancer, with an interest in leveraging lessons from dance to inform advances in robotics, and vice versa.

PS. If you enjoy listening to experts in robotics and asking them questions, we recommend that you check out Talking Robotics. They have a virtual seminar on Dec 11 where they will be discussing how to conduct remote research for Human-Robot Interaction, a topic that is very relevant to researchers working from home due to COVID-19.

Inertial Navigation Solution in Delivery Robots & Drones

Women in Robotics Update: Girls Of Steel

Girls of Steel Robotics (featured 2014) was founded in 2010 at Carnegie Mellon University’s Field Robotics Center as FRC Team 3504. The organization now serves multiple FIRST robotics teams, offering STEM opportunities for people of all ages.

Since 2019, Girls of Steel has also organized FIRST Ladies, an online community for anyone involved in FIRST robotics programs who supports girls and women in STEM. Their mission statement reflects their commitment to empowering everyone for success in STEM: “Girls of Steel empowers everyone, especially women and girls, to believe they are capable of success in STEM.”

Girls of Steel celebrated their 10th year in FIRST robotics with a Virtual Gala in May 2020 featuring a panel of four Girls of Steel alumni showcasing a range of STEM opportunities. One is a PhD student in Robotics at CMU, two are working as engineers, and one is a computer science teacher. Girls of Steel are extremely proud of their alumni, of whom 80% are studying or working in STEM fields.

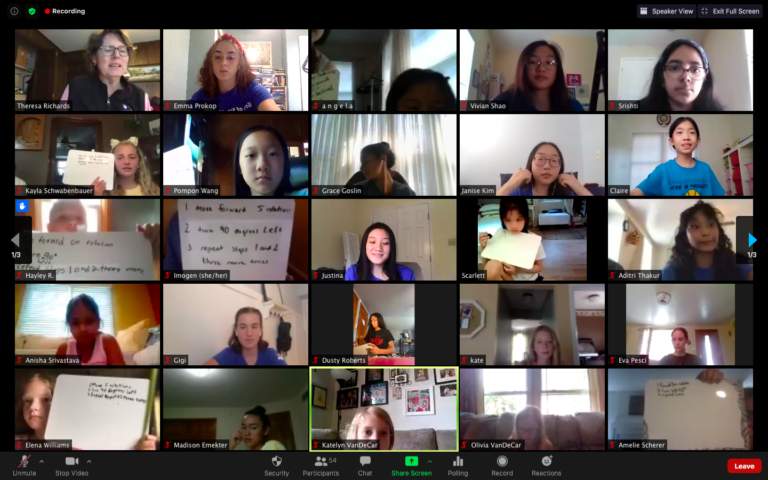

In August 2020, Girls of Steel successfully organized 3 weeks of virtual summer camps and were also able to run 4 teams in a virtual FIRST LEGO League program from September 2020. Girls of Steel also restructured their FIRST team and launched two new sub teams: Advocacy, which continues their efforts to advocate for after-school STEM programs, and Diversity, Equity, and Inclusion (DEI), which focuses on creating an inclusive environment that welcomes all Girls of Steel members. The DEI sub team manages a suggestion box where members can anonymously post ideas for team improvements.

In 2016, Robohub published a follow-up on the Girls of Steel and their achievements.

In 2017, Girls of Steel won the 2017 Engineering Inspiration award (Greater Pittsburgh Regional), which “celebrates outstanding success in advancing respect and appreciation for engineering within a team’s school and community.”

In 2018, Girls of Steel won the 2018 Regional Chairman’s Award (Greater Pittsburgh Regional), the most prestigious award at FIRST, which honors the team that best represents a model for other teams to emulate and best embodies the purpose and goals of FIRST.

In 2019, Girls of Steel won the 2019 Gracious Professionalism Award (Greater Pittsburgh Regional), which celebrates the outstanding demonstration of FIRST Core Values such as continuous Gracious Professionalism and working together both on and off the playing field.

And in 2020, Girls of Steel members Anna N. and Norah O. received 2020 Dean’s List Finalist Awards (Greater Pittsburgh Regional), which reflect their ability to lead their teams and communities to increased awareness for FIRST and its mission while achieving personal technical expertise and accomplishment.

Clearly, all the Girls of Steel over the last ten years are winners. Many women in robotics today point to an early experience in a robotics competition as the turning point when they decided that STEM, particularly robotics, was going to be in their future. We want to thank all the Girls of Steel for being such great role models, and for sharing the joy and fun of building robots with other girls and women. It’s working! (And it’s worth it!)

Want to keep reading? There are 180 more stories on our 2013 to 2020 lists. Why not nominate someone for inclusion next year?

And we encourage #womeninrobotics and women who’d like to work in robotics to join our professional network at http://womeninrobotics.org