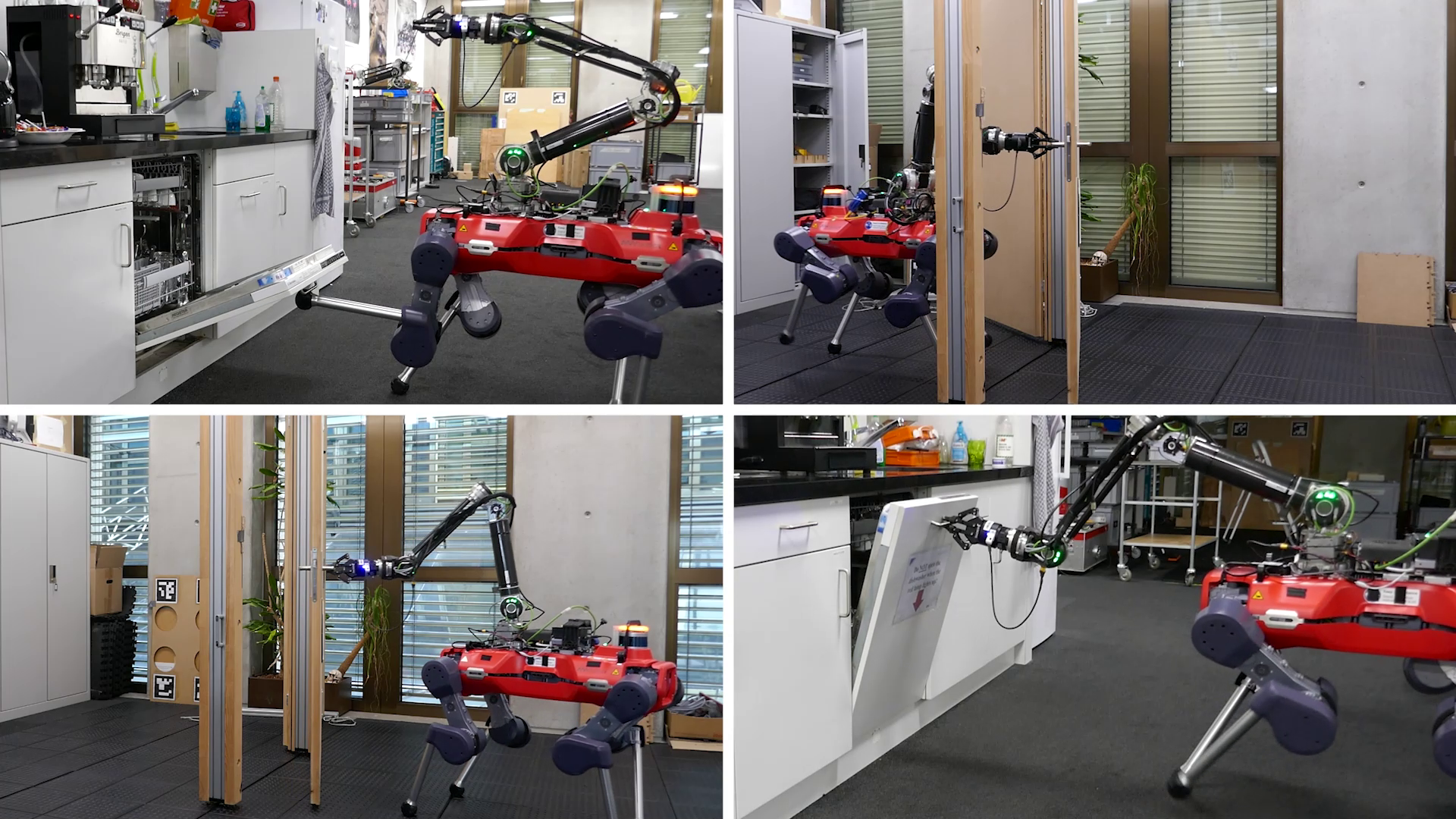

Picture from paper “Versatile multicontact planning and control for legged loco-manipulation“. © American Association for the Advancement of Science

We had the chance to interview Jean Pierre Sleiman, author of the paper “Versatile multicontact planning and control for legged loco-manipulation”, recently published in Science Robotics.

What is the topic of the research in your paper?

The research topic focuses on developing a model-based planning and control architecture that enables legged mobile manipulators to tackle diverse loco-manipulation problems (i.e., manipulation problems inherently involving a locomotion element). Our study specifically targeted tasks that would require multiple contact interactions to be solved, rather than pick-and-place applications. To ensure our approach is not limited to simulation environments, we applied it to solve real-world tasks with a legged system consisting of the quadrupedal platform ANYmal equipped with DynaArm, a custom-built 6-DoF robotic arm.

Could you tell us about the implications of your research and why it is an interesting area for study?

The research was driven by the desire to make such robots, namely legged mobile manipulators, capable of solving a variety of real-world tasks, such as traversing doors, opening/closing dishwashers, manipulating valves in an industrial setting, and so forth. A standard approach would have been to tackle each task individually and independently by dedicating a substantial amount of engineering effort to handcraft the desired behaviors:

This is typically achieved through the use of hard-coded state-machines in which the designer specifies a sequence of sub-goals (e.g., grasp the door handle, open the door to a desired angle, hold the door with one of the feet, move the arm to the other side of the door, pass through the door while closing it, etc.). Alternatively, a human expert may demonstrate how to solve the task by teleoperating the robot, recording its motion, and having the robot learn to mimic the recorded behavior.

However, this process is very slow, tedious, and prone to engineering design errors. To avoid this burden for every new task, the research opted for a more structured approach in the form of a single planner that can automatically discover the necessary behaviors for a wide range of loco-manipulation tasks, without requiring any detailed guidance for any of them.

Could you explain your methodology?

The key insight underlying our methodology was that all of the loco-manipulation tasks that we aimed to solve can be modeled as Task and Motion Planning (TAMP) problems. TAMP is a well-established framework that has been primarily used to solve sequential manipulation problems where the robot already possesses a set of primitive skills (e.g., pick object, place object, move to object, throw object, etc.), but still has to properly integrate them to solve more complex long-horizon tasks.

This perspective enabled us to devise a single bi-level optimization formulation that can encompass all our tasks, and exploit domain-specific knowledge, rather than task-specific knowledge. By combining this with the well-established strengths of different planning techniques (trajectory optimization, informed graph search, and sampling-based planning), we were able to achieve an effective search strategy that solves the optimization problem.

The main technical novelty in our work lies in the Offline Multi-Contact Planning Module, depicted in Module B of Figure 1 in the paper. Its overall setup can be summarized as follows: Starting from a user-defined set of robot end-effectors (e.g., front left foot, front right foot, gripper, etc.) and object affordances (these describe where the robot can interact with the object), a discrete state that captures the combination of all contact pairings is introduced. Given a start and goal state (e.g., the robot should end up behind the door), the multi-contact planner then solves a single-query problem by incrementally growing a tree via a bi-level search over feasible contact modes jointly with continuous robot-object trajectories. The resulting plan is enhanced with a single long-horizon trajectory optimization over the discovered contact sequence.

What were your main findings?

We found that our planning framework was able to rapidly discover complex multi- contact plans for diverse loco-manipulation tasks, despite having provided it with minimal guidance. For example, for the door-traversal scenario, we specify the door affordances (i.e., the handle, back surface, and front surface), and only provide a sparse objective by simply asking the robot to end up behind the door. Additionally, we found that the generated behaviors are physically consistent and can be reliably executed with a real legged mobile manipulator.

What further work are you planning in this area?

We see the presented framework as a stepping stone toward developing a fully autonomous loco-manipulation pipeline. However, we see some limitations that we aim to address in future work. These limitations are primarily connected to the task-execution phase, where tracking behaviors generated on the basis of pre-modeled environments is only viable under the assumption of a reasonably accurate description, which is not always straightforward to define.

Robustness to modeling mismatches can be greatly improved by complementing our planner with data-driven techniques, such as deep reinforcement learning (DRL). So one interesting direction for future work would be to guide the training of a robust DRL policy using reliable expert demonstrations that can be rapidly generated by our loco-manipulation planner to solve a set of challenging tasks with minimal reward-engineering.

About the author

|

Jean-Pierre Sleiman received the B.E. degree in mechanical engineering from the American University of Beirut (AUB), Lebanon, in 2016, and the M.S. degree in automation and control from Politecnico Di Milano, Italy, in 2018. He is currently a Ph.D. candidate at the Robotic Systems Lab (RSL), ETH Zurich, Switzerland. His current research interests include optimization-based planning and control for legged mobile manipulation. |