Reprogrammable materials selectively self-assemble

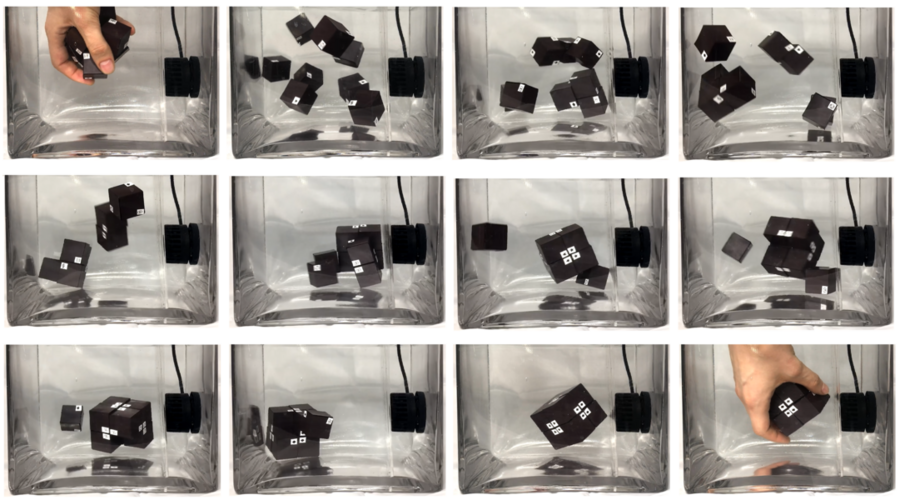

With just a random disturbance that energizes the cubes, they selectively self-assemble into a larger block. Photos courtesy of MIT CSAIL.

By Rachel Gordon | MIT CSAIL

While automated manufacturing is ubiquitous today, it was once a nascent field birthed by inventors such as Oliver Evans, who is credited with creating the first fully automated industrial process, in a flour mill he built and gradually automated in the late 1700s. The processes for creating automated structures or machines are still very top-down, requiring humans, factories, or robots to do the assembling and making.

However, the way nature does assembly is ubiquitously bottom-up; animals and plants are self-assembled at a cellular level, relying on proteins to self-fold into target geometries that encode all the different functions that keep us ticking. For a more bio-inspired, bottom-up approach to assembly, then, human-architected materials need to do better on their own. Making them scalable, selective, and reprogrammable in a way that could mimic nature’s versatility means some teething problems, though.

Now, researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have attempted to get over these growing pains with a new method: a magnetically reprogrammable material that they coat onto different parts, such as robotic cubes, so the parts can self-assemble. Key to their process is a way to make these magnetic programs highly selective about what they connect with, enabling robust self-assembly into specific shapes and chosen configurations.

The soft magnetic coating the researchers used, sourced from inexpensive refrigerator magnets, endows each face of every cube they built with a magnetic signature. The signatures ensure that each face attracts only one other face among all the other cubes, in both translation and rotation. All of the cubes, which cost about 23 cents, can be magnetically programmed at a very fine resolution. Once they’re tossed into a water tank (the team used eight cubes for a demo) and subjected to a totally random disturbance (you could even just shake them in a box), they’ll bump into each other. If they meet the wrong mate, they’ll drop off, but if they find their suitable mate, they’ll attach.

An analogy would be to think of a set of furniture parts that you need to assemble into a chair. Traditionally, you’d need a set of instructions to manually assemble parts into a chair (a top-down approach), but using the researchers’ method, these same parts, once programmed magnetically, would self-assemble into the chair using just a random disturbance that makes them collide. Without the signatures they generate, however, the chair would assemble with its legs in the wrong places.

“This work is a step forward in terms of the resolution, cost, and efficacy with which we can self-assemble particular structures,” says Martin Nisser, a PhD student in MIT’s Department of Electrical Engineering and Computer Science (EECS), an affiliate of CSAIL, and the lead author on a new paper about the system. “Prior work in self-assembly has typically required individual parts to be geometrically dissimilar, just like puzzle pieces, which requires individual fabrication of all the parts. Using magnetic programs, however, we can bulk-manufacture homogeneous parts and program them to acquire specific target structures, and importantly, reprogram them to acquire new shapes later on without having to refabricate the parts anew.”

Using the team’s magnetic plotting machine, one can stick a cube back in the plotter and reprogram it. Every time the plotter touches the material, it creates either a “north”- or “south”-oriented magnetic pixel on the cube’s soft magnetic coating, letting the cubes be repurposed to assemble new target shapes when required. Before plotting, a search algorithm checks each signature for mutual compatibility with all previously programmed signatures to ensure they are selective enough for successful self-assembly.
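
To make the idea of a compatibility search concrete, here is a minimal Python sketch. It is not the team’s algorithm: it assumes each signature is flattened into a tuple of +1 (north) and -1 (south) pixels, ignores rotation and translation, and simply enumerates every pattern for a tiny 2x2 face. The names `net_interaction`, `is_compatible`, `search_signatures`, and the `tolerance` parameter are hypothetical. A candidate is accepted only if it neither strongly matches nor strongly opposes any signature already accepted.

```python
from itertools import product
from typing import List, Tuple

Signature = Tuple[int, ...]  # flattened pixels: +1 = north, -1 = south


def net_interaction(a: Signature, b: Signature) -> int:
    """Matching pixels minus opposing pixels between two flattened signatures."""
    return sum(x * y for x, y in zip(a, b))


def is_compatible(candidate: Signature, accepted: List[Signature],
                  tolerance: int = 0) -> bool:
    """True if the candidate is (near-)neutral toward every accepted signature."""
    return all(abs(net_interaction(candidate, s)) <= tolerance for s in accepted)


def search_signatures(num_pixels: int, wanted: int,
                      tolerance: int = 0) -> List[Signature]:
    """Greedy brute-force search (assumed strategy, for illustration only)."""
    accepted: List[Signature] = []
    for candidate in product((1, -1), repeat=num_pixels):
        if is_compatible(candidate, accepted, tolerance):
            accepted.append(candidate)
            if len(accepted) == wanted:
                break
    return accepted


# Four mutually neutral signatures for a 2x2 face (4 pixels).
library = search_signatures(num_pixels=4, wanted=4)
for sig in library:
    print(sig)
```

Because the loop walks through all 2^n pixel patterns, a brute-force check like this only suits very small grids.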

With self-assembly, you can go the passive or active route. With active assembly, robotic parts modulate their behavior online to locate, position, and bond to their neighbors, and each module needs embedded hardware for the computation, sensing, and actuation that self-assembly requires. What’s more, a human or computer is needed in the loop to actively control the actuators embedded in each part to make it move. While active assembly has been successful in reconfiguring a variety of robotic systems, the cost and complexity of the electronics and actuators have been a significant barrier to scaling self-assembling hardware up in numbers and down in size.

With passive methods like these researchers’, there’s no need for embedded actuation and control. Once programmed and set free under a random disturbance that gives them the energy to collide with one another, the parts are on their own to shapeshift, without any guiding intelligence.

If you want a structure built from hundreds or thousands of parts, like a ladder or bridge, for example, you wouldn’t want to manufacture every one of them as a unique part, or to have to re-manufacture them when you need a second structure assembled.

The trick the team used toward this goal lies in the mathematical description of the magnetic signatures, which describes each signature as a 2D matrix of pixels. These matrices ensure that any magnetically programmed parts that shouldn’t connect will interact to produce just as many pixels in attraction as those in repulsion, letting them remain agnostic to all non-mating parts in both translation and rotation.
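
The balance between attraction and repulsion can be illustrated with a small Python sketch. It is a toy model under stated assumptions, not the paper’s formulation: each signature is a square grid of +1 (north) and -1 (south) pixels, opposite poles attract, and only the four aligned rotations are checked, not translations. The 4x4 patterns `face` and `stranger` and all function names are hand-picked for illustration.

```python
from typing import List

Signature = List[List[int]]  # +1 = north pixel, -1 = south pixel


def rotate90(sig: Signature) -> Signature:
    """Rotate a square signature 90 degrees clockwise."""
    return [list(row) for row in zip(*sig[::-1])]


def mirror(sig: Signature) -> Signature:
    """Flip left to right, as a face appears when turned to meet another."""
    return [row[::-1] for row in sig]


def mate_of(sig: Signature) -> Signature:
    """Signature whose pixels all oppose `sig` when the two faces meet."""
    return [[-p for p in row] for row in mirror(sig)]


def net_attraction(a: Signature, b: Signature) -> int:
    """Attracting pixel pairs minus repelling pairs for two aligned faces."""
    facing = mirror(b)
    # Opposite poles (product -1) attract; like poles (product +1) repel.
    total = sum(x * y for row_a, row_b in zip(a, facing)
                for x, y in zip(row_a, row_b))
    return -total


def scores_over_rotations(a: Signature, b: Signature) -> List[int]:
    """Net attraction of `b` against `a` at 0, 90, 180, and 270 degrees."""
    scores = []
    for _ in range(4):
        scores.append(net_attraction(a, b))
        b = rotate90(b)
    return scores


face = [[1, -1, -1, 1], [-1, 1, 1, -1], [1, -1, -1, 1], [-1, 1, 1, -1]]
stranger = [[1, 1, -1, -1]] * 4  # a non-mating pattern

print(scores_over_rotations(face, mate_of(face)))  # [16, 0, -16, 0]
print(scores_over_rotations(face, stranger))       # [0, 0, 0, 0]
```

In this toy case the intended mate attracts with all 16 pixels in exactly one orientation, while the non-mating pattern nets zero at every rotation, which is the "agnostic" behavior described above.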

While the system is currently good enough to do self-assembly using a handful of cubes, the team wants to further develop the mathematical descriptions of the signatures. In particular, they want to leverage design heuristics that would enable assembly with very large numbers of cubes, while avoiding computationally expensive search algorithms.

“Self-assembly processes are ubiquitous in nature, leading to the incredibly complex and beautiful life we see all around us,” says Hod Lipson, the James and Sally Scapa Professor of Innovation at Columbia University, who was not involved in the paper. “But the underpinnings of self-assembly have baffled engineers: How do two proteins destined to join find each other in a soup of billions of other proteins? Lacking the answer, we have been able to self-assemble only relatively simple structures so far, and resort to top-down manufacturing for the rest. This paper goes a long way to answer this question, proposing a new way in which self-assembling building blocks can find each other. Hopefully, this will allow us to begin climbing the ladder of self-assembled complexity.”

Nisser wrote the paper alongside recent EECS graduates Yashaswini Makaram ’21 and Faraz Faruqi SM ’22, both of whom are former CSAIL affiliates; Ryo Suzuki, assistant professor of computer science at the University of Calgary; and MIT associate professor of EECS Stefanie Mueller, who is a CSAIL affiliate. They will present their research at the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2022).