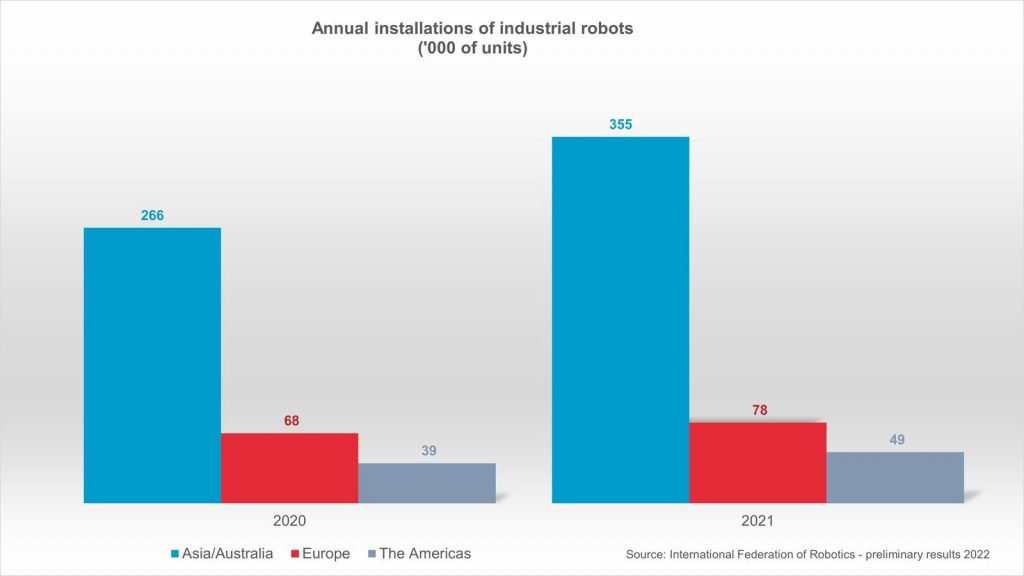

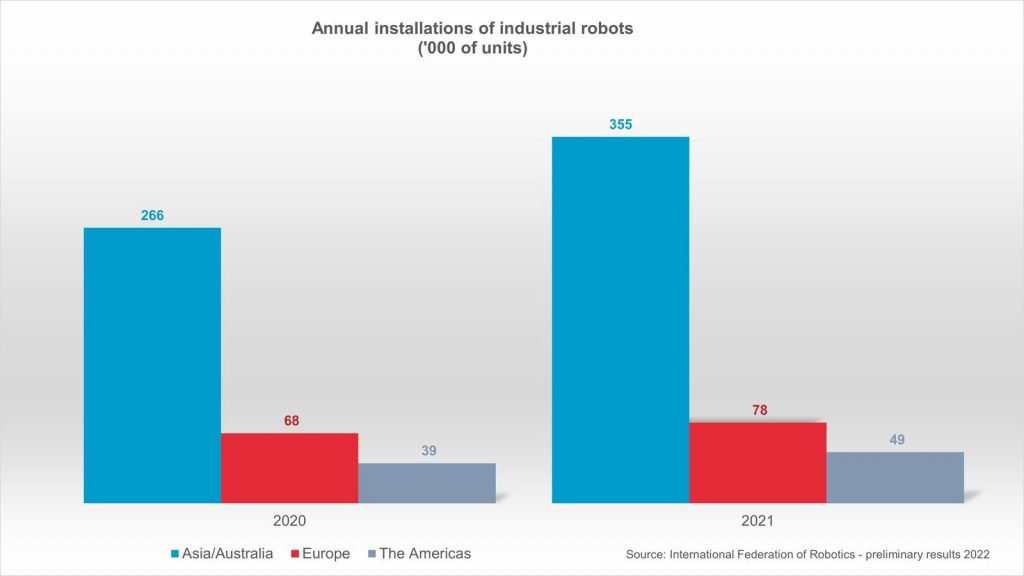

Sales of industrial robots have recovered strongly: a new record of 486,800 units was shipped globally, an increase of 27% compared to the previous year. Asia/Australia saw the largest growth in demand: installations were up 33%, reaching 354,500 units. The Americas increased by 27%, with 49,400 units sold. Europe saw double-digit growth of 15%, with 78,000 units installed. These preliminary results for 2021 have been published by the International Federation of Robotics.

Preliminary annual installations 2021 compared to 2020 by region – source: International Federation of Robotics

“Robot installations around the world recovered strongly and make 2021 the most successful year ever for the robotics industry,” says Milton Guerry, President of the International Federation of Robotics (IFR). “Due to the ongoing trend towards automation and continued technological innovation, demand reached high levels across industries. In 2021, even the pre-pandemic record of 422,000 installations per year in 2018 was exceeded.”

Strong demand across industries

In 2021, the main growth driver was the electronics industry (132,000 installations, +21%), which had already surpassed the automotive industry (109,000 installations, +37%) as the largest customer of industrial robots in 2020. Metal and machinery (57,000 installations, +38%) followed, ahead of plastics and chemical products (22,500 installations, +21%) and food and beverages (15,300 installations, +24%).

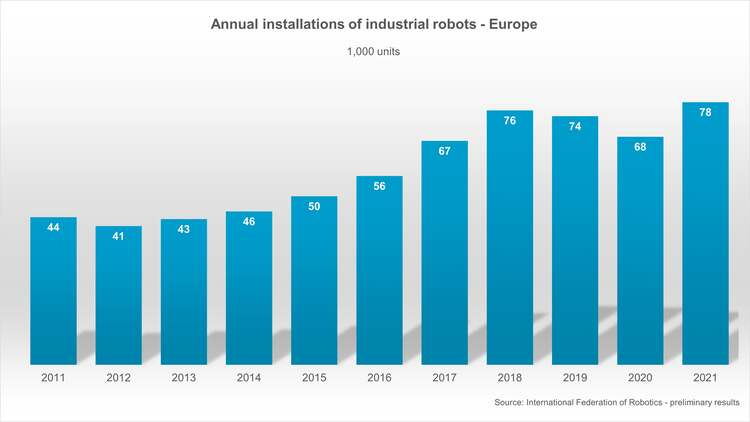

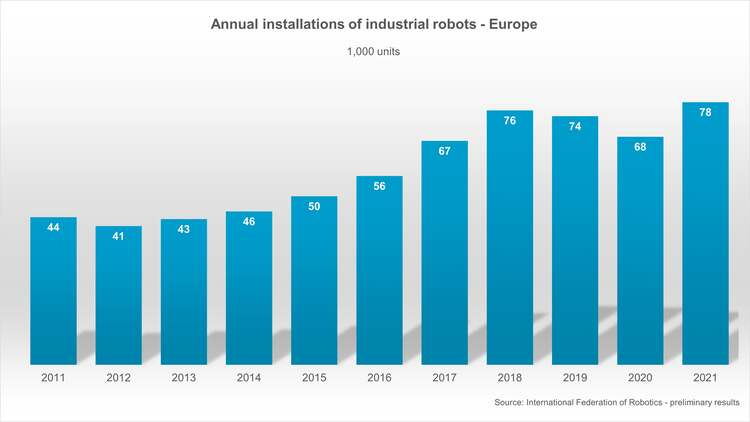

Europe recovered

In 2021, industrial robot installations in Europe recovered after two years of decline, exceeding the peak of 75,600 units in 2018. Demand from the most important adopter, the automotive industry, held steady at a high level (19,300 installations, ±0%). Demand from metal and machinery rose strongly (15,500 installations, +50%), followed by plastics and chemical products (7,700 installations, +30%).

The Americas recovered

In the Americas, the number of industrial robot installations reached the second-best result ever, surpassed only by the record year 2018 (55,200 installations). In the largest American market, the United States, 33,800 units were shipped, which represents a 68% share of installations in the Americas.

Asia remains world’s largest market

Asia remains the world’s largest industrial robot market: 73% of all newly deployed robots in 2021 were installed in Asia. A total of 354,500 units were shipped in 2021, up 33% compared to 2020. The electronics industry adopted by far the most units (123,800 installations, +22%), followed by strong demand from the automotive industry (72,600 installations, +57%) and the metal and machinery industry (36,400 installations, +29%).

Video: “Sustainable! How robots enable a green future”

At the automatica 2022 trade fair in Munich, robotics industry leaders discussed how robotics and automation enable sustainable strategies and a green future. A videocast by IFR will feature the event with key statements from executives of ABB, MERCEDES BENZ, STÄUBLI, VDMA and the EUROPEAN COMMISSION. A summary will be available soon on our YouTube channel.