Standards, Guidelines & Industry Best Practices for Industrial & Collaborative Robots

#IROS2020 Plenary and Keynote talks focus series #5: Nikolaus Correll & Cynthia Breazeal

As part of our series showcasing the plenary and keynote talks from the IEEE/RSJ IROS2020 (International Conference on Intelligent Robots and Systems), this week we bring you Nikolaus Correll (Associate Professor at the University of Colorado at Boulder) and Cynthia Breazeal (Professor of Media Arts and Sciences at MIT). Nikolaus’ talk focuses on robot manipulation, while Cynthia’s talk is about social robots.

Prof. Nikolaus Correll – Robots Getting a Grip on General Manipulation

Bio: Nikolaus Correll is an Associate Professor at the University of Colorado at Boulder. He obtained his MS in Electrical Engineering from ETH Zürich and his PhD in Computer Science from EPF Lausanne in 2007. From 2007 to 2009 he was a postdoc at MIT’s Computer Science and Artificial Intelligence Lab (CSAIL). Nikolaus is the recipient of an NSF CAREER award, a NASA Early Career Faculty Fellowship and a Provost Faculty Achievement Award. In 2016, he founded Robotic Materials Inc. to commercialize robotic manipulation technology.

Prof. Cynthia Breazeal – Living with Social Robots: from Research to Commercialization and Back

Abstract: Social robots are designed to interact with people in an interpersonal way, engaging and supporting collaborative social and emotive behavior for beneficial outcomes. We develop adaptive algorithmic capabilities and deploy multitudes of cloud-connected robots in schools, homes, and other living facilities to support long-term interpersonal engagement and personalization of specific interventions. We examine the impact of the robot’s social embodiment, emotive and relational attributes, and personalization capabilities on sustaining people’s engagement, improving learning, impacting behavior, and shaping attitudes to help people achieve long-term goals. I will also highlight challenges and opportunities in commercializing social robot technologies for impact at scale. At a time when citizens are beginning to live with intelligent machines on a daily basis, we have the opportunity to explore, develop, study, and assess humanistic design principles to support and promote human flourishing at all ages and stages.

Bio: Cynthia Breazeal is a Professor at the MIT Media Lab, where she founded and directs the Personal Robots Group. She is also Associate Director of the Media Lab in charge of new strategic initiatives, and she is spearheading MIT’s K-12 education initiative on AI in collaboration with the Media Lab, Open Learning and the Schwarzman College of Computing. She is recognized as a pioneer in the field of social robotics and human-robot interaction and is an AAAI Fellow. She is a recipient of awards from the National Academy of Engineering and the National Design Awards, as well as Technology Review’s TR100/35 Award and the George R. Stibitz Computer & Communications Pioneer Award. She has also been recognized as an award-winning entrepreneur, designer and innovator by CES, Fast Company, Entrepreneur Magazine, Forbes, and Core77, to name a few. Her robots have been recognized in TIME magazine’s Best Inventions of 2008 and 2017, when her award-winning Jibo robot was featured on the cover. She received her doctorate in Electrical Engineering and Computer Science from MIT in 2000.

What is the future of Agri-Food Robotics in the EU and beyond?

By Kees Lokhorst, Project Coordinator of the agROBOfood project

Over the last decades, robots have been transforming from simple machines into cognitive collaborators. A long distance has been covered, but challenges and opportunities still lie ahead. These were the main topics of discussion at the agROBOfood event ‘Visioning the future of agri-food robotics’, held by a panel of experts in the domain.

The topic was introduced by two inspiring presentations. The first was by Jérôme Bandry, who shared the vision of CEMA (European Agricultural Machinery). According to CEMA, digitalization, precision agriculture and robotics will be part of, or even drive, the reshaping of agricultural systems to address societal challenges. Industrial leadership will be needed to tackle challenges around investment in data infrastructure, legislation, and skills development. However, the biggest challenge is to bridge the gap between the development of innovative robotic solutions and their successful uptake in practice.

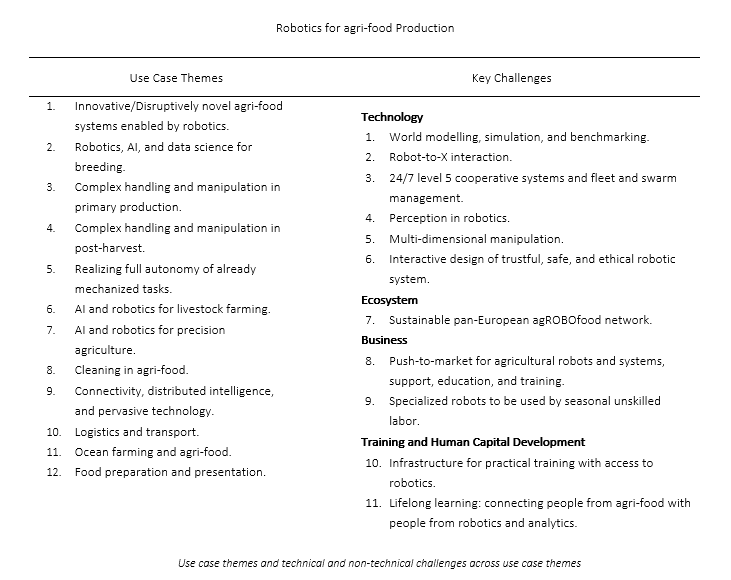

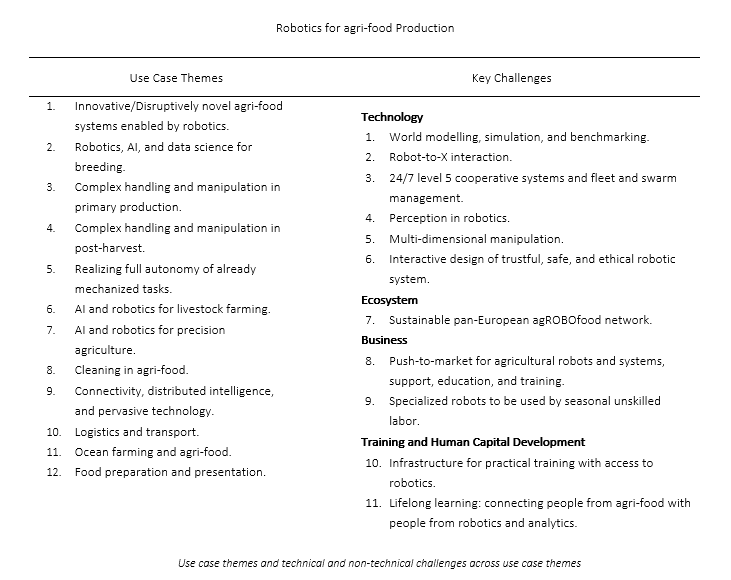

The second presentation, by Dr. Slawomir Sander, Chair of the EU-Robotics AISBL topic group on agriculture robotics, showcased the opportunities and challenges for the community involved in European robotics for agri-food production. These are set out in the strategic agenda developed by EU-Robotics together with agROBOfood, an H2020 project that aims to build a pan-European network of Digital Innovation Hubs in the agri-food sector. The strategic agenda was intended to provide:

- a contribution to the development of the European Strategic Research, Innovation and Deployment Agenda (SRIDA) of the AI, Data and Robotics PPP;
- a broad and coherent view of the needs, applications, and key challenges for robotics in the agri-food production domain, in combination with data and AI;
- a 360° stakeholder view addressing technology, business, and ecosystem development;
- a basis for the vision, alignment, and development of programs, projects, groups, and consortia that aim to contribute to the development and deployment of robotics, AI, and data in the agri-food production network.

The agenda’s vision is well encapsulated in the following sentence: “Future agri-food production networks will be flexible, responsive, and transparent, providing sufficient high-quality and healthy products and services for everyone at a reasonable cost while preserving resources, biodiversity, climate, environment, and cultural differences”. Its mission is expressed as follows: “Stimulate the development and integration of innovative robotic, AI, and Data solutions that can successfully be used in flexible, responsive, and transparent agri-food production networks”.

The current release of the agenda focuses mainly on food production. As a working document, however, it will serve as a basis for further elaboration to encompass the whole agri-food domain in its full breadth and depth. With an emphasis on robotics and, to a lesser extent, on AI and data, it provides a vision and mission for what robotics should contribute and how to facilitate the transition to more sustainable agri-food production. Key challenges in terms of technology, ecosystem, and market are derived from 12 use-case themes within the agri-food production domain (table).

In case of questions, please contact Kees.lokhorst@wur.nl or visit https://agrobofood.eu/project/ and https://sparc-robotics-portal.eu/web/agriculture/home

#IROS2020 Real Roboticist focus series #4: Peter Corke (Learning)

In this fourth release of Real Roboticist, our original series for IEEE/RSJ IROS 2020 (International Conference on Intelligent Robots and Systems), we bring you Peter Corke. He is a distinguished professor of robotic vision at Queensland University of Technology, Director of the QUT Centre for Robotics, and Director of the ARC Centre of Excellence for Robotic Vision.

If you’ve ever taken a robotics or computer vision course, you might have read a classic book: Peter Corke’s Robotics, Vision and Control. Peter has also released several open-source robotics resources and free courses, all available on his website. If you’d like to hear more about his career in robotics and education, the main challenges he faced and what he learnt from them, and his advice for current robotics students, check out his video below. Have fun!

2021 IEEE RAS Publications best paper awards

The IEEE Robotics and Automation Society has recently released the list of winners of their best paper awards. Below you can see the list and access the publications. Congratulations to all winners!

IEEE Transactions on Robotics King-Sun Fu Memorial Best Paper Award

- TossingBot: Learning to Throw Arbitrary Objects With Residual Physics. Andy Zeng, Shuran Song, Johnny Lee, Alberto Rodriguez and Thomas Funkhouser. IEEE Transactions on Robotics; vol. 36, no. 4, pp. 1307-1319, August 2020

IEEE Transactions on Automation Science and Engineering Best Paper Award

- A Recursive Watermark Method for Hard Real-Time Industrial Control Systems Cyber Resilience Enhancement. Zhen Song, Antun Skuric, and Kun Ji. IEEE Transactions on Automation Science and Engineering; vol. 17, no. 2, pp. 1030-1043, 2020

IEEE Transactions on Automation Science and Engineering Best New Application Paper Award

- Robotic Batch Somatic Cell Nuclear Transfer Based on Microfluidic Groove. Yaowei Liu, Xuefeng Wang, Qili Zhao, Xin Zhao, and Mingzhu Sun. IEEE Transactions on Automation Science and Engineering; vol. 17, no. 4, pp. 2097-2106, 2020

IEEE Robotics and Automation Letters Best Paper Award

- LIT: Light-Field Inference of Transparency for Refractive Object Localization. Zheming Zhou, Xiaotong Chen, and Odest Chadwicke Jenkins. IEEE Robotics and Automation Letters; vol. 5, no. 3, pp. 4548-4555, July 2020

- EGAD! An Evolved Grasping Analysis Dataset for Diversity and Reproducibility in Robotic Manipulation. Douglas Morrison, Peter Corke, and Jürgen Leitner. IEEE Robotics and Automation Letters; vol. 5, no. 3, pp. 4368-4375, July 2020

- Inverted and Inclined Climbing Using Capillary Adhesion in a Quadrupedal Insect-Scale Robot. Yufeng Chen, Neel Doshi, and Robert J. Wood. IEEE Robotics and Automation Letters; vol. 5, no. 3, pp. 4820-4827, July 2020

- Electronics-Free Logic Circuits for Localized Feedback Control of Multi-Actuator Soft Robots. Ke Xu and Néstor O. Pérez-Arancibia. IEEE Robotics and Automation Letters; vol. 5, no. 3, pp. 3990-3997, July 2020

- Quadrupedal Locomotion on Uneven Terrain With Sensorized Feet. Giorgio Valsecchi, Ruben Grandia, and Marco Hutter. IEEE Robotics and Automation Letters; vol. 5, no. 2, pp. 1548-1555, April 2020

IEEE Robotics and Automation Magazine Best Paper Award

- Vine Robots: Design, Teleoperation, and Deployment for Navigation and Exploration. Margaret M. Coad, Laura H. Blumenschein, Sadie Cutler, Javier A. Reyna Zepeda, Nicholas D. Naclerio, Haitham El-Hussieny, Usman Mehmood, Jee-Hwan Ryu, Elliot W. Hawkes, and Allison M. Okamura. IEEE Robotics and Automation Magazine; vol. 27, no. 3, pp. 120-132, September 2020

IEEE Transactions on Haptics Best Paper Award

- Proprioceptive Localization of the Fingers: Coarse, Biased, and Context-Sensitive. Bharat Dandu, Irene A. Kuling, and Yon Visell. IEEE Transactions on Haptics; vol. 13, no. 2, pp. 259-269, April-June 2020

IEEE Transactions on Haptics Best Application Paper Award

- Soft Exoskeleton Glove with Human Anatomical Architecture: Production of Dexterous Finger Movements and Skillful Piano Performance. Nobuhiro Takahashi, Shinichi Furuya, and Hideki Koike. IEEE Transactions on Haptics; vol. 13, no. 4, pp. 679-690, Oct.-Dec. 2020

#337: Autonomously Mapping the Seafloor, with Anthony DiMare

Anthony DiMare and Charles Chiau take a deep dive into how Bedrock Ocean is innovating in marine surveying. Bedrock Ocean is developing an Autonomous Underwater Vehicle (AUV) that can map the seafloor autonomously and at high resolution. The company is also developing a data platform to access, process, and visualize seafloor data captured by other companies.

Bedrock Ocean is solving two problems in the marine surveying industry:

1. The vast majority of the seafloor is completely unmapped.

2. The data that is captured from the seafloor is not standardized or centralized.

Seafloor data captured by two different companies, whether with the same or different hardware, can yield significantly different calculated seafloor profiles.

Anthony DiMare

Anthony previously founded Nautilus Labs, a leading maritime technology company advancing the efficiency of ocean commerce through artificial intelligence. While at Nautilus, Anthony helped global companies solve challenges with distributed, siloed maritime data systems and built the early team that launched Nautilus Platform into large publicly listed shipping companies.

Charles Chiau

Charles, Bedrock’s CTO, was previously at SpaceX, where he helped design the avionics systems for Crew Dragon. He was also a systems integration engineer at Reliable Robotics, working on their autonomous aviation system, and was the CTO of DeepFlight, where he worked on manned submersibles, including ones for Tom Perkins, Richard Branson, and Steve Fossett.