
New algorithm flies drones faster than human racing pilots

To be useful, drones need to be quick. Because of their limited battery life they must complete whatever task they have – searching for survivors on a disaster site, inspecting a building, delivering cargo – in the shortest possible time. And they may have to do it by going through a series of waypoints like windows, rooms, or specific locations to inspect, adopting the best trajectory and the right acceleration or deceleration at each segment.

Algorithm outperforms professional pilots

The best human drone pilots are very good at doing this and have so far always outperformed autonomous systems in drone racing. Now, a research group at the University of Zurich (UZH) has created an algorithm that can find the quickest trajectory to guide a quadrotor – a drone with four propellers – through a series of waypoints on a circuit. “Our drone beat the fastest lap of two world-class human pilots on an experimental race track”, says Davide Scaramuzza, who heads the Robotics and Perception Group at UZH and the Rescue Robotics Grand Challenge of the NCCR Robotics, which funded the research.

“The novelty of the algorithm is that it is the first to generate time-optimal trajectories that fully consider the drones’ limitations”, says Scaramuzza. Previous works relied on simplifications of either the quadrotor system or the description of the flight path, and thus they were sub-optimal. “The key idea is, rather than assigning sections of the flight path to specific waypoints, that our algorithm just tells the drone to pass through all waypoints, but not how or when to do that”, adds Philipp Foehn, PhD student and first author of the paper in Science Robotics.
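To get a feel for what "time-optimal under the drone's limitations" means, here is a deliberately tiny sketch. It is not the UZH algorithm, which plans for a full quadrotor model. It only treats the drone as a point mass moving along a straight line, with a hypothetical acceleration limit `a_max` and an optional speed limit `v_max`. The function name `min_time_rest_to_rest` and all numbers are made up for illustration. The sketch compares stopping at an intermediate waypoint with simply passing through it.

```python
import math


def min_time_rest_to_rest(distance: float, a_max: float, v_max: float = math.inf) -> float:
    """Minimum time (s) to cover `distance` (m) in 1D, starting and ending at rest.

    Toy point-mass model: acceleration is bounded by a_max (m/s^2) and
    speed by v_max (m/s). The fastest motion is full acceleration,
    an optional cruise at v_max, then full braking.
    """
    if distance < 0 or a_max <= 0 or v_max <= 0:
        raise ValueError("distance must be >= 0, a_max and v_max must be > 0")
    # Distance used up by accelerating to v_max and braking back to zero.
    ramp_distance = v_max ** 2 / a_max
    if distance <= ramp_distance:
        # v_max is never reached: accelerate for half the distance, brake for the rest.
        return 2.0 * math.sqrt(distance / a_max)
    # Accelerate, cruise at v_max, brake.
    return distance / v_max + v_max / a_max


# Hypothetical numbers: a waypoint sits halfway along an 8 m straight line.
a_max = 2.0
d1, d2 = 4.0, 4.0

# Forcing a stop at the waypoint prescribes "how" to pass it.
stop_at_waypoint = min_time_rest_to_rest(d1, a_max) + min_time_rest_to_rest(d2, a_max)
# Only requiring that the waypoint is passed leaves the speed there free.
fly_through = min_time_rest_to_rest(d1 + d2, a_max)

print(f"stop at waypoint: {stop_at_waypoint:.2f} s, fly through: {fly_through:.2f} s")
```

With these made-up values, stopping at the waypoint takes about 5.7 s while flying through takes 4.0 s. Even in this one-dimensional toy, prescribing how a waypoint is passed costs time. That is the intuition behind only requiring that all waypoints are passed.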

External cameras provide position information in real-time

The researchers had the algorithm and two human pilots fly the same quadrotor through a race circuit. They employed external cameras to precisely capture the motion of the drones and – in the case of the autonomous drone – to give real-time information to the algorithm on where the drone was at any moment. To ensure a fair comparison, the human pilots were given the opportunity to train on the circuit before the race. But the algorithm won: all its laps were faster than the human ones, and the performance was more consistent. This is not surprising, because once the algorithm has found the best trajectory it can reproduce it faithfully many times, unlike human pilots.

Before commercial applications, the algorithm will need to become less computationally demanding, as it now takes up to an hour for the computer to calculate the time-optimal trajectory for the drone. Also, at the moment, the drone relies on external cameras to compute where it is at any moment. In future work, the scientists want to use onboard cameras. But the demonstration that an autonomous drone can in principle fly faster than human pilots is promising. “This algorithm can have huge applications in package delivery with drones, inspection, search and rescue, and more”, says Scaramuzza.


What is the best simulation tool for robotics?

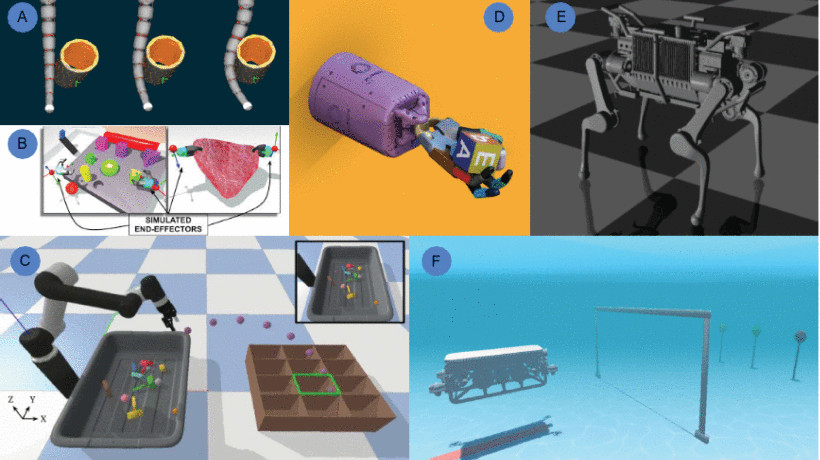

What is the best simulation tool for robotics? This is a hard question to answer because many people (or their companies) specialize in one tool or another, and some simulators are better at one aspect of robotics than at others. When I’m asked to recommend the best simulation tool for robotics, I have to find an expert and hope that they are up to date on a wide range of simulation tools. That is why I took particular note of the recent review paper from Australia’s CSIRO, “A Review of Physics Simulators for Robotics Applications” by Jack Collins, Shelvin Chand, Anthony Vanderkop, and David Howard, published in IEEE Access (Volume: 9).

“We have compiled a broad review of physics simulators for use within the major fields of robotics research. More specifically, we navigate through key sub-domains and discuss the features, benefits, applications and use-cases of the different simulators categorised by the respective research communities. Our review provides an extensive index of the leading physics simulators applicable to robotics researchers and aims to assist them in choosing the best simulator for their use case.”

Simulation underpins robotics because working in simulation is cheaper, faster and more robust than working with real robots. While there are some guides that benchmark simulators against real-world tasks, there has been no comprehensive review. A more thorough review can identify gaps, needs and research challenges for simulation. The authors focus on seven sub-domains: Mobile Ground Robotics; Manipulation; Medical Robotics; Marine Robotics; Aerial Robotics; Soft Robotics; and Learning for Robotics.

I’m going to cut to the chase and provide a copy of the final comparison tables for each sub-domain, but if you are interested in using these recommendations, I recommend reading the rationale behind the rankings in the full review article. The authors also consider whether or not a simulator is actively supported. Handy to know! The paper is also an excellent source of information about various historic and current robotics competitions.

TABLE 1 Feature Comparison Between Popular Robotics Simulators

Mobile Ground Robotics:

TABLE 2 Feature Comparison Between Popular Robotics Simulators Used for Mobile Ground Robotics

Manipulation:

TABLE 3 Feature Comparison for Popular Robotics Simulators Used for Manipulation

Medical Robotics:

TABLE 4 Feature Comparison of Popular Robotics Simulators Used for Medical Robotics

Marine Robotics:

TABLE 5 Feature Comparison of Popular Simulators Used for Marine Robotics

Aerial Robotics:

TABLE 6 Feature Comparison of Popular Simulators Used for Aerial Robotics

Soft Robotics:

TABLE 7 Feature Comparison of Popular Simulators Used for Soft Robotics

Learning for Robotics:

TABLE 8 Feature Comparison of Popular Simulators Used in Learning for Robotics

Conclusions:

As robotics makes more use of deep learning, simulators that can handle data on the fly become necessary. Deep learning is also a potential solution to simulation problems involving points of contact or collisions. Rather than combining multiple simulation methods to build a clearer abstraction of the real world in these boundary situations, the answer may be to insert neural networks, trained to replicate the properties of difficult phenomena, into the simulator. There is further discussion of differentiable simulation, levels of abstraction and the expansion of libraries, plug-ins, toolsets, benchmarking and algorithmic integration, all of which increase both the utility and the complexity of simulation for robotics.
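To make the idea of a learned component inside a simulator more concrete, here is a minimal sketch under loud assumptions. It is not taken from the review. A one-parameter least-squares fit stands in for the neural network, the impact samples are made-up illustration values, and the names `fit_contact_model` and `rebound_height` are hypothetical. The point is only the structure: the simulator integrates the easy physics itself and hands the hard part (contact) to a model fitted from data.

```python
from typing import Callable, List, Tuple

# Maps the velocity just before impact (m/s, negative means downward)
# to the velocity just after impact.
ContactModel = Callable[[float], float]


def fit_contact_model(samples: List[Tuple[float, float]]) -> ContactModel:
    """Fit v_out = -e * v_in by least squares.

    Stand-in for a trained neural network: the simulator below only
    needs a callable, so a richer learned model could be swapped in.
    """
    num = sum(v_in * v_out for v_in, v_out in samples)
    den = sum(v_in * v_in for v_in, _ in samples)
    restitution = -num / den
    return lambda v_in: -restitution * v_in


def rebound_height(drop_height: float, contact: ContactModel,
                   dt: float = 0.001, steps: int = 2000, g: float = 9.81) -> float:
    """Drop a ball from rest and return the highest point reached after the first bounce.

    Free flight uses semi-implicit Euler integration; the contact event
    is delegated entirely to the supplied `contact` model.
    """
    z, v = drop_height, 0.0
    bounced = False
    peak = 0.0
    for _ in range(steps):
        v -= g * dt
        z += v * dt
        if z <= 0.0 and v < 0.0:
            z = 0.0
            v = contact(v)  # learned component replaces a hand-written contact solver
            bounced = True
        if bounced:
            peak = max(peak, z)
    return peak


# Made-up (v_in, v_out) impact samples, for illustration only.
samples = [(-1.0, 0.5), (-2.0, 1.0), (-4.0, 2.0)]
learned_contact = fit_contact_model(samples)
print(f"rebound height: {rebound_height(1.0, learned_contact):.3f} m")
```

Because the simulator only depends on the `ContactModel` callable, the fitted function can be replaced by any trained model without touching the integration loop.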

As the field of simulation for robotics grows, so does the need for metrics that capture how accurately a simulator represents the real world. As the authors write, “Finally, we predict that we will see further research into estimating and modeling uncertainty of simulators.”

This may have been the first review article on simulation for robotics, but hopefully it will not be the last. There’s a clear need to study and measure the field. I found the sections on soft robotics and learning for robotics particularly interesting, as the paper discusses the difficulties of simulation in these fields. Please attribute any errors in this summary to me. Read the full review here: https://ieeexplore.ieee.org/document/9386154