Embracing automation and robots in industry

Carlotta Berry’s talk – Robotics Education to Robotics Research (with video)

A few days ago, Robotics Today hosted an online seminar with Professor Carlotta Berry from the Rose-Hulman Institute of Technology. In her talk, Carlotta presented the multidisciplinary benefits of robotics in engineering education. It is worth highlighting that Carlotta Berry is one of the 30 women in robotics you need to know about in 2020.

Abstract

This presentation summarizes the multidisciplinary benefits of robotics in engineering education. I will describe how it is used at a primarily undergraduate institution to encourage robotics education and research. There will be a review of how robotics is used in several courses to illustrate engineering design concepts as well as controls, artificial intelligence, human-robot interaction, and software development. This will be a multimedia presentation of student projects in freshman design, mobile robotics, independent research and graduate theses.

Biography

Carlotta A. Berry is a Professor in the Department of Electrical and Computer Engineering at Rose-Hulman Institute of Technology. She has a bachelor’s degree in mathematics from Spelman College, a bachelor’s degree in electrical engineering from Georgia Institute of Technology, a master’s in electrical engineering from Wayne State University, and a PhD from Vanderbilt University. She is one of a team of faculty in ECE, ME and CSSE at Rose-Hulman who created and direct the first multidisciplinary minor in robotics. She is the Co-Director of the NSF S-STEM Rose Building Undergraduate Diversity (ROSE-BUD) Program and advisor for the National Society of Black Engineers. She was previously the President of the Technical Editor Board for the ASEE Computers in Education Journal. Dr. Berry has been selected as one of the 30 Women in Robotics You Need to Know About 2020 by robohub.org, and her honors include the Reinvented Magazine Interview of the Year Award on Purpose and Passion, the Women and Hi Tech Leading Light Award: You Inspire Me, and the Insight Into Diversity Inspiring Women in STEM Award. She has taught undergraduate courses in human-robot interaction, mobile robotics, circuits, controls, signals and systems, and freshman and senior design. Her research interests are in robotics education, interface design, human-robot interaction, and increasing the participation of underrepresented populations in STEM fields. She has a special passion for diversifying the engineering profession by encouraging more women and underrepresented minorities to pursue undergraduate and graduate degrees. She feels that the profession should reflect the world we live in so that it can solve the unique problems we face.

You can also view past seminars on the Robotics Today YouTube Channel.

Readers Choice 2020: Using Mesh Network Applications for Robotics

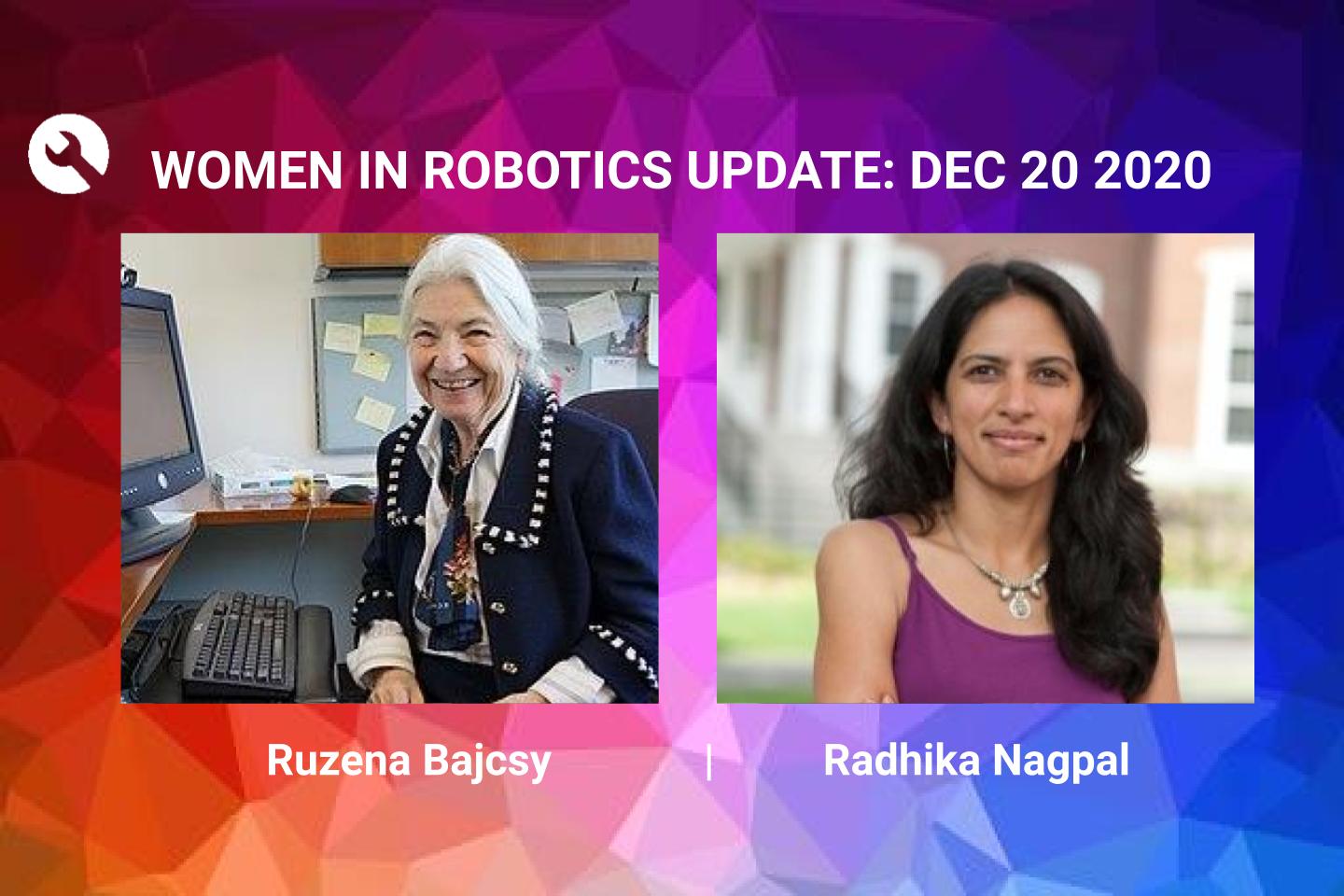

Women in Robotics Update: Ruzena Bajcsy and Radhika Nagpal

Introducing the sixth post in our new series of Women in Robotics Updates, featuring Ruzena Bajcsy and Radhika Nagpal from our first “25 women in robotics you need to know about” lists in 2013 and 2014. These women have pioneered foundational research in robotics, created organizations of impact, and inspired the next generations of robotics researchers, of all ages.

“Being an engineer at heart, I really always looked at how technology can help people? That was my model with robots, and in fact, my research in the medical area as well as how can we make things not just empirical, but predictable,” says Ruzena Bajcsy, expressing the motivation that guides her in both medicine and robotics.

Ruzena Bajcsy

NEC Chair and Professor at the University of California, Berkeley | Founder of HART

Ruzena Bajcsy (featured in 2014) holds the NEC Chair and is a Professor in the Department of Electrical Engineering and Computer Sciences, College of Engineering, at the University of California, Berkeley. She has been a pioneer in the field since 1988, when she laid out the engineering agenda for active perception. Bajcsy works on modeling people using robotic technology, drawing inspiration from recent animal behavioral studies, especially those pertaining to navigation. This work involves non-invasively measuring and extracting an individual’s kinematic and dynamic parameters to assess their physical movement capabilities or limitations, and identifying appropriate solutions. Bajcsy has founded several renowned research laboratories, including the GRASP Laboratory at the University of Pennsylvania, the CITRIS Institute, and, currently, the HART (Human-Assistive Robotic Technologies) laboratory. Over her 60 years in the field, she has received many prestigious awards. Since she was last featured, she has received the 2016 Simon Ramo Founders Award for her seminal contributions to the fields of computer vision, robotics, and medical imaging, and for her technology and policy leadership in computer science education and research. She also received the 2020 NCWIT Pioneer in Tech Award, which honors role models whose legacies continue to inspire generations of young women to pursue computing and make history in their own right.
Throughout her career she has worked at the intersection of human and machine ways of interpreting the world, with research interests that include Artificial Intelligence; Biosystems and Computational Biology; Control, Intelligent Systems, and Robotics; Human-Computer Interaction; and “Bridging Information Technology to Humanities and Social Sciences.” In a recent interview with the National Center for Women & Information Technology (NCWIT), she offered this advice to women starting out in robotics and AI: “I have a few rules in my book, so to speak. First of all, when you are young, learn as much mathematics and physics as you can. It is never enough of that knowledge... Number two, you have to be realistic. What, with the current technology, can you verify? Because in engineering science it’s not just writing equations, but it’s also building systems where you can validate your results.”

Radhika Nagpal

Fred Kavli Professor at Harvard | Cofounder of Root Robotics

Radhika Nagpal (featured in 2013) is a Fred Kavli Professor of Computer Science at the Harvard School of Engineering and Applied Sciences. Her Self-Organizing Systems Research Group works on two fronts: biologically-inspired robot collectives, including novel hardware design, decentralized collective algorithms and theory, and global-to-local swarm programming; and biological collectives, including mathematical models and field experiments with social insects and cellular morphogenesis. Her lab’s Kilobots are licensed and sold by K-Team, and over 8,000 of the robots are in use in dozens of research labs worldwide. Nagpal has won numerous prestigious awards since 2013. In 2014, Nature named her one of the ten scientists and engineers who mattered that year (“Nature’s 10”). In recognition of her mentoring of the next generation, she received the McDonald Award for Excellence in Mentoring and Advising at Harvard in 2015. In 2020, she was named an AAAI Fellow and an Amazon Scholar. “Science is of course itself an incredible manifestation of collective intelligence, but unlike the beautiful fish school that I study, I feel we still have a much longer evolutionary path to walk... There’s this saying that I love: who does science determines what science gets done. I believe that we can choose our rules and we can engineer not just robots but we can engineer our own human collective, and if we do and when we do, it will be beautiful,” says Nagpal in her 2017 TED Talk “Harnessing the intelligence of the collective,” which has more than 1 million views. Nagpal is also a co-founder and scientific advisor of Root Robotics, which has since been acquired by iRobot. There, she and her team designed Root, an educational robot that uses magnets and wheels to drive on whiteboards, senses colors, and draws under program control; it can be used to teach programming to learners of all ages. With Root, she aims to transform the home and classroom experience of programming by making it tangible and personal.
“Every kid should learn to code in a fun way, that enhances their interests, and that inspires them to become creative technologists themselves,” says Nagpal.

Want to keep reading? There are 180 more stories on our 2013 to 2020 lists. Why not nominate someone for inclusion next year?

And we encourage #womeninrobotics and women who’d like to work in robotics to join our professional network at http://womeninrobotics.org

Who are the Visionary companies in robotics? See the 2020 SVR Industry Award winners

These Visionary companies have a big idea and are well on their way to achieving it, although it isn’t always an easy road for any really innovative technology. In the case of Cruise, that meant testing self-driving vehicles on the streets of San Francisco, one of the hardest driving environments in the world. Some of our Visionary Awards go to companies that are opening up new market applications for robotics, such as Built Robotics in construction, Dishcraft in food services, Embark in self-driving trucks, Iron Ox in urban agriculture and Zipline in drone delivery. Some are building tools or platforms that the entire robotics industry can benefit from, such as Agility Robotics, Covariant, Formant, Robust.AI and Zoox. The companies in our Good Robot Awards also show that ‘technologies built for us, have to be built by us’.

Agility Robotics builds robots that go where people go, to do pragmatically useful work in human environments. Digit, Agility Robotics’ humanoid robot with both mobility and manipulation capabilities, is commercially available and has been shipping to customers since July 2020. Digit builds on two decades of research and development from the team on human-like dynamic mobility and manipulation, and can handle unstructured indoor and outdoor terrain. Digit is versatile and can do a range of different jobs that have been designed around a human form factor.

In October 2020, Agility Robotics closed a $20 million Series A round led by DCVC and Playground Global, bringing their total funds raised to $29 million. The investment enables the company to meet the demand from logistics providers, e-commerce retailers and others for robots that can work alongside humans to automate repetitive, physically demanding or dangerous work safely and scalably, even in the majority of spaces that are not purpose-built for automation.

Built Robotics transforms heavy equipment for the $1 trillion earthmoving industry into autonomous robots using its proprietary AI Guidance Systems. Built Robotics combines sensors such as GPS, cameras, and IMUs with advanced software, and the systems can be installed on standard equipment from any manufacturer. The technology allows equipment operators to oversee a fleet of vehicles working autonomously in parallel.

Built Robotics is backed by some of the top investors in Silicon Valley, including Founders Fund, NEA, and Next47, and has raised over $48M to date. They have targeted markets in which they can have a big impact, such as earthmoving, clean energy, gas pipelines, trenching, and new housing developments. Built Robotics has partnered with one of the largest labor unions in North America, the IUOE, to help train and develop the next generation of equipment operators.

“At the end of the day, robots are just tools in the hands of skilled operators, and we believe that the best-trained workers equipped with our technology will fundamentally change the future of construction,” said Noah Ready-Campbell, CEO of Built Robotics. “Together we can build and maintain the critical infrastructure our country needs.”

Covariant is building the Covariant Brain, a universal AI to give robots the ability to see, reason and act on the world around them. Bringing practical AI robotics into the physical world is hard. It involves giving robots a level of autonomy that requires breakthroughs in AI research. That’s why Covariant assembled a team that has published cutting-edge research papers at the top AI conferences and journals, with more than 50,000 collective citations. In addition to their research, they’ve also brought together a world-class engineering team to create new types of highly robust, reliable and performant cyber-physical systems.

Instead of learning to master specific tasks separately, Covariant robots learn general abilities such as robust 3D perception, physical affordances of objects, few-shot learning and real-time motion planning. This allows them to adapt to new tasks the way people do: by breaking down complex tasks into simple steps and applying general skills to complete them. In 2020, Covariant raised a $40 million Series B round from investors such as Index Ventures, Lux Capital and Baidu Ventures, bringing their total funding to $67 million. They’ve also developed partnerships with logistics and robotics companies such as KNAPP AG and ABB, demonstrating order picking at faster-than-human rates.

Self-driving technology, the integration of robotics, AI and simulation, is the hardest engineering challenge of our generation. So it’s only fitting that Cruise autonomous vehicles are on the road in San Francisco navigating some of the most challenging and unpredictable driving environments, because the best way to bring self-driving technology to the world is to expose it to the same unique and complex traffic scenarios human drivers face every day.

Cruise became the industry’s first unicorn when GM acquired the company in 2016. Cruise is building the world’s most advanced all-electric, self-driving vehicles to safely connect people with the places, things, and experiences they care about. In the first three months of the COVID-19 pandemic, Cruise made more than 125,000 contactless deliveries of groceries and meals to San Francisco’s most vulnerable and underserved populations. And as of December 4, Cruise has started driverless testing in San Francisco. You can see the video here:

Dishcraft’s mission is to create happy, productive, sustainable workplaces by making automation accessible to food service operations. Dishcraft Daily® delivers clean dishes every day as a full-service ‘dishwashing as a service’ offering to dining operations in business, education, and healthcare, providing measurable environmental benefits compared to using disposable wares.

Dishcraft provides environmental and financial efficiencies for both dine-in and to-go businesses once you account for the hidden costs of a typical restaurant or food service operation. Dishcraft has raised over $25 million from investors including Baseline Ventures, First Round Capital, and Lemnos. The company’s dishwashing as a service is now being used by dozens of companies, including hospitals, around the Bay Area. Since the advent of COVID-19, there’s been an increased demand for food-safe and sterile processes in the food service industry.

Embark’s technology is already moving freight for five Fortune 500 companies in the Southwest U.S. By moving real freight through its purpose-built transfer hubs, Embark is setting a new standard for how driverless trucks will move freight in the future. Embark has compiled many firsts for automated trucks, including driving across the country, operating in rain and fog, and navigating between transfer hubs. Embark is advancing the state of the art in automated trucks and bringing safe, efficient commercial transport closer every day.

Embark started as a University of Waterloo startup and then went through Y Combinator. It has raised more than $117 million from top investors like DCVC and Sequoia Capital. Embark is assembling a world-class group of engineers from companies like Tesla, Google, Audi and NASA, alongside a professional operations team that averages over a million miles per driver, with the goal of developing a system tailored to the demands of real-world trucking.

Autonomous robots are awesome, but if you want to run a business with them, you’ll need a robust operations platform that connects people, processes, sensors and robots, and provides fleet-wide management, control, and analytics at scale. That is where Formant comes in.

Formant bridges the gap between autonomous systems and the people running them. Its robot data and operations platform provides organizations with a command center that can be used to operate, observe, and analyze the performance of a growing fleet of heterogeneous robots, helping customers deploy faster, scale while reducing overhead, and maximize the value of autonomous robots and the data they collect.

So far in 2020, Formant’s robot data and operations platform is supporting dozens of different customers with a multitude of robot types and is deployed on thousands of autonomous devices worldwide. Formant’s customers span robot manufacturers, robot-as-a-service providers, and enterprises with robotic installations and represent a variety of industries, from energy to agriculture to warehouse automation.

Iron Ox operates autonomous robotic greenhouses that grow fresh, pesticide-free farm products intended to be accessible everywhere. It combines plant science, machine learning, and robotics to increase the availability, quality, and flavor of leafy greens and culinary herbs, giving consumers access to naturally grown, chemical-free farm products.

Iron Ox is reimagining the modern farm, utilizing robotics and AI to grow fresh, consistent, and responsibly farmed produce for everyone. From the development of multiple robot platforms to their own custom hydroponic, seeding, and harvesting systems, Iron Ox is taking a system-level approach to creating the ideal farm. The company’s experienced team of growers, plant scientists, software engineers, and hardware engineers are passionate about bringing forward this new wave of technology to grow local, affordable fresh produce.

Robust.AI is building the world’s first industrial-grade cognitive engine, with a stellar team that’s attracted $22.5 million in seed and Series A funding from Jazz Ventures, Playground Global, Fontinalis, Liquid 2, Mark Leslie and Jaan Tallinn. Robust’s stated mission is to overhaul the software stack that runs many existing robots, in order to make them function better in complex environments and be safer for operation around humans.

The all-star founding team includes Gary Marcus and Rodney Brooks, both pioneers in AI and robotics; Mohamed Amer from SRI International; Anthony Jules from Formant and Redwood Robotics; and Henrik Christensen, author of the US National Robotics Roadmaps.

“Finding market fit is as important in robots and AI systems as any other product,” Brooks said in a statement. “We are building something we believe most robotics companies will find irresistible, taking solutions from single-purpose tools that today function in defined environments, to highly useful systems that can work within our world and all its intricacies.”

Zipline is a California-based automated logistics company that designs, manufactures, and operates drones to deliver vital medical products. Zipline’s mission is to provide every human on Earth with instant access to vital medical supplies. Founded in 2014, Zipline began flying medical supplies in Africa and has gone on to fly more than 39,000 deliveries worldwide and raise over $233 million in funding.

Zipline has built the world’s fastest and most reliable delivery drone, the world’s largest autonomous logistics network, and a truly amazing team. Zipline designs and tests its technology in Half Moon Bay, California. The company assembles the drones and the technology that powers its distribution centers in South San Francisco. Zipline performs extensive flight testing in Davis, California, and operates distribution centers around the planet with teams of local operators.

Zoox is working on the full stack for robotaxis, providing mobility-as-a-service. Operating at the intersection of design, computer science, and electro-mechanical engineering, Zoox is a multidisciplinary team working to imagine and build an advanced mobility experience that will support the future needs of urban mobility for both people and the environment.

In December 2018, Zoox became the first company to gain approval to provide self-driving transport services to the public in California. In January 2019, Zoox appointed a new CEO, Aicha Evans, who was previously the Chief Strategy Officer at Intel and became the first African-American CEO of a $1B company. Zoox had raised a total of $1B in funding over six rounds, and on June 26, 2020, Amazon and Zoox signed a “definitive merger agreement” under which Amazon would acquire Zoox for over $1.2 billion. Zoox’s ground-up technology, which includes developing zero-emission vehicles built specifically for autonomous use, could be used to augment Amazon’s logistics operations.

You can see the full list of our Good Robot Awards in Innovation, Vision, Commercialization and our Community Champions here at https://svrobo.org/awards and we’ll be sharing articles about each category of award winners throughout the week.

Who are the Visionary companies in robotics? See the 2020 SVR Industry Award winners

These Visionary companies have a big idea and are well on their way to achieving it, although it isn’t always an easy road for any really innovative technology. In the case of Cruise, that meant testing self driving vehicles on the streets of San Francisco, one of the hardest driving environments in the world. Some of our Visionary Awards go to companies who are opening up new market applications for robotics, such as Built Robotics in construction, Dishcraft in food services, Embark in self-driving trucks, Iron Ox in urban agriculture and Zipline in drone delivery. Some are building tools or platforms that the entire robotics industry can benefit from, such as Agility Robotics, Covariant, Formant, RobustAI and Zoox. The companies in our Good Robot Awards also show that ‘technologies built for us, have to be built by us’.

These Visionary companies have a big idea and are well on their way to achieving it, although it isn’t always an easy road for any really innovative technology. In the case of Cruise, that meant testing self driving vehicles on the streets of San Francisco, one of the hardest driving environments in the world. Some of our Visionary Awards go to companies who are opening up new market applications for robotics, such as Built Robotics in construction, Dishcraft in food services, Embark in self-driving trucks, Iron Ox in urban agriculture and Zipline in drone delivery. Some are building tools or platforms that the entire robotics industry can benefit from, such as Agility Robotics, Covariant, Formant, RobustAI and Zoox. The companies in our Good Robot Awards also show that ‘technologies built for us, have to be built by us’.

Agility Robotics builds robots that go where people go, to do pragmatically useful work in human environments. Digit, Agility Robotics’ humanoid robot with both mobility and manipulation capabilities, is commercially available and has been shipping to customers since July 2020. Digit builds on two decades of research and development from the team on human-like dynamic mobility and manipulation, and can handle unstructured indoor and outdoor terrain. Digit is versatile and can do a range of different jobs that have been designed around a human form factor.

In October 2020, Agility Robotics closed a $20 million Series A round led by DCVC and Playground Global, bringing their total funds raised to $29 million. The investment enables the company to meet the demand from logistics providers, e-commerce retailers and others for robots that can work alongside humans to automate repetitive, physically demanding or dangerous work safely and scalably, even in the majority of spaces that are not purpose-built for automation.

Built Robotics transforms heavy equipment for the $1 trillion earthmoving industry into autonomous robots using its proprietary AI Guidance Systems. Built Robotics combines sensors such as GPS, cameras, and IMUs with advanced software, and the systems can be installed on standard equipment from any manufacturer. The technology allows equipment operators to oversee a fleet of vehicles working autonomously in parallel.

Built Robotics transforms heavy equipment for the $1 trillion earthmoving industry into autonomous robots using its proprietary AI Guidance Systems. Built Robotics combines sensors such as GPS, cameras, and IMUs with advanced software, and the systems can be installed on standard equipment from any manufacturer. The technology allows equipment operators to oversee a fleet of vehicles working autonomously in parallel.

Built Robotics is backed by some of the top investors in Silicon Valley — including Founders Fund, NEA, and Next47 — and has raised over $48M to date. They have targeted markets in which they can have a big impact, such as earthmoving, clean energy, gas pipelines, trenching, and new housing developments. Built Robotics has partnered with one of the largest labor unions in North America, the IUOE, to help train and develop the next generation of equipment operator.

“At the end of the day, robots are just tools in the hands of skilled operators, and we believe that the best-trained workers equipped with our technology will fundamentally change the future of construction,” said Noah Ready-Campbell, CEO of Built Robotics. “Together we can build and maintain the critical infrastructure our country needs.”

Covariant is building the Covariant Brain, a universal AI to give robots the ability to see, reason and act on the world around them. Bringing practical AI Robotics into the physical world is hard. It involves giving robots a level of autonomy that requires breakthroughs in AI research. That’s why Covariant assembled a team that has published cutting-edge research papers at the top AI conferences and journals, with more than 50,000 collective citations. In addition to their research, they’ve also brought together a world-class engineering team to create new types of highly robust, reliable and performant cyber-physical systems.

Instead of learning to master specific tasks separately, Covariant robots learn general abilities such as robust 3D perception, physical affordances of objects, few-shot learning and real-time motion planning. This allows them to adapt to new tasks just like people do — by breaking down complex tasks into simple steps and applying general skills to complete them. In 2020, Covariant raised a $40 million Series B round from investors such as Index Ventures, Lux Capital and Baidu Ventures, bringing their total funding to $67 million. They’ve also developed partnerships with logistics and robotics companies such as Knapp Ag. and ABB, showcasing successful order pick rates at faster than human speeds.

Self driving technology, the integration of robotics, AI and simulation, is the hardest engineering challenge of our generation. So it’s only fitting that Cruise autonomous vehicles are on the road in San Francisco navigating some of the most challenging and unpredictable driving environments, because the best way to bring self-driving technology to the world is to expose it to the same unique and complex traffic scenarios human drivers face every day.

Cruise became the industry’s first unicorn when GM acquired the company in 2016. Cruise is building the world’s most advanced all-electric, self-driving vehicles to safely connect people with the places, things, and experiences they care about. In the first three months of the COVID-19 pandemic, Cruise made more than 125,000 contactless deliveries of groceries and meals to San Francisco’s most vulnerable and underserved populations. And as of December 4, Cruise has started driverless testing in San Francisco.

Dishcraft’s mission is to create happy, productive, sustainable workplaces by making automation accessible to food service operations. Dishcraft Daily® is a full-service ‘dishwashing as a service’ offering that delivers clean dishes every day to dining operations in business, education, and healthcare, with measurable environmental benefits compared to disposable wares.

Once the hidden costs of a typical restaurant or food service operation are taken into account, Dishcraft provides environmental and financial efficiencies for both dine-in and to-go businesses. Dishcraft has raised over $25 million from investors including Baseline Ventures, First Round Capital, and Lemnos. The company’s dishwashing as a service is now being used by dozens of companies, including hospitals, around the Bay Area. Since the advent of COVID-19, there’s been an increased demand for food-safe and sterile processes in the food service industry.

Embark’s technology is already moving freight for five Fortune 500 companies in the southwest U.S. By moving real freight through its purpose-built transfer hubs, Embark is setting a new standard for how driverless trucks will move freight in the future. Embark has compiled many firsts for automated trucks, including driving across the country, operating in rain and fog, and navigating between transfer hubs. Embark is advancing the state of the art in automated trucks and bringing safe, efficient commercial transport closer every day.

Embark started as a University of Waterloo startup and then went through Y Combinator. It has raised more than $117 million from top investors such as DCVC and Sequoia Capital, and is assembling a world-class group of engineers from companies like Tesla, Google, Audi and NASA, alongside a professional operations team that averages over a million miles per driver, with the goal of developing a system tailored to the demands of real-world trucking.

Autonomous robots are awesome, but if you want to run a business with them, you’ll need a robust operations platform that connects people, processes, sensors and robots, and provides fleet-wide management, control, and analytics at scale. That is where Formant comes in.

Formant bridges the gap between autonomous systems and the people running them. Its robot data and operations platform gives organizations a command center for operating, observing, and analyzing the performance of a growing fleet of heterogeneous robots, empowering customers to deploy faster, scale while reducing overhead, and maximize the value of autonomous robots and the data they collect.

So far in 2020, Formant’s robot data and operations platform is supporting dozens of different customers with a multitude of robot types and is deployed on thousands of autonomous devices worldwide. Formant’s customers span robot manufacturers, robot-as-a-service providers, and enterprises with robotic installations and represent a variety of industries, from energy to agriculture to warehouse automation.

Iron Ox is an operator of autonomous robotic greenhouses used to grow fresh and pesticide-free farm products that are accessible everywhere. It leverages plant science, machine learning, and robotics to increase the availability, quality, and flavor of leafy greens and culinary herbs, enabling consumers to access naturally grown and chemical-free farm products.

Iron Ox is reimagining the modern farm, utilizing robotics and AI to grow fresh, consistent, and responsibly farmed produce for everyone. From the development of multiple robot platforms to their own custom hydroponic, seeding, and harvesting systems, Iron Ox is taking a system-level approach to creating the ideal farm. The company’s experienced team of growers, plant scientists, software engineers, and hardware engineers are passionate about bringing forward this new wave of technology to grow local, affordable fresh produce.

Robust.AI is building the world’s first industrial-grade cognitive engine, with a stellar team that has attracted $22.5 million in seed and Series A funding from Jazz Ventures, Playground Global, Fontinalis, Liquid 2, Mark Leslie and Jaan Tallinn. Robust’s stated mission is to overhaul the software stack that runs many existing robots, so that they function better in complex environments and are safer to operate around humans.

The all-star founding team comprises Gary Marcus and Rodney Brooks, both pioneers in AI and robotics; Mohamed Amer from SRI International; Anthony Jules from Formant and Redwood Robotics; and Henrik Christensen, author of the US National Robotics Roadmaps.

“Finding market fit is as important in robots and AI systems as any other product,” Brooks said in a statement. “We are building something we believe most robotics companies will find irresistible, taking solutions from single-purpose tools that today function in defined environments, to highly useful systems that can work within our world and all its intricacies.”

Zipline is a California-based automated logistics company that designs, manufactures, and operates drones to deliver vital medical products. Zipline’s mission is to provide every human on Earth with instant access to vital medical supplies. Founded in 2014, Zipline began flying medical supplies in Africa and has gone on to fly more than 39,000 deliveries worldwide and raise over $233 million in funding.

Zipline has built the world’s fastest and most reliable delivery drone, the world’s largest autonomous logistics network, and a truly amazing team. Zipline designs and tests its technology in Half Moon Bay, California. The company assembles the drones and the technology that powers its distribution centers in South San Francisco. Zipline performs extensive flight testing in Davis, California, and operates distribution centers around the planet with teams of local operators.

Zoox is working on the full stack for robotaxis, providing mobility-as-a-service. Operating at the intersection of design, computer science, and electro-mechanical engineering, Zoox is a multidisciplinary team working to imagine and build an advanced mobility experience that will support the future needs of urban mobility for both people and the environment.

In December 2018, Zoox became the first company to gain approval for providing self-driving transport services to the public in California. In January 2019, Zoox appointed a new CEO, Aicha Evans, who was previously the Chief Strategy Officer at Intel and became the first African-American CEO of a $1B company. Zoox had raised a total of $1B in funding over 6 rounds and on June 26, 2020, Amazon and Zoox signed a “definitive merger agreement” under which Amazon will acquire Zoox for over $1.2 billion. Zoox’s ground-up technology, which includes developing zero-emission vehicles built specifically for autonomous use, could be used to augment Amazon’s logistics operations.

You can see the full list of our Good Robot Awards in Innovation, Vision, Commercialization and our Community Champions at https://svrobo.org/awards, and we’ll be sharing articles about each category of award winners throughout the week.

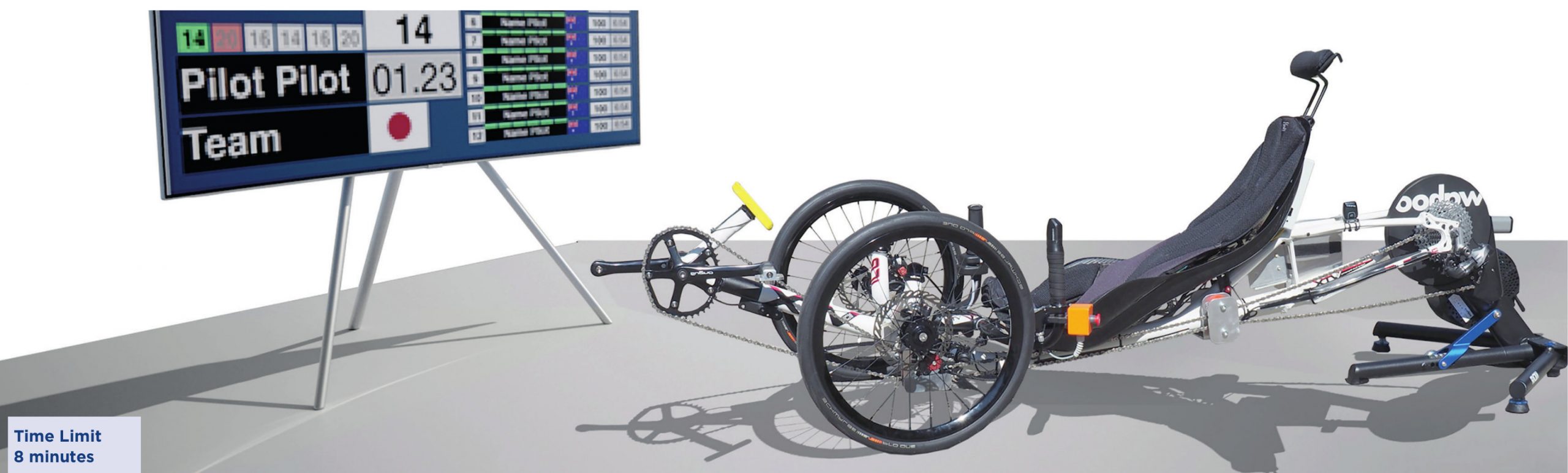

#CYBATHLON2020GlobalEdition winners of the functional electrical stimulation bike race (with interview)

Continuing this series on CYBATHLON 2020 winners, today we feature the victory of PULSE Racing from VU University Amsterdam. We also had the chance to interview them (see the end of this post).

In this race, pilots with paraplegia from nine teams competed against each other using a recumbent bicycle that they could pedal with the help of functional electrical stimulation (FES) of their leg muscles. As the organizers of CYBATHLON describe, “FES is a technique that allows paralysed muscles to move again. By placing electrodes on the skin or implanting them, currents are applied to the muscles, making them contract. Thus, a person whose nerves from the brain to the leg muscles are disconnected due to a spinal cord injury (SCI) can use an intelligent control device to initiate a movement, e.g. stepping on a bike pedal. New types of electrodes and an exact control of the currents make it possible to maximise the pedal force with each rotation while avoiding early muscle fatigue.” Pilots had 8 minutes to complete 1200m on their static recumbent bike.

Five of the nine teams finished the 1200m race, so the podium was decided by finishing time. Pilot Sander Koomen from the winning team, PULSE Racing (The Netherlands), completed the distance in 2 minutes and 40 seconds (an average speed of 27 km/h). The silver medal went to team ImperialBerkel (UK & The Netherlands) with pilot Johnny Beer, who finished in 2 minutes and 59 seconds. Finally, team Cleveland (US) with pilot Mark Steven Muhn won the bronze medal with a finishing time of 3 minutes and 13 seconds.
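The 27 km/h figure for the winning ride is simply distance divided by time. A minimal Python sketch, assuming only the 1200 m distance and the podium times reported above, reproduces it and applies the same conversion to the silver and bronze times (those two speeds are our own arithmetic, not official results; the function and variable names are illustrative):

```python
# Average speed = distance / time, converted from m/s to km/h.
DISTANCE_M = 1200  # race distance in metres, as reported

# Podium finishing times as (minutes, seconds)
finish_times = {
    "PULSE Racing": (2, 40),
    "ImperialBerkel": (2, 59),
    "Cleveland": (3, 13),
}


def average_speed_kmh(distance_m: float, minutes: int, seconds: int) -> float:
    """Return the average speed in km/h for a distance covered in minutes:seconds."""
    total_s = minutes * 60 + seconds
    metres_per_second = distance_m / total_s
    return metres_per_second * 3.6  # 1 m/s = 3.6 km/h


for team, (m, s) in finish_times.items():
    print(f"{team}: {average_speed_kmh(DISTANCE_M, m, s):.1f} km/h")
```

Run as-is, it prints 27.0 km/h for PULSE Racing, 24.1 km/h for ImperialBerkel and 22.4 km/h for Cleveland.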

You can also see the results from the rest of the teams in this discipline here, or watch the recorded livestreams of both days on their website.

Interview with PULSE Racing

D. C. Z.: What does it mean for your team to have won in your CYBATHLON category?

P.R.: We saw this as a good reflection of where we stand as a team. The result was unexpected, because sometimes it is hard to see results during training. Winning the Cybathlon made our uncertainties about our developments vanish. The gold medal emphasizes our strength and motivation as a student team. Our dream, winning the Cybathlon on the first attempt, came true. We are thankful that the Cybathlon gave us the opportunity to participate, which helped us to gain publicity.

D. C. Z.: And what does it mean for people with disabilities?

P.R.: It means that by joining our team, people with a disability are able to keep developing their bodies and minds, following a training scheme. This improves mental well-being and health. Besides that, they can still be part of society and become more confident.

D. C. Z.: What are still your challenges?

P.R.: In the coming years we want to focus more on the mechanics of the bike, to see if we can make some improvements. And it would be great if this form of exercise were accessible to more people with a spinal cord injury. For that, it is important that more people are aware of the possibilities of Functional Electrostimulation (FES).

You can follow team updates on Instagram (#pulse.racingnl) and Facebook/LinkedIn (#pulseracing).

What does Innovation look like in robotics? See the SVR 2020 Industry Award winners

Self-driving vehicles would not be possible without sensors and so it’s not surprising to see two small new sensors in the 2020 Silicon Valley Robotics ‘Good Robot’ Innovation Awards, the Velabit from Velodyne and the nanoScan3 from SICK. We showcase three other innovations in component technology, the FHA-C with Integrated Servo Drive from Harmonic Drive, the radically new Inception Drive from SRI International and Qualcomm’s RB5 Processor, all ideal for building robots.

Our other Innovation Awards go to companies with groundbreaking new robots: from the tensegrity structure of Squishy Robotics, which will help in both space exploration and disaster response on Earth, to the Dusty Robotics full-scale FieldPrinter for the construction industry, and Titan from FarmWise for agriculture, which was also named one of Time’s Best Inventions for 2020. Finally, we’re delighted to see innovation in robotics that is affordable and collaborative enough for home robot applications, with Stretch from Hello Robot and Eve from Halodi Robotics.

The Velabit, a game-changing lidar sensor, leverages Velodyne’s innovative lidar technology and manufacturing partnerships for cost optimization and high-volume production, to make high-quality 3D lidar sensors readily accessible to everyone. The Velabit is smaller than a deck of playing cards, and it shatters the price barrier at $100 per sensor. The compact, mid-range Velabit is highly configurable for specialized use cases and can be embedded almost anywhere. Gatik and May Mobility are just two of the pioneers in autonomous vehicle technology using Velodyne lidar.

The nanoScan3 from SICK is the world’s smallest safety laser scanner and is based on their latest patented time-of-flight technology. Not only does it provide the most robust protection for stationary and mobile robots, but as a LiDAR, it also supports navigation and other measurement-based applications.

Founded in 1946, SICK sensors help robots make more intelligent decisions and give them the ability to sense objects, the environment, or their own position. SICK, and their west coast distributor EandM, offer solutions for all challenges in the field of robotics: Robot Vision, Safe Robotics, End-of-Arm Tooling, and Position Feedback.

The FHA-C Mini Series from Harmonic Drive is a family of extremely compact actuators that deliver high torque with exceptional accuracy and repeatability. The revolutionary FHA-C with Integrated Servo Drive eliminates the need for an external drive and greatly simplifies wiring while retaining high positional accuracy and torsional stiffness in a compact housing. This new mini actuator product is ideal for use in robotics.

The Qualcomm Robotics RB5 Platform supports the development of the next generation of high-compute, AI-enabled, low-power robots and drones for the consumer, enterprise, defense, industrial and professional service sectors that can be connected by 5G. The QRB5165 processor, customized for robotics applications, offers a powerful heterogeneous computing architecture coupled with the leading 5th generation Qualcomm® Artificial Intelligence (AI) Engine delivering 15 Trillion Operations Per Second (TOPS) of AI performance. It’s designed to achieve peak performance while also supporting small battery-operated robots with challenging power and thermal dissipation requirements. The platform offers support for Linux, Ubuntu and Robot Operating System (ROS) 2, as well as pre-integrated drivers for various cameras, sensors and connectivity.

The latest breakthrough from SRI Robotics is a novel ultra-compact, infinitely variable transmission that is an order of magnitude smaller and lighter than existing technologies. The Inception Drive is a new transmission that can reverse the direction of the output relative to input without clutches or extra stages, dramatically increasing total system efficiency in applications including robotics, transportation, and heavy industry.

Squishy Robotics’ rapidly deployable, air-droppable, mobile sensor robots provide lifesaving, cost-saving information in real time, enabling faster, better-informed data-driven decisions. The company’s robots provide first responders with location and chemical sensor data as well as the visual information needed to safely plan a mitigation response, all from a safe distance away from the “hot zones.” The scalable and reconfigurable robots can carry customized, third-party equipment (e.g., COTS sensors, emergency medical aid supplies, or specialized radio components) in a variety of deployment scenarios.

The company’s first target market is the HazMat and CBRNE (chemical, biological, radiological, nuclear, and explosive) response market, enabling lifesaving maneuvers and securing the safety of first responders by providing situational awareness and sensor data in uncharted terrains. The robots can be quickly deployed by ground or be dropped from drones or other aerial vehicles and then be used in a variety of ways, including remote monitoring, disaster response, and rescue assistance. A spin-off of prior work with NASA on robots for space exploration, the company’s Stationary Robot has been successfully dropped from airplanes from heights of up to 1,000 ft; the company’s Mobile Robot can traverse rugged and uneven territory.

Dusty Robotics develops innovative robotics technology that powers the creation of high-accuracy mobile printers for the construction industry. Dusty’s novel robotics algorithms enable the system to achieve 1-millimeter precision printing construction layout on concrete decks, which is a breakthrough in the industry.

Construction industry veterans, who are normally skeptical about new innovations, have all embraced Dusty’s FieldPrinter as the solution to critical problems in the industry. Layout today involves a number of manual steps, each of which has the potential to introduce errors into the process. Errors increase building cost and delay time to completion. Dusty’s robotic layout printer automates the BIM-to-field workflow and is poised to be the first widely adopted robotic technology in the field across the construction industry.

For vegetable growers who face increased growing costs and new environmental and regulatory pressures, the FarmWise suite of data-driven services harnesses plant-level data to drive precise field actions in order to streamline farm operations and increase food production efficiency.

Titan FT-35, the automated weeding robot from FarmWise Labs, has just been named one of Time Magazine’s ‘Best Inventions of 2020’. Titan consists of a driverless tractor and a smart implement that uses deep learning to distinguish crops from weeds and mechanically removes the weeds from farmers’ fields. Thanks to the trust and collaborative effort of visionary growers, the FarmWise idea of a machine that could kill weeds without using chemicals went from a proof-of-concept to a commercialized product.

Hello Robot has reinvented the mobile manipulator. In July 2020 they launched Stretch, the first capable, portable, and affordable mobile manipulator designed specifically to assist people in the home and workplace. At a fraction of the cost, size, and weight of previous capable mobile manipulators, Stretch’s novel design is a game changer.

Stretch has a low-mass, contact-sensitive body with a compact footprint and a slender telescopic manipulator, and weighs only 51 lb. Stretch is ready for autonomous operation as well as teleoperation, with Python interfaces, ROS integration and open source code. In the future, mobile manipulators will enhance the lives of older adults, people with disabilities, and caregivers. Hello Robot is working to build a bridge to this future.

Halodi Robotics has developed the EVE humanoid robot platform using its patented REVO1 actuators to enable truly capable and safe humanoid robots. The robots have been commercialized, and the first commercial customer pilots are being planned for next year in security, health care and retail.

By developing a new actuator and differential rope-based transmission systems, the company has overcome many of the obstacles preventing the development of capable and safe robots. Impact energies of less than a thousandth of those of comparable systems mean that the robot can be inherently safe around humans and in human environments.

Halodi Robotics is using the EVE platform to pilot humanoid robotics in new areas while their next-generation robot Sarah is being developed for a 2022 launch.

Shaping the UK’s future with smart machines: Findings from four ThinkIns with academia, industry, policy, and the public

The UK Robotics Growth Partnership (RGP) aims to set the conditions for success to empower the UK to be a global leader in Robotics and Autonomous Systems whilst delivering a smarter, safer, more prosperous, sustainable and competitive UK. The aim is for smart machines to become ubiquitous, woven into the fabric of society, in every sector, every workplace, and at home. If done right, this could lead to increased productivity, and improved quality of life. It could enable us to meet Net Zero targets, and support workers as their roles transition from menial tasks.

One thing that’s striking is that although robots hold so much potential, they are not yet ready. The Covid crisis has made this very clear. If they had been ready, we could have deployed robots at scale to sanitise hospitals, enable doctor-patient communication through telepresence, or connect patients with loved ones. Robots could have produced, organised, and delivered much-needed samples, tests, PPE, medicine, and food across the UK. And many businesses could have reopened with a robotic interface. Robots could have powered a low-touch economy, where activities continue even when humans can’t be in close physical contact, driving recovery and resilience.

For the past year, we’ve been thinking about this at the RGP. How could we have done things better? What would it take to make an armada of disinfecting robots for a Covid pop-up hospital (called a Nightingale hospital in the UK)? Ideally we would have been able to log into a digital twin of the hospital, port in a model of a robot platform from a database, work up a solution in the virtual world, and readily port it to an actual testbed to demonstrate its function in the physical world. We could then trial the solution in a living lab, maybe a dedicated Nightingale, before scaling up the solution to other hospitals. Others developing telepresence robots could follow the same methodology, checking that their solutions are interoperable, and working within the same virtual and physical environments.

We have many bits of the puzzle in the UK – great research and industry, plus government buy-in, but what we need is to bring this together.

To explore this further, over the past few months we hosted ThinkIns with Tortoise Media to get feedback from Academia, Industry, Policy and the Public. You can read all the blog posts and watch the videos here:

The future of smart machines: reflections from academia https://ukrgp.org/the-future-of-smart-machines-reflections-from-academia/

Building an ecosystem to make useful robots https://ukrgp.org/building-an-ecosystem-to-make-useful-robots/

Musings with the public about their future with smart machines https://ukrgp.org/musings-with-the-public-about-their-future-with-smart-machines/

Keeping up with the pace of change – positioning the UK as a leader in smart machines https://ukrgp.org/keeping-up-with-the-pace-of-change-positioning-the-uk-as-a-leader-in-smart-machines/

Below are some preliminary findings.

From digital twins to living labs

In his blog, James Kell from Rolls-Royce says “The UK is small enough to collaborate well, but big enough to be a global leader. But to be successful we will need new tools, in particular better, cheaper digital twins – synthetic environments where we can develop and test new approaches before we test them in real world environments on our $35m engines.”

Professor Samia Nefti-Meziani from Salford University made a similar point: “Society needs better tools to support a sustainable future, with a new network of synthetic environments to build and fine-tune new technologies, to ensure they work in the real world and to reduce the time from their inception to deployment from years to months. Digital platforms and accessible, open-source software tools will empower SMEs, academics and the public sector to engage and benefit from these new solutions.”

Collaboration across academia and industry

As Samia highlights, “Collaboration was a recurring theme in the ThinkIns, with academic and industry partnerships essential to ensure we target the most pressing challenges and drive innovation in the sectors that need its solutions. As smart machines become more capable and cheaper, their adoption and development within the UK business ecosystem will broaden across sectors and applications.”

Government support to unlock incentives

James continued, “Collaboration needs coordination: Government is critical to convening and leading, creating new ways and incentives to work better together. The new tools will only equip our researchers and SMEs to accelerate product development, validation and speed to market if they can trust each other and all contribute and benefit. We need new ways for big industry (companies like mine) to have their challenges understood and find new partners to work with, to learn together to develop solutions and put them in place quicker. And if we join up the academics and link across our innovation infrastructure and existing test areas, we will accelerate the adoption of smart machines and unleash the multiple benefits they bring.”

The human element

‘Taking the public with us’ is critical to mass adoption, says Samia. “Key will be:

– Involving the public in co-creating research and industry ambitions, to help them understand and engage with what RAS can offer

– Engaging with those who distrust RAS, to understand their concerns and gain their confidence

– Improving RAS education and lifelong learning, so those with interest and capability can be trained in RAS and directly involved in shaping their future.”

“The sector must ‘show its workings’ and be clear of the problems and challenges to prioritise. Ensuring standards and protocols are developed to protect the input of the public and the quality of the outputs is vital to buy-in in the long-term.”

David Bisset, a robotics consultant, commented on the Public session, highlighting that “Smart machines are already with us: cars, aeroplanes, vacuum cleaners. We don’t call them robots, but they all use that technology. To make them work requires many skills: industrial designers, AI people, sensor experts and interaction designers… and many more. At a human level we need to be able to trust, to know it’s built right and safe.”

He highlights the issue of “Tech Wash” mentioned by the public. “Is ‘smart machine’ just some clever rebranding? The needless selling of technology as a solution to every senior manager’s need to outshine their peers? We need to stop and think about the consequences of forcing through technology driven organisational change without evidence and stop needless disruption. We need to know these things will work!”

Overall, to make smart machines a success, we need to bring the discussion to a human level, to where this makes a difference to people.

Bringing it all together

Rob Buckingham, Head of RACE at the UK Atomic Energy Authority, commented on the policy ThinkIn: “Robotics includes both tools that are physically discrete from us and physical augmentation. In either case the interface between person and machine is going to be a field of rapid development driven at least in part by gaming and zooming.

The much bigger part is the informed discussion with people, with society, about the world we want to live in. Are we Canute (spoiler alert – it doesn’t end well) or are we the voice of sustainable democracy that values both people and nature?

I think Living Labs are going to sit at the heart of this… physical places where we explore the issues and opportunities together. In my field of nuclear, mock-ups have always been sensible because experimenting with the real thing is only allowed in exceptional circumstances. My hope is that we will invest in many Living Labs around the country that enable us, collectively, to explore the benefits and unintended consequences of our creativity. Of course, we might expect all of the Living Labs to be connected by data and the management of data; indeed we might expect common tech platforms and digital models of ‘nearly everything’ to be one of the highest value spin-offs.”

Let’s talk about the future of air cargo.

You invest in the future you want to live in. I want to invest my time in the future of rapid logistics.

Three years ago I set out on a journey to build a future where one-day delivery is available anywhere in the world by commercializing high-precision, low-cost automated airdrops. In the beginning, the vision seemed so grand and unachievable as to be almost silly. A year ago we began assembling a top-notch team of engineers, aviators and business leaders to help solve this problem. After a lot of blood, sweat, and tears, we arrive at the present day with the announcement of our $8M seed round, backed by some amazing capital partners and a growing coalition excited and engaged to accelerate DASH into its next chapter. On this occasion, we have been reflecting a lot on the journey and the “why” that inspired this endeavor all those years ago.

Why Does This Problem Exist?

To those of us fortunate enough to live in large, well-infrastructured metropolitan cities, deliveries and logistics aren’t an issue we often consider. We expect our Amazon Prime, UPS, and FedEx packages to arrive the next day or within the standard 3-5 business days. If you live anywhere else, these networks grind to a halt trying to deliver. For all its scale, Amazon Prime serves less than 60 percent of zip codes in the US with free 2-day Prime shipping. The rural access index shows that over 3 billion people live in rural settings and over 700 million people don’t live within easy access to all-weather roads at all. Ask manufacturers in need of critical spare parts in Montana, earthquake rescue personnel in Nepal, grocery store owners in mountainous Colombia, or anyone on the roughly 2,000 inhabited islands of the Philippines if rapid logistics feels solved or affordable. The short answer – it’s not.

Before a package is delivered to your door, it needs a middle-mile solution to move it from region to region. There is only one technology that can cross oceans, mountains, and continents in a single day, and that is air cargo.

Air cargo accounts for less than one percent of all pounds delivered, but over 33 percent of all shipping revenue globally. We collectively believe in air cargo and rely on it for our most critical and immediate deliveries, including a growing share of e-commerce and just-in-time deliveries. If you want something fast, it’s coming by airplane. There is no substitute.

However, the efficiency and applications of air cargo break down when the plane has to land. While a 737 can fly at over 600 mph for thousands of miles, it requires hundreds of millions of dollars in infrastructure (airports and ground trucking) to get cargo from the airport to your local warehouse, which makes commercial deliveries very costly. That ground infrastructure has to exist on every island in the Philippines, in every mountain town in Colombia and in every town in Nepal. It has to reach both sides of every mountain and every island, anywhere you want things fast. Even when you can land at a modern airport, takeoff and the fuel burned during climb can account for upwards of 30 percent of an entire flight’s fuel use, and every landing and takeoff cycle drives up insurance and maintenance costs. This problem is so intrinsic to air cargo and logistics that it almost seems natural. Well, of course flyover states and rural areas don’t get cheap, fast, and convenient deliveries. Are you going to land that 737 at 20 towns on the way from LA to New York City? We fly over potential customers on our way to big cities with modern infrastructure, even though only a minority of the world’s population lives there. Something has to change.

Our solution

The solution is simple in concept, yet building it has been one of the most complex tasks I’ve had the honor of working on in my engineering career: land the package, not the plane. By commercializing high-precision, low-cost airdrops, you can decouple airplanes from landings, runways and trucks. Suddenly a delivery to rural Kansas is just as fast and cost-effective as one to a major coastal city. Fuel, insurance, utilization rate, service quality, coverage area and more: so many metrics improve significantly, almost overnight, if an existing aircraft can deliver directly to the last-mile sorting facility and bypass much of the complexity, cost and infrastructure needed for traditional hub-and-spoke networks.

Perhaps the most common question I received when I started DASH was: why hasn’t [insert your preferred enterprise organization here] done this before? Without taking a detour into why large enterprises have historically struggled with innovation, the simple answer is: because now is the time. Advances in IoT and in low size, weight and power (SWaP) flight controllers, coupled with a career spent implementing automation in safety-critical environments, meant that the necessary ingredients were ready. Tremendous credit is due to some of the most brilliant engineers, scientists and developers I’ve had the pleasure of working with, who took on the task of carving raw ideas and rough prototypes into aerospace-grade commercial products, and who had the courage to do so while working outside the confines of existing aerospace textbooks.

Beyond the intricacies of the technology, I had a personal reason to build this. My father’s family has its origins in Barbados. During hurricane season we would call, once the phone lines were restored, to ask, “Is everything okay?” Whether they would be spared that year often felt like a roll of the dice, a sick game of roulette that someone else would lose. On an island, nearly all help and aid must, by definition, come from abroad, but how can supplies be distributed when ports are destroyed, runways damaged and roads washed out? To me, it is a moral imperative not only to help, but also to build self-sustaining commercial solutions that can scale to help more in the future.

This thought process was put to the test in 2017, just weeks after I began to seriously contemplate and study the ideas that became DASH, when Hurricane Maria hit Puerto Rico. I awoke, like millions of others, to witness one of the worst hurricanes to make landfall in 100 years. That day we started making calls; 10 days later we were flying inland in a rented Cessna 208, delivering thousands of pounds of humanitarian supplies via airdrops to cut-off communities. The takeaway: if this could be done safely and legally with an idle FedEx feeder aircraft, and if people on the ground were willing and ready to pay for rapid logistics at the same price they would have paid anyway, why did it have to wait for a natural disaster to strike? DASH exists because no technology, process, or company can honestly claim delivery to anywhere, or even to most places, in under two days. Those of us in large cities have come to enjoy and expect fast delivery, yet in the same breath we cut the conversation short for people who live elsewhere. Our solution exists, and with the hard work of an amazingly talented team and excellent partners, it will continue to scale and grow until one day that claim can be made.

Our Future

The story of DASH is far from over. Our vision is rapid logistics anywhere, and there is a flight path ahead of us to get there. Today, DASH is advancing the state of the art in precision airdrop technology; tomorrow, we aim to deliver into your community, wherever it is and whatever the circumstances. The entire globe deserves the same level of service and convenience. The list of everyone who has helped DASH get to where we are today is too long to include here, and it grows longer every day. Instead, I can offer this: look to the skies, and you may see your next delivery coming down safely and precisely to a location near you.

Joel Ifill is the founder and CEO of DASH Systems. He can be found at www.dashshipping.com and reached at inquiries@dashshipping.com. DASH is always on the hunt for talented roboticists, engineers and developers who enjoy aviation; inquire at HR@DASHshipping.com.