A soft robot, attached to a balloon and submerged in a transparent column of water, dives and surfaces, then dives and surfaces again, like a fish chasing flies. Soft robots have performed this kind of trick before. But unlike most soft robots, this one is made and operated with no hard or electronic parts. Inside, a soft, rubber computer tells the balloon when to ascend or descend. For the first time, this robot relies exclusively on soft digital logic.

In the last decade, soft robots have surged into the metal-dominant world of robotics. Grippers made from rubbery silicone materials are already used in assembly lines: Cushioned claws handle delicate foods like tomatoes, celery, and sausage links or extract bottles and sweaters from crates. In laboratories, the grippers can pick up slippery fish, live mice, and even insects, eliminating the need for further human interaction.

Soft robots already require simpler control systems than their hard counterparts. The grippers are so compliant that they simply cannot exert enough pressure to damage an object, and without the need to calibrate pressure, a simple on-off switch suffices. But until now, most soft robots have still relied on some hardware: Metal valves open and close channels of air that operate the rubbery grippers and arms, and a computer tells those valves when to move.

Now, researchers have built a soft computer using just rubber and air. “We’re emulating the thought process of an electronic computer, using only soft materials and pneumatic signals, replacing electronics with pressurized air,” says Daniel J. Preston, first author on a paper published in PNAS and a postdoctoral researcher working with George Whitesides, a Founding Core Faculty member of Harvard’s Wyss Institute for Biologically Inspired Engineering, and the Woodford L. and Ann A. Flowers University Professor at Harvard University’s Department of Chemistry and Chemical Biology.

To make decisions, computers use digital logic gates, electronic circuits that receive messages (inputs) and determine reactions (outputs) based on their programming. Our circuitry isn’t so different: When a doctor strikes a tendon below our kneecap (input), the nervous system is programmed to make the leg jerk (output).

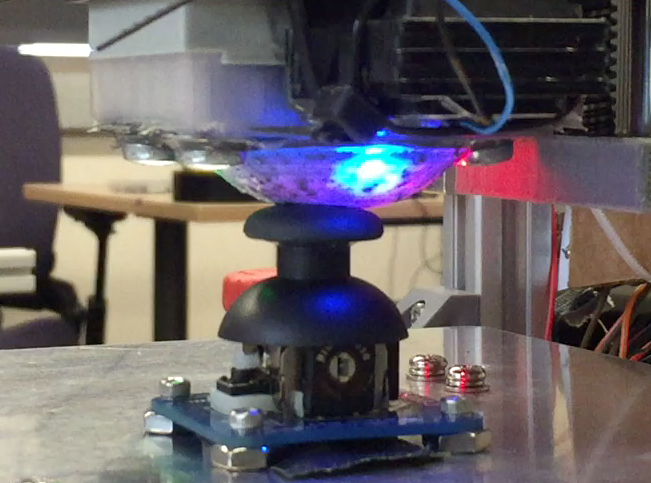

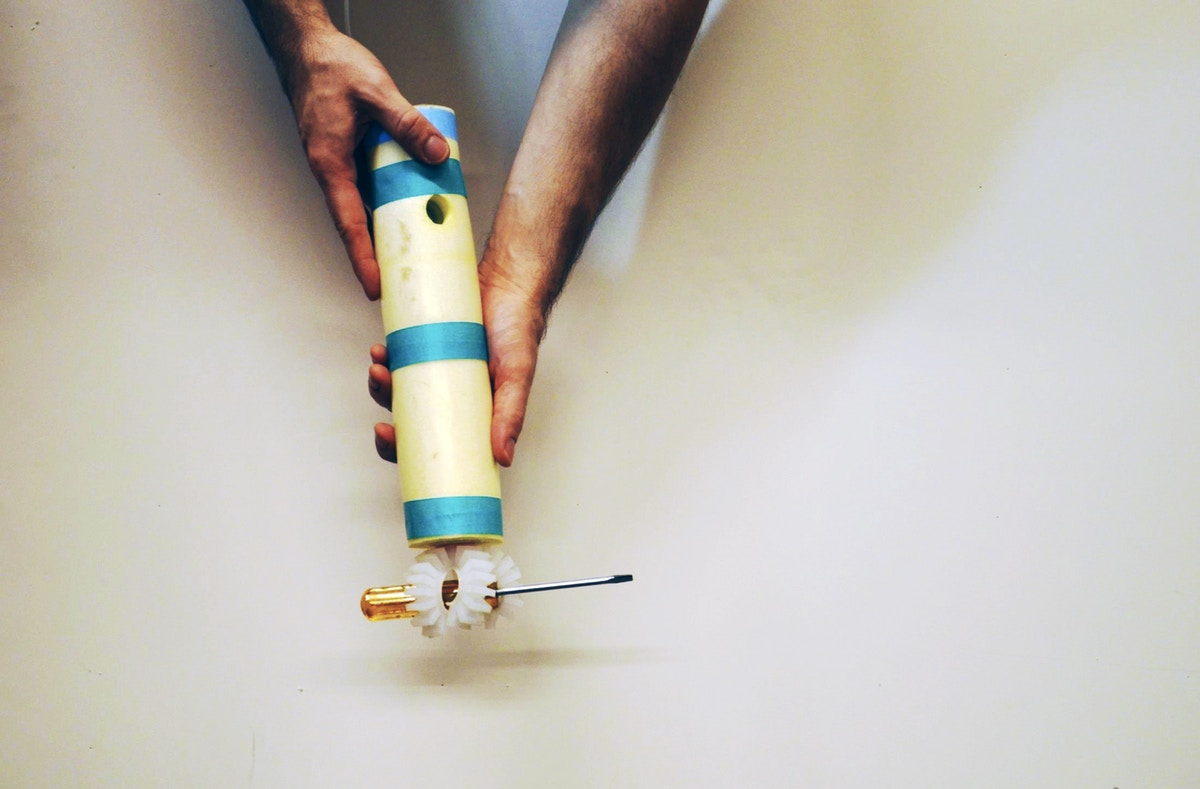

Preston’s soft computer mimics this system using silicone tubing and pressurized air. To achieve the minimum types of logic gates required for complex operations—in this case, NOT, AND, and OR—he programmed the soft valves to react to different air pressures. For the NOT logic gate, for example, if the input is high pressure, the output will be low pressure. With these three logic gates, Preston says, “you could replicate any behavior found on any electronic computer.”
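The three gates can be pictured with a short sketch. This is only an illustration of the logic, not Preston's valve design: the pressure values and the threshold below are hypothetical, since the article gives no actual numbers. The `xor_gate` function is an added example of how the three gates compose into more complex behavior.

```python
# Hypothetical pressures in kPa; the article does not state real values.
LOW_KPA = 0.0
HIGH_KPA = 20.0
THRESHOLD_KPA = 10.0  # assumed cutoff between "low" and "high"


def is_high(pressure_kpa: float) -> bool:
    """Treat any pressure at or above the threshold as a logical 1."""
    return pressure_kpa >= THRESHOLD_KPA


def not_gate(a: float) -> float:
    """High-pressure input gives low-pressure output, and vice versa."""
    return LOW_KPA if is_high(a) else HIGH_KPA


def and_gate(a: float, b: float) -> float:
    """Output is high only when both inputs are high."""
    return HIGH_KPA if is_high(a) and is_high(b) else LOW_KPA


def or_gate(a: float, b: float) -> float:
    """Output is high when at least one input is high."""
    return HIGH_KPA if is_high(a) or is_high(b) else LOW_KPA


def xor_gate(a: float, b: float) -> float:
    """Exclusive OR built only from the NOT, AND, and OR gates above."""
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))
```

Chaining gates this way, with the output pressure of one feeding the input of the next, is the sense in which a small set of gates can stand in for more elaborate circuits.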

The bobbing fish-like robot in the water tank, for example, uses an environmental pressure sensor (a modified NOT gate) to determine what action to take. The robot dives when the circuit senses low pressure at the top of the tank and surfaces when it senses high pressure at depth. The robot can also surface on command if someone pushes an external soft button.
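The dive-and-surface cycle described above can be sketched as a small simulation. Everything numeric here is an assumption for illustration: the two pressure thresholds, the step size, and the names `next_action` and `simulate` are hypothetical and do not come from the paper. The only physical fact used is that water pressure rises by about 9.81 kPa per meter of depth.

```python
ATMOSPHERIC_KPA = 101.3   # approximate pressure at the water surface
KPA_PER_METER = 9.81      # hydrostatic pressure gain per meter of water
TOP_KPA = 102.0           # assumed "low pressure" threshold near the surface
DEPTH_KPA = 108.0         # assumed "high pressure" threshold at depth


def next_action(current: str, ambient_kpa: float, button_pressed: bool) -> str:
    """Choose 'dive' or 'surface' from sensed pressure and the soft button."""
    if button_pressed:
        return "surface"          # the external button overrides the sensor
    if ambient_kpa >= DEPTH_KPA:
        return "surface"          # high pressure sensed at depth
    if ambient_kpa <= TOP_KPA:
        return "dive"             # low pressure sensed near the top
    return current                # between thresholds: keep going


def simulate(steps: int, step_m: float = 0.1) -> list[str]:
    """Run the robot for a number of steps and record each action taken."""
    depth_m, action, history = 0.0, "dive", []
    for _ in range(steps):
        ambient = ATMOSPHERIC_KPA + KPA_PER_METER * depth_m
        action = next_action(action, ambient, button_pressed=False)
        change = step_m if action == "dive" else -step_m
        depth_m = max(0.0, depth_m + change)   # cannot rise above the surface
        history.append(action)
    return history
```

Calling `simulate(40)` produces a repeating run of "dive" actions followed by "surface" actions, which mirrors the bobbing behavior; passing `button_pressed=True` to `next_action` always yields "surface".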

Robots built with only soft parts have several benefits. In industrial settings, like automobile factories, massive metal machines operate with blind speed and power. If a human gets in the way, a hard robot could cause irreparable damage. But if a soft robot bumps into a human, Preston says, “you wouldn’t have to worry about injury or a catastrophic failure.” Soft robots can only exert so much force.

But soft robots are more than just safer: They are generally cheaper and simpler to make, lightweight, durable, and resistant to damage and corrosive materials. Add intelligence and soft robots could be used for much more than just handling tomatoes. For example, a robot could sense a user’s temperature and deliver a soft squeeze to indicate a fever, alert a diver when the water pressure rises too high, or push through debris after a natural disaster to help find victims and offer aid.

Soft robots can also venture where electronics struggle: High-radiation fields, like those produced after a nuclear malfunction or in outer space, and the inside of magnetic resonance imaging (MRI) machines. In the wake of a hurricane or flooding, a hardy soft robot could manage hazardous terrain and noxious air. “If it gets run over by a car, it just keeps going, which is something we don’t have with hard robots,” Preston says.

Preston and colleagues are not the first to control robots without electronics. Other research teams have designed microfluidic circuits, which can use liquid and air to create nonelectronic logic gates. One microfluidic oscillator helped a soft octopus-shaped robot flail all eight arms.

Yet, microfluidic logic circuits often rely on hard materials like glass or hard plastics, and they use such thin channels that only small amounts of air can move through at a time, slowing the robot’s motion. In comparison, Preston’s channels are larger—close to one millimeter in diameter—which enables much faster air flow rates. His air-based grippers can grasp an object in a matter of seconds.

Microfluidic circuits are also less energy efficient. Even at rest, the devices use a pneumatic resistor, which flows air from the atmosphere to either a vacuum or pressure source to maintain stasis. Preston’s circuits require no energy input when dormant. Such energy conservation could be crucial in emergency or disaster situations where the robots travel far from a reliable energy source.

The soft, pneumatic robots also offer an enticing possibility: Invisibility. Depending on which material Preston selects, he could design a robot that is index-matched to a specific substance. So, if he chooses a material that is index-matched to water, the robot would appear transparent when submerged. In the future, he and his colleagues hope to create autonomous robots that are invisible to the naked eye or even to sonar. “It’s just a matter of choosing the right materials,” he says.

For Preston, the right materials are elastomers or rubbers. While other fields chase higher power with machine learning and artificial intelligence, the Whitesides team turns away from the mounting complexity. “There’s a lot of capability there,” Preston says, “but it’s also good to take a step back and think about whether or not there’s a simpler way to do things that gives you the same result, especially if it’s not only simpler, it’s also cheaper.”