Tesla’s Optimus robot isn’t very impressive – but it may be a sign of better things to come

By Wafa Johal (Senior Lecturer, Computing & Information Systems, The University of Melbourne)

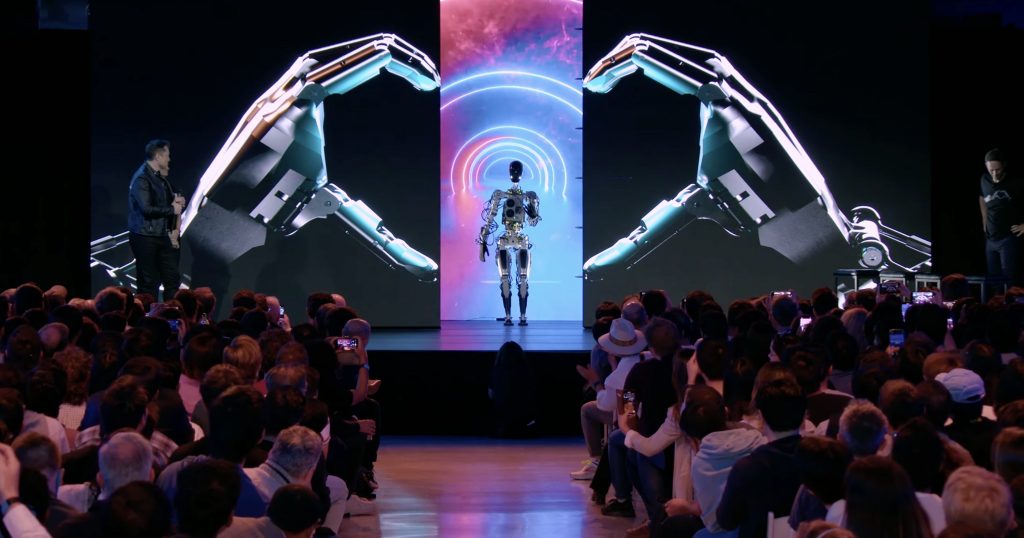

In August 2021, Tesla CEO Elon Musk announced the electric car manufacturer was planning to get into the robot business. In a presentation accompanied by a human dressed as a robot, Musk said work was beginning on a “friendly” humanoid robot to “navigate through a world built for humans and eliminate dangerous, repetitive and boring tasks”.

Musk has now unveiled a prototype of the robot, called Optimus, which he hopes to mass-produce and sell for less than US$20,000 (A$31,000).

At the unveiling, the robot walked on a flat surface and waved to the crowd, and a video showed it doing simple manual tasks such as carrying and lifting. As a robotics researcher, I didn’t find the demonstration very impressive – but I am hopeful it will lead to bigger and better things.

Why would we want humanoid robots?

Most of the robots used today don’t look anything like people. Instead, they are machines designed to carry out a specific purpose, like the industrial robots used in factories or the robot vacuum cleaner you might have in your house.

So why would you want one shaped like a human? The basic answer is they would be able to operate in environments designed for humans.

Unlike industrial robots, humanoid robots might be able to move around and interact with humans. Unlike robot vacuum cleaners, they might be able to go up stairs or traverse uneven terrain.

And as well as practical considerations, the idea of “artificial humans” has long had an appeal for inventors and science-fiction writers!

Room for improvement

Based on what we saw in the Tesla presentation, Optimus is a long way from being able to operate with humans or in human environments. The capabilities showcased fall far short of the state of the art in humanoid robotics.

The Atlas robot made by Boston Dynamics, for example, can walk outdoors and carry out flips and other acrobatic manoeuvres.

And while Atlas is an experimental system, even the commercially available Digit from Agility Robotics is much more capable than what we have seen from Optimus. Digit can walk on various terrains, avoid obstacles, rebalance itself when bumped, and pick up and put down objects.

Bipedal walking (on two feet) alone is no longer a great achievement for a robot. Indeed, with a bit of knowledge and determination you can build such a robot yourself using open-source software.

There was also no sign in the Optimus presentation of how it will interact with humans. This will be essential for any robot that works in human environments: not only for collaborating with humans, but also for basic safety.

It can be very tricky for a robot to accomplish seemingly simple tasks such as handing an object to a human, but this is something we would want a domestic humanoid robot to be able to do.

Sceptical consumers

Others have tried to build and sell humanoid robots in the past, such as Honda’s ASIMO and SoftBank’s Pepper. But so far they have never really taken off.

Amazon’s recently released Astro robot may make inroads here, but it may also go the way of its predecessors.

Consumers seem to be sceptical of robots. To date, the only widely adopted household robots are the Roomba-like vacuum cleaners, which have been available since 2002.

To succeed, a humanoid robot will need to be able to do something humans can’t to justify the price tag. At this stage the use case for Optimus is still not very clear.

Hope for the future

Despite these criticisms, I am hopeful about the Optimus project. It is still in the very early stages, and the presentation seemed to be aimed at recruiting new staff as much as anything else.

Tesla certainly has plenty of resources to throw at the problem. We know it has the capacity to mass produce the robots if development gets that far.

Musk’s knack for gaining attention may also be helpful – not only for attracting talent to the project, but also for drumming up interest among consumers.

Robotics is a challenging field, and it’s difficult to move fast. I hope Optimus succeeds, both to make something cool we can use – and to push the field of robotics forward.

Wafa Johal receives funding from the Australian Research Council.

This article appeared in The Conversation.

Bipedal robot achieves Guinness World Record in 100 metres

Cassie the robot sets 100-metre record, photo by Kegan Sims.

By Steve Lundeberg

Cassie the robot, invented at the Oregon State University College of Engineering and produced by OSU spinout company Agility Robotics, has established a Guinness World Record for the fastest 100 metres by a bipedal robot.

Cassie clocked the historic time of 24.73 seconds at OSU’s Whyte Track and Field Center, starting from a standing position and returning to that position after the sprint, with no falls.

The 100-metre record builds on earlier achievements by the robot, including traversing five kilometres in 2021 in just over 53 minutes. Cassie, the first bipedal robot to use machine learning to control a running gait on outdoor terrain, completed the 5K on Oregon State’s campus untethered and on a single battery charge.
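To put the two results on a common footing, the average speeds implied by the figures above can be worked out in a few lines. This is only a back-of-the-envelope sketch: the 5K time is taken as exactly 53 minutes, although the run took "just over" that, and the function name is illustrative rather than anything used by the OSU team.

```python
def average_speed_mps(distance_m: float, time_s: float) -> float:
    """Average speed in metres per second."""
    return distance_m / time_s


sprint = average_speed_mps(100, 24.73)       # 100-metre record run
five_k = average_speed_mps(5_000, 53 * 60)   # 5K, assuming exactly 53 minutes

print(f"100 m sprint: {sprint:.2f} m/s ({sprint * 3.6:.1f} km/h)")
print(f"5K run:       {five_k:.2f} m/s ({five_k * 3.6:.1f} km/h)")
print(f"Sprint vs 5K average speed ratio: {sprint / five_k:.1f}")
```

On these assumptions the sprint works out to roughly 4 metres per second, against about 1.6 metres per second for the 5K. Both figures are averages, and the sprint time includes starting from and returning to a standing position.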

Cassie was developed under the direction of Oregon State robotics professor Jonathan Hurst. The robot has knees that bend like an ostrich’s and operates with no cameras or external sensors, essentially as if blind.

Since Cassie’s introduction in 2017, in collaboration with artificial intelligence professor Alan Fern, OSU students have been exploring machine learning options in Oregon State’s Dynamic Robotics and AI Lab.

“We have been building the understanding to achieve this world record over the past several years, running a 5K and also going up and down stairs,” said graduate student Devin Crowley, who led the Guinness effort. “Machine learning approaches have long been used for pattern recognition, such as image recognition, but generating control behaviors for robots is new and different.”

The Dynamic Robotics and AI Lab melds physics with AI approaches more commonly used with data and simulation to generate novel results in robot control, Fern said. Students and researchers come from a range of backgrounds including mechanical engineering, robotics and computer science.

“Cassie has been a platform for pioneering research in robot learning for locomotion,” Crowley said. “Completing a 5K was about reliability and endurance, which left open the question of, how fast can Cassie run? That led the research team to shift its focus to speed.”

Cassie was trained for the equivalent of a full year in a simulation environment, compressed to a week through a computing technique known as parallelization – multiple processes and calculations happening at the same time, allowing Cassie to go through a range of training experiences simultaneously.
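The idea can be illustrated with a toy sketch. Everything below is an assumption made for illustration: the episode function is a hypothetical stand-in for a physics simulator, and the thread pool merely shows the structure of running many independent episodes at once. It is not the OSU team's actual training setup, which is not described here.

```python
from concurrent.futures import ThreadPoolExecutor
import random

SIM_DAYS = 365   # simulated experience: "a full year"
WALL_DAYS = 7    # wall-clock time: "a week"


def required_speedup(sim_days: float, wall_days: float) -> float:
    """How many times faster than real time training must run overall."""
    return sim_days / wall_days


def run_episode(seed: int) -> float:
    """Hypothetical stand-in for one simulated training episode.

    Returns the simulated seconds the episode lasted; a real simulator
    would step a physics model and a control policy here.
    """
    rng = random.Random(seed)        # independent, reproducible randomness
    return rng.uniform(5.0, 15.0)    # pretend the episode lasted 5 to 15 s


def collect_experience(num_episodes: int, workers: int) -> float:
    """Run episodes concurrently and sum the simulated time gathered."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(run_episode, range(num_episodes)))


speedup = required_speedup(SIM_DAYS, WALL_DAYS)
print(f"Overall speed-up needed: about {speedup:.0f}x real time")
print(f"Simulated seconds from 100 toy episodes: {collect_experience(100, workers=8):.1f}")
```

A year of experience in a week implies an overall speed-up of about 52 times real time, which could come from many simulations running side by side, from each simulation running faster than real time, or from both. Python threads do not speed up CPU-bound work, so a real system would more likely use separate processes, multiple machines, or a vectorised simulator.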

“Cassie can perform a spectrum of different gaits but as we specialized it for speed we began to wonder, which gaits are most efficient at each speed?” Crowley said. “This led to Cassie’s first optimized running gait and resulted in behavior that was strikingly similar to human biomechanics.”

The remaining challenge, a “deceptively difficult” one, was to get Cassie to reliably start from a free-standing position, run, and then return to the free-standing position without falling.

“Starting and stopping in a standing position are more difficult than the running part, similar to how taking off and landing are harder than actually flying a plane,” Fern said. “This 100-metre result was achieved by a deep collaboration between mechanical hardware design and advanced artificial intelligence for the control of that hardware.”

Hurst, chief technology officer at Agility Robotics and a robotics professor at Oregon State, said: “This may be the first bipedal robot to learn to run, but it won’t be the last. I believe control approaches like this are going to be a huge part of the future of robotics. The exciting part of this race is the potential. Using learned policies for robot control is a very new field, and this 100-metre dash is showing better performance than other control methods. I think progress is going to accelerate from here.”

Breaking through the mucus barrier

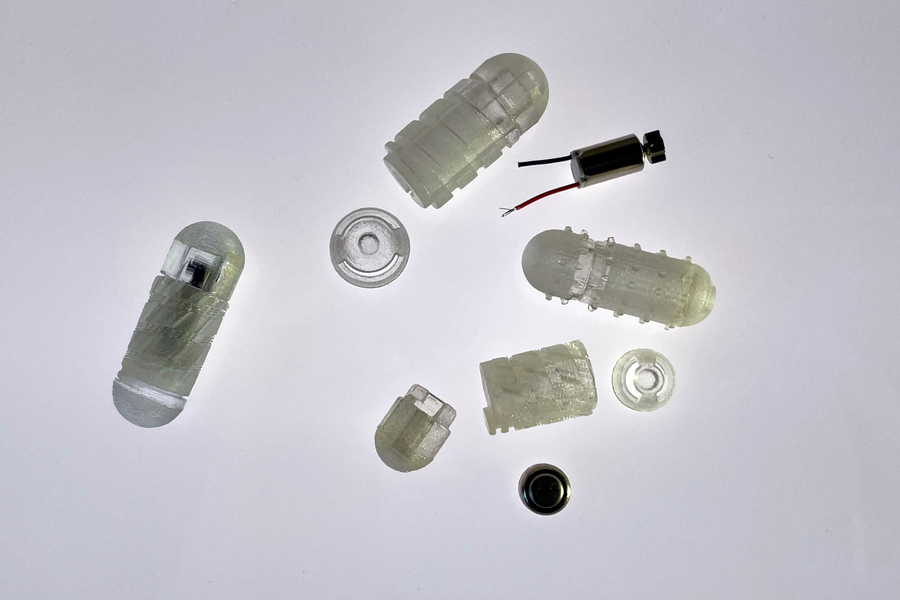

A new drug capsule developed at MIT can help large proteins such as insulin and small-molecule drugs be absorbed in the digestive tract. Image: Felice Frankel

By Anne Trafton | MIT News Office

One reason that it’s so difficult to deliver large protein drugs orally is that these drugs can’t pass through the mucus barrier that lines the digestive tract. This means that insulin and most other “biologic drugs” — drugs consisting of proteins or nucleic acids — have to be injected or administered in a hospital.

A new drug capsule developed at MIT may one day be able to replace those injections. The capsule has a robotic cap that spins and tunnels through the mucus barrier when it reaches the small intestine, allowing drugs carried by the capsule to pass into cells lining the intestine.

“By displacing the mucus, we can maximize the dispersion of the drug within a local area and enhance the absorption of both small molecules and macromolecules,” says Giovanni Traverso, the Karl van Tassel Career Development Assistant Professor of Mechanical Engineering at MIT and a gastroenterologist at Brigham and Women’s Hospital.

In a study appearing today in Science Robotics, the researchers demonstrated that they could use this approach to deliver insulin as well as vancomycin, an antibiotic peptide that currently has to be injected.

Shriya Srinivasan, a research affiliate at MIT’s Koch Institute for Integrative Cancer Research and a junior fellow at the Society of Fellows at Harvard University, is the lead author of the study.

Tunneling through

For several years, Traverso’s lab has been developing strategies to deliver protein drugs such as insulin orally. This is a difficult task because protein drugs tend to be broken down in the acidic environment of the digestive tract, and they also have difficulty penetrating the mucus barrier that lines the tract.

To overcome those obstacles, Srinivasan came up with the idea of creating a protective capsule that includes a mechanism that can tunnel through mucus, just as tunnel boring machines drill into soil and rock.

“I thought that if we could tunnel through the mucus, then we could deposit the drug directly on the epithelium,” she says. “The idea is that you would ingest this capsule and the outer layer would dissolve in the digestive tract, exposing all these features that start to churn through the mucus and clear it.”

The “RoboCap” capsule, which is about the size of a multivitamin, carries its drug payload in a small reservoir at one end and carries the tunneling features in its main body and surface. The capsule is coated with gelatin that can be tuned to dissolve at a specific pH.

When the coating dissolves, the change in pH triggers a tiny motor inside the RoboCap capsule to start spinning. This motion helps the capsule to tunnel into the mucus and displace it. The capsule is also coated with small studs that brush mucus away, similar to the action of a toothbrush.

The spinning motion also helps to erode the compartment that carries the drug, which is gradually released into the digestive tract.

“What the RoboCap does is transiently displace the initial mucus barrier and then enhance absorption by maximizing the dispersion of the drug locally,” Traverso says. “By combining all of these elements, we’re really maximizing our capacity to provide the optimal situation for the drug to be absorbed.”

Enhanced delivery

In tests in animals, the researchers used this capsule to deliver either insulin or vancomycin, a large peptide antibiotic that is used to treat a broad range of infections, including skin infections as well as infections affecting orthopedic implants. With the capsule, the researchers found that they could deliver 20 to 40 times more drug than a similar capsule without the tunneling mechanism.

Once the drug is released from the capsule, the capsule itself passes through the digestive tract on its own. The researchers found no sign of inflammation or irritation in the digestive tract after the capsule passed through, and they also observed that the mucus layer reforms within a few hours after being displaced by the capsule.

Another approach that some researchers have used to enhance oral delivery of drugs is to give them along with additional drugs that help them cross through the intestinal tissue. However, these enhancers often only work with certain drugs. Because the MIT team’s new approach relies solely on mechanical disruptions to the mucus barrier, it could potentially be applied to a broader set of drugs, Traverso says.

“Some of the chemical enhancers preferentially work with certain drug molecules,” he says. “Using mechanical methods of administration can potentially enable more drugs to have enhanced absorption.”

While the capsule used in this study released its payload in the small intestine, it could also be used to target the stomach or colon by changing the pH at which the gelatin coating dissolves. The researchers also plan to explore the possibility of delivering other protein drugs such as GLP-1 receptor agonists, which are sometimes used to treat type 2 diabetes. The capsules could also be used to deliver topical drugs to treat ulcerative colitis and other inflammatory conditions by maximizing the local concentration of the drugs in the tissue to help treat the inflammation.

The research was funded, in part, by the National Institutes of Health and MIT’s Department of Mechanical Engineering.

Other authors of the paper include Amro Alshareef, Alexandria Hwang, Ziliang Kang, Johannes Kuosmanen, Keiko Ishida, Joshua Jenkins, Sabrina Liu, Wiam Abdalla Mohammed Madani, Jochen Lennerz, Alison Hayward, Josh Morimoto, Nina Fitzgerald, and Robert Langer.