Magnetic sensors track muscle length

A small, bead-like magnet used in a new approach to measuring muscle position. Image: Courtesy of the researchers

By Anne Trafton | MIT News Office

Using a simple set of magnets, MIT researchers have come up with a sophisticated way to monitor muscle movements, which they hope will make it easier for people with amputations to control their prosthetic limbs.

In a new pair of papers, the researchers demonstrated the accuracy and safety of their magnet-based system, which can track the length of muscles during movement. The studies, performed in animals, offer hope that this strategy could be used to help people with prosthetic devices control them in a way that more closely mimics natural limb movement.

“These recent results demonstrate that this tool can be used outside the lab to track muscle movement during natural activity, and they also suggest that the magnetic implants are stable and biocompatible and that they don’t cause discomfort,” says Cameron Taylor, an MIT research scientist and co-lead author of both papers.

In one of the studies, the researchers showed that they could accurately measure the lengths of turkeys’ calf muscles as the birds ran, jumped, and performed other natural movements. In the other study, they showed that the small magnetic beads used for the measurements do not cause inflammation or other adverse effects when implanted in muscle.

“I am very excited for the clinical potential of this new technology to improve the control and efficacy of bionic limbs for persons with limb-loss,” says Hugh Herr, a professor of media arts and sciences, co-director of the K. Lisa Yang Center for Bionics at MIT, and an associate member of MIT’s McGovern Institute for Brain Research.

Herr is a senior author of both papers, which appear in the journal Frontiers in Bioengineering and Biotechnology. Thomas Roberts, a professor of ecology, evolution, and organismal biology at Brown University, is a senior author of the measurement study.

Tracking movement

Currently, powered prosthetic limbs are usually controlled using an approach known as surface electromyography (EMG). Electrodes attached to the surface of the skin or surgically implanted in the residual muscle of the amputated limb measure electrical signals from a person’s muscles, which are fed into the prosthesis to help it move the way the person wearing the limb intends.

However, that approach does not take into account any information about the muscle length or velocity, which could help to make the prosthetic movements more accurate.

Several years ago, the MIT team began working on a novel way to perform those kinds of muscle measurements, using an approach that they call magnetomicrometry. This strategy takes advantage of the permanent magnetic fields surrounding small beads implanted in a muscle. Using a credit-card-sized, compass-like sensor attached to the outside of the body, their system can track the distances between the two magnets. When a muscle contracts, the magnets move closer together, and when it flexes, they move further apart.

The new muscle measuring approach takes advantage of the magnetic attraction between two small beads implanted in a muscle. Using a small sensor attached to the outside of the body, the system can track the distances between the two magnets as the muscle contracts and flexes. Image: Courtesy of the researchers
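As a rough illustration of the quantity being tracked, the sketch below computes muscle length as the distance between the two beads and approximates muscle velocity by finite differences. It assumes bead positions have already been estimated; how the sensor derives those positions from magnetic field readings is not covered here. The function names, sample positions, and 1-millisecond sampling interval are all hypothetical.

```python
import math


def bead_distance(p1, p2):
    """Euclidean distance between two 3D bead positions (same units as the input)."""
    return math.dist(p1, p2)


def length_and_velocity(track_a, track_b, dt):
    """Return per-sample bead separations and finite-difference velocities.

    track_a, track_b: sequences of (x, y, z) positions, one per time step.
    dt: sampling interval in seconds.
    """
    lengths = [bead_distance(a, b) for a, b in zip(track_a, track_b)]
    velocities = [(l1 - l0) / dt for l0, l1 in zip(lengths, lengths[1:])]
    return lengths, velocities


# Hypothetical positions in millimeters, sampled every millisecond.
track_a = [(0.0, 0.0, 0.0)] * 3
track_b = [(30.0, 0.0, 0.0), (29.0, 0.0, 0.0), (28.0, 0.0, 0.0)]

lengths, velocities = length_and_velocity(track_a, track_b, dt=0.001)
print(lengths)     # separations in mm
print(velocities)  # negative values mean the beads are moving closer together
```

In this made-up trace the separation shrinks at each step, which corresponds to the contracting case described above.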

In a study published last year, the researchers showed that this system could be used to accurately measure small ankle movements when the beads were implanted in the calf muscles of turkeys. In one of the new studies, the researchers set out to see if the system could make accurate measurements during more natural movements in a nonlaboratory setting.

To do that, they created an obstacle course of ramps for the turkeys to climb and boxes for them to jump on and off of. The researchers used their magnetic sensor to track muscle movements during these activities, and found that the system could calculate muscle lengths in less than a millisecond.

They also compared their data to measurements taken using a more traditional approach known as fluoromicrometry, a type of X-ray technology that requires much larger equipment than magnetomicrometry. The magnetomicrometry measurements varied from those generated by fluoromicrometry by less than a millimeter, on average.
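One simple way to express this kind of agreement is the mean absolute difference between paired measurements. The sketch below is only an illustration of that calculation: the function name is hypothetical, the numbers are invented rather than taken from the study, and the researchers' actual comparison procedure may differ.

```python
def mean_absolute_difference(series_a, series_b):
    """Average of |a - b| over paired samples from two measurement methods."""
    if len(series_a) != len(series_b) or not series_a:
        raise ValueError("series must be non-empty and of equal length")
    return sum(abs(a - b) for a, b in zip(series_a, series_b)) / len(series_a)


# Invented muscle lengths in millimeters, for illustration only.
magnetomicrometry_mm = [30.2, 29.1, 27.8, 28.9]
fluoromicrometry_mm = [30.0, 29.5, 28.0, 29.0]

difference_mm = mean_absolute_difference(magnetomicrometry_mm, fluoromicrometry_mm)
print(f"mean absolute difference: {difference_mm:.3f} mm")
```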

“We’re able to provide the muscle-length tracking functionality of the room-sized X-ray equipment using a much smaller, portable package, and we’re able to collect the data continuously instead of being limited to the 10-second bursts that fluoromicrometry is limited to,” Taylor says.

Seong Ho Yeon, an MIT graduate student, is also a co-lead author of the measurement study. Other authors include MIT Research Support Associate Ellen Clarrissimeaux and former Brown University postdoc Mary Kate O’Donnell.

Biocompatibility

In the second paper, the researchers focused on the biocompatibility of the implants. They found that the magnets did not generate tissue scarring, inflammation, or other harmful effects. They also showed that the implanted magnets did not alter the turkeys’ gaits, suggesting they did not produce discomfort. William Clark, a postdoc at Brown, is the co-lead author of the biocompatibility study.

The researchers also showed that the implants remained stable for eight months, the length of the study, and did not migrate toward each other, as long as they were implanted at least 3 centimeters apart. The researchers envision that the beads, which consist of a magnetic core coated with gold and a polymer called Parylene, could remain in tissue indefinitely once implanted.

“Magnets don’t require an external power source, and after implanting them into the muscle, they can maintain the full strength of their magnetic field throughout the lifetime of the patient,” Taylor says.

The researchers are now planning to seek FDA approval to test the system in people with prosthetic limbs. They hope to use the sensor to control prostheses similar to the way surface EMG is used now: Measurements regarding the length of muscles will be fed into the control system of a prosthesis to help guide it to the position that the wearer intends.

“The place where this technology fills a need is in communicating those muscle lengths and velocities to a wearable robot, so that the robot can perform in a way that works in tandem with the human,” Taylor says. “We hope that magnetomicrometry will enable a person to control a wearable robot with the same comfort level and the same ease as someone would control their own limb.”

In addition to prosthetic limbs, those wearable robots could include robotic exoskeletons, which are worn outside the body to help people move their legs or arms more easily.

The research was funded by the Salah Foundation, the K. Lisa Yang Center for Bionics at MIT, the MIT Media Lab Consortia, the National Institutes of Health, and the National Science Foundation.

- PAPERS

Shoring up drones with artificial intelligence helps surf lifesavers spot sharks at the beach

Scientist develops an open-source algorithm for selecting a dictionary of a neurointerface

An automated system to clean restrooms in convenience stores

Sarcos Successfully Executes Field Trials Demonstrating Suite of Robotic Technologies for Maintenance, Inspection, and Repair in Shipyard Operations

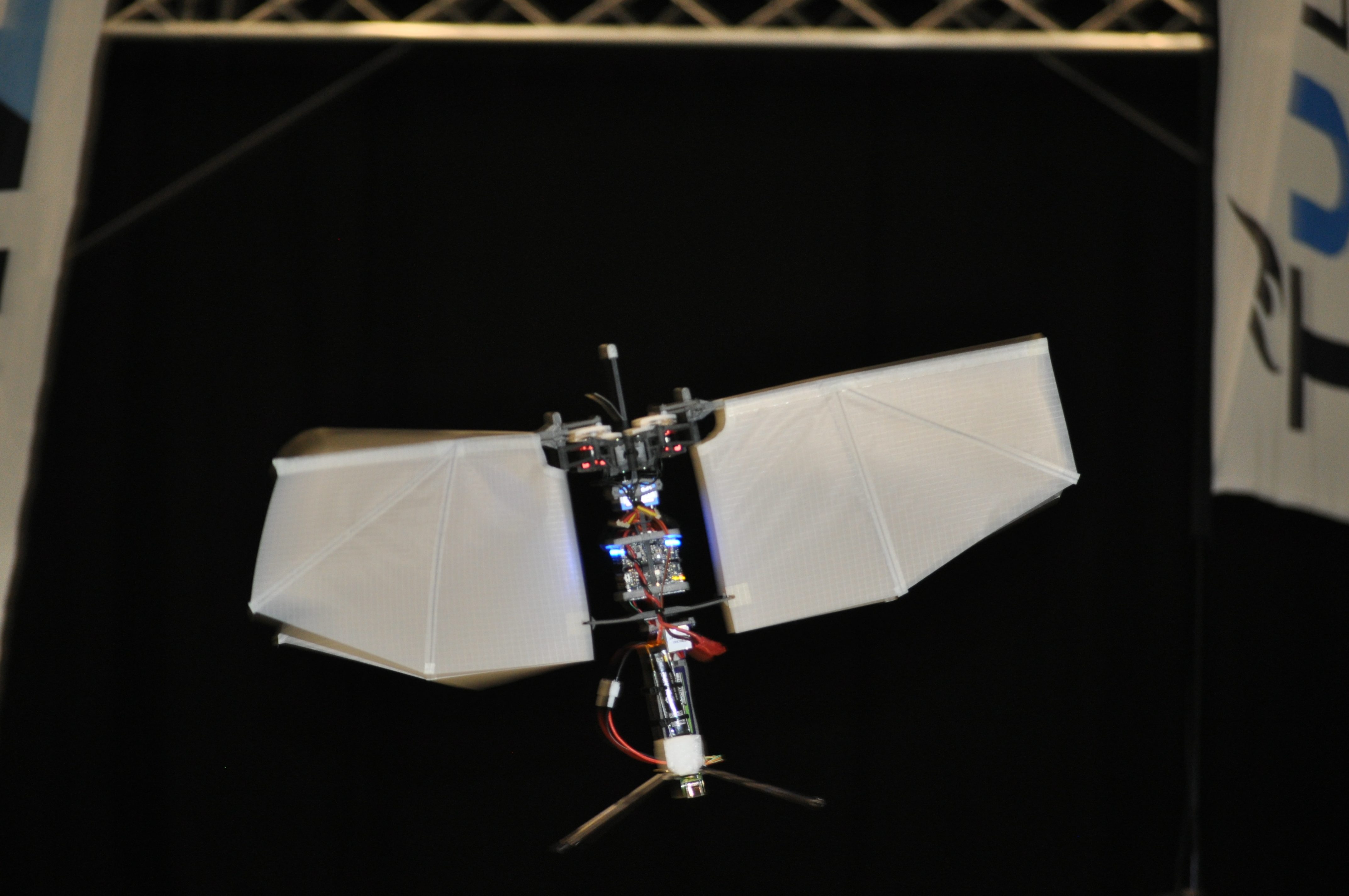

Big step towards tiny autonomous drones

Scientists have developed a theory that can explain how flying insects determine the gravity direction without using accelerometers. It also forms a substantial step in the creation of tiny, autonomous drones.

Scientists have discovered a novel manner for flying drones and insects to estimate the gravity direction. Whereas drones typically use accelerometers to this end, the way in which flying insects do this has until now been shrouded in mystery, since they lack a specific sense for acceleration. In an article published in Nature, scientists from TU Delft and Aix Marseille Université / CNRS in France have shown that drones can estimate the gravity direction by combining visual motion sensing with a model of how they move. The study is a great example of the synergy between technology and biology.

On the one hand, the new approach is an important step for the creation of autonomous tiny, insect-sized drones, since it requires fewer sensors. On the other hand, it forms a hypothesis for how insects control their attitude, as the theory forms a parsimonious explanation of multiple phenomena observed in biology.

The importance of finding the gravity direction

Successful flight requires knowing the direction of gravity. As ground-bound animals, we humans typically have no trouble determining which way is down. However, this becomes more difficult when flying. Indeed, the passengers in an airplane are normally not aware of the plane being slightly tilted sideways in the air to make a wide circle. When humans started to take to the skies, pilots relied purely on visually detecting the horizon line for determining the plane’s “attitude”, that is, its body orientation with respect to gravity. However, when flying through clouds the horizon line is no longer visible, which can lead to an increasingly wrong impression of what is up and down – with potentially disastrous consequences.

Drones and flying insects also need to control their attitude. Drones typically use accelerometers for determining the gravity direction. However, in flying insects no sensing organ for measuring accelerations has been found. Hence, it is still a mystery how insects estimate attitude, and some even question whether they estimate attitude at all.

Optic flow suffices for finding attitude

Although it is unknown how flying insects estimate and control their attitude, it is very well known that they visually observe motion by means of “optic flow”. Optic flow captures the relative motion between an observer and its environment. For example, when sitting in a train, trees close by seem to move very fast (have a large optic flow), while mountains in the distance seem to move very slowly (have a small optic flow).
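The train example can be made concrete with the standard relation for translational optic flow: for an observer moving at speed v, a point at distance d and at angle θ from the direction of travel sweeps across the view at roughly v·sin(θ)/d radians per second. The sketch below applies that relation; the function name, the train speed, and the distances are assumptions chosen for illustration.

```python
import math


def optic_flow(speed, distance, bearing_rad=math.pi / 2):
    """Angular velocity (rad/s) of a static point seen by a translating observer.

    speed: observer speed in m/s.
    distance: distance to the point in m.
    bearing_rad: angle between the direction of travel and the line of sight;
                 pi/2 means looking straight out of the side window.
    """
    return speed * math.sin(bearing_rad) / distance


train_speed = 30.0  # m/s, assumed
tree_flow = optic_flow(train_speed, distance=10.0)          # nearby tree
mountain_flow = optic_flow(train_speed, distance=10_000.0)  # distant mountain

print(f"tree: {tree_flow:.3f} rad/s, mountain: {mountain_flow:.4f} rad/s")
```

With these assumed values the nearby tree produces an optic flow a thousand times larger than the distant mountain, matching the intuition in the example.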

“Optic flow itself carries no information on attitude. However, we found out that combining optic flow with a motion model allows us to retrieve the gravity direction,” says Guido de Croon, full professor of bio-inspired micro air vehicles at TU Delft. “Having a motion model means that a robot or animal can predict how it will move when taking actions. For example, drones can predict what will happen when they spin their two right propellers faster than their left propellers. Since a drone’s attitude determines in which direction it accelerates, and this direction can be picked up by changes in optic flow, the combination allows a drone to determine its attitude.”
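The link between attitude, acceleration, and optic flow can be sketched with a deliberately simplified toy model. This is not the estimator from the Nature article. It assumes a drone at a known, constant height looking straight down, thrust that exactly balances gravity vertically, no drag, and optic flow caused by sideways translation only. Under those assumptions a roll angle φ gives a sideways acceleration of g·tan(φ), and sideways velocity equals optic flow times height. All names and numbers below are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration in m/s^2


def roll_from_flow_change(flow_prev, flow_next, height, dt):
    """Estimate roll (rad) from two ventral optic-flow readings taken dt seconds apart.

    Toy motion model (assumed): velocity = flow * height, and
    sideways acceleration = G * tan(roll).
    """
    v_prev = flow_prev * height
    v_next = flow_next * height
    accel = (v_next - v_prev) / dt
    return math.atan2(accel, G)


# Simulate a drone that starts at rest and holds a 0.1 rad roll for 0.1 s.
true_roll = 0.1
height = 2.0  # m, assumed known
dt = 0.1      # s
velocity_after = G * math.tan(true_roll) * dt

estimated_roll = roll_from_flow_change(
    flow_prev=0.0,
    flow_next=velocity_after / height,
    height=height,
    dt=dt,
)
print(f"true roll: {true_roll:.3f} rad, estimated roll: {estimated_roll:.3f} rad")
```

The toy model recovers the roll angle only because the height and the motion model are assumed to be known exactly; a practical estimator would need to handle noise and unknown distances.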

The theoretical analysis in the article shows that finding the gravity direction with optic flow works under almost any condition, except for specific cases such as when the observer is completely still. “Whereas engineers would find such an observability problem unacceptable, we hypothesise that nature has simply accepted it,” says Guido de Croon. “In the article we provide a theoretical proof that despite this problem, an attitude controller will still work around hover at the cost of slight oscillations – reminiscent of the more erratic flight behaviour of flying insects.”

Implications for robotics

The researchers confirmed the theory’s validity with robotic implementations, demonstrating its promise for the field of robotics. De Croon: “Tiny flapping-wing drones can be useful for tasks like search-and-rescue or pollination. Designing such drones means dealing with a major challenge that nature also had to face: how to achieve a fully autonomous system subject to extreme payload restrictions. This makes even tiny accelerometers a considerable burden. Our proposed theory will contribute to the design of tiny drones by allowing for a smaller sensor suite.”

Biological insights

The proposed theory has the potential to give insight into various biological phenomena. “It was known that optic flow played a role in attitude control, but until now the precise mechanism for this was unclear,” explains Franck Ruffier, bio-roboticist and director of research at Aix Marseille Université / CNRS. “The proposed theory can explain how flying insects succeed in estimating and controlling their attitude even in difficult, cluttered environments where the horizon line is not visible. It also provides insight into other phenomena, for example, why locusts fly less well when their ocelli (eyes on the top of their heads) are occluded.”

“We expect that novel biological experiments, specifically designed for testing our theory, will be necessary for verifying the use of the proposed mechanism in insects,” adds Franck Ruffier.

The original publication appears in Nature. The scientific article shows how the synergy between robotics and biology can lead to technological advances and novel avenues for biological research.

The post Big step towards tiny autonomous drones appeared first on RoboHouse.