Untangle your hair with help from robots



DNA-inspired ‘supercoiling’ fibres could make powerful artificial muscles for robots


The double helix of DNA is one of the most iconic symbols in science. By imitating the structure of this complex genetic molecule, we have found a way to make artificial muscle fibres far more powerful than those found in nature, with potential applications in many kinds of miniature machinery such as prosthetic hands and dextrous robotic devices.

The power of the helix

DNA is not the only helix in nature. Flip through any biology textbook and you’ll see helices everywhere, from the alpha-helix shapes of individual proteins to the “coiled coil” helices of fibrous protein assemblies like keratin in hair.

Some bacteria, such as spirochetes, adopt helical shapes. Even the cell walls of plants can contain helically arranged cellulose fibres.

Muscle tissue, too, is composed of helically wrapped proteins that form thin filaments. There are many other examples, which raises the question of whether the helix confers a particular evolutionary advantage.

Many of these naturally occurring helical structures are involved in making things move, like the opening of seed pods and the twisting of trunks, tongues and tentacles. These systems share a common structure: helically oriented fibres embedded in a squishy matrix, an arrangement that allows complex mechanical actions like bending, twisting, lengthening and shortening, or coiling.

This versatility in achieving complex shapeshifting may hint at the reason for the prevalence of helices in nature.

Fibres in a twist

Ten years ago, my work on artificial muscles led me to think a lot about helices. My colleagues and I discovered a simple way to make powerful rotating artificial muscle fibres: twisting synthetic yarns.

These yarn fibres could rotate by untwisting when we expanded the volume of the yarn, whether by heating it, by making it absorb small molecules, or by charging it like a battery. Shrinking the yarn caused the fibres to re-twist.


We demonstrated that these fibres could spin a rotor at speeds of up to 11,500 revolutions per minute. Although the fibres were small, we showed they could produce about as much torque per kilogram as large electric motors.

The key was to make sure the helically arranged filaments in the yarn were quite stiff. To accommodate an overall volume increase in the yarn, the individual filaments must either stretch in length or untwist. When the filaments are too stiff to stretch, the result is untwisting of the yarn.
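A simplified geometric picture makes this trade-off concrete. If a single filament of length l winds N times around a yarn of radius r and length L, unrolling the helix gives a right triangle, so l² = L² + (2πrN)². The sketch below assumes an inextensible filament on the yarn surface and a yarn whose length stays fixed; these are illustrative simplifications, not a model from the research described here, and the dimensions are hypothetical.

```python
import math

def turns_for_radius(filament_length, yarn_length, radius):
    """Number of helical turns N for an inextensible filament.

    Unrolling the helix gives l**2 = L**2 + (2*pi*r*N)**2, so
    N = sqrt(l**2 - L**2) / (2*pi*r).
    Assumptions: one filament on the yarn surface, fixed filament
    length l, fixed yarn length L.
    """
    if filament_length < yarn_length:
        raise ValueError("filament cannot be shorter than the yarn")
    return math.sqrt(filament_length**2 - yarn_length**2) / (2 * math.pi * radius)

# Hypothetical dimensions (arbitrary units): a 10-unit filament on a 9-unit yarn.
before = turns_for_radius(10.0, 9.0, radius=0.20)
after = turns_for_radius(10.0, 9.0, radius=0.25)  # yarn swells by 25% in radius

print(f"turns before swelling: {before:.2f}")
print(f"turns after swelling:  {after:.2f}")
```

Because N is inversely proportional to r under these assumptions, a 25% increase in radius removes 20% of the turns: the yarn untwists. If the filament could stretch instead, l would grow and the twist could be preserved.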

Learning from DNA

More recently, I realised DNA molecules behave like our untwisting yarns. Biologists studying single DNA molecules showed that double-stranded DNA unwinds when treated with small molecules that insert themselves inside the double helix structure.

The backbone of DNA is a stiff chain of molecules called sugar phosphates, so when the small inserted molecules push the two strands of DNA apart the double helix unwinds. Experiments also showed that, if the ends of the DNA are tethered to stop them rotating, the untwisting leads to “supercoiling”: the DNA molecule forms a loop that wraps around itself.
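The tethered-end behaviour follows from a standard result in DNA topology: the linking number Lk (how many times one strand winds around the other) equals the twist Tw plus the writhe Wr (the coiling of the helix axis itself), Lk = Tw + Wr. When the ends cannot rotate, Lk cannot change, so any twist removed by unwinding must reappear as writhe, which is supercoiling. The numbers in this sketch are illustrative, not measured values.

```python
def writhe_after_unwinding(twist_before, writhe_before, twist_removed):
    """Return (twist, writhe) after unwinding, with linking number conserved.

    Uses Lk = Tw + Wr. With the ends unable to rotate, Lk stays fixed,
    so every turn of twist that is removed reappears as a turn of writhe.
    """
    linking_number = twist_before + writhe_before  # fixed by the tethered ends
    twist_after = twist_before - twist_removed
    writhe_after = linking_number - twist_after
    return twist_after, writhe_after

# Illustrative numbers: a relaxed molecule with 100 helical turns and no writhe,
# from which inserted small molecules remove 5 turns of twist.
tw, wr = writhe_after_unwinding(twist_before=100, writhe_before=0, twist_removed=5)
print(f"twist: {tw} turns, writhe: {wr} turns")  # twist: 95 turns, writhe: 5 turns
```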


In fact, special proteins induce coordinated supercoiling in our cells to pack DNA molecules into the tiny nucleus.

We also see supercoiling in everyday life, for example when a garden hose becomes tangled. Twisting any long fibre can produce supercoiling, which is known as “snarling” in textile processing or “hockling” when cables become snagged.

Supercoiling for stronger ‘artificial muscles’

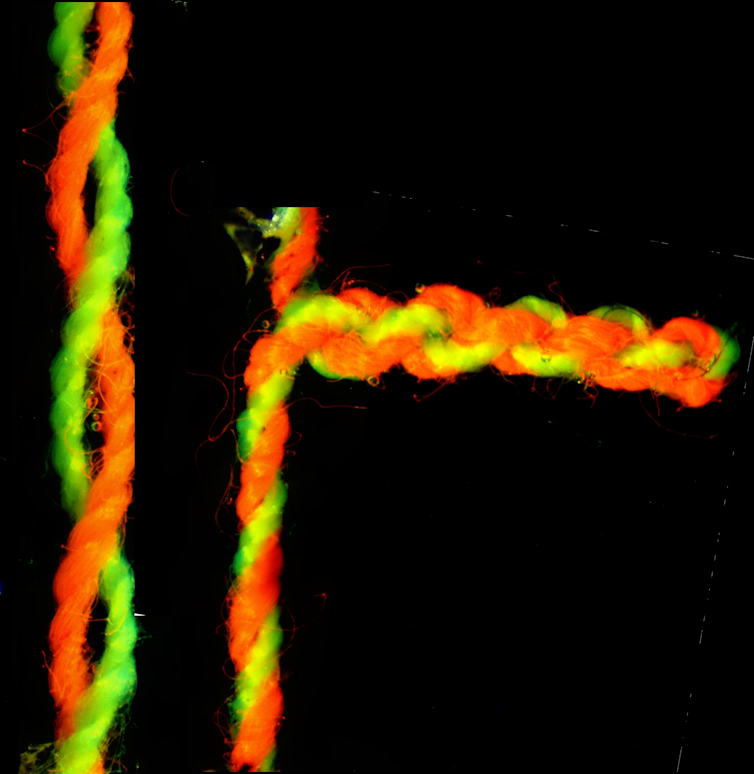

Our latest results show DNA-like supercoiling can be induced by swelling pre-twisted textile fibres. We made composite fibres from two polyester sewing threads, each coated in a hydrogel that swells when wet, and then twisted the pair together.

Swelling the hydrogel by immersing it in water caused the composite fibre to untwist. But if the fibre ends were clamped to stop untwisting, the fibre began to supercoil instead.

Geoff Spinks, Author provided

As a result, the fibre shrank by up to 90% of its original length. In the process of shrinking, it did mechanical work equivalent to 1 joule per gram of dry fibre.

For comparison, the muscle fibres of mammals like us only shrink by about 20% of their original length and produce a work output of 0.03 joules per gram. This means that a supercoiling fibre can deliver the same amount of work with roughly 30 times less mass than our own muscles.
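The comparison rests on simple ratios of the figures quoted above. This snippet assumes that work per gram of dry fibre can be compared directly with work per gram of muscle tissue, and it makes no attempt to account for speed, efficiency or fibre geometry.

```python
# Figures quoted in the text.
fibre_work_j_per_g = 1.0     # supercoiling fibre, per gram of dry fibre
muscle_work_j_per_g = 0.03   # mammalian muscle
fibre_contraction = 0.90     # fraction of original length
muscle_contraction = 0.20

# How much more work each gram of fibre delivers.
work_ratio = fibre_work_j_per_g / muscle_work_j_per_g

# Mass of each material needed to deliver the same amount of work (1 J here).
target_work_j = 1.0
fibre_mass_g = target_work_j / fibre_work_j_per_g
muscle_mass_g = target_work_j / muscle_work_j_per_g

print(f"work per gram ratio: {work_ratio:.1f}x")
print(f"mass for {target_work_j} J: fibre {fibre_mass_g:.1f} g, muscle {muscle_mass_g:.1f} g")
print(f"contraction ratio: {fibre_contraction / muscle_contraction:.1f}x")
```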

Why artificial muscles?

Artificial muscle materials are especially useful in applications where space is limited. For example, the latest motor-driven prosthetic hands are impressive, but they do not currently match the dexterity of a human hand. More actuators are needed to replicate the full range of motion, grip types and strength of a healthy human.

Electric motors become much less powerful as their size is reduced, which makes them less useful in prosthetics and other miniature machines. However, artificial muscles maintain a high work and power output at small scales.

To demonstrate their potential applications, we used our supercoiling muscle fibres to open and close miniature tweezers. Such tools may be part of the next generation of minimally invasive surgery or robotic surgical systems.

Many new types of artificial muscles have been introduced by researchers over the past decade. This is a very active area of research driven by the need for miniaturised mechanical devices. While great progress has been made, we still do not have an artificial muscle that completely matches the performance of natural muscle: large contractions, high speed, efficiency, long operating life, silent operation and safety in contact with humans.

The new supercoiling muscles take us one step closer to this goal by introducing a new mechanism for generating very large contractions. Currently our fibres operate slowly, but we see avenues for greatly increasing the speed of response, and this will be the focus of ongoing research.


Geoff Spinks does not work for, consult, own shares in or receive funding from any company or organisation that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.

Original post published in The Conversation.

Q&A: Vivienne Sze on crossing the hardware-software divide for efficient artificial intelligence

Not so long ago, watching a movie on a smartphone seemed impossible. Vivienne Sze was a graduate student at MIT at the time, in the mid-2000s, and she was drawn to the challenge of compressing video to keep image quality high without draining the phone’s battery. The solution she hit upon called for co-designing energy-efficient circuits with energy-efficient algorithms.

Sze would go on to be part of the team that won an Engineering Emmy Award for developing the video compression standards still in use today. Now an associate professor in MIT’s Department of Electrical Engineering and Computer Science, Sze has set her sights on a new milestone: bringing artificial intelligence applications to smartphones and tiny robots.

Her research focuses on designing more-efficient deep neural networks to process video, and more-efficient hardware to run those applications. She recently co-authored a book on the topic, and will teach a professional education course on how to design efficient deep learning systems in June.

On April 29, Sze will join Assistant Professor Song Han for an MIT Quest AI Roundtable on the co-design of efficient hardware and software moderated by Aude Oliva, director of MIT Quest Corporate and the MIT director of the MIT-IBM Watson AI Lab. Here, Sze discusses her recent work.

Q: Why do we need low-power AI now?

A: AI applications are moving to smartphones, tiny robots, and internet-connected appliances and other devices with limited power and processing capabilities. The challenge is that AI has high computing requirements. Analyzing sensor and camera data from a self-driving car can consume about 2,500 watts, but the computing budget of a smartphone is only about one watt. Closing this gap requires rethinking the entire stack, a trend that will define the next decade of AI.

Q: What’s the big deal about running AI on a smartphone?

A: It means that the data processing no longer has to take place in the “cloud,” on racks of warehouse servers. Untethering compute from the cloud allows us to broaden AI’s reach. It gives people in developing countries with limited communication infrastructure access to AI. It also speeds up response time by reducing the lag caused by communicating with distant servers. This is crucial for interactive applications like autonomous navigation and augmented reality, which need to respond instantaneously to changing conditions. Processing data on the device can also protect medical and other sensitive records. Data can be processed right where they’re collected.

Q: What makes modern AI so inefficient?

A: Deep neural networks, the cornerstone of modern AI, can require hundreds of millions to billions of calculations, orders of magnitude more than compressing video on a smartphone. But it’s not just number crunching that makes deep networks energy-intensive; it’s also the cost of shuffling data to and from memory to perform these computations. The farther the data have to travel, and the more data there are, the greater the bottleneck.
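To see where “hundreds of millions” of calculations come from, one can count the multiply-accumulate (MAC) operations in a single convolutional layer. The layer dimensions below are hypothetical, and the four-memory-accesses-per-MAC figure is a simplified model of an implementation with no data reuse at all.

```python
def conv_layer_macs(h_out, w_out, c_in, c_out, k):
    """Multiply-accumulate (MAC) operations for one standard 2-D conv layer.

    Each of the h_out * w_out * c_out output values is a sum over a
    c_in x k x k input window, i.e. c_in * k * k multiply-accumulates.
    """
    return h_out * w_out * c_out * c_in * k * k

# Hypothetical layer: 56x56 output, 64 input and 128 output channels, 3x3 kernels.
macs = conv_layer_macs(56, 56, 64, 128, 3)

# Simplified model with no reuse: each MAC reads a weight, an input activation
# and a partial sum, then writes back the updated partial sum.
naive_accesses = 4 * macs

print(f"MACs: {macs:,}")
print(f"memory accesses without reuse: {naive_accesses:,}")
```

A whole network stacks many such layers, so both the arithmetic and, especially, the memory traffic add up quickly.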

Q: How are you redesigning AI hardware for greater energy efficiency?

A: We focus on reducing data movement and the amount of data needed for computation. In some deep networks, the same data are used multiple times for different computations. We design specialized hardware to reuse data locally rather than send them off-chip. Storing reused data on-chip makes the process extremely energy-efficient.
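The benefit of reuse shows up clearly if you count how often a filter weight must be fetched from off-chip memory. This toy model tracks only weights for a hypothetical layer (batch size 1, standard convolution) and assumes the on-chip buffer is large enough. Real accelerators must balance reuse of weights, activations and partial sums within limited storage, which this sketch does not attempt.

```python
def offchip_weight_reads(h_out, w_out, c_in, c_out, k, reuse_on_chip):
    """Off-chip reads of filter weights for one conv layer (simplified model).

    Every weight is used once per output position (h_out * w_out times).
    Without reuse, each use fetches the weight from off-chip memory; with an
    on-chip buffer large enough to hold it, each weight is fetched only once.
    """
    num_weights = c_out * c_in * k * k
    uses_per_weight = h_out * w_out
    if reuse_on_chip:
        return num_weights
    return num_weights * uses_per_weight

# Hypothetical layer: 56x56 output, 64 input and 128 output channels, 3x3 kernels.
no_reuse = offchip_weight_reads(56, 56, 64, 128, 3, reuse_on_chip=False)
with_reuse = offchip_weight_reads(56, 56, 64, 128, 3, reuse_on_chip=True)

print(f"without reuse: {no_reuse:,} reads")
print(f"with on-chip reuse: {with_reuse:,} reads ({no_reuse // with_reuse}x fewer)")
```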

We also optimize the order in which data are processed to maximize their reuse. That’s the key property of the Eyeriss chip that was developed in collaboration with Joel Emer. In our follow-up work, Eyeriss v2, we made the chip flexible enough to reuse data across a wider range of deep networks. The Eyeriss chip also uses compression to reduce data movement, a common tactic among AI chips. The low-power Navion chip that was developed in collaboration with Sertac Karaman for mapping and navigation applications in robotics uses two to three orders of magnitude less energy than a CPU, in part by using optimizations that reduce the amount of data processed and stored on-chip.
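Compression pays off because many activations in a deep network are zero, for example after a ReLU. One simple scheme is run-length coding of zeros. The encoder below illustrates that general idea; its format (pairs of zero-run length and nonzero value) is an assumption for illustration, not the encoding used by Eyeriss or any other specific chip.

```python
def rle_zeros(values):
    """Encode a list as (zeros_before, value) pairs, skipping runs of zeros.

    A trailing run of zeros is stored as a final (run, 0) pair so the
    original length can be recovered on decoding.
    """
    encoded = []
    run = 0
    for v in values:
        if v == 0:
            run += 1
        else:
            encoded.append((run, v))
            run = 0
    if run:
        encoded.append((run, 0))
    return encoded

def rle_decode(encoded):
    """Invert rle_zeros: expand each zero run, then emit the nonzero value."""
    out = []
    for run, v in encoded:
        out.extend([0] * run)
        if v != 0:
            out.append(v)
    return out

# Hypothetical sparse activations: 16 values, only 2 of them nonzero.
acts = [0, 0, 0, 0, 7, 0, 0, 0, 0, 0, 0, 2, 0, 0, 0, 0]
packed = rle_zeros(acts)
print(packed)  # [(4, 7), (6, 2), (4, 0)]
```

Sixteen values shrink to three pairs, so fewer bits have to cross the expensive off-chip interface.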

Q: What changes have you made on the software side to boost efficiency?

A: The more that software aligns with hardware-related performance metrics like energy efficiency, the better we can do. Pruning, for example, is a popular way to remove weights from a deep network to reduce computation costs. But rather than remove weights based on their magnitude, our work on energy-aware pruning suggests you can remove the more energy-intensive weights to improve overall energy consumption. Another method we’ve developed, NetAdapt, automates the process of adapting and optimizing a deep network for a smartphone or another hardware platform. Our recent follow-up work, NetAdaptv2, accelerates the optimization process to further boost efficiency.
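For contrast, the sketch below shows conventional magnitude-based pruning next to a toy ordering that ranks layers by estimated energy, so pruning effort goes where energy is spent. The function names and per-layer energy numbers are hypothetical; the published energy-aware pruning method is considerably more involved than this illustration.

```python
def magnitude_prune(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * fraction)
    # Indices of the weights, sorted from smallest to largest |w|.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned

def energy_first_order(layer_energy):
    """Hypothetical helper: list layers from most to least energy-hungry.

    A toy stand-in for targeting energy-intensive weights: an energy-aware
    scheme would spend its pruning budget on the costliest layers first.
    """
    return sorted(layer_energy, key=layer_energy.get, reverse=True)

weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.2]
print(magnitude_prune(weights, 0.5))  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]

# Hypothetical per-layer energy estimates (arbitrary units).
energy = {"conv1": 12.0, "conv2": 30.5, "fc": 4.2}
print(energy_first_order(energy))  # ['conv2', 'conv1', 'fc']
```

Magnitude pruning alone may remove many weights from a layer that costs little energy; ordering by energy is one way to make the same number of removed weights count for more.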

Q: What low-power AI applications are you working on?

A: I’m exploring autonomous navigation for low-energy robots with Sertac Karaman. I’m also working with Thomas Heldt to develop a low-cost and potentially more effective way of diagnosing and monitoring people with neurodegenerative disorders like Alzheimer’s and Parkinson’s by tracking their eye movements. Eye-movement properties like reaction time could potentially serve as biomarkers for brain function. In the past, eye-movement tracking took place in clinics because of the expensive equipment required. We’ve shown that an ordinary smartphone camera can take measurements from a patient’s home, making data collection easier and less costly. This could help to monitor disease progression and track improvements in clinical drug trials.
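As an illustration of what “reaction time” means here, the sketch below measures saccade latency, the delay between a visual stimulus and the start of an eye movement, from a series of gaze-angle samples. It uses a simple velocity-threshold detector. The 50 Hz sampling, the 30 degrees-per-second threshold and the data are all assumptions for illustration; the pipeline in the work described here, which works from smartphone camera footage, is not shown.

```python
def saccade_latency_ms(times_ms, positions_deg, stimulus_ms, velocity_threshold_deg_s=30.0):
    """Time from stimulus onset to the first sample whose eye velocity
    exceeds a threshold (a simple velocity-threshold saccade detector).

    Returns None if no saccade is detected after the stimulus.
    """
    for i in range(1, len(times_ms)):
        if times_ms[i] <= stimulus_ms:
            continue  # ignore movements before the stimulus appears
        dt_s = (times_ms[i] - times_ms[i - 1]) / 1000.0
        velocity = abs(positions_deg[i] - positions_deg[i - 1]) / dt_s
        if velocity > velocity_threshold_deg_s:
            return times_ms[i] - stimulus_ms
    return None

# Hypothetical recording: one sample every 20 ms, small jitter, then a saccade.
times = list(range(0, 320, 20))
gaze = [0.0, 0.1, 0.0, 0.1, 0.0, 0.1, 0.0, 0.1,
        0.0, 0.1, 0.0, 0.1, 0.0, 4.0, 8.0, 10.0]
print(saccade_latency_ms(times, gaze, stimulus_ms=100))  # 160
```

The jitter (about 5 degrees per second) stays below the threshold, so only the genuine eye movement is detected.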

Q: Where is low-power AI headed next?

A: Reducing AI’s energy requirements will bring AI to a wider range of embedded devices, extending its reach into tiny robots, smart homes, and medical devices. A key challenge is that efficiency often requires a tradeoff in performance. For wide adoption, it will be important to dig deeper into these different applications to establish the right balance between efficiency and accuracy.