Learning to compute through art

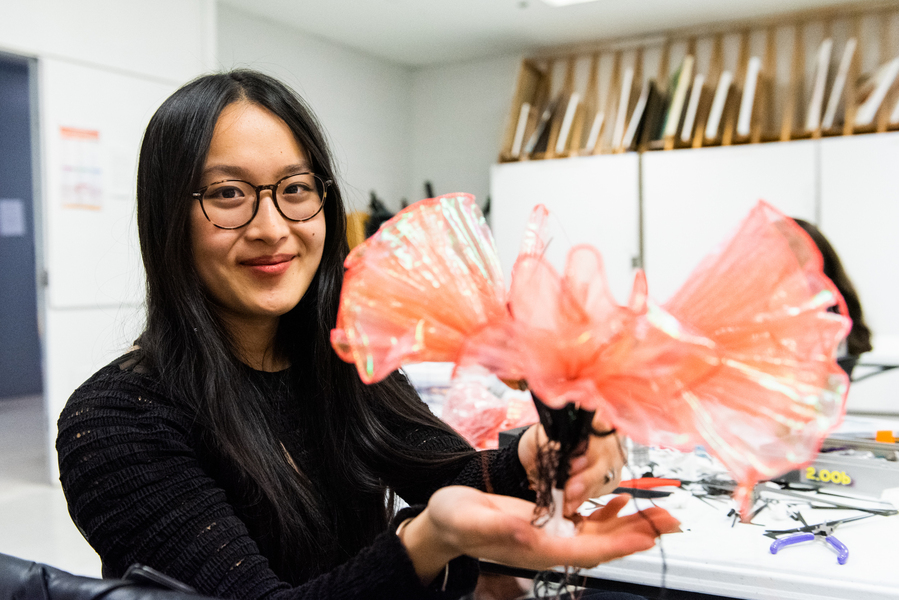

Shua Cho works on her artwork in “Introduction to Physical Computing for Artists” at the MIT Student Art Association. Photo: Sarah Bastille

By Ken Shulman | Arts at MIT

Amy Huynh, a first-year student in the MIT Technology and Policy Program, confesses that motors have always freaked her out. “I just didn’t respond to the way electrical engineering and coding is usually taught,” she says.

Huynh and her fellow students found a different way to master coding and circuits during the Independent Activities Period course Introduction to Physical Computing for Artists — a class created by Student Art Association (SAA) instructor Timothy Lee and offered for the first time last January. During the four-week course, students learned to use circuits, wiring, motors, sensors, and displays by developing their own kinetic artworks.

“It’s a different approach to learning about art, and about circuits,” says Lee, who joined the SAA instructional staff last June after completing his MFA at Goldsmiths, University of London. “Some classes can push the technology too quickly. Here we try to take away the obstacles to learning, to create a collaborative environment, and to frame the technology in the broader concept of making an artwork. For many students, it’s a very effective way to learn.”

Lee graduated from Wesleyan University with three concurrent majors in neuroscience, biology, and studio art. “I didn’t have a lot of free time,” says Lee, who originally intended to attend medical school before deciding to follow his passion for making art. “But I benefited from studying both science and art. Just as I almost always benefited from learning from my peers. I draw on both of those experiences in designing and teaching this class.”

On this January evening, the third of four scheduled classes, Lee leads his students through an exercise to create an MVP — a minimum viable product of their art project. The MVP, he explains, serves as an artist’s proof of concept. “This is the smallest single unit that can demonstrate that your project is doable,” he says. “That you have the bare-minimum functioning hardware and software that shows your project can be scalable to your vision. Our work here is different from pure robotics or pure electronics. Here, the technology and the coding don’t need to be perfect. They need to support your aesthetic and conceptual goals. And here, these things can also be fun.”

Lee distributes electronic items to the students according to their specific needs: wires, soldering irons, resistors, servo motors, and Arduino components. The students already acquired a working knowledge of coding and the Arduino language in the first two class sessions. Sophomore Shua Cho is designing an evening gown bedecked with flowers that will open and close continuously. Her MVP is a cluster of three blossoms mounted on a single post that, when raised and lowered, opens and closes the sewn blossoms. She asks Lee for help attaching a servo motor to the post; a servo is an electric motor whose shaft can be driven to a commanded angle, typically anywhere from 0 to 180 degrees. Two other students, working on similar problems, immediately pull their chairs beside Cho and Lee to join the discussion.

Shua Cho is designing an evening gown bedecked with flowers that will open and close continuously. Her minimum viable product is a cluster of three blossoms, mounted on a single post that, when raised and lowered, opens and closes the sewn blossoms. Photo: Sarah Bastille

The instructor suggests they observe the dynamics of an old-fashioned train locomotive wheel. One student calls up the image on their laptop. Then, as a group, they reach a solution for Cho: an assembly of wire and glue that attaches the servo motor to the central post, opening and closing the blossoms. It’s improvised, even inelegant. But it works, and it proves that the project for the blossom-covered kinetic dress is viable.
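Lee’s locomotive-wheel hint points at the classic crank-and-connecting-rod linkage, which turns rotation into back-and-forth linear motion. The sketch below models that geometry in C++, the language Arduino sketches are written in. It assumes the glued wire acts as a rigid connecting rod between a crank arm on the servo horn and a post that can only slide vertically. The article gives no dimensions, so `SliderCrank`, `post_height_mm`, `sweep_cycle_deg` and the numbers used with them are hypothetical. The code runs as plain C++, not on the board.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical linkage geometry (not from the article): a crank arm on the
// servo horn plus a stiff wire "connecting rod" driving a vertical post.
struct SliderCrank {
    double crank_radius_mm;  // r: distance from servo axis to wire attachment
    double rod_length_mm;    // l: wire link length, must exceed r
};

// Height of the post's attachment point above the servo axis for a given
// servo angle in degrees; 0 degrees means the crank points straight up.
// Slider-crank relation: x = r*cos(theta) + sqrt(l^2 - (r*sin(theta))^2).
double post_height_mm(const SliderCrank& g, double servo_deg) {
    assert(g.rod_length_mm > g.crank_radius_mm);
    const double pi = std::acos(-1.0);
    const double theta = servo_deg * pi / 180.0;
    const double r = g.crank_radius_mm;
    const double l = g.rod_length_mm;
    const double s = r * std::sin(theta);
    return r * std::cos(theta) + std::sqrt(l * l - s * s);
}

// Angles for one open/close cycle: 0 -> 180 -> back to 0 in equal steps,
// the kind of sweep an Arduino loop would send to the servo.
std::vector<double> sweep_cycle_deg(int step_deg) {
    assert(step_deg > 0 && 180 % step_deg == 0);
    std::vector<double> angles;
    for (int a = 0; a <= 180; a += step_deg) angles.push_back(a);
    for (int a = 180 - step_deg; a >= 0; a -= step_deg) angles.push_back(a);
    return angles;
}
```

This geometry has a convenient property. The post moves from r + l at 0 degrees to l − r at 180 degrees, so an ordinary 180-degree servo sweep covers the linkage’s full 2r stroke. The crank radius alone therefore sets how far the post, and the blossoms, travel. On the board, each angle could be passed to the Arduino `Servo` library’s `write()` call.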

“This is one of the things I love about MIT,” says aeronautical and astronautical engineering senior Hannah Munguia. Her project is a pair of hands that, when triggered by a motion sensor, will applaud when anyone walks by. “People raise their hand when they don’t understand something. And other people come to help. The students here trust each other, and are willing to collaborate.”

Student Hannah Munguia (left), instructor Timothy Lee (center), and student Bryan Medina work on artwork in “Introduction to Physical Computing for Artists” at the MIT Student Art Association. Photo: Sarah Bastille

Cho, who enjoys exploring the intersection between fashion and engineering, discovered Lee’s work on Instagram long before she decided to enroll at MIT. “And now I have the chance to study with him,” says Cho, who works at Infinite — MIT’s fashion magazine — and takes classes in both mechanical engineering and design. “I find that having a creative project like this one, with a goal in mind, is the best way for me to learn. I feel like it reinforces my neural pathways, and I know it helps me retain information. I find myself walking down the street or in my room, thinking about possible solutions for this gown. It never feels like work.”

Lee, who studied computational art during his master’s program, already considers the course a successful experiment. He’d like to offer a full-length version of “Introduction to Physical Computing for Artists” during the school year. With 10 sessions instead of four, he says, students would be able to complete their projects instead of stopping at an MVP.

“Prior to coming to MIT, I’d only taught at art institutions,” says Lee. “Here, I needed to revise my focus, to redefine the value of art education for students who most likely were not going to pursue art as a profession. For me, the new definition was selecting a group of skills that are necessary in making this type of art, but that can also be applied to other areas and fields. Skills like sensitivity to materials, tactile dexterity, and abstract thinking. Why not learn these skills in an atmosphere that is experimental, visually based, sometimes a little uncomfortable. And why not learn that you don’t need to be an artist to make art. You just have to be excited about it.”

Robot Talk Episode 40 – Edward Timpson

Claire chatted to Edward Timpson from QinetiQ all about robots in the military, uncrewed vehicles, and cyber security.

Ed Timpson joined QinetiQ in 2020 after 11 years serving in the Royal Navy as a Weapons Engineering Officer (Submarines) across a number of ranks. Joining QinetiQ Target Systems as a Project Engineer and also managing the Hardware team, he had success in developing new capabilities for the Banshee family of UAS. He then moved into future systems within QinetiQ as a Principal Systems Engineer specialising in complex trials and experimentation of uncrewed vehicles. He now heads up the Robotics and Autonomous Systems capability within QinetiQ UK.

A new bioinspired earthworm robot for future underground explorations

Author: D. Farina. Credits: Istituto Italiano di Tecnologia – © IIT, all rights reserved

Researchers at Istituto Italiano di Tecnologia (IIT, Italian Institute of Technology) in Genova have developed a new soft robot inspired by the biology of earthworms. It crawls using soft actuators that elongate when air is pumped into them and compress when air is drawn out. The prototype has been described in the international journal Scientific Reports of the Nature Portfolio. It is a starting point for developing devices for underground exploration, as well as for search and rescue operations in confined spaces and the exploration of other planets.

Nature offers many examples of animals, such as snakes, earthworms, snails, and caterpillars, that use both the flexibility of their bodies and the ability to generate travelling waves along their length to move through and explore different environments. Some of their movements also resemble those of plant roots.

Taking inspiration from nature and, at the same time, revealing new biological phenomena while developing new technologies is the main goal of the BioInspired Soft robotics lab coordinated by Barbara Mazzolai, and this earthworm-like robot is the latest invention coming from her group.

Building the earthworm-like robot required a thorough understanding and application of earthworm locomotion mechanics. Earthworms use alternating contractions of muscle layers to propel themselves both below and above the soil surface by generating retrograde peristaltic waves. Each segment of their body (metamere) holds a fixed quantity of fluid that controls the internal pressure used to exert forces. This lets segments perform independent, localized and variable movement patterns.

IIT researchers have studied the morphology of earthworms and have found a way to mimic their muscle movements, their constant volume coelomic chambers and the function of their bristle-like hairs (setae) by creating soft robotic solutions.

The team developed a peristaltic soft actuator (PSA) that implements the antagonistic muscle movements of earthworms; from a neutral position it elongates when air is pumped into it and compresses when air is extracted from it. The entire body of the robotic earthworm is made of five PSA modules in series, connected with interlinks. The current prototype is 45 cm long and weighs 605 grams.
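The article does not describe the robot’s actual actuation sequence. The following is a hypothetical schedule showing what a retrograde peristaltic wave over five PSA modules in series could look like. It assumes one module at a time is compressed (shorter and wider, the anchoring phase) while the module just anterior to it is pressurized to elongate. The pattern shifts one module toward the tail each step while the body moves forward. The function name and the character encoding are illustrative choices, not IIT’s controller.

```cpp
#include <cassert>
#include <string>

// Hypothetical command string for a chain of peristaltic soft actuator (PSA)
// modules, index 0 = head (anterior), last index = tail (posterior).
//   'E' = positive pressure: module elongates (longer, thinner)
//   'C' = negative pressure: module compresses (shorter, wider; anchors)
//   '.' = neutral
std::string retrograde_wave_step(int n_modules, int t) {
    assert(n_modules >= 2 && t >= 0);
    std::string cmd(static_cast<std::string::size_type>(n_modules), '.');
    for (int i = 0; i < n_modules; ++i) {
        // Phase of module i at step t. Because phase depends on (t - i), the
        // pattern moves one module toward the tail per step: a retrograde wave.
        int phase = ((t - i) % n_modules + n_modules) % n_modules;
        if (phase == 0) {
            cmd[i] = 'C';  // anchoring module
        } else if (phase == 1) {
            cmd[i] = 'E';  // module just anterior to the anchor stretches forward
        }
    }
    return cmd;
}
```

With five modules, steps 0, 1, 2 and 4 produce `C...E`, `EC...`, `.EC..` and `...EC`, and the pattern repeats every five steps. In a real controller each character would map to a valve or pump command, and the step duration would be set by how quickly each module can inflate and deflate.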

Each actuator has an elastomeric skin that encapsulates a known amount of fluid, thus mimicking the constant volume of internal coelomic fluid in earthworms. The earthworm segment becomes shorter longitudinally and wider circumferentially and exerts radial forces as the longitudinal muscles of an individual constant volume chamber contract. Antagonistically, the segment becomes longer along the anterior–posterior axis and thinner circumferentially with the contraction of circumferential muscles, resulting in penetration forces along the axis.
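The trade-off between length and width follows directly from the constant volume. As an idealization not given in the article, treat a segment as a cylinder of radius r and length L holding a fixed volume V:

```latex
V = \pi r^{2} L = \text{const}
\;\Longrightarrow\;
\frac{r_1}{r_0} = \sqrt{\frac{L_0}{L_1}},
\qquad
2\,\frac{dr}{r} + \frac{dL}{L} = 0
\;\Longrightarrow\;
\frac{dr}{r} = -\frac{1}{2}\,\frac{dL}{L}.
```

For small changes, shortening a segment by 10% therefore widens it by roughly 5%; the exact value is about 5.4%. The segment presses outward as it shortens and thins as it lengthens, which is the radial and axial force pairing described above.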

Each actuator reaches a maximum elongation of 10.97 mm at 1 bar of positive pressure and a maximum compression of 11.13 mm at 0.5 bar of negative pressure. It is unique in its ability to generate both longitudinal and radial forces within a single actuator module.

To propel the robot on a planar surface, the team attached small passive friction pads, inspired by earthworms’ setae, to its ventral surface. With the pads, the robot’s locomotion improved, reaching a speed of 1.35 mm/s.
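To put these figures in proportion, here is a back-of-the-envelope calculation using only the numbers quoted in the article. The constant and function names are ours. Adding elongation and compression assumes both are measured from the same neutral length.

```cpp
#include <cassert>

// Figures reported in the article for the IIT prototype.
constexpr double kMaxElongationMm  = 10.97;  // at +1 bar
constexpr double kMaxCompressionMm = 11.13;  // at -0.5 bar
constexpr double kBodyLengthMm     = 450.0;  // 45 cm, five PSA modules
constexpr double kSpeedMmPerS      = 1.35;   // planar surface, with friction pads

// Full travel of one module between its compressed and elongated extremes
// (assumes both extremes are measured from a common neutral length).
constexpr double module_stroke_mm() { return kMaxElongationMm + kMaxCompressionMm; }

// Time in seconds to cover a distance at the reported average speed.
double seconds_to_travel(double distance_mm) {
    assert(distance_mm >= 0.0);
    return distance_mm / kSpeedMmPerS;
}
```

Each module can swing through about 22.1 mm between its two extremes. At 1.35 mm/s, the robot needs about 333 s, a little over five and a half minutes, to crawl its own 45 cm body length.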

This study not only proposes a new method for developing a peristaltic earthworm-like soft robot but also provides a deeper understanding of locomotion from a bioinspired perspective in different environments. The potential applications for this technology are vast, including underground exploration, excavation, search and rescue operations in subterranean environments and the exploration of other planets. This bioinspired burrowing soft robot is a significant step forward in the field of soft robotics and opens the door for further advancements in the future.