Study suggests framework to ensure that walking bots meet safety standards

A framework that could improve the social intelligence of home assistants

Powering the Future: NREL Research Finds Opportunities for Breakthrough Battery Designs

First step for smart port facilities: Maintain fenders with drone and AI combination

A fairy-like robot flies by the power of wind and light

AI-Powered Kindred INDUCT Automates Induction of One Million Items with 95% Accuracy

Sensing with purpose

Fadel Adib, associate professor in the Department of Electrical Engineering and Computer Science and the Media Lab, seeks to develop wireless technology that can sense the physical world in ways that were not possible before. Image: Adam Glanzman

By Adam Zewe | MIT News Office

Fadel Adib never expected that science would get him into the White House, but in August 2015 the MIT graduate student found himself demonstrating his research to the president of the United States.

Adib, fellow grad student Zachary Kabelac, and their advisor, Dina Katabi, showcased a wireless device that uses Wi-Fi signals to track an individual’s movements.

As President Barack Obama looked on, Adib walked back and forth across the floor of the Oval Office, collapsed onto the carpet to demonstrate the device’s ability to monitor falls, and then sat still so Katabi could explain to the president how the device was measuring his breathing and heart rate.

“Zach started laughing because he could see that my heart rate was 110 as I was demoing the device to the president. I was stressed about it, but it was so exciting. I had poured a lot of blood, sweat, and tears into that project,” Adib recalls.

For Adib, the White House demo was an unexpected — and unforgettable — culmination of a research project he had launched four years earlier when he began his graduate training at MIT. Now, as a newly tenured associate professor in the Department of Electrical Engineering and Computer Science and the Media Lab, he keeps building off that work. Adib, the Doherty Chair of Ocean Utilization, seeks to develop wireless technology that can sense the physical world in ways that were not possible before.

In his Signal Kinetics group, Adib and his students apply knowledge and creativity to global problems like climate change and access to health care. They are using wireless devices for contactless physiological sensing, such as measuring someone’s stress level using Wi-Fi signals. The team is also developing battery-free underwater cameras that could explore uncharted regions of the oceans, tracking pollution and the effects of climate change. And they are combining computer vision and radio frequency identification (RFID) technology to build robots that find hidden items, to streamline factory and warehouse operations and, ultimately, alleviate supply chain bottlenecks.

While these areas may seem quite different, each time they launch a new project, the researchers uncover common threads that tie the disciplines together, Adib says.

“When we operate in a new field, we get to learn. Every time you are at a new boundary, in a sense you are also like a kid, trying to understand these different languages, bring them together, and invent something,” he says.

A science-minded child

A love of learning has driven Adib since he was a young child growing up in Tripoli on the coast of Lebanon. He had been interested in math and science for as long as he could remember, and had boundless energy and insatiable curiosity as a child.

“When my mother wanted me to slow down, she would give me a puzzle to solve,” he recalls.

By the time Adib started college at the American University of Beirut, he knew he wanted to study computer engineering and had his sights set on MIT for graduate school.

Seeking to kick-start his future studies, Adib reached out to several MIT faculty members to ask about summer internships. He received a response from the first person he contacted. Katabi, the Thuan and Nicole Pham Professor in the Department of Electrical Engineering and Computer Science (EECS), and a principal investigator in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and the MIT Jameel Clinic, interviewed him and accepted him for a position. He immersed himself in the lab work and, as the end of summer approached, Katabi encouraged him to apply for grad school at MIT and join her lab.

“To me, that was a shock because I felt this imposter syndrome. I thought I was moving like a turtle with my research, but I did not realize that with research itself, because you are at the boundary of human knowledge, you are expected to progress iteratively and slowly,” he says.

As an MIT grad student, he began contributing to a number of projects. But his passion for invention pushed him to embark into unexplored territory. Adib had an idea: Could he use Wi-Fi to see through walls?

“It was a crazy idea at the time, but my advisor let me work on it, even though it was not something the group had been working on at all before. We both thought it was an exciting idea,” he says.

As Wi-Fi signals travel in space, a small part of the signal passes through walls — the same way light passes through windows — and is then reflected by whatever is on the other side. Adib wanted to use these signals to “see” what people on the other side of a wall were doing.

Discovering new applications

There were a lot of ups and downs (“I’d say many more downs than ups at the beginning”), but Adib made progress. First, he and his teammates were able to detect people on the other side of a wall, then they could determine their exact location. Almost by accident, he discovered that the device could be used to monitor someone’s breathing.

“I remember we were nearing a deadline and my friend Zach and I were working on the device, using it to track people on the other side of the wall. I asked him to hold still, and then I started to see him appearing and disappearing over and over again. I thought, could this be his breathing?” Adib says.

Eventually, they enabled their Wi-Fi device to monitor heart rate and other vital signs. The technology was spun out into a startup, which presented Adib with a conundrum once he finished his PhD — whether to join the startup or pursue a career in academia.

He decided to become a professor because he wanted to dig deeper into the realm of invention. But after living through the winter of 2014-2015, when nearly 109 inches of snow fell on Boston (a record), Adib was ready for a change of scenery and a warmer climate. He applied to universities all over the United States, and while he had some tempting offers, Adib ultimately realized he didn’t want to leave MIT. He joined the MIT faculty as an assistant professor in 2016 and was named associate professor in 2020.

“When I first came here as an intern, even though I was thousands of miles from Lebanon, I felt at home. And the reason for that was the people. This geekiness — this embrace of intellect — that is something I find to be beautiful about MIT,” he says.

He’s thrilled to work with brilliant people who are also passionate about problem-solving. The members of his research group are diverse, and they each bring unique perspectives to the table, which Adib says is vital to encourage the intellectual back-and-forth that drives their work.

Diving into a new project

For Adib, research is exploration. Take his work on oceans, for instance. He wanted to make an impact on climate change, and after exploring the problem, he and his students decided to build a battery-free underwater camera.

Adib learned that the ocean, which covers 70 percent of the planet, plays the single largest role in the Earth’s climate system. Yet more than 95 percent of it remains unexplored. That seemed like a problem the Signal Kinetics group could help solve, he says.

But diving into this research area was no easy task. Adib studies Wi-Fi systems, but Wi-Fi does not work underwater. And it is difficult to recharge a battery once it is deployed in the ocean, making it hard to build an autonomous underwater robot that can do large-scale sensing.

So, the team borrowed from other disciplines, building an underwater camera that uses acoustics to power its equipment and capture and transmit images.

“We had to use piezoelectric materials, which come from materials science, to develop transducers, which come from oceanography, and then on top of that we had to marry these things with technology from RF known as backscatter,” he says. “The biggest challenge becomes getting these things to gel together. How do you decode these languages across fields?”

It’s a challenge that continues to motivate Adib as he and his students tackle problems that are too big for one discipline.

He’s excited by the possibility of using his undersea wireless imaging technology to explore distant planets. These same tools could also enhance aquaculture, which could help eradicate food insecurity, or support other emerging industries.

To Adib, the possibilities seem endless.

“With each project, we discover something new, and that opens up a whole new world to explore. The biggest driver of our work in the future will be what we think is impossible, but that we could make possible,” he says.

Robot Talk Episode 34 – Interview with Sabine Hauert

Claire chatted to Dr Sabine Hauert from the University of Bristol all about swarm robotics, nanorobots, and environmental monitoring.

Sabine Hauert is Associate Professor of Swarm Engineering at the University of Bristol. She leads a team of 20 researchers working on making swarms for people, and across scales, from nanorobots for cancer treatment, to larger robots for environmental monitoring, or logistics. Previously she worked at MIT and EPFL. She is President and Executive Trustee of non-profits robohub.org and aihub.org, which connect the robotics and AI communities to the public.

Year in Review: 2022’s Top New and Emerging Technologies

Special drone collects environmental DNA from trees

By Peter Rüegg

Ecologists are increasingly using traces of genetic material left behind in the environment by living organisms, called environmental DNA (eDNA), to catalogue and monitor biodiversity. Based on these DNA traces, researchers can determine which species are present in a certain area.

Obtaining samples from water or soil is easy, but other habitats – such as the forest canopy – are difficult for researchers to access. As a result, many species remain untracked in poorly explored areas.

Researchers at ETH Zurich and the Swiss Federal Institute for Forest, Snow and Landscape Research WSL have partnered with the company SPYGEN to develop a special drone that can autonomously collect samples on tree branches.

(Video: ETH Zürich)

How the drone collects material

The drone is equipped with adhesive strips. When the aircraft lands on a branch, material from the branch sticks to these strips. Researchers can then extract DNA in the lab, analyse it and assign it to genetic matches of the various organisms using database comparisons.

But not all branches are the same: they vary in terms of their thickness and elasticity. Branches also bend and rebound when a drone lands on them. Programming the aircraft in such a way that it can still approach a branch autonomously and remain stable on it long enough to take samples was a major challenge for the roboticists.

“Landing on branches requires complex control,” explains Stefano Mintchev, Professor of Environmental Robotics at ETH Zurich and WSL. Initially, the drone does not know how flexible a branch is, so the researchers fitted it with a force sensing cage. This allows the drone to measure this factor at the scene and incorporate it into its flight manoeuvre.
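The article does not say how the drone turns its force readings into a flexibility estimate. As a minimal sketch of one possible approach, assuming the branch behaves like a linear spring (Hooke's law, F = k · x), the stiffness k could be fitted from a few contact measurements. The function name, units and sample readings below are hypothetical and are not taken from the study.

```python
def estimate_stiffness(displacements_m, forces_n):
    """Least-squares fit of F = k * x through the origin.

    Assumption: the branch deflects linearly with force. Real branches
    may not, and the study's actual method is not described in the article.
    """
    numerator = sum(f * x for x, f in zip(displacements_m, forces_n))
    denominator = sum(x * x for x in displacements_m)
    if denominator == 0:
        raise ValueError("need at least one non-zero displacement")
    return numerator / denominator  # stiffness in newtons per metre


# Hypothetical readings: branch deflection (m) and contact force (N).
deflections = [0.01, 0.02, 0.03]
forces = [0.5, 1.0, 1.5]

stiffness = estimate_stiffness(deflections, forces)
print(f"Estimated branch stiffness: {stiffness:.1f} N/m")
```

A lower value would indicate a more flexible branch, which a controller could in principle use to approach more gently.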

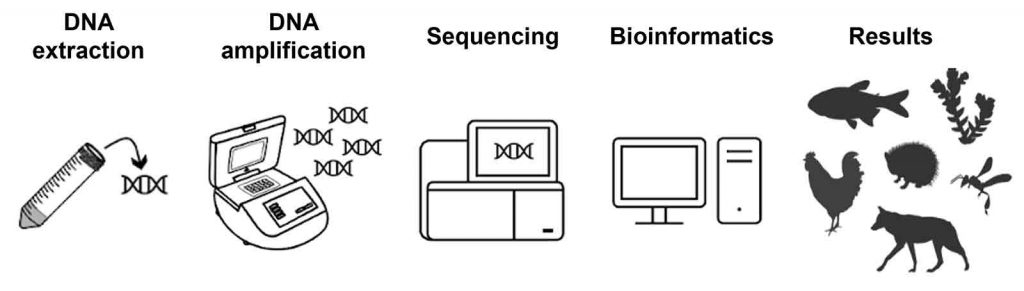

Scheme: DNA is extracted from the collected branch material, amplified, sequenced and the sequences found are compared with databases. This allows the species to be identified. (Graphic: Stefano Mintchev / ETH Zürich)

Preparing rainforest operations at Zoo Zurich

Researchers have tested their new device on seven tree species. In the samples, they found DNA from 21 distinct groups of organisms, or taxa, including birds, mammals and insects. “This is encouraging, because it shows that the collection technique works,” says Mintchev, who co-authored the study that has appeared in the journal Science Robotics.

The researchers now want to improve their drone further to get it ready for a competition in which the aim is to detect as many different species as possible across 100 hectares of rainforest in Singapore in 24 hours.

To test the drone’s efficiency under conditions similar to those it will experience at the competition, Mintchev and his team are currently working at the Zoo Zurich’s Masoala Rainforest. “Here we have the advantage of knowing which species are present, which will help us to better assess how thorough we are in capturing all eDNA traces with this technique or if we’re missing something,” Mintchev says.

For this event, however, the collection device must become more efficient and mobilize faster. In the tests in Switzerland, the drone collected material from seven trees in three days; in Singapore, it must be able to fly to and collect samples from ten times as many trees in just one day.
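A back-of-the-envelope calculation shows how large that jump is. The sketch below uses only the figures quoted above (seven trees in three days, and ten times as many trees in one day); the variable names are illustrative.

```python
# Figures from the Swiss tests and the Singapore target quoted in the article.
trees_tested = 7
days_tested = 3
target_trees = 10 * trees_tested  # "ten times as many trees" = 70
target_days = 1

test_rate = trees_tested / days_tested    # trees per day in the tests
target_rate = target_trees / target_days  # trees per day needed in Singapore

# Ratio of the two rates, written so the division stays exact.
speedup = (target_trees * days_tested) / (trees_tested * target_days)

print(f"Test rate:   {test_rate:.2f} trees/day")
print(f"Target rate: {target_rate:.0f} trees/day")
print(f"Required speed-up: about {speedup:.0f}x")
```

In other words, the drone would need to sample roughly 30 times faster than it did in the Swiss tests.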

Collecting samples in a natural rainforest, however, presents the researchers with even tougher challenges. Frequent rain washes eDNA off surfaces, while wind and clouds impede drone operation. “We are therefore very curious to see whether our sampling method will also prove itself under extreme conditions in the tropics,” Mintchev says.