Automated optical inspection of FAST’s reflector surface using drones and computer vision

A precision arm for miniature robots

Why Manufacturing’s Technological Evolution Must Never End

Robot Talk Episode 32 – Interview with Mollie Claypool

Claire chatted to Mollie Claypool from Automated Architecture about robot house-building, zero-carbon architecture, and community participation.

Mollie Claypool is CEO of AUAR Ltd, a tech company revolutionising house building using automation. Mollie is a leading architecture theorist focused on issues of social justice highlighted by increasing automation in architecture and design production. She is also Associate Professor in Architecture at The Bartlett School of Architecture, UCL.

A Guide to Selecting the Right LiDAR Sensors

Get ready to robot! Robot drawing and story competitions for primary schoolchildren now officially open for entries

The EPSRC UK Robotics and Autonomous Systems (UK-RAS) Network is pleased to announce the official launch of its 2023 competitions, inviting the UK’s primary schoolchildren to share their creative robot designs and imaginative stories with a panel of experts, for a chance to win some unique prizes. These annual competitions, which have proved hugely popular with budding authors and illustrators nationwide, are now returning for the fourth year.

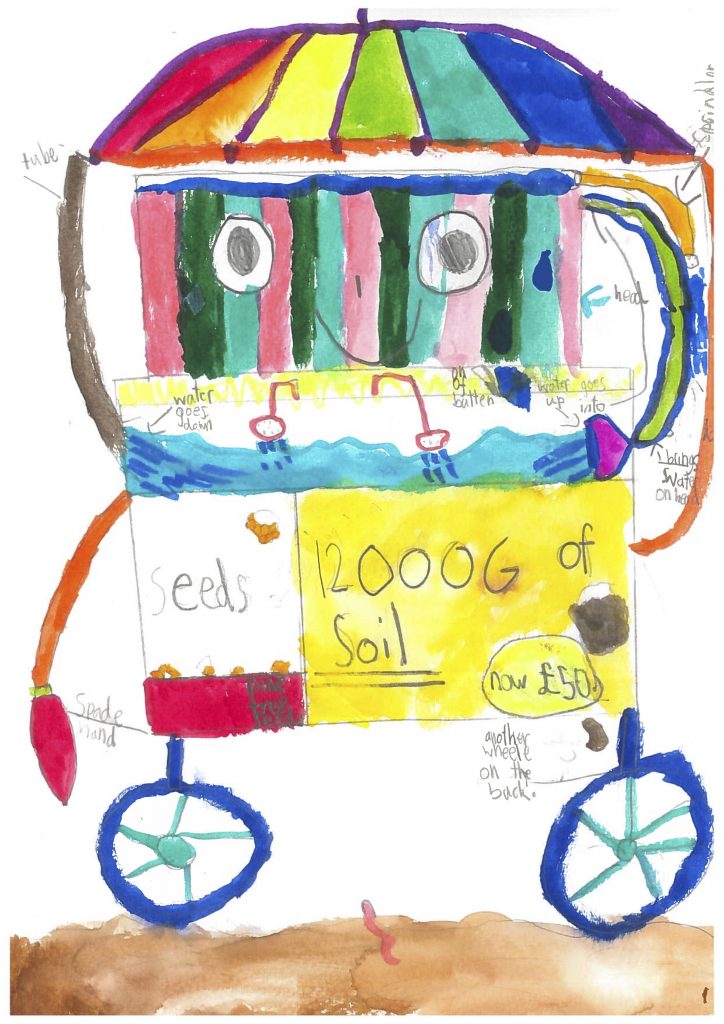

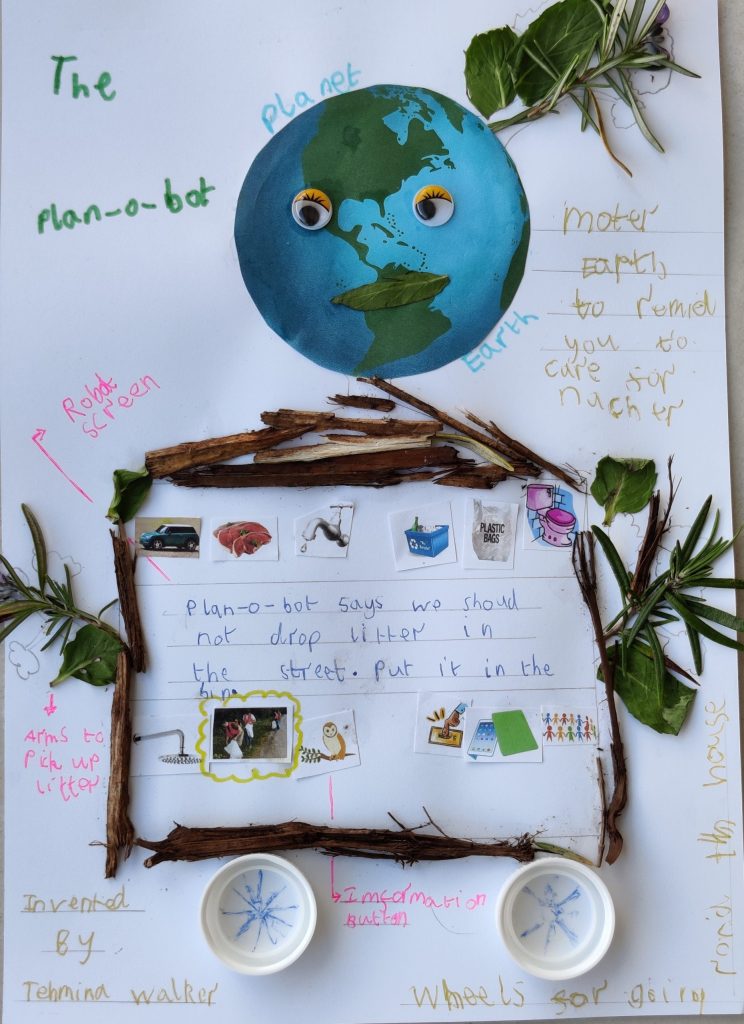

The “Draw A Robot” competition challenges children in Key Stage 1 (aged 5-7 years old) to design a robot that they’d like to see in the future. Children can use whichever drawing materials they prefer — paper, pens, pencils, paints, crayons, or even natural materials — to create their ideal robot design, and the robot can be designed to perform any task or job. Competition participants will be able to explain their robot’s functions by labelling gadgets and features on the drawing and writing a short design spec.

For the “Once Upon A Robot” writing competition, Key Stage 2 children (aged 7-11 years old) are invited to write an imaginative short story featuring any kind of robot – or robots – their imagination can conjure! Children will have up to 800 words to tell their creative robot tales, and they can choose any literary genre they like. It could be a spine-tingling horror, an action-packed adventure, or even a light-hearted comedy.

ZOOG by Matilde Facchini, age 7 (Draw a Robot 2022 Winner)

Plan-o-bot by Tehmina Walker, age 6 (Draw a Robot 2021 Winner)

The two competitions will be judged by robotics experts from the organising EPSRC UK-RAS Network, plus two very special invited judges. The writing competition will be judged this year by award-winning author Sharna Jackson, whose inspiring and mystifying books include High-Rise Mystery and The Good Turn. The drawing competition will be judged by internationally acclaimed Anglo-American author, illustrator and artist Ted Dewan, creator of the Emmy Award-winning animated television series Bing.

This year’s exclusive prize packages include:

Draw A Robot Competition winner

- Thames & Kosmos Coding and Robotics kit – contributed by competition partner the University of Sheffield Advanced Manufacturing Research Centre (AMRC)

- A tour of the AMRC’s Factory 2050 in Sheffield, the UK’s first state-of-the-art factory dedicated to conducting collaborative research into reconfigurable digitally assisted assembly, component manufacturing and machining technologies

- A copy of the book “The Sorcerer’s Apprentice”, signed by competition judge Ted Dewan

Draw A Robot Competition runner-up

- 4M Green Science Solar Hybrid Power Aqua Robot – contributed by competition partner the UKRI Trustworthy Autonomous Systems (TAS) Hub

- A copy of the book “Top Secret”, signed by competition judge Ted Dewan

Once Upon A Robot Competition winner

- Lego Mindstorms Robot Inventor kit – contributed by competition partner Birmingham Extreme Robotics Lab

- A tour of the Extreme Robotics Lab in Birmingham and a robotics masterclass from RobotCoders for the winner and a friend

- A printed copy of the winning story with bespoke illustrations by illustrator and science communicator Hana Ayoob

- A copy of the books “The Good Turn” and “Black Artists Shaping the World”, signed by competition judge Sharna Jackson

Once Upon A Robot Competition runner-up

- Maqueen Lite micro:bit robot – contributed by competition partner the National Robotarium

- A copy of the book “High-Rise Mystery”, signed by competition judge Sharna Jackson

For more information, details of prizes, judging criteria and to submit an entry, please visit https://www.ukras.org.uk/school-robot-competition/.

Both competitions are open for entries from 10th January and will close for submissions on 23rd April. The winners will be announced at a special virtual award ceremony due to be held on 22nd June 2023.

EPSRC UK-RAS Network Chair Prof. Robert Richardson says: “We are absolutely delighted to be launching these two fantastic competitions for primary schoolchildren for the fourth year running, which offer the next generation a creative way to engage with the exciting world of robotics and automation. We can’t wait to see the imagination and ingenuity that the nation’s young authors and artists bring to these challenges, and we look forward to the very enjoyable task of judging this year’s entries.”

The two creative competitions for young children were first launched in 2020 for UK Robotics Week, now the UK Festival of Robotics – a 7-day celebration of robotics and intelligent systems held at the end of June. This annual celebration is hosted by the EPSRC UK Robotics and Autonomous Systems (UK-RAS) Network, which provides academic leadership in robotics and coordinates activities at 35 partner universities across the UK.

BitFlow Identifies Five Machine Vision Trends for 2023

Precision Drive Systems – Spindles for Robotics

Precision Drive Systems – Trust PDS for 3-5 Day Robotic Spindle Repair

Need a hand? This robotic hand can help you pick your food items and plate your dish

What killer robots mean for the future of war

Year end summary: Top Robocar stories of 2022

Here’s my annual summary of the top stories of the prior year. This time the news was a strong mix of bad and good.

Read the text version of this story on Forbes.com: Robocars 2022 year in review.

And see the video version here: