Learn Pytorch: Training your first deep learning models step by step

The physical intelligence of collectives: How ants and robots pull off a prison escape without a plan or a planner

Words prove their worth as teaching tools for robots

ep.363: Going out on a Bionic Limb, with Joel Gibbard

Many people associate prosthetic limbs with nude-colored imitations of human limbs. Something built to blend into a society where people have all of their limbs while serving functional use cases. On the other end of the spectrum are the highly optimized prosthetics used by Athletes, built for speed, low weight, and appearing nothing like a human limb.

As a child under 12 years old, neither of these categories of prosthetics particularly speaks to you. Open Bionics, founded by Joel Gibbard and Samantha Payne, was started to create a third category of prosthetics. One that targets the fun, imaginative side of children, while still providing the daily functional requirements.

Through partnerships with Disney and Lucasfilms, Open Bionics has built an array of imagination-capturing prosthetic limbs that are straight-up cool.

Joel Gibbard dives into why they founded Open Bionics, and why you should invest in their company as they are getting ready to let the general public invest in them for the first time.

Joel Gibbard

Joel Gibbard lives in Bristol, UK and graduated with a first-class honors degree in Robotics from the University of Plymouth, UK.

Joel Gibbard lives in Bristol, UK and graduated with a first-class honors degree in Robotics from the University of Plymouth, UK.

He co-founded Open Bionics alongside Samantha Payne with the goal of bringing advanced, accessible bionic arms to the market. Open Bionics offers the Hero Arm, which is available in the UK, USA, France, Australia, and New Zealand. Open Bionics is revolutionizing the prosthetics industry through its line of inspiration-capturing products.

Links

- Open Bionics

- Pre-register to invest

- Download mp3

- Subscribe to Robohub using iTunes, RSS, or Spotify

- Support us on Patreon

North American Robot Sales On Track For Another Record Year for 2022

North American Robot Sales On Track For Another Record Year for 2022

Should we tax robots?

When to Choose a 4pole DC Motor

CLAIRE and euRobotics: all questions answered on humanoid robotics

On 9 December, CLAIRE and euRobotics jointly hosted an All Questions Answered (AQuA) event. This one hour session focussed on humanoid robotics, and participants could ask questions regarding the current and future state of AI, robotics and human augmentation in Europe.

The questions were fielded by an expert panel, comprising:

- Rainer Bischoff, euRobotics

- Wolfram Burgard, Professor of Robotics and AI, University of Technology Nuremberg

- Francesco Ferro, CEO, PAL Robotics

- Holger Hoos, Chair of the Board of Directors, CLAIRE

The session was recorded and you can watch in full below:

A Guide to the Types of Internal Corporate Communication

In business, internal communication is just as important as external communication. Business leaders are strategists and ideas people, yes, but they are also communicators. Well-run businesses operate efficiently only if they can effectively communicate internally. Many kinds of internal communication are essential in modern business. Here is a very brief rundown of some of the...

The post <strong>A Guide to the Types of Internal Corporate Communication</strong> appeared first on 1redDrop.

A model to enable the autonomous navigation of spacecraft during deep-space missions

Researchers develop winged robot that can land like a bird

Japanese Robot Manufacturer relies on Digital Twin by CENIT

12 years of NCCR Robotics

After 12 years of activity, NCCR Robotics officially ended on 30 November 2022.

We can proudly say that NCCR Robotics has had a truly transformational effect on the national robotics research landscape, creating novel synergies, strengthening key areas, and adding a unique signature that made Switzerland prominent and attractive at the international level.

In its 12 years of activity, NCCR Robotics has had a transformational effect on the national robotics research landscape.

Our highlights include:

- Achieving several breakthroughs in wearable, rescue and educational robotics

- Creating new master’s and doctoral programmes that will train generations of future robotics engineers

- Graduating more than 200 PhD students and 100 postdocs, with more than 1’000 peer-reviewed publications

- Spinning out several projects into companies, many of which have become international leaders and generated more than 400 jobs

- Improving awareness of gender balance in robotics and substantially increasing the percentage of women in robotics in Switzerland

- Kick-starting large outreach programs, such as Cybathlon, Swiss Drone Days, and Swiss Robotics Days, which will continue to increase public awareness of robotics for good

It is not the end of the story though: our partner institutions – EPFL, ETH Zurich, the University of Zurich, the University of Bern, the University of Basel, Università della Svizzera Italiana, EMPA – will continue to collaborate through the Innovation Booster Robotics, a new national program aimed at developing technology transfer activities and maintaining the network.

Research

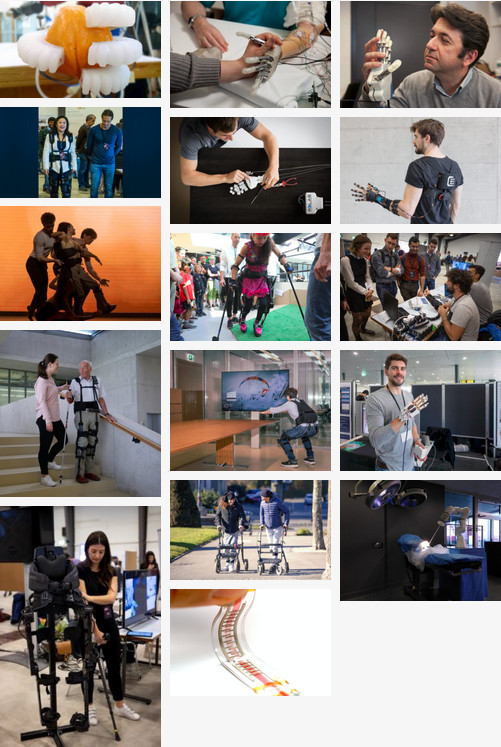

The research programme of NCCR Robotics has been articulated around three Grand Challenges for future intelligent robots that can improve the quality of life: Wearable Robotics, Rescue Robotics, and Educational Robotics.

In the Wearable Robotics Grand Challenge, NCCR Robotics studied and developed a large range of novel prosthetic and orthotic robots, implantable sensors, and artificial intelligence algorithms to restore the capabilities of persons with disabilities and neurological disorders.

For example, researchers developed implantable and assistive technologies that allowed patients with completely paralyzed legs to walk again thanks to a combination of assistive robots (such as Rysen), implantable microdevices that read brain signals and stimulate spinal cord nerves, and artificial intelligence that translate neural signals into gait patterns.

They also developed prosthetic hands with soft sensors and implantable neural stimulators that enable people to feel again the haptic qualities of objects. Along this line, they also studied and developed prototypes of an extra arm and artificial intelligence that could allow humans to control the additional artificial limb in combination with their natural arms for situations that normally require more than one person.

Researchers also developed the MyoSuit textile soft exoskeletons that allow persons on wheelchairs to stand up, take a few steps, and then sit back on the wheelchair without external help.

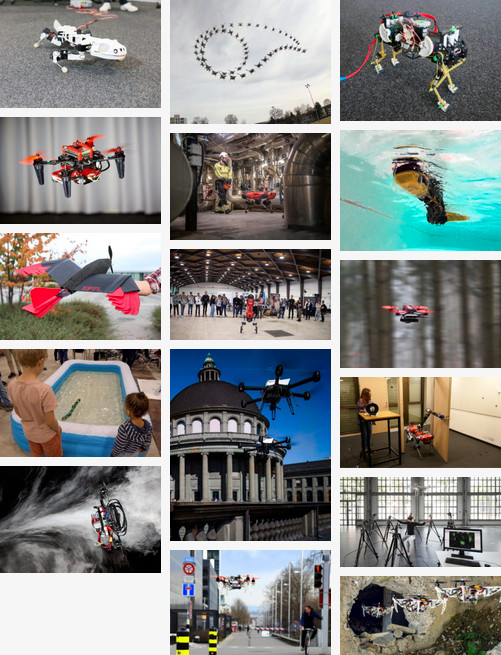

In the Rescue Robotics Grand Challenge, researchers developed and deployed legged and flying robots with self-learning capabilities for use in disaster mitigation as well as in civil and industrial inspection.

Among the most notable results are ANYMal, a quadruped robot that won the first prize in the international DARPA challenge, by exploring underground tunnels and identifying a number of objects; K-rock, an amphibious robot inspired by salamanders and crocodiles that can swim, walk, and squat under narrow passages; a collision-resilient drone that has become the most widely used robot in the world by rescue teams, governments, and companies for inspection of confined spaces, bridges, boilers, and ship tankers, to mention a few; a whole family of foldable drones that can change shape to squeeze through narrow passage, protect nearby persons from propellers, carry cargo of various size and weight, and twist their arms to get close to surfaces and perform repair operations; an avian-inspired drone with artificial feathers that can approximate the flight agility of birds of prey.

In addition, researchers developed powerful learning algorithms that enabled legged robots to walk up mountains and grassy land by adapting their gait, and flying robots that learn to fly and avoid high-speed moving objects using bio-inspired vision systems, and even learned to race through a circuit beating world-champion humans. Researchers also proposed new methods to let inexperienced humans and rescue officers interact with, and easily control, drones as if they were an extension of their own body.

In the Educational Robotics Grand Challenge, NCCR researchers created Thymio, a mobile robot for teaching programming and elements of robotics that has been deployed in more than 80’000 units in classrooms across Switzerland, and Cellulo, a smaller modular robot that allows richer forms of interaction with pupils, as wells as a broad range of learning activities (physics, mathematics, geography, games) and training methods for teaching teachers how to integrate robots in their lectures.

Researchers also teamed with Canton Vaud on a large-scale project to introduce robotics and computer science into all primary-school classes and have already trained more than one thousand teachers.

Outreach

Communication, knowledge (and technology) transfer to society and the economy

Over 12 years, NCCR Robotics researchers published approximately 500 articles in peer-review journals and 500 articles in peer-reviewed conferences and filed approximately 50 patents (one third of which have already been granted). They also developed a tech-transfer support programme to help young researchers translate research results into commercially viable products. As a result, 16 spin-offs were supported, out of which 14 have been incorporated and are still active. Some of these start-ups have become full scale-up companies with products sold all over the world, have raised more than five times capital than the total funds of the NCCR over 12 years, and generated several hundreds of new high-tech jobs in Switzerland.

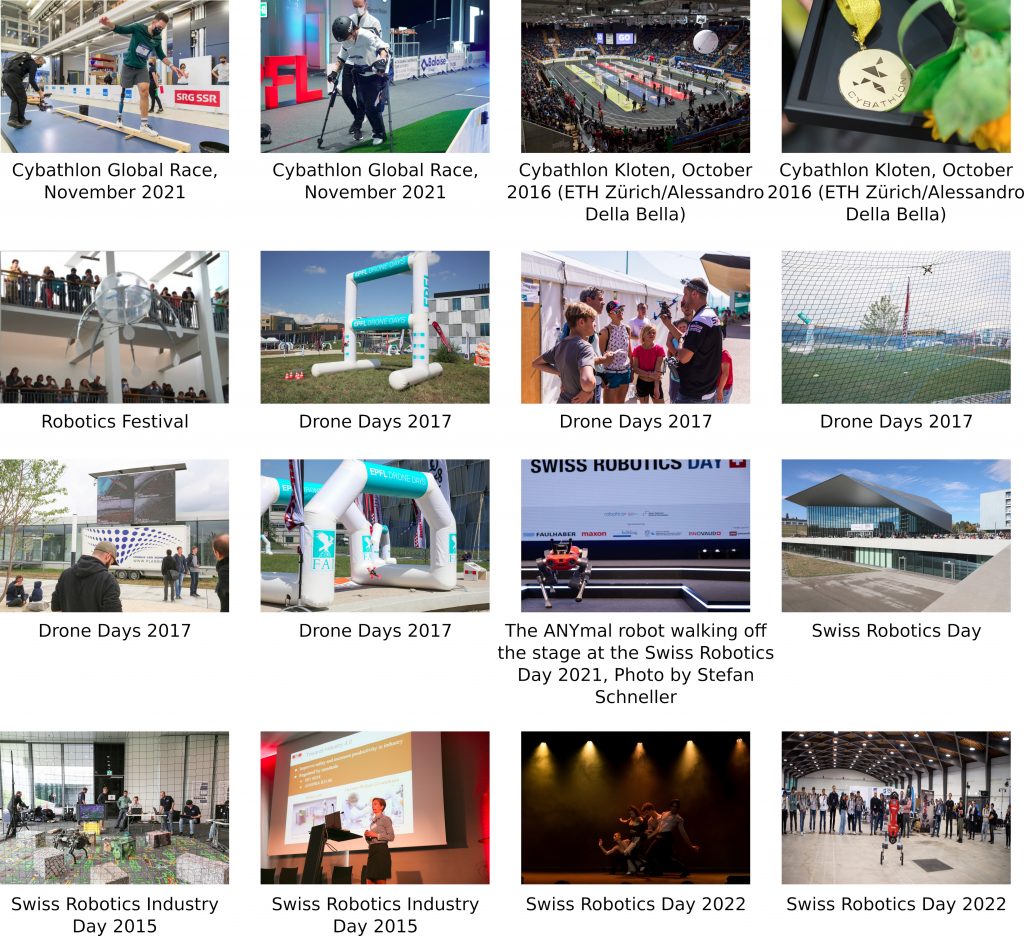

Several initiatives were aimed at the public to communicate the importance of robotics for the quality of life.

- For example, during the first phase NCCR Robotics organized an annual Robotics Festival at EPFL that attracted at its peak 17’000 visitors in one day.

- In the second phase, Cybathlon was launched, a world-first Olympic-style competition for athletes with disabilities and supported by assistive devices, which was later taken over by ETH Zurich that will ensure its continuation.

- Additionally, NCCR Robotics launched the Swiss Drone Days at EPFL that combine drone exhibitions, drone races, and public presentations, and were later taken over by EPFL and most recently by University of Zurich.

- NCCR Robotics also organized the annual Swiss Robotics Day with the aim of bringing together researchers and industry representatives in a full day of high-profile technology presentations from top Swiss and international speakers, demonstrations of research prototypes and robotics products, carousel of pitch presentations by young spin-offs, and several panel discussions and networking events.

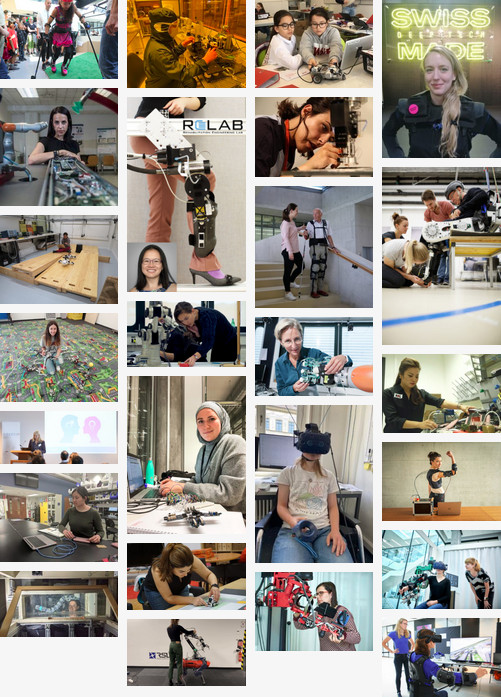

Promotion of young scientists and of academic careers of women

The NCCR Robotics helped develop a new master’s programme and a new PhD programme in robotics at EPFL, created exchange programmes and fellowships with ETH Zurich and top international universities with a program in robotics, and issued several awards for excellence in study, research, technology transfer, and societal impact.

NCCR Robotics has also been very active in addressing equal opportunities. Activities aimed at improving gender balance included dedicated exchange and travel grants, awards for supporting career development, master study fellowships, outreach campaigns, promotional movies and surveys for continuous assessment of the effectiveness of actions. As a result, the percentage of women in the EPFL robotics masters almost doubled in four years, and the number of women postgraduate and assistant professors doubled too. Although much remains to be done in Switzerland, the initial results of these actions are promising and the awareness of the importance of equal opportunity has become pervasive throughout the NCCR Robotics community and in all its research, outreach, and educational activities.

Beyond NCCR Robotics

In order to sustain the long-term impact of NCCR Robotics, EPFL launched a new Center of Intelligent Systems where robotics is a major research pillar and ETH Zurich created a Center for Robotics that includes large research facilities, annual summer schools, and activities to favor collaborations with industry.

Furthermore, EPFL built on NCCR Robotics’ educational technologies and further programmes to create the LEARN Center that will continue training teachers in the use of robots and digital technologies in schools. Similarly, ETH Zurich built on the research and competence developed in the Wearable Robotics Grand Challenge to create the Competence Centre for Rehabilitation Engineering and Science with the goal of restoring and maintaining independence, productivity, and quality of life for people with physical disabilities and contribute towards an inclusive society.

As the project approaches its conclusion, NCCR Robotics members applied for additional funding for the National Thematic Network Innovation Booster Robotics that was launched in 2022 and will continue supporting networking activities and technology transfer in medical and mobile robotics for the next 4 years. Finally, a Swiss Robotics Association comprising stakeholders from academia and industry will be created in order to manage the Innovation Booster programme and offer a communication and collaboration platform for the transformed and enlarged robotics community that NCCR Robotics has contributed to create.