Softbank Robotics Europe cutting workforce 40% in shake-up

Softbank Robotics Europe, the group behind two of the more recognizable robots, is laying off 40% of its workforce. On July 7, the developer of the famous Nao and Pepper robots will reduce its Paris-based workforce, which had 330 employees as of March 2021.

The Robot Report confirmed this news, which was first reported by French media outlet Le Journal du Net. Softbank Robotics Europe lost \$38 million in its fiscal 2019-2020 year and more than \$119 million over the last three years, according to Le Journal du Net.

Despite their worldwide fame, the Nao and Pepper robots never achieved financial success. Most of the 27,000 Nao and Pepper robots were sold in Japan, which is more accepting of humanoid robots than Europe, the United States and other parts of the world. While selling that many robots is certainly an achievement, price and capabilities were often issues, especially for Pepper, which costs \$30,000 in the U.S.

A Softbank Robotics Europe employee, who wished to remain anonymous, told The Robot Report “the market for Nao and Pepper is smaller than we expected.” The source added that it is “not sustainable to have this many workers in Paris based on the economic issues we are facing.”

Softbank Robotics Europe was formed after parent company Softbank acquired Aldebaran Robotics for \$100 million about a decade ago.

Softbank Robotics Europe sent this public statement to The Robot Report:

“Since 2012 SoftBank Robotics Group, a subsidiary of SoftBank, has invested in Humanoid Robotics and intends to keep Pepper & NAO robots business moving forward.

“In the light of the pandemic and economic slowdown, SoftBank Robotics Europe is considering a significant workforce optimization plan. Our EMEA HQ located in Paris is home to about 330 employees, as of March 2021.

“In this difficult time, we want to thank all our employees for their efforts in creating the best humanoids on the planet, and will make the best efforts to ensure fair departure decisions with labor representatives and local consultation bodies in France. The current round of job cuts should be completed by the end of 2021.

“The restructuring project has as one of its objectives to continue to provide product sales, services support and maintenance for Pepper and NAO robots.

“We also want to thank our customers, partners and suppliers for their trust in our Pepper & NAO products.

“SoftBank Robotics Europe will continue to make significant investments in next-generation robots to serve our customers and partners.”

Downplaying Nao & Pepper going forward

This next piece of information isn’t surprising, but it does come as confirmation from a Softbank insider. The source said both Softbank Robotics Europe and Softbank Robotics America won’t be focusing on Nao and Pepper as much going forward.

“There will be less investment in emotional humanoids, and more focus on commercial products such as Whiz,” the source said. The Whiz autonomous floor cleaning robot first went on sale in early 2019 as sales of Pepper lagged.

This is part of a larger shift in strategy for Softbank’s robotics efforts. Softbank Robotics America, for example, recently announced that Nao and Pepper will be available in the U.S. exclusively through San Francisco-based RobotLAB, a company that has focused on educational robots for years. While other media outlets have referred to this as Softbank continuing to expand, it’s actually the reverse. The company is divesting from its direct channel.

Parent company Softbank will maintain the IP for both Nao and Pepper, but it’s looking to outsource much of the sales, service, and support work for these robots as they don’t generate significant revenue. The source told The Robot Report deals similar to the RobotLAB partnership are being explored for Nao and Pepper in other parts of the world.

“Pepper launched in 2014, but Whiz is our flagship robot now,” the source said. “Pepper will remain an icon in robotics, but more business efforts will be put into Whiz going forward.”

It’s been clear for a while that Nao and Pepper weren’t going to be a major part of Softbank’s robotics strategy going forward. In January 2021, for example, Softbank Group announced that Softbank Robotics will jointly develop robots with Japanese electronics maker Iris Ohyama. The joint venture, called Iris Robotics, highlighted two products that are evolutions of existing products. The first product is a new take on Softbank’s Whiz cleaning robot called the “Whiz i Iris Edition.” The second product is an updated version of Bear Robotics’ flagship robot, Servi.

As we pointed out at the time Iris Robotics was announced, neither Nao nor Pepper appeared to be part of the deal. Now we know why.

Softbank has made other changes to its robotics strategy, most notably offloading 80% of its ownership stake in Boston Dynamics to Hyundai for \$880 million. It also paid \$2.8 billion for a 40% ownership stake in AutoStore, a leading developer of automated storage and retrieval systems (AS/RS). Automation in the logistics market is booming, and now Softbank is tied up with a major player. AutoStore currently has a global blue-chip customer base with more than 600 installations and 20,000 robots across 35 countries.

Softbank also recently partnered with Bear Robotics on serving and bussing robots. Founded in 2017, Bear Robotics’ robots operate in restaurants, corporate campuses, ghost kitchens, senior care facilities, and casinos across North America, Asia, and Europe. Softbank is an investor in Bear Robotics.

This article was first published in The Robot Report.

MCRI Webcast Series – Robotics Roundtable

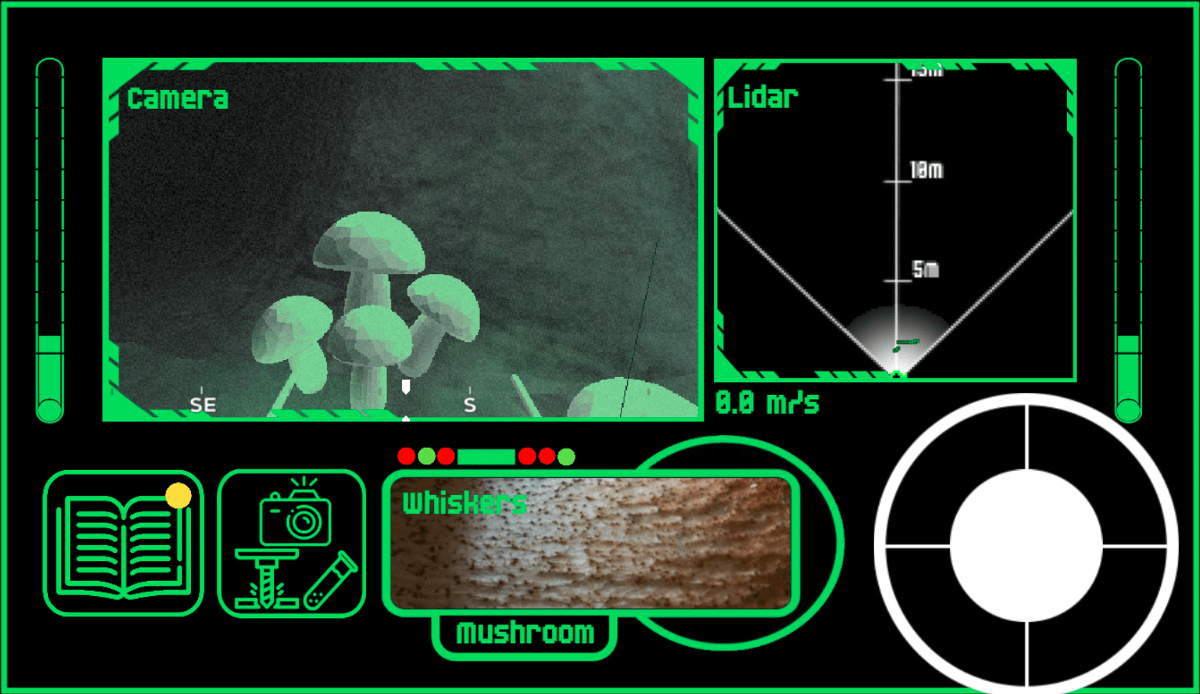

Play RoC-Ex: Robotic Cave Explorer and unearth the truth about what happened in cave system #0393

Dive into the experience of piloting a robotic scout through what appears to be an ancient cave system leading down to the centre of the Earth. With the help of advanced sensors, guide your robot explorer along dark tunnels and caverns, avoiding obstacles, collecting relics of aeons past and, hopefully, discovering what happened to its predecessor.

Mickey Li, Julian Hird, G. Miles, Valentina Lo Gatto, Alex Smith and WeiJie Wong (most from the FARSCOPE CDT programme of the University of Bristol and the University of the West of England) have created this educational game as part of the UK-RAS Festival of Robotics 2021. The game has been designed to teach you how sensors work and how they are used in reality, and perhaps to give a glimpse into the mind of the robot. With luck, this game can show how exciting it can be to work in robotics.

As the authors suggest, you can check out the DARPA Subterranean Challenge (not affiliated with the game) for an example of the real-life version of this game.

The game is hosted online. The site is secured, and no data is collected while you play (apart from the optional anonymous feedback form). The source code for the game is available open source on GitHub. Enjoy!

An expert on search and rescue robots explains the technologies used in disasters like the Florida condo collapse

Texas A&M’s Robin Murphy has deployed robots at 29 disasters, including three building collapses, two mine disasters and an earthquake, as director of the Center for Robot-Assisted Search and Rescue. She has also served as a technical search specialist with the Hillsborough County (Florida) Fire and Rescue Department. The Conversation talked to Murphy to give readers an understanding of the types of technologies that search and rescue crews at the Champlain Towers South disaster site in Surfside, Florida, have at their disposal, as well as some they don’t. The interview has been edited for length.

What types of technologies are rescuers using at the Surfside condo collapse site?

We don’t have reports about it from Miami-Dade Fire Rescue Department, but news coverage shows that they’re using drones.

A standard kit for a technical search specialist would be basically a backpack of tools for searching the interior of the rubble: listening devices and a camera-on-a-wand or borescope for looking into the rubble.

How are drones typically used to help searchers?

They’re used to get a view from above to map the disaster and help plan the search, answering questions like: What does the site look like? Where is everybody? Oh crap, there’s smoke. Where is it coming from? Can we figure out what that part of the rubble looks like?

In Surfside, I wouldn’t be surprised if they were also flying up to look at those balconies that are still intact and the parts that are hanging over. A structural specialist with binoculars generally can’t see accurately above three stories. So they don’t have a lot of ability to determine if a building’s safe for people to be near, to be working around or in, by looking from the ground.

Drones can take a series of photos to generate orthomosaics. Orthomosaics are like those maps of Mars where they use software to glue all the individual photos together and it’s a complete map of the planet. You can imagine how useful an orthomosaic can be for dividing up an area for a search and seeing the progress of the search and rescue effort.

Search and rescue teams can use that same data to build a digital elevation map. That’s software that captures the topography of the rubble, and you can start actually measuring how high the pile is, how thick that slab is, that this piece of rubble must have come from this part of the building, and those sorts of things.
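To make the measurement idea concrete, here is a minimal Python sketch. It assumes the digital elevation map has already been exported as a regular grid of surface elevations in meters and that the surrounding ground level is known. The grid layout and the function names `pile_height` and `step_height` are hypothetical illustrations, not part of any particular photogrammetry package.

```python
from typing import List, Tuple

# Assumption (hypothetical layout): a DEM stored as rows of surface
# elevations in meters, one value per grid cell.
Grid = List[List[float]]
Cell = Tuple[int, int]  # (row, column)


def pile_height(dem: Grid, ground_level: float) -> float:
    """Height of the highest point of the rubble above the surrounding ground."""
    return max(max(row) for row in dem) - ground_level


def step_height(dem: Grid, a: Cell, b: Cell) -> float:
    """Elevation difference between two cells, e.g. across the exposed edge of a slab."""
    return abs(dem[a[0]][a[1]] - dem[b[0]][b[1]])


if __name__ == "__main__":
    # Toy 3x3 grid: flat ground at 2 m with a rubble peak in the middle.
    dem = [
        [2.0, 2.0, 2.0],
        [2.0, 9.5, 6.25],
        [2.0, 6.0, 2.0],
    ]
    print(pile_height(dem, ground_level=2.0))  # 7.5
    print(step_height(dem, (1, 1), (1, 2)))    # 3.25
```

Because a drone-derived elevation map only records the top surface, a measurement like slab thickness is only possible where an edge of the slab is actually exposed to the camera.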

How might ground robots be used in this type of disaster?

The current state of the practice for searching the interior of rubble is to use either a small tracked vehicle, such as an Inuktun VGTV Extreme, which is the most commonly used robot for such situations, or a snakelike robot, such as the Active Scope Camera developed in Japan.

Teledyne FLIR is sending a couple of tracked robots and operators to the site in Surfside, Florida.

Ground robots are typically used to go into places that searchers can’t fit into and to go farther than search cameras can. Search cams typically max out at 18 feet, whereas ground robots have been able to go over 60 feet into rubble. They are also used to enter voids that a rescuer could fit into but that would be unsafe, requiring teams to work for hours shoring them up before anyone could enter safely.

In theory, ground robots could also be used to allow medical personnel to see and talk with survivors trapped in rubble, and carry small packages of water and medicine to them. But so far no search and rescue teams anywhere have found anyone alive with a ground robot.

What are the challenges for using ground robots inside rubble?

The big problem is seeing inside the rubble. You’ve got basically a concrete, sheetrock, piping and furniture version of pickup sticks. If you can get a robot into the rubble, then the structural engineers can see the interior of that pile of pickup sticks and say “Oh, OK, we’re not going to pull on that, that’s going to cause a secondary collapse. OK, we should start on this side, we’ll get through the debris quicker and safer.”

Going inside rubble piles is really hard. Scale is important. If the void spaces are on the order of the size of the robot, it’s tricky. If something goes wrong, it can’t turn around; it has to drive backward. Tortuosity – how many turns per meter – is also important. The more turns, the harder it is.
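Murphy’s working definition of tortuosity, turns per meter, can be computed from a robot’s logged path. The sketch below assumes the path is a list of 2D waypoints in meters and counts a “turn” wherever the heading changes by at least a threshold; the 30-degree default and the function name `turns_per_meter` are hypothetical choices. It is one simple way to operationalize her definition, not an established field metric; other tortuosity measures exist, such as the ratio of path length to straight-line distance.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) in meters


def turns_per_meter(path: List[Point], min_turn_deg: float = 30.0) -> float:
    """Count heading changes of at least min_turn_deg along a polyline,
    divided by the total path length in meters."""
    if len(path) < 2:
        return 0.0
    length = sum(math.dist(a, b) for a, b in zip(path, path[1:]))
    if length == 0:
        return 0.0
    # Heading of each segment, in radians.
    headings = [math.atan2(b[1] - a[1], b[0] - a[0]) for a, b in zip(path, path[1:])]
    turns = 0
    for h0, h1 in zip(headings, headings[1:]):
        # Wrap the difference into [-pi, pi) so a 350° -> 10° change counts as 20°.
        delta = (h1 - h0 + math.pi) % (2 * math.pi) - math.pi
        if abs(math.degrees(delta)) >= min_turn_deg:
            turns += 1
    return turns / length


if __name__ == "__main__":
    # A 4 m staircase path with three 90-degree turns.
    zigzag = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (2.0, 1.0), (2.0, 2.0)]
    print(turns_per_meter(zigzag))                    # 0.75
    print(turns_per_meter([(0.0, 0.0), (4.0, 0.0)]))  # 0.0
```

The threshold matters: a low value counts small wobbles from uneven footing as turns, while a high value only counts the sharp bends that force a robot to back out.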

There are also different surfaces. The robot may be on a concrete floor, and the next thing it’s on a patch of somebody’s shag carpeting. Then it’s got to go through a bunch of concrete that’s been pulverized into sand. There’s dust kicking up. The surroundings may be wet from all the sewage and all the water from sprinkler systems, and the sand and dust start acting like mud. So it gets really hard really fast in terms of mobility.

What is your current research focus?

We look at human-robot interaction. We discovered that of all of the robots we could find in use, including ours – and we were the leading group in deploying robots in disasters – 51% of the failures during a disaster deployment were due to human error.

It’s challenging to work in these environments. I’ve never been in a disaster where there wasn’t some sort of surprise related to perception, something that you didn’t realize you needed to look for until you’re there.

What is your ideal search and rescue robot?

I’d like someone to develop a robot ferret. Ferrets are kind of snakey-looking mammals. But they have legs, small little legs. They can scoot around like a snake. They can claw with their little feet and climb up on uneven rocks. They can do a full meerkat, meaning they can stretch up really high and look around. They’re really good at balance, so they don’t fall over. They can be looking up and all of a sudden the ground starts to shift and they’re down and they’re gone – they’re fast.

How do you see the field of search and rescue robots going forward?

There’s no real funding for these types of ground robots. So there’s no economic incentive to develop robots for building collapses, which are very rare, thank goodness.

And the public safety agencies can’t afford them. They typically cost US\$50,000 to \$150,000 versus as little as \$1,000 for an aerial drone. So the cost-benefit doesn’t seem to be there.

I’m very frustrated with this. We’re still at about the same level we were 20 years ago at the World Trade Center.

Robin R. Murphy volunteers with the Center for Robot-Assisted Search and Rescue. She receives funding from the National Science Foundation for her work in disaster robotics and with CRASAR. She is affiliated with Texas A&M.

Original post published in The Conversation.