
Robot Swarms in the Real World workshop at IEEE ICRA 2021

Siddharth Mayya (University of Pennsylvania), Gennaro Notomista (CNRS Rennes), Roderich Gross (The University of Sheffield) and Vijay Kumar (University of Pennsylvania) organised this IEEE ICRA 2021 workshop, which aimed to identify and accelerate developments that help swarm robotics technology transition into the real world. Here we bring you the recordings of the session in case you missed it or would like to re-watch.

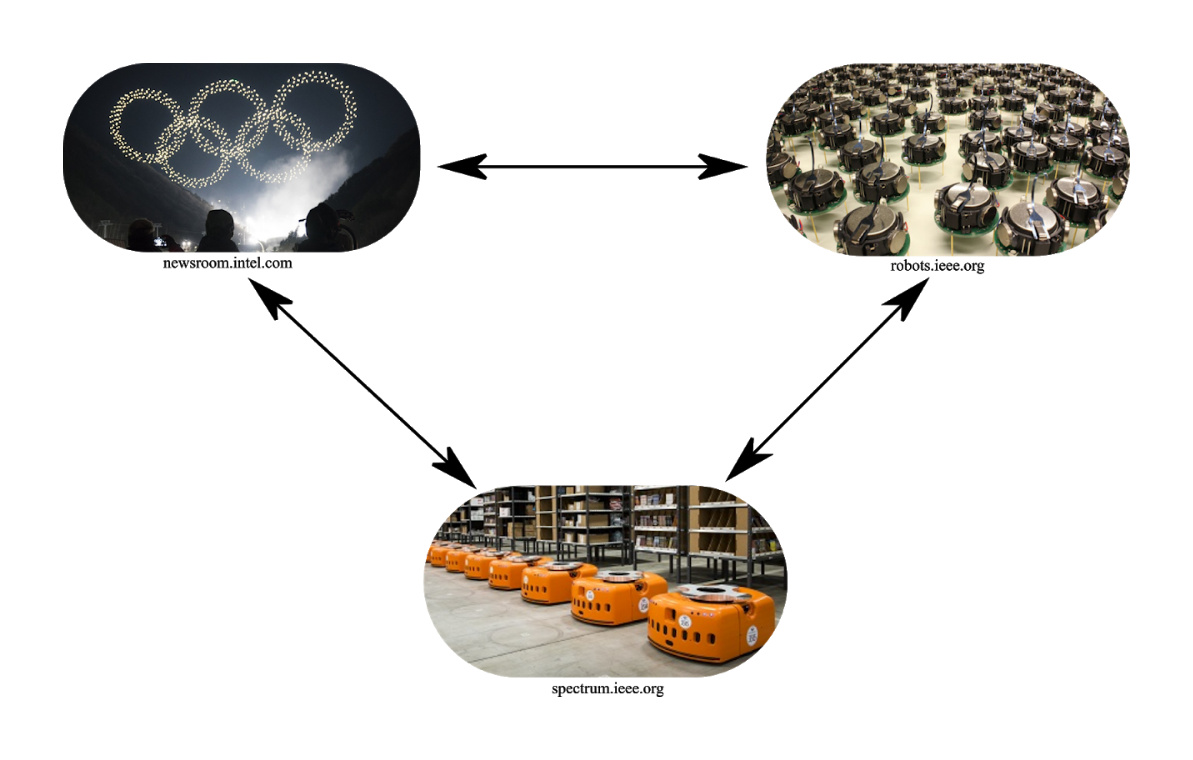

As the organisers describe, “in swarm robotics systems, coordinated behaviors emerge via local interactions among the robots as well as between robots and the environment. From Kilobots to Intel Aeros, the last decade has seen a rapid increase in the number of physically instantiated robot swarms. Such deployments can be broadly classified into two categories: in-laboratory swarms designed primarily as research aids, and industry-led efforts, especially in the entertainment and automated warehousing domains. In both of these categories, researchers have accumulated a vast amount of domain-specific knowledge, for example, regarding physical robot design, algorithm and software architecture design, human-swarm interfacing, and the practicalities of deployment.” The workshop brought together swarm roboticists from academia and industry to share their latest developments, from theory to real-world deployment. Enjoy the playlist with all the recordings below!


IEEE ICRA 2021 Awards (with videos and papers)

Did you have the chance to attend the 2021 International Conference on Robotics and Automation (ICRA 2021)? Here we bring you the papers that received an award this year in case you missed them. Congratulations to all the winners and finalists!

Best Paper Award in Automation

Automated Fabrication of the High-Fidelity Cellular Micro-Scaffold through Proportion-Corrective Control of the Photocuring Process. Xin Li, Huaping Wang, Qing Shi, JiaXin Liu, Zhanhua Xin, Xinyi Dong, Qiang Huang and Toshio Fukuda

“An essential and challenging use case solved and evaluated convincingly. This work brings to light the artisanal field that can gain a lot in terms of safety and worker’s health preservation through the use of collaborative robots. Simulation is used to design advanced control architectures, including virtual walls around the cutting-tool as well as adaptive damping that would account for the operator know-how and level of expertise.”

Access the paper here.

Best Paper Award in Cognitive Robotics

How to Select and Use Tools?: Active Perception of Target Objects Using Multimodal Deep Learning. Namiko Saito, Tetsuya Ogata, Satoshi Funabashi, Hiroki Mori and Shigeki Sugano

“Robots benefit from being able to select and use appropriate tools. This paper contributes to the advancement of robotics by focusing on tool-object-action relations. The proposed deep neural network model generates motions for tool selection and use. Results demonstrated for a relatively complex ingredient handling task have broader applications in robotics. The approach that relies on active perception and multimodal information fusion is an impactful contribution to cognitive robotics.”

Access the paper here or here.

Best Paper Award on Human-Robot Interaction (HRI)

Reactive Human-To-Robot Handovers of Arbitrary Objects. Wei Yang, Chris Paxton, Arsalan Mousavian, Yu-Wei Chao, Maya Cakmak and Dieter Fox

“This paper presents a method combining realtime motion planning and grasp selection for object handover task from a human to a robot, with effective evaluation on a user study on 26 diverse household objects. The incremental contribution has been made for human robot interaction. Be great if the cost function of best grasp selection somehow involves robotic manipulation metric, eg., form closure.”

Access the paper here.

Best Paper Award on Mechanisms and Design

Soft Hybrid Aerial Vehicle Via Bistable Mechanism. Xuan Li, Jessica McWilliams, Minchen Li, Cynthia Sung and Chenfanfu Jiang

“This paper presents a novel morphing hybrid aerial vehicle with folding wings that exhibits both a quadrotor and a fixed wing mode without requiring any extra actuation by leveraging the motion of a bistable mechanism at the center of the aircraft. A topology optimization method is developed to optimize the bistable mechanism and the folding wing. This work is an important contribution to design of hybrid aerial vehicles.”

Access the paper here.

Best Paper Award in Medical Robotics

Relational Graph Learning on Visual and Kinematics Embeddings for Accurate Gesture Recognition in Robotic Surgery. Yonghao Long, Jie Ying Wu, Bo Lu, Yueming Jin, Mathias Unberath, Yunhui Liu, Pheng Ann Heng and Qi Dou

“This paper presents a novel online multi-modal graph learning method to dynamically integrate complementary information in video and kinematics data from robotic systems, to achieve accurate surgical gesture recognition. The proposed method is validated on collected in-house dVRK datasets, shedding light on the general efficacy of their approach.”

Access the paper here.

Best Paper Award on Multi-Robot Systems

Optimal Sequential Stochastic Deployment of Multiple Passenger Robots. Chris (Yu Hsuan) Lee, Graeme Best and Geoffrey Hollinger

“The paper presents rigorous results (well validated experimentally) and visionary ideas: the innovative idea of marsupial robots is very promising for the multi-robot systems community.”

Access the paper here.

Best Paper Award in Robot Manipulation

StRETcH: A Soft to Resistive Elastic Tactile Hand. Carolyn Matl, Josephine Koe and Ruzena Bajcsy

“The committee was particularly impressed by the high level of novelty in this work with unique applications for tactile manipulation of soft objects. Both the paper and presentation provided a clear description of the problem solved, methods and contribution suitable for the general ICRA audience. Significant experimental validations made for a compelling record of the contribution.”

Access the paper here.

Best Paper Award in Robotic Vision

Interval-Based Visual-LiDAR Sensor Fusion. Raphael Voges and Bernardo Wagner

“The paper proposes to use interval analysis to propagate the error from the input sources to the fused information in a straightforward way. To show the applicability of our approach, the paper uses the fused information for dead reckoning. An evaluation using real data shows that the proposed approach localizes the robot in a guaranteed way.”

Access the paper here.

Best Paper Award in Service Robotics

Compact Flat Fabric Pneumatic Artificial Muscle (ffPAM) for Soft Wearable Robotic Devices. Woojong Kim, Hyunkyu Park and Jung Kim

“This paper presents design and evaluation of a novel flat fabric pneumatic artificial muscle with embedded sensing. Experimental results clearly demonstrate that the innovative ffPAM is durable, compact, and has great potential to advance broader application of wearable service robots.”

Access the paper here.

Best Paper Award on Unmanned Aerial Vehicles

Aerial Manipulator Pushing a Movable Structure Using a DOB-Based Robust Controller. Dongjae Lee, Hoseong Seo, Inkyu Jang, Seung Jae Lee and H. Jin Kim

“This paper provides a robust control approach that maintains UAV stability through manipulator contact forces during pushing. It contributes control design along with convincing experimental validation on manipulated objects of unknown size and dynamics. The approach provides practical utility for unmanned aerial manipulation with contact forces.”

Access the paper here.

Best Student Paper Award

Unsupervised Learning of Lidar Features for Use in a Probabilistic Trajectory Estimator. David Juny Yoon, Haowei Zhang, Mona Gridseth, Hugues Thomas and Timothy Barfoot

“The paper presents an unsupervised parameter learning approach in the context of Gaussian variational inference. The approach is innovative and sound. It has been well evaluated using open benchmark datasets. The paper has a broad impact on autonomous navigation.”

Access the paper here.

Best Conference Paper Award

Extrinsic Contact Sensing with Relative-Motion Tracking from Distributed Tactile Measurements. Daolin Ma, Siyuan Dong and Alberto Rodriguez

“The paper makes a notable contribution to the important and re-emerging field of tactile perception by solving the problem of contact localization between an unknown object held by an imprecise grasp and the unknown environment with which it is in contact. This paper represents an excellent theory-to-practice exercise as the novel proposal of using extrinsic tactile array data to infer contact is verified with a new tactile sensor and real robotic manipulation in a simplified, but realistic environment. The authors also provide a robust and honest discussion of results, both positive and negative, for reader evaluation.”

Access the paper here.

Best Video Demonstration Award

Merihan Alhafnawi at the Robot Swarms in the Real World workshop.

Access the video here.