WAZE for drones: expanding the national airspace

Sitting in New York City, looking up at the clear June skies, I wonder if I am staring at an endangered phenomenon. According to many in the Unmanned Aircraft Systems (UAS) industry, skylines across the country soon will be filled with flying cars, quadcopter deliveries, emergency drones, and other robo-flyers. Moving one step closer to this mechanically-induced hazy future, General Electric (GE) announced last week the launch of AiRXOS, a “next generation unmanned traffic” management system.

Managing the National Airspace is already a political football with the Trump Administration proposing privatizing the air-control division of the Federal Aviation Administration (FAA), taking its controller workforce of 15,000 off the government’s books. The White House argues that this would enable the FAA to modernize and adopt “NextGen” technologies to speed commercial air travel. While this budgetary line item is debated in the halls of Congress, one certainty inside the FAA is that the National Airspace (NAS) will have to expand to make room for an increased amount of commercial and recreational traffic, the majority of which will be unmanned.

Ken Stewart, the General Manager of AiRXOS, boasts, “We’re addressing the complexity of integrating unmanned vehicles into the national airspace. When you’re thinking about getting a package delivered to your home by drone, there are some things that need to be solved before we can get to that point.” The first step for the new division of GE is to pilot the system in a geographically-controlled airspace. To accomplish this task, DriveOhio’s UAS Center invested millions in the GE startup. Accordingly, the first test deployment of AiRXOS will be conducted over a 35-mile stretch of Ohio’s U.S. Route 33, with sensors placed along the road to detect and report on air traffic. GE states that this trial will lay the foundation for the UAS industry. As Alan Caslavka, president of Avionics at GE Aviation, explains, “AiRXOS is addressing the rapid changes in autonomous vehicle technology, advanced operations, and in the regulatory environment. We’re excited for AiRXOS to help set the standard for autonomous and manned aerial vehicles to share the sky safely.”

Stewart whimsically calls his new air traffic control platform WAZE for drones. Like the popular navigation app, AiRXOS provides drone operators with real-time flight-planning data so they can automatically avoid obstacles and other aircraft and route around inclement weather. The company also plans to integrate with the FAA to streamline regulatory communications with the agency. Stewart explains that this will speed up authorizations, because today, “It’s difficult to get [requests] approved because the FAA hasn’t collected enough data to make a decision about whether something is safe or not.”
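To make the navigation-app analogy concrete, here is a minimal sketch of routing around blocked airspace. It is not AiRXOS code: the grid model, the `plan_route` function, and the sample `airspace` layout are all assumptions made for illustration, and the search is a plain breadth-first search rather than anything GE has described.

```python
from collections import deque


def plan_route(grid, start, goal):
    """Return the shortest list of (row, col) cells from start to goal.

    Hypothetical model: the airspace is a 2-D grid where 0 is a free cell
    and 1 is a blocked cell (an obstacle, other traffic, or bad weather).
    Uses breadth-first search; returns None when no route exists.
    """
    rows, cols = len(grid), len(grid[0])
    previous = {start: None}  # maps each visited cell to the cell it came from
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the back-pointers to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = previous[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in previous:
                previous[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# Illustrative layout: a two-cell storm front sits in the middle row.
airspace = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
route = plan_route(airspace, (0, 0), (2, 3))
```

A real traffic-management system would work in three dimensions with moving obstacles and live data feeds; the sketch only shows the basic idea of planning around cells that are known to be unavailable.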

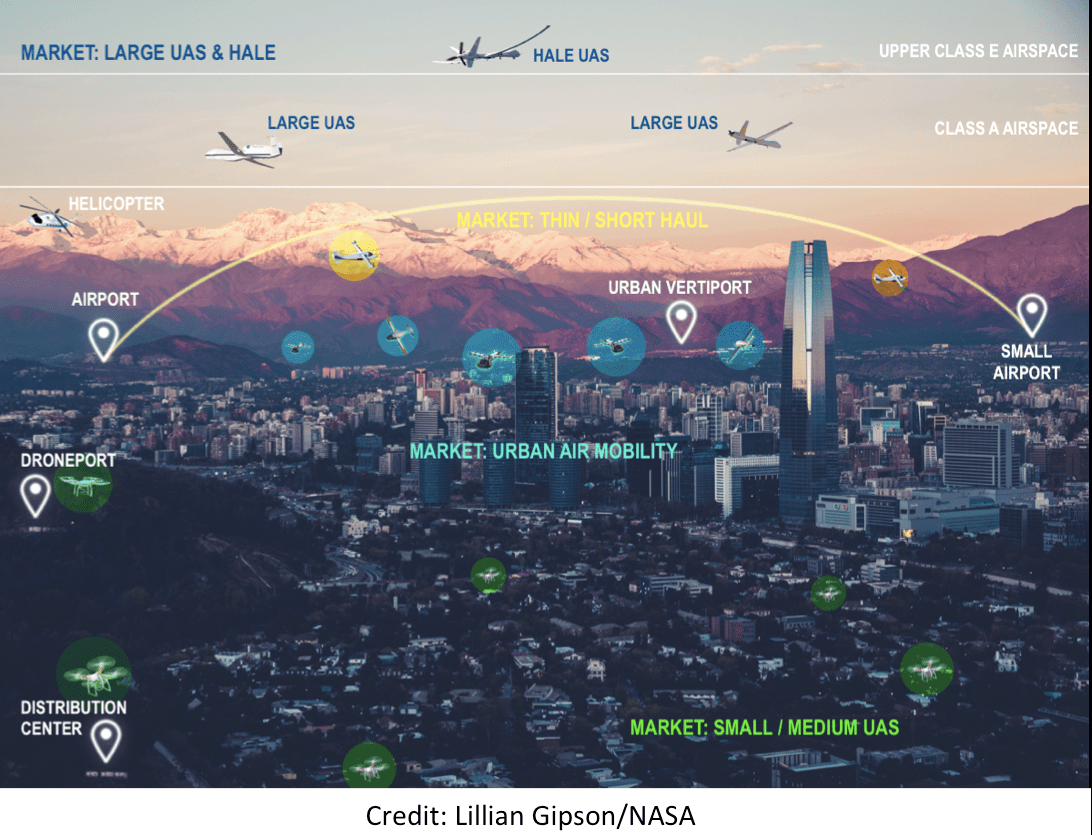

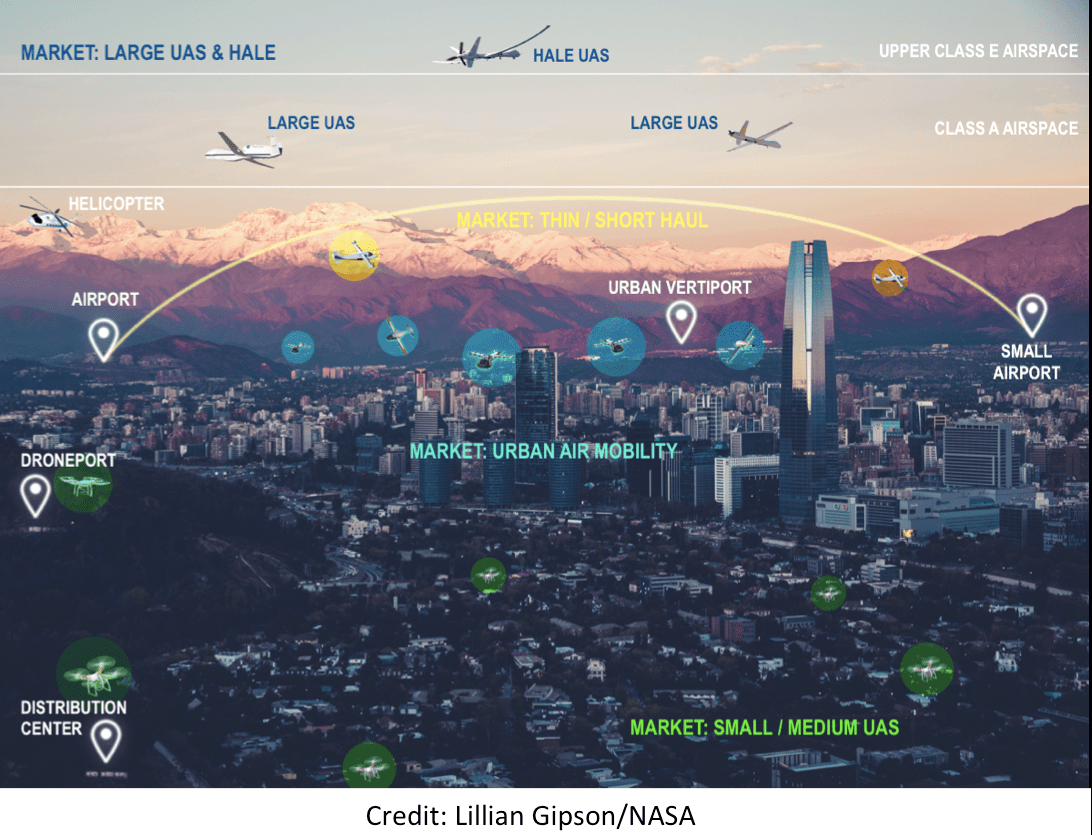

NASA is a key partner in counseling the FAA in integrating commercial UAS into the NAS. Charged with removing the “technical and regulatory barriers that are limiting the ability for civil UAS to fly in the NAS” is Davis Hackenberg of NASA’s Armstrong Flight Research Center. Last year, we invited Hackenberg to present his UAS vision to RobotLabNYC. Hackenberg shared with the packed audience NASA’s multi-layered approach to parsing the skies for a wide range of aircraft, including: high altitude long endurance flights, commercial airliners, small recreational craft, quadcopter inspections, drone deliveries and urban aerial transportation. Recently, the FAA instituted a new regulation mandating that all aircraft be equipped with Automatic Dependent Surveillance-Broadcast (ADS-B) systems by January 1, 2020. The FAA calls such equipment “foundational NextGen technology that transforms aircraft surveillance using satellite-based positioning,” essentially connecting human-piloted craft to computers on the ground and, quite possibly, in the sky. Many believe this is a critical step towards delivering on the long-awaited promise of the commercial UAS industry with autonomous beyond visual line of sight flights.

I followed up this week with Hackenberg about the news of AiRXOS and the new FAA guidelines. He explained, “For aircraft operating in an ADS-B environment, testing the cooperative exchange of information on position and altitude (and potentially intent) still needs to be accomplished in order to validate the accuracy and reliability necessary for a risk-based safety case.” Hackenberg continued to describe how ADS-B might not help low altitude missions, “For aircraft operating in an environment where many aircraft are not transmitting position and altitude (non-transponder equipped aircraft), developing low cost/weight/power solutions for DAA [Detect and Avoid] and C2 [Command and Control Systems] is critical to ensure that the unmanned aircraft can remain a safe distance from all traffic. Finally, the very low altitude environment (package delivery and air taxi) will need significant technology development for similar DAA/C2 solutions, as well as certified much more (e.g. vehicles to deal with hazardous weather conditions).” The Deputy Project Manager then shared with me his view of the future, “In the next five years, there will be significant advancements in the introduction of drone deliveries. The skies will not be ‘darkened,’ but there will likely be semi-routine service to many areas of the country, particularly major cities. I also believe there will be at least a few major cities with air taxi service using optionally piloted vehicles within the 10-year horizon. Having the pilot onboard in the initial phase may be a critical stepping-stone to gathering sufficient data to justify future safety cases. And then hopefully soon enough there will be several cities with fully autonomous taxi service.”

Last month, Uber already ambitiously declared at its Elevate Summit that its ride-hail program will begin shuttling humans by 2023. Uber plans to deploy electric vertical take-off and landing (eVTOL) vehicles throughout major metropolitan areas. “Ultimately, where we want to go is about urban mobility and urban transport, and being a solution for the cities in which we operate,” says Uber CEO, Dara Khosrowshahi. Uber has been cited by many civil planners as the primary cause for increased urban congestion. Its eVTOL plan, called uberAIR, is aimed at alleviating terrestrial vehicle traffic by offsetting commutes with autonomous air taxis that are centrally located on rooftops throughout city centers.

One of Uber’s first test locations for uberAIR will be Dallas-Fort Worth, Texas. Tom Prevot, Uber’s Director of Engineering for Airspace Systems, describes the company’s effort to design Dynamic Skylane Networks of virtual lanes for its eVTOLs to travel, “We’re designing our flight paths essentially to stay out of the scheduled air carriers’ flight paths initially. We do want to test some of these concepts of maybe flying in lanes and flying close to each other but in a very safe environment, initially.” To accomplish these objectives, Prevot’s group signed a Space Act Agreement with NASA to determine the requirements for its aerial ride-share network. Using Uber’s data, NASA is already simulating small-passenger flights around the Texas city to identify potential risks to an already crowded airspace.

After the Elevate conference, media reports hyped the imminent arrival of flying taxis. Rodney Brooks (considered by many as the godfather of robotics) responded with a tweet: “Headline says ‘prototype’, story says ‘concept’. This is a big difference, and symptomatic of stupid media hype. Really!!!” Dan Elwell, FAA Acting Administrator, was much more subdued with his opinion of how quickly the technology will arrive, “Well, we’ll see.”

Editor’s Note: This week we will explore regulating unmanned systems further with Democratic Presidential Candidate Andrew Yang and New York State Assemblyman Clyde Vanel at the RobotLab forum on “The Politics Of Automation” in New York City.

Robots in Depth with Andrew Graham

In this episode of Robots in Depth, Per Sjöborg speaks with Andrew Graham about snake arm robots that can get into impossible locations and do things no other system can.

Andrew tells the story about starting OC Robotics as a way to ground his robotics development efforts in a customer need. He felt that making something useful gave a great direction to his projects.

We also hear about some of the unique properties of snake arm robots:

– They can fit in any space that the tip of the robot can get through

– They can operate in very tight locations, as they are flexible along their entire length and therefore do not need to sweep large areas to move

– They are easy to seal up so that they don’t interact with the environment they operate in

– They are set up in two parts where the part exposed to the environment and to risk is the cheaper part

Andrew then shares some interesting insights from the many projects he has worked on, from fish processing and suit making to bomb disposal and servicing of nuclear power plants.

This interview was recorded in 2015.

Teaching robots how to move objects

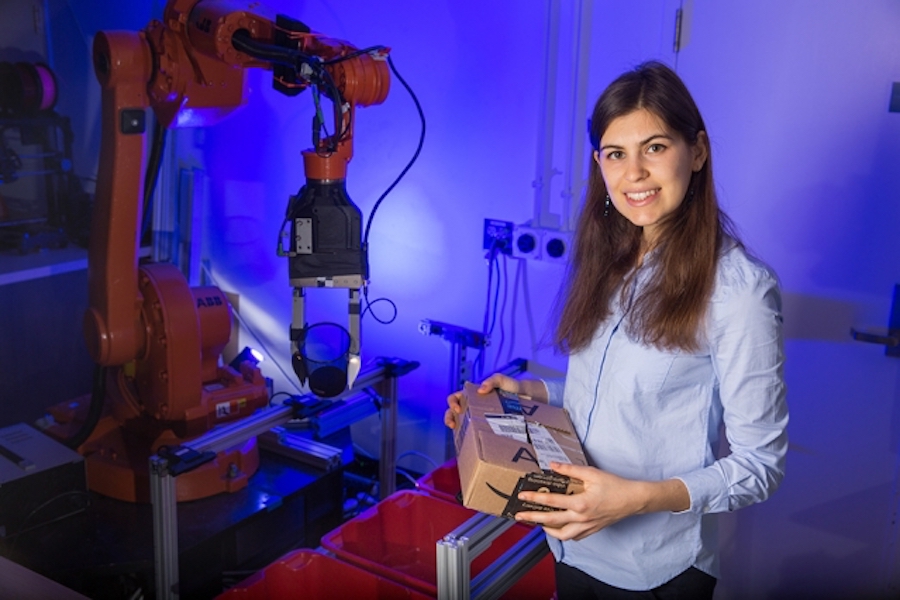

Photo: Tony Pulsone

By Mary Beth O’Leary

With the push of a button, months of hard work were about to be put to the test. Sixteen teams of engineers convened in a cavernous exhibit hall in Nagoya, Japan, for the 2017 Amazon Robotics Challenge. The robotic systems they built were tasked with removing items from bins and placing them into boxes. For graduate student Maria Bauza, who served as task-planning lead for the MIT-Princeton Team, the moment was particularly nerve-wracking.

“It was super stressful when the competition started,” recalls Bauza. “You just press play and the robot is autonomous. It’s going to do whatever you code it for, but you have no control. If something is broken, then that’s it.”

Robotics has been a major focus for Bauza since her undergraduate career. She studied mathematics and engineering physics at the Polytechnic University of Catalonia in Barcelona. During a year as a visiting student at MIT, Bauza was able to put her interest in computer science and artificial intelligence into practice. “When I came to MIT for that year, I started applying the tools I had learned in machine learning to real problems in robotics,” she adds.

Two creative undergraduate projects gave her even more practice in this area. In one project, she hacked the controller of a toy remote control car to make it drive in a straight line. In another, she developed a portable robot that could draw on the blackboard for teachers. The robot was given an image of Mona Lisa and, after going through an algorithm, it drew that image on the blackboard. “That was the first small success in my robotics career,” says Bauza.

After graduating with her bachelor’s degree in 2016, she joined the Manipulation and Mechanisms Laboratory at MIT (known as MCube Lab) under Assistant Professor Alberto Rodriguez’s guidance. “Maria brings together experience in machine learning and a strong background in mathematics, computer science, and mechanics, which makes her a great candidate to grow into a leader in the fields of machine learning and robotics,” says Rodriguez.

For her PhD thesis, Bauza is developing machine-learning algorithms and software to improve how robots interact with the world. MCube’s multidisciplinary team provides the support needed to pursue this goal.

“In the end, machine learning can’t work if you don’t have good data,” Bauza explains. “Good data comes from good hardware, good sensors, good cameras — so in MCube we all collaborate to make sure the systems we build are powerful enough to be autonomous.”

To create these robust autonomous systems, Bauza has been exploring the notion of uncertainty when robots pick up, grasp, or push an object. “If the robot could touch the object, have a notion of tactile information, and be able to react to that information, it will have much more success,” explains Bauza.

Improving how robots interact with the world and reason to find the best possible outcome was crucial to the Amazon Robotics Challenge. Bauza built the code that helped the MIT-Princeton Team robot understand what object it was interacting with, and where to place that object. “Maria was in charge of developing the software for high-level decision making,” explains Rodriguez. “She did it without having prior experience in big robotic systems and it worked out fantastic.”

Bauza’s mind was at ease within a few minutes of the start of the 2017 Amazon Robotics Challenge. “After a few objects that you do well, you start to relax,” she remembers. “You realize the system is working. By the end it was such a good feeling!”

Bauza and the rest of the MCube team walked away with first place in the “stow task” portion of the challenge. They will continue to work with Amazon on perfecting the technology they developed.

While Bauza tackles the challenge of developing software to help robots interact with their environments, she has her own personal challenge to tackle: surviving winter in Boston. “I’m from the island of Menorca off the coast of Spain, so Boston winters have definitely been an adjustment,” she adds. “Every year I buy warmer clothes. But I’m really lucky to be here and be able to collaborate with Professor Rodriguez and the MCube team on developing smart robots that interact with their environment.”

Teaching robots how to move objects

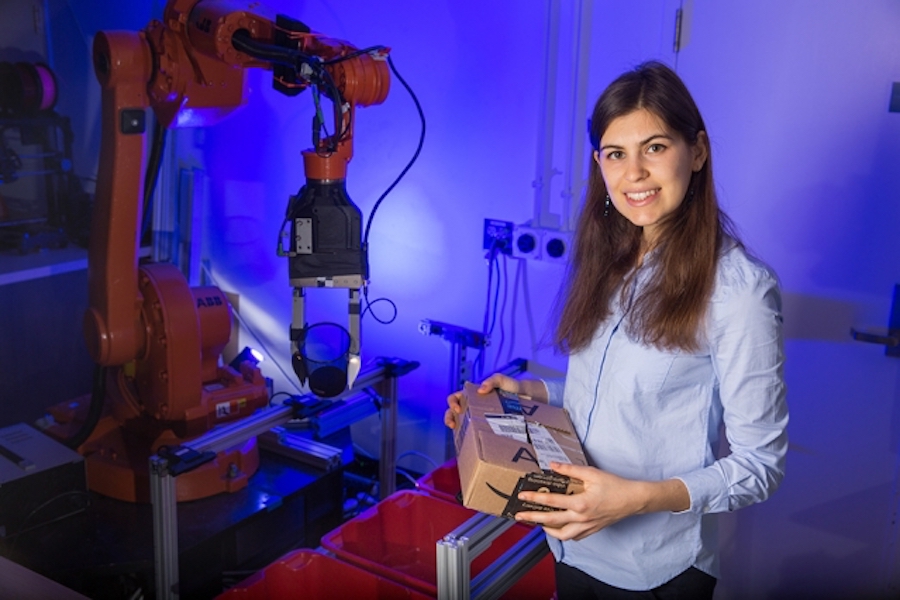

Photo: Tony Pulsone

By Mary Beth O’Leary

With the push of a button, months of hard work were about to be put to the test. Sixteen teams of engineers convened in a cavernous exhibit hall in Nagoya, Japan, for the 2017 Amazon Robotics Challenge. The robotic systems they built were tasked with removing items from bins and placing them into boxes. For graduate student Maria Bauza, who served as task-planning lead for the MIT-Princeton Team, the moment was particularly nerve-wracking.

“It was super stressful when the competition started,” recalls Bauza. “You just press play and the robot is autonomous. It’s going to do whatever you code it for, but you have no control. If something is broken, then that’s it.”

Robotics has been a major focus for Bauza since her undergraduate career. She studied mathematics and engineering physics at the Polytechnic University of Catalonia in Barcelona. During a year as a visiting student at MIT, Bauza was able to put her interest in computer science and artificial intelligence into practice. “When I came to MIT for that year, I starting applying the tools I had learned in machine learning to real problems in robotics,” she adds.

Two creative undergraduate projects gave her even more practice in this area. In one project, she hacked the controller of a toy remote control car to make it drive in a straight line. In another, she developed a portable robot that could draw on the blackboard for teachers. The robot was given an image of Mona Lisa and, after going through an algorithm, it drew that image on the blackboard. “That was the first small success in my robotics career,” says Bauza.

After graduating with her bachelor’s degree in 2016, she joined the Manipulation and Mechanisms Laboratory at MIT (known as MCube Lab) under Assistant Professor Alberto Rodriguez’s guidance. “Maria brings together experience in machine learning and a strong background in mathematics, computer science, and mechanics, which makes her a great candidate to grow into a leader in the fields of machine learning and robotics,” says Rodriguez.

For her PhD thesis, Bauza is developing machine-learning algorithms and software to improve how robots interact with the world. MCube’s multidisciplinary team provides the support needed to pursue this goal.

“In the end, machine learning can’t work if you don’t have good data,” Bauza explains. “Good data comes from good hardware, good sensors, good cameras — so in MCube we all collaborate to make sure the systems we build are powerful enough to be autonomous.”

To create these robust autonomous systems, Bauza has been exploring the notion of uncertainty when robots pick up, grasp, or push an object. “If the robot could touch the object, have a notion of tactile information, and be able to react to that information, it will have much more success,” explains Bauza.

Improving how robots interact with the world and reason to find the best possible outcome was crucial to the Amazon Robotics Challenge. Bauza built the code that helped the MIT-Princeton Team robot understand what object it was interacting with, and where to place that object. “Maria was in charge of developing the software for high-level decision making,” explains Rodriguez. “She did it without having prior experience in big robotic systems and it worked out fantastic.”

Bauza’s mind was at ease within a few minutes of 2017 Amazon Robotics Challenge. “After a few objects that you do well, you start to relax,” she remembers. “You realize the system is working. By the end it was such a good feeling!”

Bauza and the rest of the MCube team walked away with first place in the “stow task” portion of the challenge. They will continue to work with Amazon on perfecting the technology they developed.

While Bauza tackles the challenge of developing software to help robots interact with their environments, she has her own personal challenge to tackle: surviving winter in Boston. “I’m from the island of Menorca off the coast of Spain, so Boston winters have definitely been an adjustment,” she adds. “Every year I buy warmer clothes. But I’m really lucky to be here and be able to collaborate with Professor Rodriguez and the MCube team on developing smart robots that interact with their environment.”

Magnetic 3-D-printed structures crawl, roll, jump, and play catch

Photo: Felice Frankel

By Jennifer Chu

MIT engineers have created soft, 3-D-printed structures whose movements can be controlled with a wave of a magnet, much like marionettes without the strings.

The menagerie of structures that can be magnetically manipulated includes a smooth ring that wrinkles up, a long tube that squeezes shut, a sheet that folds itself, and a spider-like “grabber” that can crawl, roll, jump, and snap together fast enough to catch a passing ball. It can even be directed to wrap itself around a small pill and carry it across a table.

The researchers fabricated each structure from a new type of 3-D-printable ink that they infused with tiny magnetic particles. They fitted an electromagnet around the nozzle of a 3-D printer, which caused the magnetic particles to swing into a single orientation as the ink was fed through the nozzle. By controlling the magnetic orientation of individual sections in the structure, the researchers can produce structures and devices that can almost instantaneously shift into intricate formations, and even move about, as the various sections respond to an external magnetic field.

Xuanhe Zhao, the Noyce Career Development Professor in MIT’s Department of Mechanical Engineering and Department of Civil and Environmental Engineering, says the group’s technique may be used to fabricate magnetically controlled biomedical devices.

“We think in biomedicine this technique will find promising applications,” Zhao says. “For example, we could put a structure around a blood vessel to control the pumping of blood, or use a magnet to guide a device through the GI tract to take images, extract tissue samples, clear a blockage, or deliver certain drugs to a specific location. You can design, simulate, and then just print to achieve various functions.”

Zhao and his colleagues have published their results today in the journal Nature. His co-authors include Yoonho Kim, Hyunwoo Yuk, and Ruike Zhao of MIT, and Shawn Chester of the New Jersey Institute of Technology.

A shifting field

The team’s magnetically activated structures fall under the general category of soft actuated devices — squishy, moldable materials that are designed to shape-shift or move about through a variety of mechanical means. For instance, hydrogel devices swell when temperature or pH changes; shape-memory polymers and liquid crystal elastomers deform with sufficient stimuli such as heat or light; pneumatic and hydraulic devices can be actuated by air or water pumped into them; and dielectric elastomers stretch under electric voltages.

But hydrogels, shape-memory polymers, and liquid crystal elastomers are slow to respond, and change shape over the course of minutes to hours. Air- and water-driven devices require tubes that connect them to pumps, making them inefficient for remotely controlled applications. Dielectric elastomers require high voltages, usually above a thousand volts.

“There is no ideal candidate for a soft robot that can perform in an enclosed space like a human body, where you’d want to carry out certain tasks untethered,” Kim says. “That’s why we think there’s great promise in this idea of magnetic actuation, because it is fast, forceful, body-benign, and can be remotely controlled.”

Other groups have fabricated magnetically activated materials, though the movements they have achieved have been relatively simple. For the most part, researchers mix a polymer solution with magnetic beads, and pour the mixture into a mold. Once the material cures, they apply a magnetic field to uniformly magnetize the beads, before removing the structure from the mold.

“People have only made structures that elongate, shrink, or bend,” Yuk says. “The challenge is, how do you design a structure or robot that can perform much more complicated tasks?”

Domain game

Instead of making structures with magnetic particles of the same, uniform orientation, the team looked for ways to create magnetic “domains” — individual sections of a structure, each with a distinct orientation of magnetic particles. When exposed to an external magnetic field, each section should move in a distinct way, depending on the direction its particles move in response to the magnetic field. In this way, the group surmised that structures should carry out more complex articulations and movements.
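The reason differently oriented domains move differently follows from basic magnetostatics: a magnetic moment m in a uniform field B feels a torque τ = m × B that tends to rotate it into alignment with the field. The sketch below applies that textbook relation to three made-up domains. It is only an illustration, not the team's model: the `cross` and `domain_torques` helpers, the unit moments, and the field direction are all assumed values, and the elasticity of the printed material is ignored.

```python
def cross(a, b):
    """Cross product of two 3-component vectors given as tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def domain_torques(moments, field):
    """Torque on each domain's magnetic moment in a uniform field (tau = m x B)."""
    return [cross(m, field) for m in moments]


# Hypothetical structure with three domains (arbitrary units):
# magnetized along +x, along -x, and along +z.
moments = [(1.0, 0.0, 0.0), (-1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]
field = (0.0, 0.0, 1.0)  # external field pointing along +z

torques = domain_torques(moments, field)
```

In this toy setup the first two domains feel equal and opposite torques about the y axis, while the third, already aligned with the field, feels none. Opposite torques on neighboring sections are what would make a soft strip fold or wrinkle rather than move as one rigid piece.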

With their new 3-D-printing platform, the researchers can print sections, or domains, of a structure, and tune the orientation of magnetic particles in a particular domain by changing the direction of the electromagnet encircling the printer’s nozzle, as the domain is printed.

The team also developed a physical model that predicts how a printed structure will deform under a magnetic field. Given the elasticity of the printed material, the pattern of domains in a structure, and the way in which an external magnetic field is applied, the model can predict the way an overall structure will deform or move. Ruike found that the model’s predictions closely matched with experiments the team carried out with a number of different printed structures.

In addition to a rippling ring, a self-squeezing tube, and a spider-like grabber, the team printed other complex structures, such as a set of “auxetic” structures that rapidly shrink or expand along two directions. Zhao and his colleagues also printed a ring embedded with electrical circuits and red and green LED lights. Depending on the orientation of an external magnetic field, the ring deforms to light up either red or green, in a programmed manner.

“We have developed a printing platform and a predictive model for others to use. People can design their own structures and domain patterns, validate them with the model, and print them to actuate various functions,” Zhao says. “By programming complex information of structure, domain, and magnetic field, one can even print intelligent machines such as robots.”

Jerry Qi, professor of mechanical engineering at Georgia Tech, says the group’s design can enable a range of fast, remotely controlled soft robotics, particularly in the biomedical field.

“This work is very novel,” says Qi, who was not involved in the research. “One could use a soft robot inside a human body or somewhere that is not easily accessible. With this technology reported in this paper, one can apply a magnetic field outside the human body, without using any wiring. Because of its fast responsive speed, the soft robot can fulfill many actions in a short time. These are important for practical applications.”

This research was supported, in part, by the National Science Foundation, the Office of Naval Research, and the MIT Institute for Soldier Nanotechnologies.