Robot created to monitor key wine vineyard parameters

Choosing the Best Machine Vision Technique for Robot Guidance

“Robotics Skins” turn everyday objects into robots

Researchers work to add function to 3-D-printed objects

RECORD BREAKER: IMTS 2018 LARGEST SHOW EVER

Multi-joint, personalized soft exosuit breaks new ground

By Benjamin Boettner

In the future, smart textile-based soft robotic exosuits could be worn by soldiers, firefighters and rescue workers to help them traverse difficult terrain and arrive fresh at their destinations so that they can perform their respective tasks more effectively. They could also become a powerful means to enhance mobility and quality of life for people suffering from neurodegenerative disorders and for the elderly.

Conor Walsh’s team at the Wyss Institute for Biologically Inspired Engineering at Harvard University and the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS) has been at the forefront of developing different soft wearable robotic devices that support mobility by applying mechanical forces to critical joints of the body: the ankle joints, the hip joints, or, in the case of a multi-joint soft exosuit, both. Because of its potential for relieving overburdened soldiers in the field, the Defense Advanced Research Projects Agency (DARPA) funded the team’s efforts as part of its former Warrior Web program.

While the researchers have demonstrated that lab-based versions of soft exosuits can provide clear benefits to wearers, allowing them to spend less energy while walking and running, there remains a need for fully wearable exosuits that are suitable for use in the real world.

Now, in a study reported in the proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), the team presented their latest generation of a mobile multi-joint exosuit, which has been improved on all fronts and tested in the field through long marches over uneven terrain. Using the same exosuit in a second study published in the Journal of NeuroEngineering and Rehabilitation (JNER), the researchers developed an automatic tuning method to customize its assistance based on how an individual’s body is responding to it, and demonstrated significant energy savings.

The multi-joint soft exosuit consists of textile apparel components worn at the waist, thighs, and calves. Through an optimized mobile actuation system worn near the waist and integrated into a military rucksack, mechanical forces are transmitted via cables that are guided through the exosuit’s soft components to ankle and hip joints. This way, the exosuit adds power to the ankles and hips to assist with leg movements during the walking cycle.

“We have updated all components in this new version of the multi-joint soft exosuit: the apparel is more user-friendly, easy to put on and accommodating to different body shapes; the actuation is more robust, lighter, quieter and smaller; and the control system allows us to apply forces to hips and ankles more robustly and consistently,” said David Perry, a co-author of the ICRA study and a Staff Engineer on Walsh’s team. As part of the DARPA program, the exosuit was field-tested in Aberdeen, MD, in collaboration with the Army Research Labs, where soldiers walked through a 12-mile cross-country course.

“We previously demonstrated that it is possible to use online optimization methods that by quantifying energy savings in the lab automatically individualize control parameters across different wearers. However, we needed a means to tune control parameters quickly and efficiently to the different gaits of soldiers at the Army outside a laboratory,” said Walsh, Ph.D., Core Faculty member of the Wyss Institute, the John L. Loeb Associate Professor of Engineering and Applied Sciences at SEAS, and Founder of the Harvard Biodesign Lab.

In the JNER study, the team presented a suitable new tuning method that uses exosuit sensors to optimize the positive power delivered at the ankle joints. When a wearer begins walking, the system measures the power and gradually adjusts controller parameters until it finds those that maximize the exosuit’s effects based on the wearer’s individual gait mechanics. The delivered power thus serves as a proxy for the elaborate metabolic energy measurements that would otherwise be needed.
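The tuning loop described above can be illustrated with a minimal sketch. The article does not say which search algorithm the team used, so the simple hill-climbing search below is an assumption, as are the names `tune_parameter` and `toy_power`, the single "timing" parameter, and the toy power curve that stands in for real exosuit sensor readings.

```python
from typing import Callable


def tune_parameter(
    measure_power: Callable[[float], float],
    start: float,
    step: float = 0.5,
    min_step: float = 0.01,
    lower: float = 0.0,
    upper: float = 10.0,
) -> float:
    """Hill-climb one controller parameter toward higher measured power.

    Hypothetical sketch: `measure_power` stands in for a sensor-based
    estimate of positive ankle power at a given parameter value.
    """
    best_param = start
    best_power = measure_power(best_param)
    while step >= min_step:
        improved = False
        # Try one step below and one step above the current best value.
        for candidate in (best_param - step, best_param + step):
            candidate = min(max(candidate, lower), upper)  # keep within bounds
            power = measure_power(candidate)
            if power > best_power:
                best_param, best_power = candidate, power
                improved = True
        if not improved:
            step /= 2.0  # no gain in either direction: search more finely
    return best_param


def toy_power(timing: float) -> float:
    """Assumed stand-in for a wearer's response, peaking at timing = 3.2."""
    return 5.0 - (timing - 3.2) ** 2


tuned = tune_parameter(toy_power, start=1.0)
print(f"tuned parameter: {tuned:.3f}")
```

A real controller would tune several parameters at once and would have to cope with noisy, step-to-step power measurements, which this sketch ignores.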

“We evaluated the metabolic parameters in the seven study participants wearing exosuits that underwent the tuning process and found that the method reduced the metabolic cost of walking by about 14.8% compared to walking without the device and by about 22% compared to walking with the device unpowered,” said Sangjun Lee, the first author of both studies and a Graduate Student with Walsh at SEAS.

“These studies represent the exciting culmination of our DARPA-funded efforts. We are now continuing to optimize the technology for specific uses in the Army where dynamic movements are important; and we are exploring it for assisting workers in factories performing strenuous physical tasks,” said Walsh. “In addition, the field has recognized there is still a lot to understand on the basic science of co-adaptation of humans and wearable robots. Future co-optimization strategies and new training approaches could help further enhance individualization effects and enable wearers that initially respond poorly to exosuits to adapt to them as well and benefit from their assistance.”

“This research marks an important point in the Wyss Institute’s Bioinspired Soft Robotics Initiative and its development of soft exosuits in that it opens a path on which robotic devices could be adopted and personalized in real world scenarios by healthy and disabled wearers,” said Wyss Institute Founding Director Donald Ingber, M.D., Ph.D., who is also the Judah Folkman Professor of Vascular Biology at HMS and the Vascular Biology Program at Boston Children’s Hospital, and Professor of Bioengineering at SEAS.

Additional members of Walsh’s team were authors on either or both studies. Nikos Karavas, Ph.D., Brendan T. Quinlivan, Danielle Louise Ryan, Asa Eckert-Erdheim, Patrick Murphy, Taylor Greenberg Goldy, Nicolas Menard, Maria Athanassiu, Jinsoo Kim, Giuk Lee, Ph.D., and Ignacio Galiana, Ph.D., were authors on the ICRA study; and Jinsoo Kim, Lauren Baker, Andrew Long, Ph.D., Nikos Karavas, Ph.D., Nicolas Menard, and Ignacio Galiana, Ph.D., on the JNER study. The studies, in addition to DARPA’s Warrior Web program, were funded by Harvard’s Wyss Institute and SEAS.

- WYSS TECHNOLOGY – Soft Exosuits

- PUBLICATION – Journal of NeuroEngineering and Rehabilitation: Autonomous multi-joint soft exosuit with augmentation-power-based control parameter tuning reduces energy cost of loaded walking

- PUBLICATION – 2018 IEEE International Conference on Robotics and Automation (ICRA): Autonomous Multi-Joint Soft Exosuit for Assistance with Walking Overground

Machine-learning system tackles speech and object recognition, all at once

Image: Christine Daniloff

By Rob Matheson

MIT computer scientists have developed a system that learns to identify objects within an image, based on a spoken description of the image. Given an image and an audio caption, the model will highlight in real-time the relevant regions of the image being described.

Unlike current speech-recognition technologies, the model doesn’t require manual transcriptions and annotations of the examples it’s trained on. Instead, it learns words directly from recorded speech clips and objects in raw images, and associates them with one another.

The model can currently recognize only several hundred different words and object types. But the researchers hope that one day their combined speech-object recognition technique could save countless hours of manual labor and open new doors in speech and image recognition.

Speech-recognition systems such as Siri and Google Voice, for instance, require transcriptions of many thousands of hours of speech recordings. Using these data, the systems learn to map speech signals to specific words. Such an approach becomes especially problematic when, say, new terms enter our lexicon, and the systems must be retrained.

“We wanted to do speech recognition in a way that’s more natural, leveraging additional signals and information that humans have the benefit of using, but that machine learning algorithms don’t typically have access to. We got the idea of training a model in a manner similar to walking a child through the world and narrating what you’re seeing,” says David Harwath, a researcher in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and the Spoken Language Systems Group. Harwath co-authored a paper describing the model that was presented at the recent European Conference on Computer Vision.

In the paper, the researchers demonstrate their model on an image of a young girl with blonde hair and blue eyes, wearing a blue dress, with a white lighthouse with a red roof in the background. The model learned to associate which pixels in the image corresponded with the words “girl,” “blonde hair,” “blue eyes,” “blue dress,” “white lighthouse,” and “red roof.” When an audio caption was narrated, the model then highlighted each of those objects in the image as they were described.

One promising application is learning translations between different languages, without need of a bilingual annotator. Of the estimated 7,000 languages spoken worldwide, only 100 or so have enough transcription data for speech recognition. Consider, however, a situation where two different-language speakers describe the same image. If the model learns speech signals from language A that correspond to objects in the image, and learns the signals in language B that correspond to those same objects, it could assume those two signals — and matching words — are translations of one another.

“There’s potential there for a Babel Fish-type of mechanism,” Harwath says, referring to the fictitious living earpiece in the “Hitchhiker’s Guide to the Galaxy” novels that translates different languages to the wearer.

The CSAIL co-authors are: graduate student Adria Recasens; visiting student Didac Suris; former researcher Galen Chuang; Antonio Torralba, a professor of electrical engineering and computer science who also heads the MIT-IBM Watson AI Lab; and Senior Research Scientist James Glass, who leads the Spoken Language Systems Group at CSAIL.

Audio-visual associations

This work expands on an earlier model developed by Harwath, Glass, and Torralba that correlates speech with groups of thematically related images. In the earlier research, they put images of scenes from a classification database on the crowdsourcing Mechanical Turk platform. They then had people describe the images as if they were narrating to a child, for about 10 seconds. They compiled more than 200,000 pairs of images and audio captions, in hundreds of different categories, such as beaches, shopping malls, city streets, and bedrooms.

They then designed a model consisting of two separate convolutional neural networks (CNNs). One processes images, and one processes spectrograms, a visual representation of audio signals as they vary over time. The highest layer of the model combines the outputs of the two networks and maps the speech patterns to the image data.

The researchers would, for instance, feed the model caption A and image A, which is correct. Then, they would feed it a random caption B with image A, which is an incorrect pairing. After comparing thousands of wrong captions with image A, the model learns the speech signals corresponding with image A, and associates those signals with words in the captions. As described in a 2016 study, the model learned, for instance, to pick out the signal corresponding to the word “water,” and to retrieve images with bodies of water.
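The correct-versus-incorrect pairing scheme can be sketched as a ranking objective. The article does not name the loss function, so the margin ranking loss below is an assumed, commonly used choice, and the function names and small hand-written vectors (standing in for the outputs of the image and audio networks) are purely illustrative.

```python
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Similarity between two embeddings as a plain dot product."""
    return sum(a * b for a, b in zip(u, v))


def margin_ranking_loss(
    image_vec: Sequence[float],
    true_caption_vec: Sequence[float],
    wrong_caption_vecs: List[Sequence[float]],
    margin: float = 1.0,
) -> float:
    """Penalize every wrong caption that scores within `margin` of the true one."""
    positive = dot(image_vec, true_caption_vec)
    return sum(
        max(0.0, margin + dot(image_vec, wrong) - positive)
        for wrong in wrong_caption_vecs
    )


# Hypothetical embeddings: image A, its matching caption A, two random captions.
image_a = [1.0, 0.0]
caption_a = [0.9, 0.1]
random_captions = [[0.2, 0.8], [0.95, 0.0]]

loss = margin_ranking_loss(image_a, caption_a, random_captions)
print(f"loss: {loss:.2f}")
```

Minimizing a loss of this shape pushes the matching caption's score above the scores of mismatched captions, which is the behavior the paragraph describes.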

“But it didn’t provide a way to say, ‘This is the exact point in time that somebody said a specific word that refers to that specific patch of pixels,’” Harwath says.

Making a matchmap

In the new paper, the researchers modified the model to associate specific words with specific patches of pixels. The researchers trained the model on the same database, but with a new total of 400,000 image-caption pairs. They held out 1,000 random pairs for testing.

In training, the model is similarly given correct and incorrect images and captions. But this time, the image-analyzing CNN divides the image into a grid of cells consisting of patches of pixels. The audio-analyzing CNN divides the spectrogram into segments of, say, one second to capture a word or two.

With the correct image and caption pair, the model matches the first cell of the grid to the first segment of audio, then matches that same cell with the second segment of audio, and so on, all the way through each grid cell and across all time segments. For each cell and audio segment, it provides a similarity score, depending on how closely the signal corresponds to the object.
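The cell-by-segment comparison can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: dot-product similarity, the pooling rule (best cell per audio segment, averaged over time), the function names, and the tiny 2x2 grid with two-dimensional embeddings are all chosen here for clarity.

```python
from typing import List, Tuple

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def similarity_map(
    cell_grid: List[List[Vector]], audio_segments: List[Vector]
) -> List[List[List[float]]]:
    """Score every grid cell against every audio segment.

    The result is indexed as scores[segment][row][col].
    """
    return [
        [[dot(cell, segment) for cell in row] for row in cell_grid]
        for segment in audio_segments
    ]


def pooled_score(scores: List[List[List[float]]]) -> float:
    """Assumed pooling: best-matching cell per segment, averaged over segments."""
    best_per_segment = [max(max(row) for row in grid) for grid in scores]
    return sum(best_per_segment) / len(best_per_segment)


def best_cell(grid: List[List[float]]) -> Tuple[int, int]:
    """Return (row, col) of the highest-scoring cell for one audio segment."""
    return max(
        ((r, c) for r in range(len(grid)) for c in range(len(grid[r]))),
        key=lambda rc: grid[rc[0]][rc[1]],
    )


# Hypothetical 2x2 grid of cell embeddings and two audio-segment embeddings.
cells = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 0.0], [0.5, 0.5]]]
segments = [[1.0, 0.0], [0.0, 2.0]]

scores = similarity_map(cells, segments)
print(pooled_score(scores))                 # overall image-caption score
print([best_cell(grid) for grid in scores])  # cell highlighted per segment
```

The pooled score is what a pairing objective could be trained on, while the per-segment best cell is what would be highlighted in the image as the caption is spoken.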

The challenge is that, during training, the model doesn’t have access to any true alignment information between the speech and the image. “The biggest contribution of the paper,” Harwath says, “is demonstrating that these cross-modal alignments can be inferred automatically by simply teaching the network which images and captions belong together and which pairs don’t.”

The authors dub this automatically learned association between a spoken caption’s waveform and the image pixels a “matchmap.” After training on thousands of image-caption pairs, the network narrows down those alignments to specific words representing specific objects in that matchmap.

“It’s kind of like the Big Bang, where matter was really dispersed, but then coalesced into planets and stars,” Harwath says. “Predictions start dispersed everywhere but, as you go through training, they converge into an alignment that represents meaningful semantic groundings between spoken words and visual objects.”

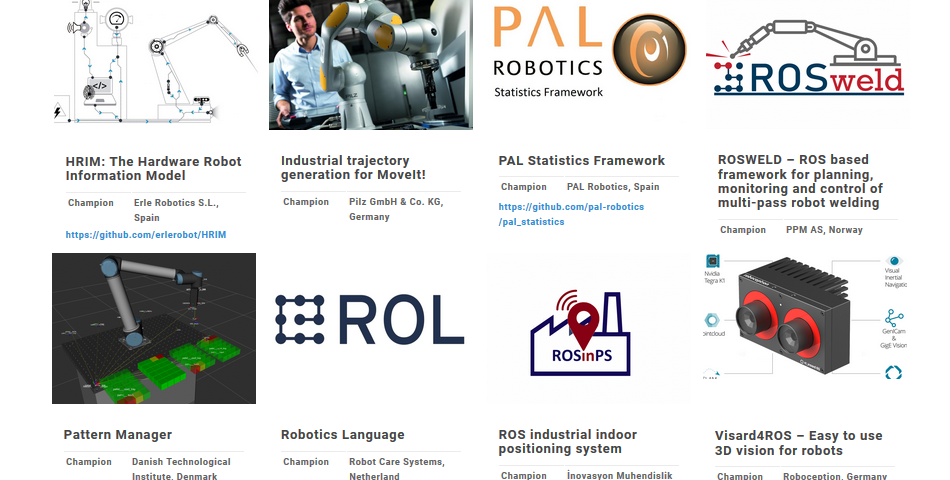

First results of the ROSIN project: Robotics Open-Source Software for Industry

Open-source software for robots is a de facto standard in academia, and its advantages can benefit industrial applications as well. The worldwide ROS-Industrial initiative has been using ROS, the Robot Operating System, to this end.

In order to consolidate Europe’s expertise in advanced manufacturing, the H2020 project ROSIN supports the EU’s strong role within ROS-Industrial. It will achieve this goal through three main actions on ROS: ensuring industrial-grade software quality; promoting new business-relevant applications through so-called Focused Technical Projects (FTPs); and supporting educational activities for students and industry professionals, on the one hand by conducting ROS-I trainings and MOOCs, and on the other hand by supporting education at third parties via Education Projects (EPs).

Now it is easier to get an overview of first results from ROSIN at http://rosin-project.eu/results.

Browse through vendor-developed ROS drivers for industrial hardware, generic ROS frameworks for industrial applications, and model-based tooling. Thanks to ROSIN support, all of these new ROS components are open-sourced for the benefit of the ROS-Industrial community. Each entry leads to a minipage that is maintained by the FTP champion, so check back often for updates on the progress of the projects.

The project is continuously accepting FTP proposals to advance open-source robot software. New incoming proposals are evaluated approximately every three months. The next cut-off dates will be 14 September and 16 November 2018. Further calls can be expected throughout the project runtime (January 2017 – December 2020).