#SciRocChallenge announces winners of Smart Cities Robotic Competition

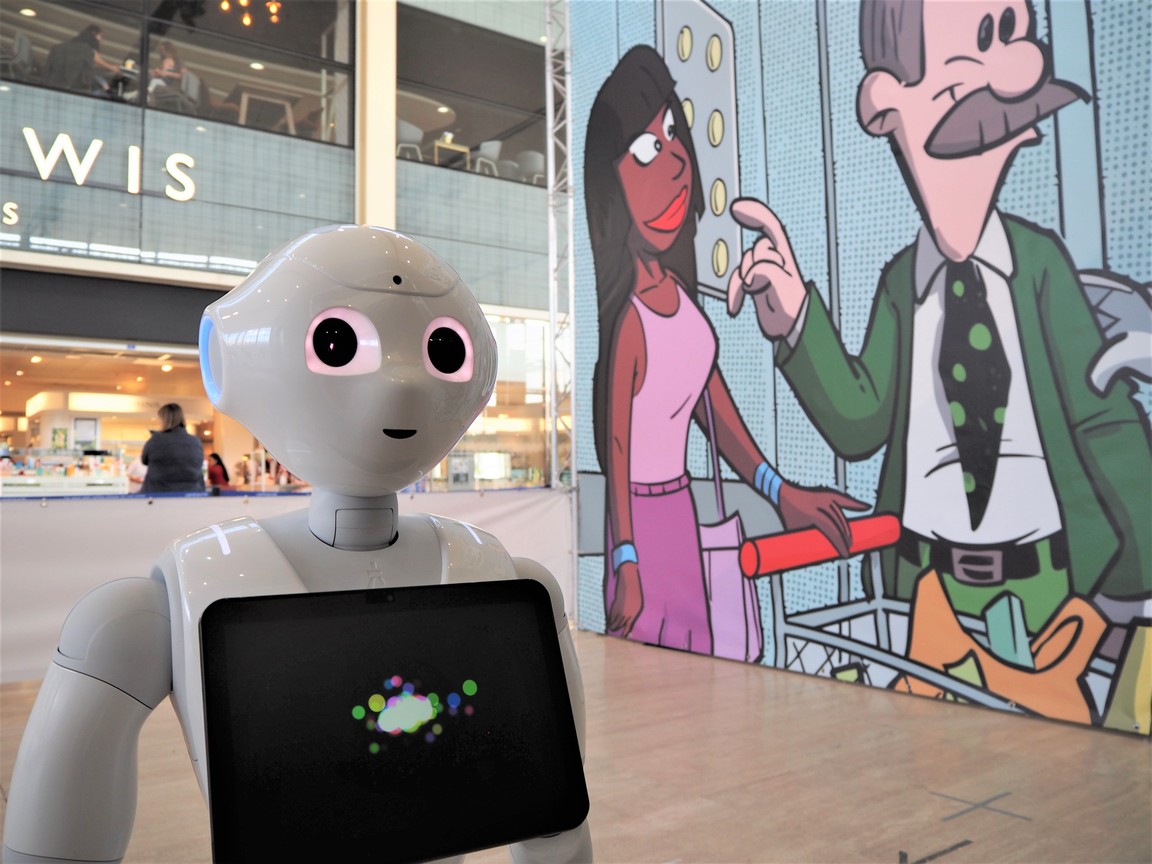

The smart city of Milton Keynes hosted the first edition of the European Robotics League (ERL)- Smart Cities Robotic Challenge (SciRoc Challenge). Ten European teams met in the shopping mall of Centre:mk to compete against each other in five futuristic scenarios in which robots assist humans serving coffee orders, picking products in a grocery shop or bringing medical aid. This robotics competition aims at benchmarking robots using a ranking system that allows teams to assess their performance and compare it with others. Find out the winning teams of the SciRoc Challenge 2019…

The ERL Smart Cities Robotics Challenge

The European Robotics League (ERL) was launched in 2016 under the umbrella of SPARC- the Partnership for Robotics in Europe. This pan-European robotics competition builds on the success of the EU-funded projects: RoCKIn, euRathlon, EuRoC and ROCKEU2. The SciRoc Horizon 2020 project took over the reins of the league in 2018, bringing in the expertise from the University of the West of England, Bristol, the Advanced Center for Aerospace Technologies (CATEC), the Association of Instituto Superior Técnico for Research and Development (IST-ID), the Centre for Maritime Research and Experimentation (CMRE), euRobotics aisbl, Politecnico di Milano, the Open University, the Sapienza University of Rome, the University of Applied Sciences Bonn-Rhein-Sieg and the Universitat Politecnica de Catalunya. The SciRoc consortium carefully designed the new biennial ERL Smart Cities Robotics challenge where robots from all three ERL leagues (Consumer, Professional and Emergency Service Robots) come together to interact with a smart infrastructure in a familiar urban setting.

Daniele Nardi, Professor of Artificial Intelligence at Sapienza University of Rome and Head of the Technical Committee of the SciRoc Challenge, explains that the project consortium chose the topic of smart cities because “Robotics competitions in a smart city are projected into the future, since it is likely that smart cities will be among the first places populated by robots”. Professor Nardi adds that they structured the competition through a series of episodes, each of them being a specific technical challenge for a robotic system and at the same time representing a situation that would be typically encountered in a smart city populated with robots.

Benchmarking through competitions

Benchmarking has been and still is a hot topic in the robotics community. How can we compare robots’ and robot systems’ performance? In 2013, the RoCKIn and euRathlon projects started exploring and developing a benchmarking methodology for robotics competitions. But why benchmark robots through competitions? The answer from Matteo Matteucci, Assistant Professor at the Politecnico di Milano and one of the researchers behind the development of the ERL benchmarking methodology, is clear: “competitions are fun, they put you in realistic situations outside of your own lab”.

The European Robotics League approach to benchmarking is based on the definition of two separate, but interconnected, types of benchmarks: Functionality Benchmarks (FBMs) and Task Benchmarks (TBMs). A functionality benchmark evaluates a robot’s performance in specific functionalities, such as navigation, object perception, manipulation, etc. A task benchmark, by contrast, assesses the performance of the robot system facing complex tasks that require using different functionalities. Matteucci comments: “We have gone from a big once in a while costly competition to frequent, sustainable and repeatable competitions in a regional net of laboratories and hubs. The ERL is a big open lab running tournaments during the whole year. The competitions are structured in a way that makes them repeatable experiments you can compare.” In the case of the SciRoc Challenge, he adds, “it is somehow in the middle of the two, it’s a bigger event made up of small tasks in a public venue. Each task benchmark requires different functionalities, but it is more based on one than the others. For example, the episode of the elevator is mostly focused on HRI but also requires navigation and perception. The door is a mobile manipulation task but requires navigation and perception. SciRoc can be seen as a dry run of possible benchmarks that can be later introduced into the ERL local tournaments. In the case of the ‘Through the door’ episode set up, it’s going to be deployed within 6 months in the facility of the EUROBENCH project in Genova for benchmarking humanoid robots.”

The SciRoc challenge has also introduced a new term to the European Robotics League terminology: “the Episode”. Matteucci explains that this term refers more to the set up than to a category of benchmark. “The episode provides a narrative for the general public. SciRoc is a robotics event in the middle of a city in contact with people, so people are more interested in the story and perspective than the pure engineering benchmarking part. That’s why we came up with the short stories in the context of the smart cities.”

The ERL local tournaments have specific TBMs and FBMs for each of the leagues. To align the ERL tournaments with the new SciRoc challenge, the leagues had to integrate new benchmarks. This was the case for the ERL Consumer Service robotics league, which takes place in a home or domestic environment. Pedro Lima, Professor in Robotics at IST university of Lisbon and Head of the Technical Committee of the ERL Consumer league, explains that “the tasks the robots have to perform in the apartment (navigate around the house, detecting and picking objects, etc.) are very similar to the ones they have to perform in the shopping mall. We made changes in some of the TBMs to be more in line with the requirements of the coffee shop environment. Also, the task of opening a door is not new for the ERL Consumer league, but in the ‘through the door’ episode the door has a handle to add complexity and also encourage humanoid robots to participate.”

SciRoc Challenge 2019 winners

The ERL Smart Cities Robotic challenge finals took place during the weekend and many visitors could see the robots successfully perform different complex tasks.

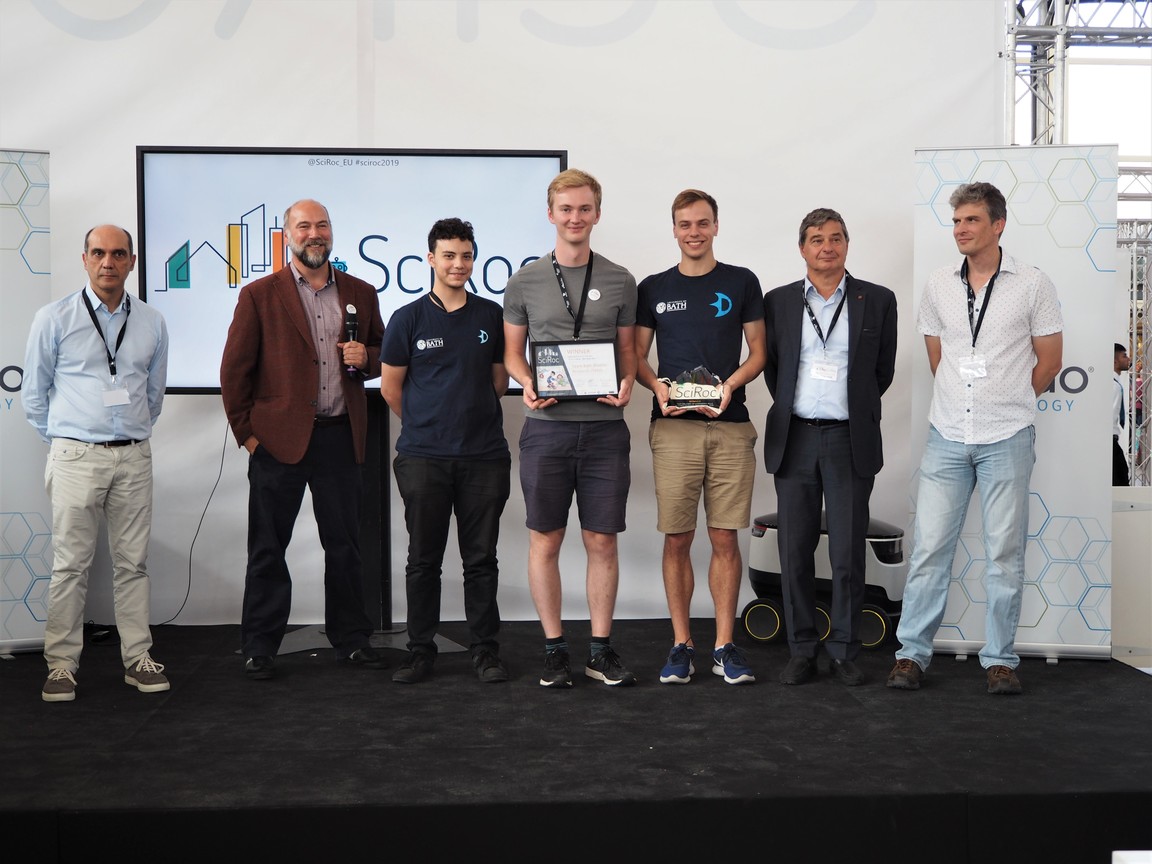

The awards ceremony was held at the Centre:MK competition arena on Saturday afternoon. Matthew Studley, SciRoc project coordinator, welcomed everyone and opened the ceremony. Then followed a short speech by Enrico Motta, Director of SciRoc Challenge 2019, thanking teams and sponsors Milton Keynes Council, Centre:mk, PAL Robotics, OCADO Technology, COSTA Coffee, Cranfield University and Catapult.

The winners of the SciRoc Challenge 2019 in each episode are:

Deliver coffee shop orders (E03)

- Winner: Leeds Autonomous Service Robots

- Runner up: eNTiTy

Take the elevator (E04)

- Winner: Gentlebots

- Runner up: eNTiTy

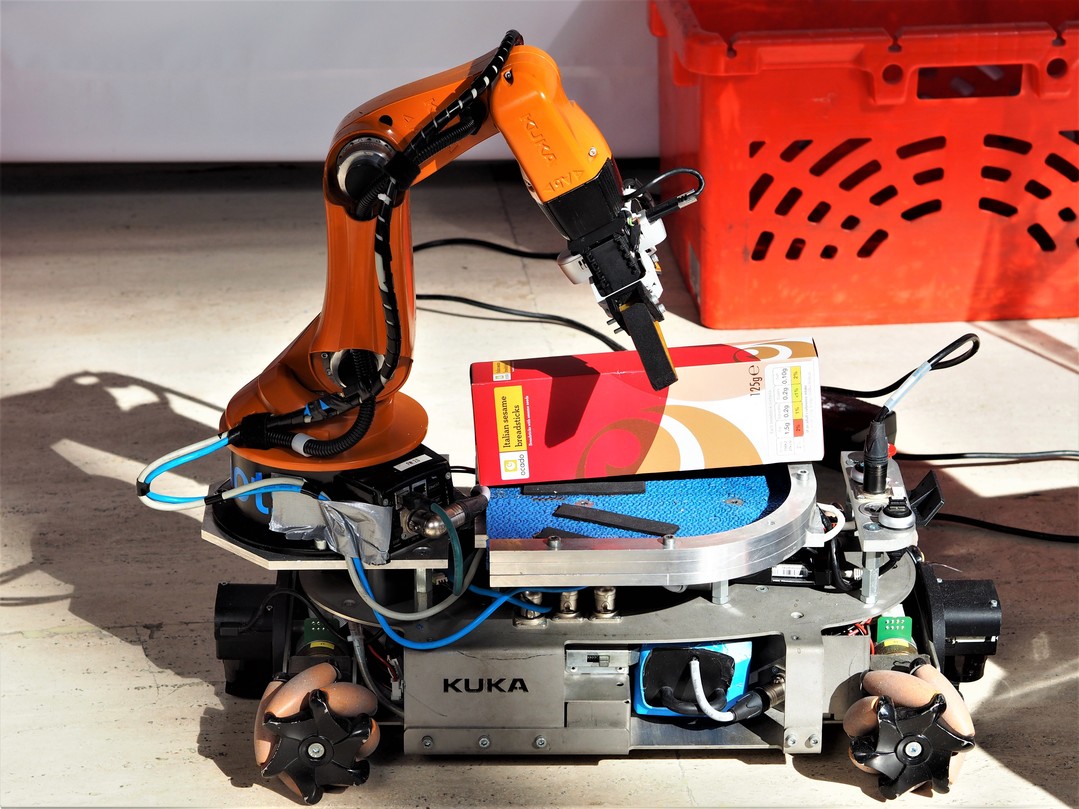

Shopping pick and pack (E07)

- Winner: b-it-bots

- Runner up: CATIE Robotics

Through the door (E10)

- Winner: b-it-bots

Fast delivery of emergency pills (E12)

- Winner: TeamBathDrones Research

- Runner up: UWE Aero

Public choice Award: Most social robot

- Winner: eNTiTy

For information on teams’ scoring, visit the websites of the European Robotics League and SciRoc Challenge.

Epilogue

This summer our colleague and friend Gerhard Kraetzschmar passed away. Gerhard was Professor for Autonomous Systems at Bonn-Rhein-Sieg University of Applied Sciences, RoboCup Trustee and Head of the ERL Professional Service Robots league. He believed robotics competitions are an excellent platform for challenging and showcasing robotics technologies, and for developing skills of future engineers and scientists. I am sure his legacy will inspire new generations of roboticists, the same way that he inspired us.

Get involved in robotics competitions; they are much more than fun.

See you all at SciRoc Challenge 2021!

Missed coverage of the teams participating in SciRoc Challenge? Find it here.

Assembler robots make large structures from little pieces

Image courtesy of Benjamin Jenett

By David L. Chandler

Today’s commercial aircraft are typically manufactured in sections, often in different locations — wings at one factory, fuselage sections at another, tail components somewhere else — and then flown to a central plant in huge cargo planes for final assembly.

But what if the final assembly was the only assembly, with the whole plane built out of a large array of tiny identical pieces, all put together by an army of tiny robots?

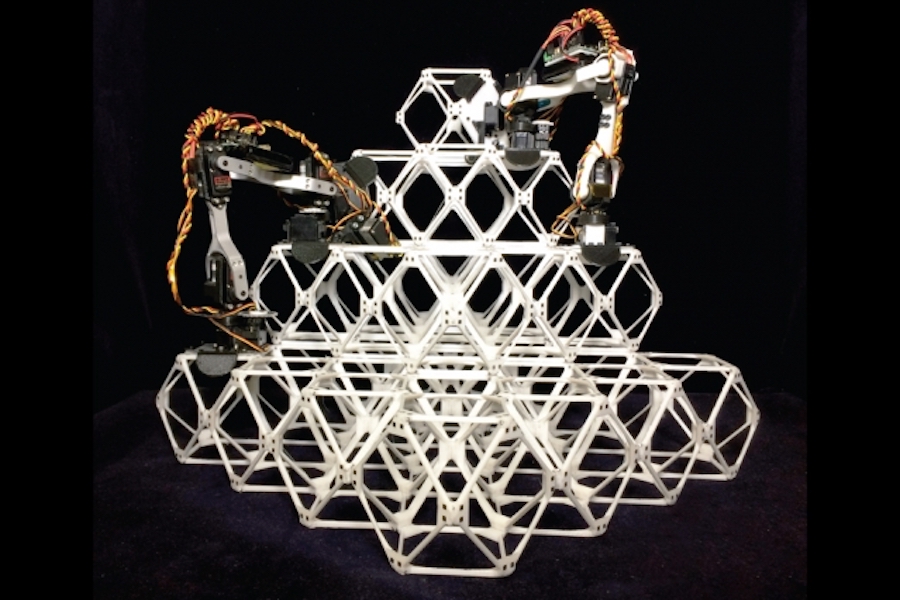

That’s the vision that graduate student Benjamin Jenett, working with Professor Neil Gershenfeld in MIT’s Center for Bits and Atoms (CBA), has been pursuing as his doctoral thesis work. It’s now reached the point that prototype versions of such robots can assemble small structures and even work together as a team to build up larger assemblies.

The new work appears in the October issue of the IEEE Robotics and Automation Letters, in a paper by Jenett, Gershenfeld, fellow graduate student Amira Abdel-Rahman, and CBA alumnus Kenneth Cheung SM ’07, PhD ’12, who is now at NASA’s Ames Research Center, where he leads the ARMADAS project to design a lunar base that could be built with robotic assembly.

“This paper is a treat,” says Aaron Becker, an associate professor of electrical and computer engineering at the University of Houston, who was not associated with this work. “It combines top-notch mechanical design with jaw-dropping demonstrations, new robotic hardware, and a simulation suite with over 100,000 elements,” he says.

“What’s at the heart of this is a new kind of robotics, that we call relative robots,” Gershenfeld says. Historically, he explains, there have been two broad categories of robotics — ones made out of expensive custom components that are carefully optimized for particular applications such as factory assembly, and ones made from inexpensive mass-produced modules with much lower performance. The new robots, however, are an alternative to both. They’re much simpler than the former, while much more capable than the latter, and they have the potential to revolutionize the production of large-scale systems, from airplanes to bridges to entire buildings.

Experiments demonstrating relative robotic assembly of 1D, 2D, and 3D discrete cellular structures

According to Gershenfeld, the key difference lies in the relationship between the robotic device and the materials that it is handling and manipulating. With these new kinds of robots, “you can’t separate the robot from the structure — they work together as a system,” he says. For example, while most mobile robots require highly precise navigation systems to keep track of their position, the new assembler robots only need to keep track of where they are in relation to the small subunits, called voxels, that they are currently working on. Every time the robot takes a step onto the next voxel, it readjusts its sense of position, always in relation to the specific components that it is standing on at the moment.

The underlying vision is that just as the most complex of images can be reproduced by using an array of pixels on a screen, virtually any physical object can be recreated as an array of smaller three-dimensional pieces, or voxels, which can themselves be made up of simple struts and nodes. The team has shown that these simple components can be arranged to distribute loads efficiently; they are largely made up of open space so that the overall weight of the structure is minimized. The units can be picked up and placed in position next to one another by the simple assemblers, and then fastened together using latching systems built into each voxel.

The robots themselves resemble a small arm, with two long segments that are hinged in the middle, and devices for clamping onto the voxel structures on each end. The simple devices move around like inchworms, advancing along a row of voxels by repeatedly opening and closing their V-shaped bodies to move from one to the next. Jenett has dubbed the little robots BILL-E (a nod to the movie robot WALL-E), which stands for Bipedal Isotropic Lattice Locomoting Explorer.

Computer simulation shows a group of four assembler robots at work on building a three-dimensional structure. Whole swarms of such robots could be unleashed to create large structures such as airplane wings or space habitats. Illustration courtesy of the researchers

Jenett has built several versions of the assemblers as proof-of-concept designs, along with corresponding voxel designs featuring latching mechanisms to easily attach or detach each one from its neighbors. He has used these prototypes to demonstrate the assembly of the blocks into linear, two-dimensional, and three-dimensional structures. “We’re not putting the precision in the robot; the precision comes from the structure” as it gradually takes shape, Jenett says. “That’s different from all other robots. It just needs to know where its next step is.”

As it works on assembling the pieces, each of the tiny robots can count its steps over the structure, says Gershenfeld, who is the director of CBA. Along with navigation, this lets the robots correct errors at each step, eliminating most of the complexity of typical robotic systems, he says. “It’s missing most of the usual control systems, but as long as it doesn’t miss a step, it knows where it is.” For practical assembly applications, swarms of such units could be working together to speed up the process, thanks to control software developed by Abdel-Rahman that can allow the robots to coordinate their work and avoid getting in each other’s way.
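The step-counting idea can be illustrated with a toy simulation. This is only a minimal sketch under stated assumptions, not the researchers' control software: the lattice spacing, the noise level, and the names VOXEL_PITCH, snap_step, and walk are all hypothetical. It compares a robot that re-registers to the nearest voxel each time it clamps onto the structure with one that simply adds up its noisy motions.

```python
import random

VOXEL_PITCH = 1.0  # hypothetical lattice spacing (arbitrary units)


def snap_step(cell: int, actuator_noise: float, rng: random.Random) -> int:
    """Advance one voxel; clamping onto the lattice removes the step error."""
    raw_position = (cell + 1) * VOXEL_PITCH + rng.uniform(-actuator_noise, actuator_noise)
    # Gripping the structure forces the foot onto the nearest voxel.
    return round(raw_position / VOXEL_PITCH)


def walk(n_steps: int, actuator_noise: float, seed: int = 0):
    """Return (lattice-relative cell index, dead-reckoned position)."""
    rng = random.Random(seed)
    cell = 0
    dead_reckoned = 0.0
    for _ in range(n_steps):
        # Without the structure as a reference, each step's error accumulates.
        dead_reckoned += VOXEL_PITCH + rng.uniform(-actuator_noise, actuator_noise)
        cell = snap_step(cell, actuator_noise, rng)
    return cell, dead_reckoned


cell, dead_reckoned = walk(n_steps=100, actuator_noise=0.2)
print(cell, round(dead_reckoned, 3))
```

As long as the per-step error stays below half a voxel, the lattice-relative count lands exactly on the intended voxel, while the dead-reckoned estimate drifts. That is one way to read the remark that the precision comes from the structure rather than from the robot.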

This kind of assembly of large structures from identical subunits using a simple robotic system, much like a child assembling a large castle out of LEGO blocks, has already attracted the interest of some major potential users, including NASA, MIT’s collaborator on this research, and the European aerospace company Airbus SE, which also helped to sponsor the study.

One advantage of such assembly is that repairs and maintenance can be handled easily by the same kind of robotic process as the initial assembly. Damaged sections can be disassembled from the structure and replaced with new ones, producing a structure that is just as robust as the original. “Unbuilding is as important as building,” Gershenfeld says, and this process can also be used to make modifications or improvements to the system over time.

“For a space station or a lunar habitat, these robots would live on the structure, continuously maintaining and repairing it,” says Jenett.

Ultimately, such systems could be used to construct entire buildings, especially in difficult environments such as in space, or on the moon or Mars, Gershenfeld says. This could eliminate the need to ship large preassembled structures all the way from Earth. Instead it could be possible to send large batches of the tiny subunits — or form them from local materials using systems that could crank out these subunits at their final destination point. “If you can make a jumbo jet, you can make a building,” Gershenfeld says.

Sandor Fekete, director of the Institute of Operating Systems and Computer Networks at the Technical University of Braunschweig, in Germany, who was not involved in this work, says “Ultralight, digital materials such as [these] open amazing perspectives for constructing efficient, complex, large-scale structures, which are of vital importance in aerospace applications.”

But assembling such systems is a challenge, says Fekete, who plans to join the research team for further development of the control systems. “This is where the use of small and simple robots promises to provide the next breakthrough: Robots don’t get tired or bored, and using many miniature robots seems like the only way to get this critical job done. This extremely original and clever work by Ben Jenett and collaborators makes a giant leap towards the construction of dynamically adjustable airplane wings, enormous solar sails or even reconfigurable space habitats.”

In the process, Gershenfeld says, “we feel like we’re uncovering a new field of hybrid material-robot systems.”

Robots help patients manage chronic illness at home

Courtesy of Catalia Health

By Zach Winn

The Mabu robot, with its small yellow body and friendly expression, serves, literally, as the face of the care management startup Catalia Health. The most innovative part of the company’s solution, however, lies behind Mabu’s large blue eyes.

Catalia Health’s software incorporates expertise in psychology, artificial intelligence, and medical treatment plans to help patients manage their chronic conditions. The result is a sophisticated robot companion that uses daily conversations to give patients tips, medication reminders, and information on their condition while relaying relevant data to care providers. The information exchange can also take place on patients’ mobile phones.

“Ultimately, what we’re building are care management programs to help patients in particular disease states,” says Catalia Health founder and CEO Cory Kidd SM ’03, PhD ’08. “A lot of that is getting information back to the people providing care. We’re helping them scale up their efforts to interact with every patient more frequently.”

Heart failure patients first brought Mabu into their homes about a year and a half ago as part of a partnership with the health care provider Kaiser Permanente, who pays for the service. Since then, Catalia Health has also partnered with health care systems and pharmaceutical companies to help patients dealing with conditions including rheumatoid arthritis and kidney cancer.

Treatment plans for chronic diseases can be challenging for patients to manage consistently, and many people don’t follow them as prescribed. Kidd says Mabu’s daily conversations help not only patients, but also human care givers as they make treatment decisions using data collected by their robot counterpart.

Robotics for change

Kidd was a student and faculty member at Georgia Tech before coming to MIT for his master’s degree in 2001. His work focused on addressing problems in health care caused by an aging population and an increase in the number of people managing chronic diseases.

“The way we deliver health care doesn’t scale to the needs we have, so I was looking for technologies that might help with that,” Kidd says.

Many studies have found that communicating with someone in person, as opposed to over the phone or online, makes that person appear more trustworthy, engaging, and likeable. At MIT, Kidd conducted studies aimed at understanding if those findings translated to robots.

“What I found was when we used an interactive robot that you could look in the eye and share the same physical space with, you got the same psychological effects as face-to-face interaction,” Kidd says.

As part of his PhD in the Media Lab’s Media Arts and Sciences program, Kidd tested that finding in a randomized, controlled trial with patients in a diabetes and weight management program at the Boston University Medical Center. A portion of the patients were given a robotic weight-loss coach to take home, while another group used a computer running the same software. The tabletop robot conducted regular check ups and offered tips on maintaining a healthy diet and lifestyle. Patients who received the robot were much more likely to stick with the weight loss program.

Upon finishing his PhD in 2007, Kidd immediately sought to apply his research by starting the company Intuitive Automata to help people manage their diabetes using robot coaches. Even as he pursued the idea, though, Kidd says he knew it was too early to be introducing such sophisticated technology to a health care industry that, at the time, was still adjusting to electronic health records.

Intuitive Automata ultimately wasn’t a major commercial success, but it did help Kidd understand the health care sector at a much deeper level as he worked to sell the diabetes and weight management programs to providers, pharmaceutical companies, insurers, and patients.

“I was able to build a big network across the industry and understand how these people think about challenges in health care,” Kidd says. “It let me see how different entities think about how they fit in the health care ecosystem.”

Since then, Kidd has watched the costs associated with robotics and computing plummet. Many people have also enthusiastically adopted computer assistance like Amazon’s Alexa and Apple’s Siri. Finally, Kidd says members of the health care industry have developed an appreciation for technology’s potential to complement traditional methods of care.

“The common ways [care is delivered] on the provider side is by bringing patients to the doctor’s office or hospital,” Kidd explains. “Then on the pharma side, it’s call center-based. In the middle of these is the home visitation model. They’re all very human powered. If you want to help twice as many patients, you hire twice as many people. There’s no way around that.”

In the summer of 2014, he founded Catalia Health to help patients with chronic conditions at scale.

“It’s very exciting because I’ve seen how well this can work with patients,” Kidd says of the company’s potential. “The biggest challenge with the early studies was that, in the end, the patients didn’t want to give the robots back. From my perspective, that’s one of the things that shows this really does work.”

Mabu makes friends

Catalia Health uses artificial intelligence to help Mabu learn about each patient through daily conversations, which vary in length depending on the patient’s answers.

“A lot of conversations start off with ‘How are you feeling?’ similar to what a doctor or nurse might ask,” Kidd explains. “From there, it might go off in many directions. There are a few things doctors or nurses would ask if they could talk to these patients every day.”

For example, Mabu would ask heart failure patients how they are feeling, if they have shortness of breath, and about their weight.

“Based on patients’ answers, Mabu might say ‘You might want to call your doctor,’ or ‘I’ll send them this information,’ or ‘Let’s check in tomorrow,’” Kidd says.

Last year, Catalia Health announced a collaboration with the American Heart Association that has allowed Mabu to deliver the association’s guidelines for patients living with heart failure.

“A patient might say ‘I’m feeling terrible today’ and Mabu might ask ‘Is it one of these symptoms a lot of people with your condition deal with?’ We’re trying to get down to whether it’s the disease or the drug. When that happens, we do two things: Mabu has a lot of information about problems a patient might be dealing with, so she’s able to give quick feedback. Simultaneously, she’s sending that information to a clinician (a doctor, nurse, or pharmacist), whoever’s providing care.”

In addition to health care providers, Catalia also partners with pharmaceutical companies. In each case, patients pay nothing out of pocket for their robot companions. Although the data Catalia Health sends pharmaceutical companies is completely anonymized, it can help them follow their treatment’s effects on patients in real time and better understand the patient experience.

Details about many of Catalia Health’s partnerships have not been disclosed, but the company did announce a collaboration with Pfizer last month to test the impact of Mabu on patient treatment plans.

Over the next year, Kidd hopes to add to the company’s list of partnerships and help patients dealing with a wider swath of diseases. Regardless of how fast Catalia Health scales, he says the service it provides will not diminish as Mabu brings its trademark attentiveness and growing knowledge base to every conversation.

“In a clinical setting, if we talk about a doctor with good bedside manner, we don’t mean that he or she has more clinical knowledge than the next person, we simply mean they’re better at connecting with patients,” Kidd says. “I’ve looked at the psychology behind that — what does it mean to be able to do that? — and turned that into the algorithms we use to help create conversations with patients.”

ROBOTT-NET pilot project: Urban pest control

“Within the framework of the European project ROBOTT-NET we are developing software and robotic solutions for the prevention and control of rodents in enclosed spaces”, says Marco Lorenzo, Service Supervisor at Irabia Control De Plagas.

You can learn more about the urban pest control project here:

This type of prevention is designed to help technicians and companies have better efficiency and control and a faster response, when it comes to controlling rodent pests.

“The project uses a mobile autonomous robotic platform with a robot arm to introduce a camera into the trap. It captures an image that is uploaded to the cloud”.

“The project is in collaboration with Robotnik, which is responsible for the assembly of the robot; and Hispavista, which is in charge of the cloud part”, Marco Lorenzo adds.

Aritz Zabaleta, a Systems Technician at Hispavista Labs explains that the application consists of two components:

“The first manages the entire fleet of robots that communicate with the server in the cloud and it processes the information collected by them. The second component is the one that allows customers to access processed images”.

If you want to watch more fascinating robotics videos, you can explore ROBOTT-NET’s pilot projects on our YouTube-channel.

Functional RL with Keras and Tensorflow Eager

By Eric Liang, Richard Liaw, and Clement Gehring

In this blog post, we explore a functional paradigm for implementing reinforcement learning (RL) algorithms. The paradigm will be that developers write the numerics of their algorithm as independent, pure functions, and then use a library to compile them into policies that can be trained at scale. We share how these ideas were implemented in RLlib’s policy builder API, eliminating thousands of lines of “glue” code and bringing support for Keras and TensorFlow 2.0.

Why Functional Programming?

One of the key ideas behind functional programming is that programs can be composed largely of pure functions, i.e., functions whose outputs are entirely determined by their inputs. Here less is more: by imposing restrictions on what functions can do, we gain the ability to more easily reason about and manipulate their execution.

In TensorFlow, such functions of tensors can be executed either symbolically with placeholder inputs or eagerly with real tensor values. Since such functions have no side-effects, they have the same effect on inputs whether they are called once symbolically or many times eagerly.
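To make the symbolic-versus-eager distinction concrete, here is a minimal, framework-free sketch. The Sym class and the scaled_sum function are hypothetical illustrations, not TensorFlow APIs: Sym stands in for a placeholder that records the computation instead of performing it, while plain numbers play the role of eager values.

```python
class Sym:
    """Hypothetical stand-in for a symbolic placeholder: records expressions."""

    def __init__(self, expr: str):
        self.expr = expr

    @staticmethod
    def _text(value) -> str:
        return value.expr if isinstance(value, Sym) else repr(value)

    def __add__(self, other):
        return Sym(f"({self.expr} + {Sym._text(other)})")

    def __radd__(self, other):
        return Sym(f"({Sym._text(other)} + {self.expr})")

    def __mul__(self, other):
        return Sym(f"({self.expr} * {Sym._text(other)})")

    def __rmul__(self, other):
        return Sym(f"({Sym._text(other)} * {self.expr})")


def scaled_sum(x, y):
    """A pure function: the result depends only on x and y."""
    return 2 * x + y


# "Symbolic" call: run once with placeholders to record the computation.
graph = scaled_sum(Sym("x"), Sym("y"))
print(graph.expr)        # ((2 * x) + y)

# "Eager" calls: run as often as needed with concrete values.
print(scaled_sum(3, 4))  # 10
```

Because scaled_sum touches no external state, the recorded expression and the eager result describe the same computation, which is the property the functional approach relies on.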

Functional Reinforcement Learning

Consider the following loss function over agent rollout data, with current state $s$, actions $a$, returns $r$, and policy $\pi$:

$$L(s, a, r) = -\left[\log \pi(s, a)\right] \cdot r$$

If you’re not familiar with RL, all this function is saying is that we should try to improve the probability of good actions (i.e., actions that increase the future returns). Such a loss is at the core of policy gradient algorithms. As we will see, defining the loss is almost all you need to start training an RL policy in RLlib.

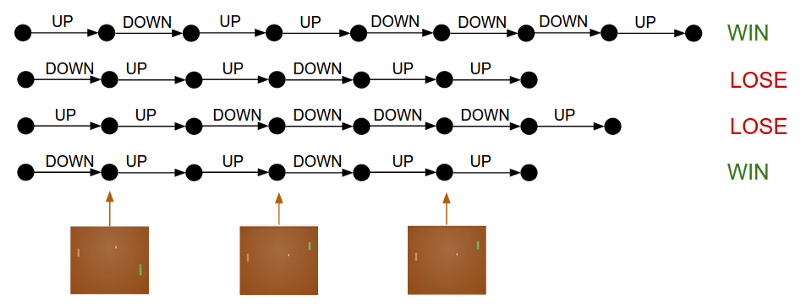

Given a set of rollouts, the policy gradient loss seeks to improve the probability of good actions (i.e., those that lead to a win in this Pong example above).

A straightforward translation into Python is as follows. Here, the loss function takes $(\pi, s, a, r)$, computes $\pi(s, a)$ as a discrete action distribution, and returns the log probability of the actions multiplied by the returns:

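    def loss(model, s: Tensor, a: Tensor, r: Tensor) -> Tensor:
        # Forward pass: unnormalized action scores for each state
        logits = model.forward(s)
        # Interpret the logits as a discrete action distribution pi(s, .)
        action_dist = Categorical(logits)
        # Negative mean of log pi(s, a) weighted by the returns
        return -tf.reduce_mean(action_dist.logp(a) * r)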

There are multiple benefits to this functional definition. First, notice that loss reads quite naturally — there are no placeholders, control loops, access of external variables, or class members as commonly seen in RL implementations. Second, since it doesn’t mutate external state, it is compatible with both TF graph and eager mode execution.
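For readers who want to check the arithmetic without installing TensorFlow, the same loss can be written in plain Python. This is a sketch for illustration only: the helper names log_softmax and policy_gradient_loss and the example logits are assumptions, and the batch is handled as nested lists rather than tensors.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable log-probabilities of a categorical distribution."""
    peak = max(logits)
    log_norm = peak + math.log(sum(math.exp(x - peak) for x in logits))
    return [x - log_norm for x in logits]


def policy_gradient_loss(batch_logits, actions, returns) -> float:
    """Negative mean of log pi(s, a) * r over the batch."""
    weighted = [
        log_softmax(logits)[action] * ret
        for logits, action, ret in zip(batch_logits, actions, returns)
    ]
    return -sum(weighted) / len(weighted)


# Two states with two possible actions each; the logits are made up.
batch_logits = [[0.0, 0.0], [2.0, 0.0]]
loss_value = policy_gradient_loss(batch_logits, actions=[0, 0], returns=[1.0, 1.0])
print(round(loss_value, 4))
```

With uniform logits and a return of 1, the loss for a single sample is log 2. Raising the logit of the rewarded action lowers the loss, which is the behaviour the gradient step exploits.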

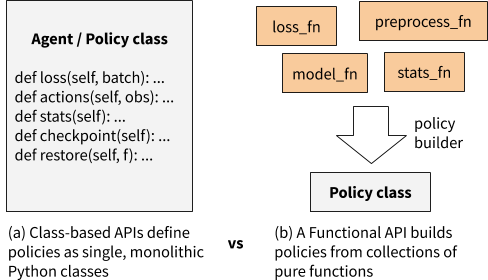

In contrast to a class-based API, in which class methods can access arbitrary parts of the class state, a functional API builds policies from loosely coupled pure functions.

In this blog we explore defining RL algorithms as collections of such pure functions. The paradigm will be that developers write the numerics of their algorithm as independent, pure functions, and then use an RLlib helper function to compile them into policies that can be trained at scale. This proposal is implemented concretely in the RLlib library.

Functional RL with RLlib

RLlib is an open-source library for reinforcement learning that offers both high scalability and a unified API for a variety of applications. It offers a wide range of scalable RL algorithms.

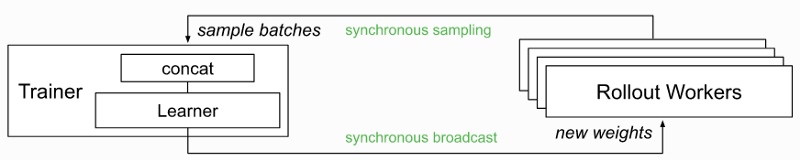

Example of how RLlib scales algorithms, in this case with distributed synchronous sampling.

Given the increasing popularity of PyTorch (i.e., imperative execution) and the imminent release of TensorFlow 2.0, we saw the opportunity to improve RLlib’s developer experience with a functional rewrite of RLlib’s algorithms. The major goals were to:

Improve the RL debugging experience

- Allow eager execution to be used for any algorithm with just an --eager flag, enabling easy print() debugging.

Simplify new algorithm development

- Make algorithms easier to customize and understand by replacing monolithic “Agent” classes with policies built from collections of pure functions (e.g., primitives provided by TRFL).

- Remove the need to manually declare tensor placeholders for TF.

- Unify the way TF and PyTorch policies are defined.

Policy Builder API

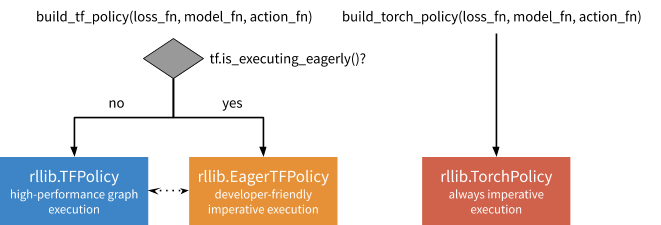

The RLlib policy builder API for functional RL (stable in RLlib 0.7.4) involves just two key functions, build_tf_policy and build_torch_policy.

At a high level, these builders take a number of function objects as input, including a loss_fn similar to what you saw earlier, a model_fn to return a neural network model given the algorithm config, and an action_fn to generate action samples given model outputs. The actual API takes quite a few more arguments, but these are the main ones. The builder compiles these functions into a policy that can be queried for actions and improved over time given experiences:
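The builder idea can be sketched in a few lines of plain Python. Everything here is hypothetical and deliberately simplified: build_toy_policy, ToyPolicy, and the three example functions are illustrative names, not RLlib's API, and the loss is a placeholder squared error rather than an RL objective. The point is only to show loosely coupled pure functions being compiled into one object that can be queried for actions and scored on a batch.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass(frozen=True)
class ToyPolicy:
    """Hypothetical policy object assembled from pure functions."""
    name: str
    model: Callable[[float], List[float]]
    action_fn: Callable[[List[float]], int]
    loss_fn: Callable[..., float]

    def compute_action(self, obs: float) -> int:
        return self.action_fn(self.model(obs))

    def loss(self, batch) -> float:
        return self.loss_fn(self.model, batch)


def build_toy_policy(name, model_fn, action_fn, loss_fn):
    """Return a factory that turns a config dict into a ToyPolicy."""
    def make_policy(config: Dict[str, Any]) -> ToyPolicy:
        return ToyPolicy(name, model_fn(config), action_fn, loss_fn)
    return make_policy


def linear_model_fn(config):
    """Build a tiny 'network' from the config: obs -> two action scores."""
    weight = config["weight"]
    return lambda obs: [weight * obs, 0.0]


def greedy_action_fn(logits):
    """Pick the index of the highest score."""
    return max(range(len(logits)), key=lambda i: logits[i])


def toy_loss_fn(model, batch):
    """Placeholder loss: mean squared error of the first score."""
    return sum((model(obs)[0] - target) ** 2 for obs, target in batch) / len(batch)


make_policy = build_toy_policy("toy", linear_model_fn, greedy_action_fn, toy_loss_fn)
policy = make_policy({"weight": 2.0})
print(policy.compute_action(1.0), policy.loss([(1.0, 2.0), (2.0, 3.0)]))
```

Swapping in a different loss or action function only requires passing a different argument to the builder, which hints at why this pattern makes algorithms easier to customize.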

These policies can be leveraged for single-agent, vector, and multi-agent training in RLlib, which calls on them to determine how to interact with environments:

We’ve found the policy builder pattern general enough to port almost all of RLlib’s reference algorithms, including A2C, APPO, DDPG, DQN, PG, PPO, SAC, and IMPALA in TensorFlow, and PG / A2C in PyTorch. While code readability is somewhat subjective, users have reported that the builder pattern makes it much easier to customize algorithms, especially in environments such as Jupyter notebooks. In addition, these refactorings have reduced the size of the algorithms by up to hundreds of lines of code each.

Vanilla Policy Gradients Example

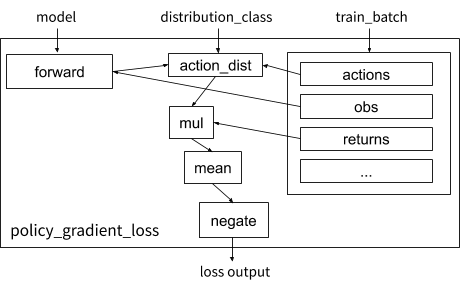

Visualization of the vanilla policy gradient loss function in RLlib.

Let’s take a look at how the earlier loss example can be implemented concretely using the builder pattern. We define policy_gradient_loss, which requires a couple of tweaks for generality: (1) RLlib supplies the proper distribution_class so the algorithm can work with any type of action space (e.g., continuous or categorical), and (2) the experience data is held in a train_batch dict that contains state, action, etc. tensors:

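    import tensorflow as tf

    def policy_gradient_loss(
            policy, model, distribution_cls, train_batch):
        # Forward pass: compute the action logits for the batch.
        logits, _ = model.from_batch(train_batch)
        # Wrap the logits in the action distribution supplied by RLlib.
        action_dist = distribution_cls(logits, model)
        # Loss: negative mean of log-probability weighted by return.
        return -tf.reduce_mean(
            action_dist.logp(train_batch["actions"]) *
            train_batch["returns"])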
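To make the loss concrete, here is a framework-free sketch of the same quantity, the negative mean of log-probability times return, for a categorical action distribution. It uses plain Python instead of TensorFlow; `log_softmax` and `pg_loss` are hypothetical helper names, not RLlib functions.

```python
import math


def log_softmax(logits):
    """Log-probabilities of a categorical distribution given its logits."""
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def pg_loss(logits_batch, actions, returns):
    """Negative mean of logp(action) * return over a batch."""
    terms = [
        log_softmax(logits)[action] * ret
        for logits, action, ret in zip(logits_batch, actions, returns)
    ]
    return -sum(terms) / len(terms)


# Two steps with uniform logits over two actions, so logp = log(0.5).
loss = pg_loss(
    logits_batch=[[0.0, 0.0], [0.0, 0.0]],
    actions=[0, 1],
    returns=[1.0, 2.0],
)
```

With uniform logits the loss reduces to 1.5 * log(2); minimizing it raises the log-probability of actions that were followed by high returns.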

To add the “returns” array to the batch, we need to define a postprocessing function that calculates it as the temporally discounted reward over the trajectory:
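Assuming the standard definition of a discounted return, with $r_t$ the reward at step $t$ of a trajectory of length $T$ and $\gamma \in [0, 1]$ the discount factor (notation assumed here), this is:

$$R(T) = \sum_{t=0}^{T} \gamma^{t} r_t$$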

We set $\gamma = 0.99$ when computing $R(T)$ below in code:

    from ray.rllib.evaluation.postprocessing import discount

    # Run for each trajectory collected from the environment
    def calculate_returns(policy,
                          batch,
                          other_agent_batches=None,
                          episode=None):
        batch["returns"] = discount(batch["rewards"], 0.99)
        return batch
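For intuition, the discounting step can be sketched in plain Python. This is an illustrative stand-in, not RLlib's `discount` implementation; `discounted_returns` is a hypothetical name, and it computes, for every step, the discounted sum of that step's reward and all later rewards.

```python
def discounted_returns(rewards, gamma):
    """Discounted sum of current and future rewards for each step."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step reuses the return of the step after it.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


returns = discounted_returns([1.0, 1.0, 1.0], gamma=0.5)
# returns == [1.75, 1.5, 1.0]
```

The backward pass keeps the computation linear in the trajectory length, since each return is built from the one after it.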

Given these functions, we can then build the RLlib policy and trainer (which coordinates the overall training workflow). The model and action distribution are automatically supplied by RLlib if not specified:

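    # Import paths as of RLlib 0.7.x
    from ray.rllib.agents.trainer_template import build_trainer
    from ray.rllib.policy.tf_policy_template import build_tf_policy

    MyTFPolicy = build_tf_policy(
        name="MyTFPolicy",
        loss_fn=policy_gradient_loss,
        postprocess_fn=calculate_returns)

    MyTrainer = build_trainer(
        name="MyCustomTrainer", default_policy=MyTFPolicy)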

Now we can run this at the desired scale using Tune. This example shows a configuration using 128 CPUs and 1 GPU in a cluster:

    from ray import tune

    tune.run(MyTrainer,
             config={"env": "CartPole-v0",
                     "num_workers": 128,
                     "num_gpus": 1})

While this example (runnable code) is only a basic algorithm, it demonstrates how a functional API can be concise, readable, and highly scalable. Compared with the previous way of defining policies in RLlib using TF placeholders, the functional API uses ~3x fewer lines of code (23 vs 81 lines), and also works in eager mode:

Comparing the legacy class-based API with the new functional policy builder API. Both policies implement the same behaviour, but the functional definition is much shorter.

How the Policy Builder works

Under the hood, build_tf_policy takes the supplied building blocks (model_fn, action_fn, loss_fn, etc.) and compiles them into either a DynamicTFPolicy or an EagerTFPolicy, depending on whether TF eager execution is enabled. The former implements graph-mode execution (auto-defining placeholders dynamically); the latter implements eager execution.

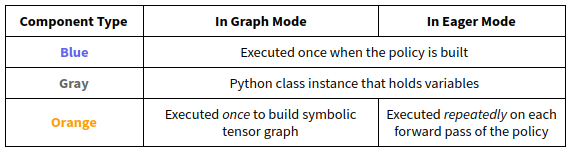

The main difference between DynamicTFPolicy and EagerTFPolicy is how many times they call the functions passed in. In either case, a model_fn is invoked once to create a Model class. However, functions that involve tensor operations are either called once in graph mode to build a symbolic computation graph, or multiple times in eager mode on actual tensors. In the following figures we show how these operations work together in blue and orange:

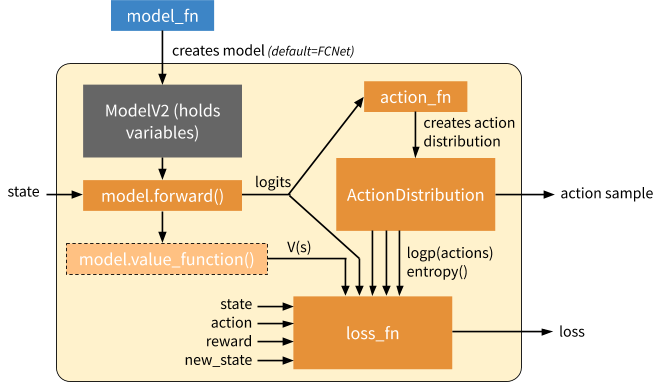

Overview of a generated EagerTFPolicy. The policy passes the environment state through model.forward(), which emits output logits. The model output parameterizes a probability distribution over actions (“ActionDistribution”), which can be used when sampling actions or training. The loss function operates over batches of experiences. The model can provide additional methods such as a value function (light orange) or other methods for computing Q values, etc. (not shown) as needed by the loss function.
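The difference in call counts between graph and eager mode can be illustrated without TensorFlow. In this toy sketch, `Symbol`, `GraphPolicy`, and `EagerPolicy` are hypothetical stand-ins (not RLlib classes): the graph-style policy calls `loss_fn` once on placeholders to build a deferred expression, while the eager-style policy calls it on real values for every batch.

```python
class Symbol:
    """A deferred value: evaluated only when fed a concrete batch."""

    def __init__(self, fn):
        self.fn = fn

    def __mul__(self, other):
        return Symbol(lambda feed: self.fn(feed) * evaluate(other, feed))

    def __neg__(self):
        return Symbol(lambda feed: -self.fn(feed))


def evaluate(value, feed):
    """Resolve a Symbol against a batch; pass plain numbers through."""
    return value.fn(feed) if isinstance(value, Symbol) else value


calls = {"loss_fn": 0}


def loss_fn(batch):
    calls["loss_fn"] += 1  # count how often the user function runs
    return -(batch["logp"] * batch["ret"])


class GraphPolicy:
    def __init__(self, loss_fn, keys):
        # Call loss_fn once on placeholders to build a reusable expression.
        placeholders = {k: Symbol(lambda feed, k=k: feed[k]) for k in keys}
        self.loss = loss_fn(placeholders)

    def compute_loss(self, batch):
        return evaluate(self.loss, batch)


class EagerPolicy:
    def __init__(self, loss_fn):
        self.loss_fn = loss_fn

    def compute_loss(self, batch):
        # Call loss_fn on the actual values every time.
        return self.loss_fn(batch)


batches = [{"logp": -0.5, "ret": 2.0}, {"logp": -1.0, "ret": 3.0}]

graph = GraphPolicy(loss_fn, keys=["logp", "ret"])
graph_losses = [graph.compute_loss(b) for b in batches]
graph_calls = calls["loss_fn"]

calls["loss_fn"] = 0
eager = EagerPolicy(loss_fn)
eager_losses = [eager.compute_loss(b) for b in batches]
eager_calls = calls["loss_fn"]
```

Both policies produce the same losses, but the user function runs once in the graph-style policy and once per batch in the eager-style one.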

This policy object is all RLlib needs to launch and scale RL training. Intuitively, this is because it encapsulates how to compute actions and improve the policy. External state such as that of the environment and RNN hidden state is managed externally by RLlib, and does not need to be part of the policy definition. The policy object is used in one of two ways depending on whether we are computing rollouts or trying to improve the policy given a batch of rollout data:

Inference: Forward pass to compute a single action. This only involves querying the model, generating an action distribution, and sampling an action from that distribution. In eager mode, this involves calling action_fn (see the DQN example of an action sampler), which creates an action distribution or action sampler, as relevant, that is then sampled from.

Training: Forward and backward pass to learn on a batch of experiences. In this mode, we call the loss function to generate a scalar output which can be used to optimize the model variables via SGD. In eager mode, both action_fn and loss_fn are called to generate the action distribution and policy loss respectively. Note that here we don’t show differentiation through action_fn, but this does happen in algorithms such as DQN.
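The two modes can be sketched with a toy, framework-free policy. `SoftmaxPolicy`, `compute_action`, and `learn_on_batch` are names assumed for this illustration, and the gradient is written out by hand rather than obtained by automatic differentiation as it would be in TensorFlow.

```python
import math
import random


class SoftmaxPolicy:
    """Toy policy whose only parameters are the action logits."""

    def __init__(self, num_actions):
        self.logits = [0.0] * num_actions

    def action_probs(self):
        m = max(self.logits)
        exps = [math.exp(x - m) for x in self.logits]
        total = sum(exps)
        return [e / total for e in exps]

    def compute_action(self, rng):
        # Inference: build the action distribution and sample from it.
        probs = self.action_probs()
        return rng.choices(range(len(probs)), weights=probs)[0]

    def learn_on_batch(self, actions, returns, lr=0.1):
        # Training: one SGD step on the loss -mean(logp(a) * R).
        # For a softmax, d logp(a) / d logit_i = 1[i == a] - probs[i].
        probs = self.action_probs()
        n = len(actions)
        for i in range(len(self.logits)):
            grad = -sum(
                ((1.0 if a == i else 0.0) - probs[i]) * r
                for a, r in zip(actions, returns)
            ) / n
            self.logits[i] -= lr * grad


policy = SoftmaxPolicy(num_actions=2)
rng = random.Random(0)
action = policy.compute_action(rng)

before = policy.action_probs()[1]
# Action 1 earned a return of 1.0, action 0 earned nothing.
policy.learn_on_batch(actions=[0, 1], returns=[0.0, 1.0])
after = policy.action_probs()[1]
```

After the update, the probability of the rewarded action rises above its initial value of 0.5, which is the behaviour the policy gradient loss is meant to produce.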

Loose Ends: State Management

RL training inherently involves a lot of state. If algorithms are defined using pure functions, where is the state held? In most cases it can be managed automatically by the framework. There are three types of state that need to be managed in RLlib:

- Environment state: this includes the current state of the environment and any recurrent state passed between policy steps. RLlib manages this internally in its rollout worker implementation.

- Model state: these are the policy parameters we are trying to learn via an RL loss. These variables must be accessible and optimized in the same way for both graph and eager mode. Fortunately, Keras models can be used in either mode. RLlib provides a customizable model class (TFModelV2) based on the object-oriented Keras style to hold policy parameters.

- Training workflow state: state for managing training, e.g., the annealing schedule for various hyperparameters, steps since last update, and so on. RLlib lets algorithm authors add mixin classes to policies that can hold any such extra variables.
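The third kind of state can be sketched as follows. This is a generic Python illustration of the mixin idea, not one of RLlib's own mixin classes; `LinearScheduleMixin`, `BasePolicy`, and their methods are hypothetical names.

```python
class LinearScheduleMixin:
    """Hypothetical mixin that holds an annealed hyperparameter."""

    def init_schedule(self, start, end, total_steps):
        self._start, self._end = start, end
        self._total_steps = total_steps
        self.steps = 0

    def on_step(self):
        self.steps += 1

    @property
    def current_value(self):
        # Linearly interpolate from start to end, then stay at end.
        frac = min(self.steps / self._total_steps, 1.0)
        return self._start + frac * (self._end - self._start)


class BasePolicy:
    """Stand-in for a policy class generated from pure functions."""


class MyPolicy(LinearScheduleMixin, BasePolicy):
    def __init__(self):
        self.init_schedule(start=1.0, end=0.0, total_steps=4)


policy = MyPolicy()
values = []
for _ in range(6):
    values.append(policy.current_value)
    policy.on_step()
# values == [1.0, 0.75, 0.5, 0.25, 0.0, 0.0]
```

Keeping the schedule in a mixin leaves the loss and model functions free of mutable state while still giving the policy object somewhere to store it.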

Loose Ends: Eager Overhead

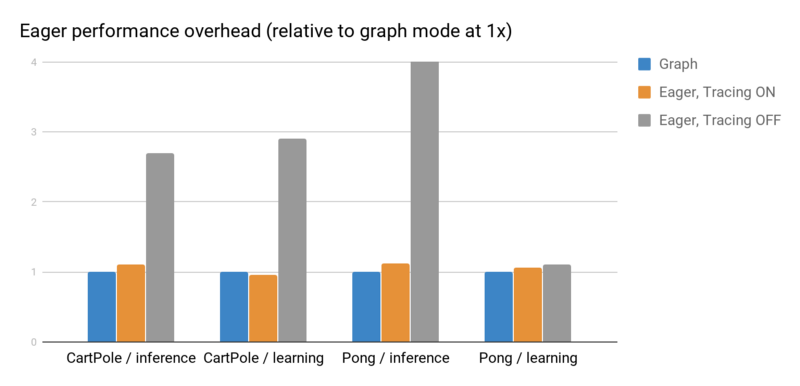

Next we investigate RLlib's eager mode performance with eager tracing on or off. As shown in the figure below, tracing greatly improves performance. However, the tradeoff is that Python operations such as print may not be called each time. For this reason, tracing is off by default in RLlib, but can be enabled with "eager_tracing": True. You can also set "no_eager_on_workers" to enable eager only for learning but disable it for inference:

Eager inference and gradient overheads measured using rllib train --run=PG --env=<env> [ --eager [ --trace]] on a laptop processor. With tracing off, eager imposes a significant overhead for small batch operations. However, it is often as fast as or faster than graph mode when tracing is enabled.
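As a configuration fragment, enabling tracing might look like the sketch below. The "eager_tracing" key comes from the text above; the "eager" key is an assumption that mirrors the --eager command-line flag, so check the documentation for your RLlib version before relying on it.

```python
# Sketch of a trainer config with eager tracing turned on.
config = {
    "env": "CartPole-v0",
    "eager": True,          # assumed key name, mirrors the --eager flag
    "eager_tracing": True,  # trace eager functions for speed
}
```

Such a dict would be passed as the config argument of tune.run, in the same way as the CartPole example earlier.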

Conclusion

To recap, in this blog post we propose using ideas from functional programming to simplify the development of RL algorithms. We implement and validate these ideas in RLlib. Beyond making it easy to support new features such as eager execution, we also find the functional paradigm leads to substantially more concise and understandable code. Try it out yourself with pip install ray[rllib] or by checking out the docs and source code.

If you’re interested in helping improve RLlib, we’re also hiring.

This article was initially published on the BAIR blog, and appears here with the authors’ permission.