Letting robots manipulate cables

By Rachel Gordon

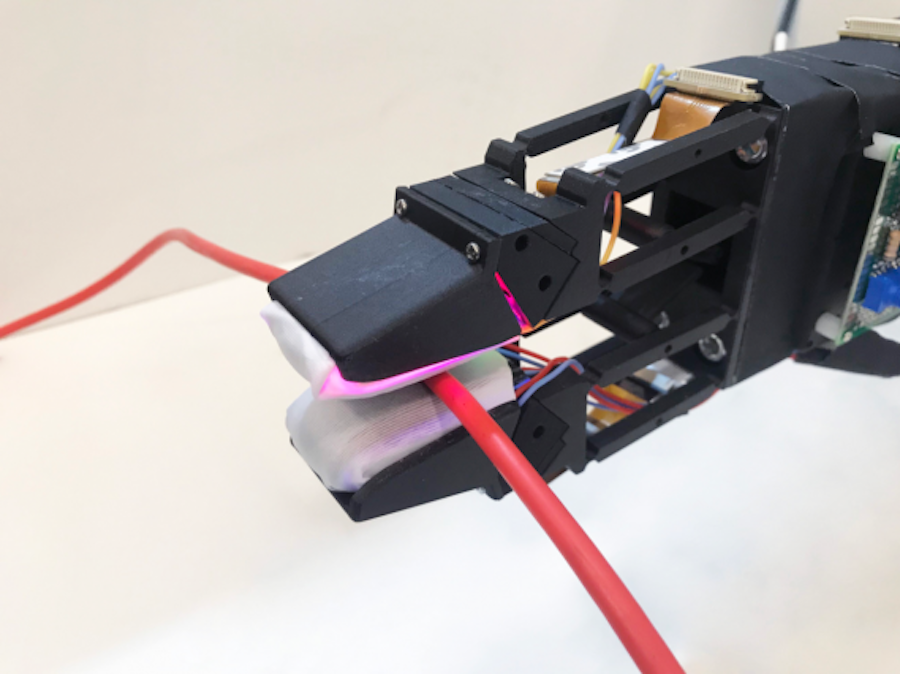

The opposing fingers are lightweight and quick moving, allowing nimble, real-time adjustments of force and position.

Photo courtesy of MIT CSAIL.

For humans, it can be challenging to manipulate thin flexible objects like ropes, wires, or cables. But if these problems are hard for humans, they are nearly impossible for robots. As a cable slides between the fingers, its shape is constantly changing, and the robot’s fingers must be constantly sensing and adjusting the cable’s position and motion.

Standard approaches have used a series of slow and incremental deformations, as well as mechanical fixtures, to get the job done. Recently, a group of researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) pursued the task from a different angle, in a manner that more closely mimics us humans. The team’s new system uses a pair of soft robotic grippers with high-resolution tactile sensors (and no added mechanical constraints) to successfully manipulate freely moving cables.

One could imagine using a system like this for both industrial and household tasks, to one day enable robots to help us with things like tying knots, wire shaping, or even surgical suturing.

The team’s first step was to build a novel two-fingered gripper. The opposing fingers are lightweight and quick moving, allowing nimble, real-time adjustments of force and position. On the tips of the fingers are vision-based “GelSight” sensors, built from soft rubber with embedded cameras. The gripper is mounted on a robot arm, which can move as part of the control system.

The team’s second step was to create a perception-and-control framework to allow cable manipulation. For perception, they used the GelSight sensors to estimate the pose of the cable between the fingers, and to measure the frictional forces as the cable slides. Two controllers run in parallel: one modulates grip strength, while the other adjusts the gripper pose to keep the cable within the gripper.
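The two parallel controllers described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `TactileReading` fields, the gains, the target friction value, and the use of simple proportional control are hypothetical stand-ins, not the controllers the team actually implemented.

```python
from dataclasses import dataclass


@dataclass
class TactileReading:
    """Hypothetical per-frame estimate derived from the tactile sensors."""
    cable_offset_mm: float   # cable position relative to the fingertip centre
    cable_angle_rad: float   # cable orientation in the sensor plane
    friction_force_n: float  # estimated friction force while the cable slides


def grip_controller(reading, target_friction_n=0.5, gain=0.8):
    """Grip-strength loop: squeeze harder when friction is below target,
    loosen when it is above (illustrative proportional law)."""
    return gain * (target_friction_n - reading.friction_force_n)


def pose_controller(reading, k_offset=0.05, k_angle=0.3):
    """Pose loop: command a lateral gripper motion that follows the cable.
    Assumed convention: a positive command moves the gripper toward
    positive cable offset, which re-centres the cable between the fingers."""
    return k_offset * reading.cable_offset_mm + k_angle * reading.cable_angle_rad


def control_step(reading):
    """Evaluate both loops on the same tactile reading, mirroring the idea
    of two controllers running side by side."""
    return grip_controller(reading), pose_controller(reading)


if __name__ == "__main__":
    reading = TactileReading(cable_offset_mm=2.0, cable_angle_rad=0.1,
                             friction_force_n=0.3)
    force_delta, lateral_cmd = control_step(reading)
    print(f"grip adjustment: {force_delta:+.3f}, pose command: {lateral_cmd:+.3f}")
```

Keeping the two loops separate reflects the division of labour in the text: one loop cares only about how smoothly the cable slides, the other only about where the cable sits in the gripper.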

When mounted on the arm, the gripper could reliably follow a USB cable starting from a random grasp position. Then, in combination with a second gripper, the robot could move the cable “hand over hand” (as a human would) in order to find the end of the cable. It could also adapt to cables of different materials and thicknesses.

As a further demo of its prowess, the robot performed an action that humans routinely do when plugging earbuds into a cell phone. Starting with a free-floating earbud cable, the robot was able to slide the cable between its fingers, stop when it felt the plug touch its fingers, adjust the plug’s pose, and finally insert the plug into the jack.

“Manipulating soft objects is so common in our daily lives, like cable manipulation, cloth folding, and string knotting,” says Yu She, MIT postdoc and lead author on a new paper about the system. “In many cases, we would like to have robots help humans do this kind of work, especially when the tasks are repetitive, dull, or unsafe.”

String me along

Cable following is challenging for two reasons. First, it requires controlling both the “grasp force” (to enable smooth sliding) and the “grasp pose” (to prevent the cable from falling from the gripper’s fingers).

Second, the information needed for that control is hard to capture from conventional vision systems during continuous manipulation, because it’s usually occluded, expensive to interpret, and sometimes inaccurate.

What’s more, it can’t be directly observed with vision sensors alone, hence the team’s use of tactile sensors. The gripper’s joints are also flexible, which protects them from potential impact.

The algorithms can also be generalized to different cables with various physical properties like material, stiffness, and diameter, and also to those at different speeds.

When the team compared different controllers on the gripper, their control policy retained the cable in hand over longer distances than the three alternatives. For example, the “open-loop” controller followed only 36 percent of the total length: the gripper easily lost the cable when it curved, and it needed many regrasps to finish the task.

Looking ahead

The team observed that it was difficult to pull the cable back when it reached the edge of the finger, because of the convex surface of the GelSight sensor. Therefore, they hope to improve the finger-sensor shape to enhance the overall performance.

In the future, they plan to study more complex cable manipulation tasks, such as cable routing and cable insertion through obstacles, and they eventually want to explore autonomous cable manipulation tasks in the auto industry.

Yu She wrote the paper alongside MIT PhD students Shaoxiong Wang, Siyuan Dong, and Neha Sunil; Alberto Rodriguez, MIT associate professor of mechanical engineering; and Edward Adelson, the John and Dorothy Wilson Professor in the MIT Department of Brain and Cognitive Sciences.

#ICRA2020 workshops on robotics and learning

This year the International Conference on Robotics and Automation (ICRA) is being run as a virtual event. One interesting feature of this conference is that it has been extended to run from 31 May to 31 August. A number of workshops were held on the opening day and here we focus on two of them: “Learning of manual skills in humans and robots” and “Emerging learning and algorithmic methods for data association in robotics”.

Learning of manual skills in humans and robots

This workshop was organised by Aude Billard (EPFL) and Dagmar Sternad (Northeastern University). It brought together researchers from human motor control and from robotics to answer questions such as: How do humans achieve manual dexterity? What kind of practice schedules can shape these skills? Can some of these strategies be transferred to robots? To what extent is robot manual skill limited by the hardware? What can be learned and what cannot?

The third session of the workshop focussed on “Learning skills” and you can watch the two talks and the discussions below:

Jeannette Bohg – Learning to scaffold the development of robotic manipulation skills

Dagmar Sternad – Learning and control in skilled interactions with objects: A task-dynamic approach

Discussion with Jeannette Bohg and Dagmar Sternad

Emerging learning and algorithmic methods for data association in robotics

This workshop covered emerging algorithmic methods based on optimization and graph-theoretic techniques, learning and end-to-end solutions based on deep neural networks, and the relationships between these techniques.

You can watch the workshop in full here:

Below is the programme with the times indicating the position of that talk in the YouTube video:

11:00 Ayoung Kim – Learning motion and place descriptor from LiDARs for long-term navigation

34:11 Xiaowei Zhou – Learning correspondences for 3D reconstruction and pose estimation

51:30 Florian Bernard – Higher-order projected power iterations for scalable multi-matching

1:11:24 Cesar Cadena – High level understanding in the data association problem

1:34:55 Spotlight talk 1: Daniele Cattaneo – CMRNet++: map and camera agnostic monocular visual localization in LiDAR maps

1:50:45 Nicholas Roy – The role of semantics in perception

2:11:12 Kostas Daniilidis – Learning representations for matching

2:33:26 Jonathan How – Consistent multi-view data association

2:51:40 John Leonard – A research agenda for robust semantic SLAM

3:17:58 Luca Carlone – Towards certifiably robust spatial perception

3:39:36 Roberto Tron – Fast, consistent distributed matching for robotics applications

3:59:22 Randal Beard – Tracking moving objects from a moving camera in 3d environments

4:18:49 Nikolay Atanasov – A unifying view of geometry, semantics, and data association in SLAM

4:39:03 Spotlight talk 2: Nathaniel Glaser – Enhancing multi-robot perception via learned data association

Researchers create new model that aims to give robots human-like perception of their physical environments

A GoPro for beetles: Researchers create a robotic camera backpack for insects

Ex-Google robotics head unveils automated home assistant

Researchers give robots intelligent sensing abilities to carry out complex tasks

Cloud Robotics: A Perspective

Opportunities in DARPA SubT Challenge

About this Event

The DARPA Subterranean (SubT) Challenge aims to develop innovative technologies that would augment operations underground.

The SubT Challenge allows teams to demonstrate new approaches for robotic systems to rapidly map, navigate, and search complex underground environments, including human-made tunnel systems, urban underground, and natural cave networks.

The SubT Challenge is organized into two Competitions (Systems and Virtual), each with two tracks (DARPA-funded and self-funded).

The Cave Circuit, the final of three Circuit events, is planned for later this year. The Final Event, planned for the summer of 2021, will put both Systems and Virtual teams to the test with courses that incorporate diverse elements from all three environments. Teams will compete for up to $2 million in the Systems Final Event and up to $1.5 million in the Virtual Final Event, with additional prizes.

Learn more about the opportunities to participate as either a Virtual or a Systems team: https://www.subtchallenge.com/

Dr. Timothy Chung – Program Manager

Dr. Timothy Chung joined DARPA’s Tactical Technology Office as a program manager in February 2016. He serves as the Program Manager for the OFFensive Swarm-Enabled Tactics Program and the DARPA Subterranean (SubT) Challenge. His interests include autonomous/unmanned air vehicles, collaborative autonomy for unmanned swarm system capabilities, distributed perception, distributed decision-making, and counter unmanned system technologies.

Prior to joining DARPA, Dr. Chung served as an Assistant Professor at the Naval Postgraduate School and Director of the Advanced Robotic Systems Engineering Laboratory (ARSENL). His academic interests included modeling, analysis, and systems engineering of operational settings involving unmanned systems, combining collaborative autonomy development efforts with an extensive live-fly field experimentation program for swarm and counter-swarm unmanned system tactics and associated technologies.

Dr. Chung holds a Bachelor of Science in Mechanical and Aerospace Engineering from Cornell University. He also earned Master of Science and Doctor of Philosophy degrees in Mechanical Engineering from the California Institute of Technology.

Learn more about DARPA here: www.darpa.mil

Inspired by a coral polyp, this plastic mini robot moves by magnetism and light

Army robots get driver education for difficult tasks

#314: High Earth Orbit Robotics, with William Crowe

In this episode, Lilly interviews Dr. William Crowe, CEO of High Earth Orbit (HEO) Robotics. The mission of HEO Robotics is to provide high-quality imagery of satellites, space debris, and resource-rich asteroids. Crowe discusses the technical challenges that robotics and satellites share, such as computer vision and controls, and those where traditional robotics approaches aren’t suitable, such as localization and mobility. He explains new trends in the satellite industry and the need for high-quality imagery.

William Crowe

William Crowe is CEO of High Earth Orbit Robotics, a company that performs health checks on satellites by assessing images of them that it captures from other satellites. He has a PhD in Astrodynamics, where his research focused on the use of swarms to characterize asteroids, especially those that fly closer than the Moon. William sees a future where we use the resources of space in space to better our Earth.

Links