Top 10 recommendations for a video gamer who you’d like to read (or even just touch) a book

Sure, the average video gamer is 34 years old, but the most active group is boys under 18, a group famously resistant to reading. Here are the RTSF Top 10 recommendations of books that have robots plus enough world building to rival Halo or Doom, and lots of action or puzzles to solve. What's even cooler is that you can use the "Topics" links to work in some STEM talking points by asking things like: Do you think it would be easy to reprogram cars to hit pedestrians instead of avoiding them? How would you fool a security drone? Do you think robots should have the same rights as animals? But you may want to read them too: the first six on the list are books that I routinely recommend to general audiences, and people tell me how much they loved them.

Head On – The rugby-like game in the book, Hilketa, played with real robots, is the best multiplayer game that never was. And paralyzed people have an advantage! (FYI: a PG-13 discussion of tele-sex through robots). Good for teachable moments about teleoperation.

Robopocalypse – Loved World War Z and read the book? They'll love this even more, and it's largely accurate about robots. Good for teachable moments about robot autonomy.

The Murderbot Diaries (series) – Delightfully snarky point of view of a security robot trying to save clueless scientists from Aliens-like corpos and creatures. Good for teachable moments about software engineering and whether intelligent systems would need a governor to keep them in line.

The Electric State – This is sort of a graphic novel the way Hannah Gadsby is sort of a comedian: it transcends the genre. Neither the full-page illustrations nor the accompanying text tells the whole story of the angry teenage girl and her robot trying to outrun the end of the world. Like an escape room, you have to put the text and images together to figure out what is going on. Good for teachable moments about autonomy.

Tales from the Loop– the graphic novels, two in the series, are different from the emo Amazon streaming series. The books are much more suited to a teenage audience who love world building and surprising twists. Good for teachable moments about bounded rationality.

Kill Decision – Scarily realistic description of killer drones, with a cool Spec Ops guy who has two ravens, a callout to Norse mythology. Good for teachable moments about swarms (aka multi-robot systems).

Robots of Gotham– It’s sort of Game Lit without being based on a video game. Excellent discussion of how computer vision/machine learning works. Good for teachable moments about computer vision and machine learning.

The Andromeda Evolution – It helps if they've read The Andromeda Strain or seen the original movie, but it can be read as a stand-alone. This commissioned sequel is a worthy addition. Good for teachable moments about drones and teleoperation.

Machinehood – A pro-robot-rights group is terrorizing the world; nice discussion of ethics amid a lot of action, with no boring lectures. Good for teachable moments about robot ethics.

The Themis Files – An earnest girl finds a Pacific Rim-style alien robot and learns to use it to fight evil giant piloted mecha invaders, while shadowy quasi-governmental figures try to uncover its origins. Good for teachable moments about exoskeletons.

New microrobotic trajectory tracking method using broad learning system

A soft magnetic pixel robot that can be programmed to take different shapes

Top tweets from the Conference on Robot Learning #CoRL2021

The Conference on Robot Learning (CoRL) is an annual international conference specialising in the intersection of robotics and machine learning. The fifth edition took place last week in London and virtually around the globe. Apart from the novelty of being a hybrid conference, this year the focus was on openness. OpenReview was used for the peer review process, meaning that the reviewers' comments and the authors' replies are public for anyone to see. The research community suggests that open review could encourage mutual trust, respect, and openness to criticism; enable constructive and efficient quality assurance; increase transparency and accountability; facilitate wider and more inclusive discussion; and give reviewers recognition and make reviews citable [1]. You can access all CoRL 2021 papers and their corresponding reviews here. In addition, you may want to watch all the presentations, available on the conference YouTube channel.

In this post we bring you a glimpse of the conference through the most popular tweets written last week: cool robot demos, short and sweet explanations of papers, and the award finalists, all to get you looking forward to next year's edition in New Zealand. Enjoy!

Robots, robots, robots!

Here’s our fourth and final #CoRL2021 paper, on multi-stage imitation learning: https://t.co/22JqLMMp4X

We’re doing a live demo of this right now, see below! This sequence was trained with a single demonstration, and generalises across poses / distractors.

With @normandipalo. pic.twitter.com/ENEzKvAlRm

— Edward Johns (@Ed__Johns) November 11, 2021

ANYmal trotting around at #CoRL2021 pic.twitter.com/59ql6sEJEx

— Kai Arulkumaran (@kaixhin) November 9, 2021

When I am human – I like dogs a lot. But it turns out that when I am a robot – I am a bit timid around quadrupeds. Thanks to the wonderful team of #CoRL2021 who organized telepresence sessions (@Ed__Johns, @vitalisvos19, Binbin Xu, and others). This was a very curious experience! pic.twitter.com/mMEdqUq6yv

— Rika Antonova (@contactrika) November 11, 2021

We will be doing a live demo of iMAP at #corl2021, sessions 1 and 8 Monday and Thursday, come if you're around! https://t.co/Tagk4jocbe @liu_shikun, @joeaortiz, @AjdDavison pic.twitter.com/XTUYrkOWnO

— Edgar Sucar (@SucarEdgar) November 8, 2021

Papers and presentations

Excited to present our work at #CoRL2021 this week. Our paper shows a promising approach to achieve sim2real transfer for perception. w/ @ToyotaResearch @berkeley_ai (1/7) https://t.co/MAbYaXaPrY pic.twitter.com/scfv4DtOSJ

— Michael laskey (@Michaellaskey7) November 8, 2021

Ever wondered how to tune your hyperparameters while training RL agents? w/o running thousands of experiments in parallel? And even combine them?

Check out our work @ #CoRL2021 on training mixture agents which combines components with diverse architectures, distributions, etc pic.twitter.com/4tsqWXjf79

— Markus Wulfmeier (@markus_with_k) November 9, 2021

Proud to share "LILA: Language-Informed Latent Actions," our paper at #CoRL2021.

How can we build assistive controllers by fusing language & shared autonomy?

Jointly authored w/ @megha_byte, with my advisors @percyliang & @DorsaSadigh.

(1 / 10) pic.twitter.com/K5vdUO8R6q

— Siddharth Karamcheti (@siddkaramcheti) November 8, 2021

In daily life after passing the paper exam for a permit, a newbie can drive the car for real under an expert's monitoring. This guardian mechanism is crucial for exploratory but safe learning. See our #CoRL2021 work https://t.co/OegFMwj9JS

Poster session today at Session 8 pic.twitter.com/IlKkZhk8UD

— Bolei Zhou (@zhoubolei) November 11, 2021

I've just finished my talk in the Blue Sky oral session at #CoRL2021. Great to have a session which allows for unconventional opinions to be heard in this way. Looking forward to the next two!

Here's my paper, Back to Reality for Imitation Learning: https://t.co/TZLj77zGWV. pic.twitter.com/NnwZNaSsX5

— Edward Johns (@Ed__Johns) November 9, 2021

This week at #CoRL2021, our team explores how data efficient off-policy RL, on-board reward generation, and vision can teach walking and wall avoidance to small humanoid robots without laboratory instrumentation: https://t.co/ZZo9hJXXO5 1/2 pic.twitter.com/4qWqn1hefF

— DeepMind (@DeepMind) November 10, 2021

Awards

Congratulations again to the #CoRL #BestSystemPaper Winner, "FlingBot: The Unreasonable Effectiveness of Dynamic Manipulation for Cloth Unfolding", by Huy Ha and Shuran Song

Read it here: https://t.co/en8ic29gPM #robot #learning #robotics #award pic.twitter.com/P9ZW884dDn

— Conference on Robot Learning (@corl_conf) November 12, 2021

Congratulations again to the #CoRL #BestPaper Winner, "A System for General In-Hand Object Re-Orientation", by Tao Chen, Jie Xu and Pulkit Agrawal

Read it here: https://t.co/QeIzAW1fng #robot #learning #robotics #award pic.twitter.com/niHDCArbmq

— Conference on Robot Learning (@corl_conf) November 12, 2021

Congratulations to #CoRL2021 best paper finalist, "Robot Reinforcement Learning on the Constraint Manifold", Puze Liu, Davide Tateo, Haitham Bou Ammar, Jan Peters. https://t.co/SdssOu3akD #robotics #learning #award #research pic.twitter.com/Lpgn2XjEhY

— Conference on Robot Learning (@corl_conf) November 8, 2021

Congratulations to #CoRL2021 best paper finalist, "Learning Off-Policy with Online Planning", Harshit Sikchi, Wenxuan Zhou, David Held. https://t.co/7xUFyMlyQU #robotics #learning #award #research pic.twitter.com/D5u4NdDDST

— Conference on Robot Learning (@corl_conf) November 8, 2021

Congratulations to #CoRL2021 best paper finalist, "XIRL: Cross-embodiment Inverse Reinforcement Learning", Kevin Zakka, Andy Zeng, Pete Florence, Jonathan Tompson, Jeannette Bohg, Debidatta Dwibedi. https://t.co/Z4qVMiuhgS #robotics #learning #award #research pic.twitter.com/k2KSkv67vd

— Conference on Robot Learning (@corl_conf) November 8, 2021

Congratulations to #CoRL2021 best systems paper finalist, "SORNet: Spatial Object-Centric Representations for Sequential Manipulation", Wentao Yuan, Chris Paxton, Karthik Desingh, Dieter Fox. https://t.co/en8ic29gPM #robotics #learning #award #research pic.twitter.com/Hfn1CiZNP8

— Conference on Robot Learning (@corl_conf) November 8, 2021

Congratulations to #CoRL2021 best systems paper finalist, "Fast and Efficient Locomotion via Learned Gait Transitions", Yuxiang Yang, Tingnan Zhang, Erwin Coumans, Jie Tan, Byron Boots. https://t.co/HbrCOVZOpz #robotics #learning #award #research pic.twitter.com/T5Ev31PgPZ

— Conference on Robot Learning (@corl_conf) November 8, 2021

References

Purdue researchers test 3D concrete printing system as part of NSF-funded project

Snake robot turned movie hero

True Flexibility in your Robotics System

Finding inspiration in starfish larva

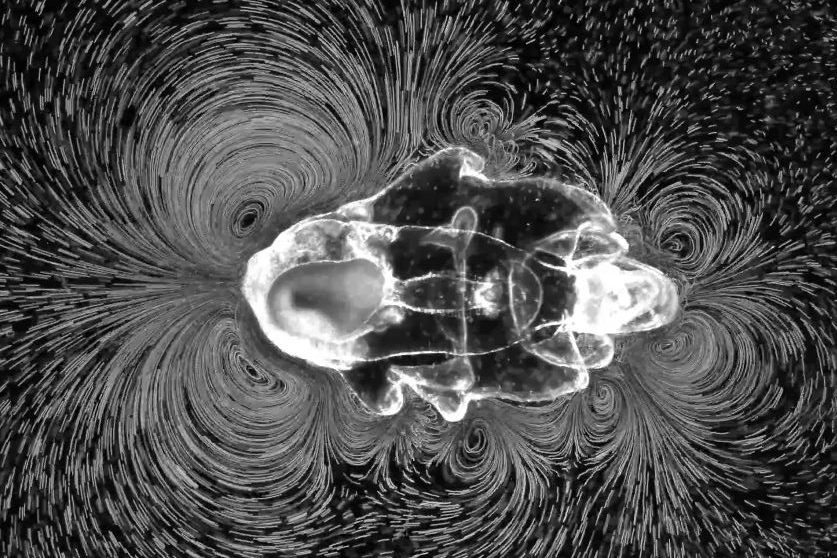

The new microbot inspired by starfish larva stirs up plastic beads. (Image: Cornel Dillinger/ETH Zurich)

By Rahel Künzler

Among scientists, there is great interest in tiny machines that are set to revolutionise medicine. These microrobots, often only a fraction of the diameter of a hair, are made to swim through the body to deliver medication to specific areas and perform the smallest surgical procedures.

The designs of these robots are often inspired by natural microorganisms such as bacteria or algae. Now, for the first time, a research group at ETH Zurich has developed a microrobot design inspired by starfish larvae, which use ciliary bands on their surface to swim and feed. The ultrasound-activated synthetic system mimics the natural arrangement of starfish ciliary bands and leverages nonlinear acoustics to replicate the larvae's motion and manipulation techniques.

Hairs to push liquid away or suck it in

Depending on whether it is swimming or feeding, the starfish larva generates different patterns of vortices. (Image: Prakash Lab, Stanford University)

At first glance, the microrobots bear only scant resemblance to starfish larvae. In its larval stage, a starfish has a lobed body that measures just a few millimetres across. Meanwhile, the microrobot is a rectangle and ten times smaller, only a quarter of a millimetre across. But the two do share one important feature: a series of fine, movable hairs on the surface, called cilia.

A starfish larva is blanketed with hundreds of thousands of these hairs. Arranged in rows, they beat back and forth in a coordinated fashion, creating eddies in the surrounding water. The relative orientation of two rows determines the end result: Inclining two bands of beating cilia toward each other creates a vortex with a thrust effect, propelling the larva. On the other hand, inclining two bands away from each other creates a vortex that draws liquid in, trapping particles on which the larva feeds.

Artificial swimmers beat faster

These cilia were the key design element for the new microrobot developed by ETH researchers led by Daniel Ahmed, who is a Professor of Acoustic Robotics for life sciences and healthcare. "In the beginning," Ahmed said, "we simply wanted to test whether we could create vortices similar to those of the starfish larva with rows of cilia inclined toward or away from each other."

To this end, the researchers used photolithography to construct a microrobot with appropriately inclined ciliary bands. They then applied ultrasound waves from an external source to make the cilia oscillate. The synthetic versions beat back and forth more than ten thousand times per second – about a thousand times faster than those of a starfish larva. And as with the larva, these beating cilia can be used to generate a vortex with a suction effect at the front and a vortex with a thrust effect at the rear, the combined effect “rocketing” the robot forward.

Besides swimming, the new microrobot can collect particles and steer them in a predetermined direction. (Video: Cornel Dillinger/ETH Zurich)

In their lab, the researchers showed that the microrobots can swim in a straight line through liquids such as water. Adding tiny plastic beads to the water made it possible to visualize the vortices created by the microrobot. The result is astonishing: starfish larvae and microrobots generate virtually identical flow patterns.

Next, the researchers arranged the ciliary bands so that a suction vortex was positioned next to a thrust vortex, imitating the feeding technique used by starfish larvae. This arrangement enabled the robots to collect particles and send them out in a predetermined direction.

Ultrasound offers many advantages

Ahmed is convinced that this new type of microrobot will be ready for use in medicine in the foreseeable future. This is because a system that relies only on ultrasound offers decisive advantages: ultrasound waves are already widely used in imaging, penetrate deep inside the body, and pose no health risks.

“Our vision is to use ultrasound for propulsion, imaging and drug delivery.”

– Daniel Ahmed

The fact that this therapy requires only an ultrasound device makes it cheap, he adds, and hence suitable for use in both developed and developing countries.

Ahmed believes one initial field of application could be the treatment of gastric tumours. Uptake of conventional drugs by diffusion is inefficient, but having microrobots transport a drug specifically to the site of a stomach tumour and then deliver it there might make the drug’s uptake into tumour cells more efficient and reduce side effects.

Sharper images thanks to contrast agents

But before this vision can be realized, a major challenge remains to be overcome: imaging. Steering the tiny machines to the right place requires that a sharp image be generated in real time. The researchers plan to make the microrobots more visible by incorporating contrast agents such as those already used in medical imaging with ultrasound.

In addition to medical applications, Ahmed expects this starfish-inspired design to have important implications for manipulating tiny volumes of liquid in research and industry. Bands of beating cilia could perform tasks such as mixing, pumping and particle trapping.

3D Vision System Nets the Right Tuna

Jason Richards: Robotic Food Delivery, Crowdfunding, and Working with Lawmakers | Sense Think Act Podcast #7

In this episode, Audrow Nash speaks to Jason Richards, CEO at DaxBot. DaxBot makes a charismatic robot for food delivery. Jason speaks on their delivery robot, their crowdfunding campaign, DaxBot’s revenue model, working with lawmakers to have robots on the sidewalks, and Jason teases their realtime operating system, DaxOS.


Machine Vision of Tomorrow: How 3D Scanning in Motion Revolutionizes Automation

Social robots deserve our appreciation, bioethicist says

Emerging Applications for Robotics in the Aerospace Industry: 5 Innovations

Exploring ROS2 with a wheeled robot – #2 – How to subscribe to a ROS2 laser scan topic

By Marco Arruda

This is the second chapter of the series “Exploring ROS2 with a wheeled robot”. In this episode, you’ll learn how to subscribe to a ROS2 topic using ROS2 C++.

You’ll learn:

- How to create a node with ROS2 and C++

- How to subscribe to a topic with ROS2 and C++

- How to launch a ROS2 node using a launch file

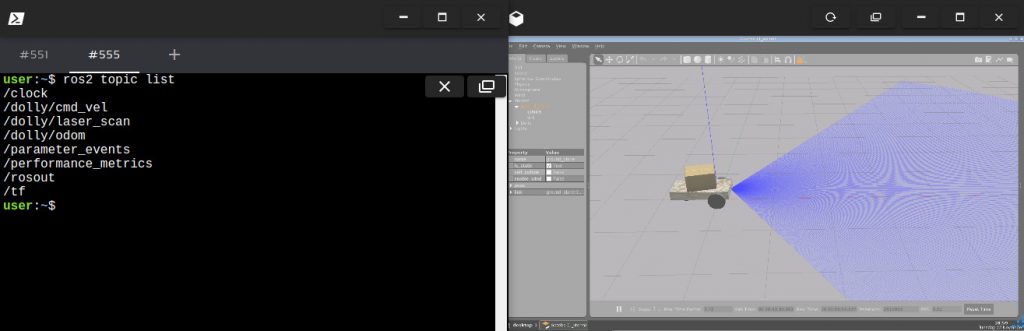

1 – Set up the environment – Launch the simulation

Before anything else, make sure you have the rosject from the previous post; you can copy it from here.

Launch the simulation in one webshell and, in a different tab, check which topics are available (for example with ros2 topic list). You should get something similar to the image below:

2 – Create a ROS2 node

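Our goal is to read the laser data, so create a new file called reading_laser.cpp in the package's src folder (the CMakeLists.txt entry in step 3 expects it at src/reading_laser.cpp):

```bash
touch ~/ros2_ws/src/my_package/src/reading_laser.cpp
```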

And paste the content below:

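```cpp
#include <functional>
#include <memory>

#include "rclcpp/rclcpp.hpp"
#include "sensor_msgs/msg/laser_scan.hpp"

using std::placeholders::_1;

// Node that subscribes to a LaserScan topic and logs two of its range readings.
class ReadingLaser : public rclcpp::Node {
public:
  ReadingLaser() : Node("reading_laser") {
    auto default_qos = rclcpp::QoS(rclcpp::SystemDefaultsQoS());
    // Subscribe to the (hard-coded) "laser_scan" topic; the callback runs on every message.
    subscription_ = this->create_subscription<sensor_msgs::msg::LaserScan>(
        "laser_scan", default_qos,
        std::bind(&ReadingLaser::topic_callback, this, _1));
  }

private:
  void topic_callback(const sensor_msgs::msg::LaserScan::SharedPtr _msg) {
    // Guard against scans with fewer than 101 samples before indexing ranges[100].
    if (_msg->ranges.size() <= 100) {
      RCLCPP_WARN(this->get_logger(), "Scan has only %zu ranges", _msg->ranges.size());
      return;
    }
    RCLCPP_INFO(this->get_logger(), "I heard: '%f' '%f'", _msg->ranges[0],
                _msg->ranges[100]);
  }

  rclcpp::Subscription<sensor_msgs::msg::LaserScan>::SharedPtr subscription_;
};

int main(int argc, char *argv[]) {
  rclcpp::init(argc, argv);
  auto node = std::make_shared<ReadingLaser>();
  RCLCPP_INFO(node->get_logger(), "Hello my friends");
  rclcpp::spin(node);  // process callbacks until shutdown
  rclcpp::shutdown();
  return 0;
}
```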

We are creating a new class, ReadingLaser, that represents the node (it inherits from rclcpp::Node). The most important parts of that class are the subscription attribute and the callback method. In the main function, we initialize the node and keep it alive (spin) while its ROS connection is valid.
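The callback prints ranges[0] and ranges[100] without saying which directions those samples correspond to. In a LaserScan message, sample i is measured at angle angle_min + i * angle_increment, and readings below range_min or above range_max should be discarded. The sketch below illustrates this under stated assumptions: ScanData is a hypothetical struct that mirrors those LaserScan fields so the code compiles without ROS 2, and the helper names range_at and closest_range are made up for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <optional>
#include <vector>

// Hypothetical stand-in for a few sensor_msgs/msg/LaserScan fields,
// so the logic can be compiled and tried without ROS 2.
struct ScanData {
  float angle_min;        // angle of ranges[0] [rad]
  float angle_increment;  // angle between consecutive samples [rad]
  float range_min;        // shortest valid reading [m]
  float range_max;        // longest valid reading [m]
  std::vector<float> ranges;
};

// A reading is usable only if it is finite and within [range_min, range_max].
bool is_valid(const ScanData &scan, float r) {
  return std::isfinite(r) && r >= scan.range_min && r <= scan.range_max;
}

// Angle [rad] at which sample i was measured.
float angle_of(const ScanData &scan, std::size_t i) {
  return scan.angle_min + static_cast<float>(i) * scan.angle_increment;
}

// Range of the sample nearest to the requested angle, or std::nullopt if the
// angle lies outside the scan or the reading there is invalid.
std::optional<float> range_at(const ScanData &scan, float angle) {
  if (scan.ranges.empty() || scan.angle_increment == 0.0f) return std::nullopt;
  const float idx = std::round((angle - scan.angle_min) / scan.angle_increment);
  if (!std::isfinite(idx) || idx < 0.0f ||
      idx >= static_cast<float>(scan.ranges.size())) {
    return std::nullopt;
  }
  const float r = scan.ranges[static_cast<std::size_t>(idx)];
  if (!is_valid(scan, r)) return std::nullopt;
  return r;
}

// Smallest valid reading, i.e. the distance to the nearest obstacle seen.
std::optional<float> closest_range(const ScanData &scan) {
  std::optional<float> best;
  for (float r : scan.ranges) {
    if (is_valid(scan, r) && (!best || r < *best)) best = r;
  }
  return best;
}
```

Inside topic_callback, one could copy the relevant fields of _msg into such a struct (or adapt the helpers to take the message directly) to log, for example, the range straight ahead at angle 0 instead of two fixed indices.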

The create_subscription call expects a QoS (quality of service) profile: a set of policies, such as reliability, history depth and durability, that tells the middleware how messages should be delivered. You can find more information about it in the reference attached, but in this post we just use the system default QoS. Besides the QoS, keep in mind the following parameters:

- topic name

- callback method

The callback method needs to be bound, which means it is not executed when the subscription is declared, but later, each time a message arrives on the topic. So we pass std::bind a pointer to the member function together with this, the current object, because a member function can only be called on a specific instance of its class; the placeholder _1 stands for the message that will be passed to the callback.
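To see what std::bind does outside of ROS, here is a minimal sketch. FakeScan and FakeSubscription are hypothetical types, not part of rclcpp; they only model "store a callback now, call it later when a message is delivered". The sketch also shows a lambda capturing this, a common alternative to std::bind (rclcpp accepts lambdas as subscription callbacks too).

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

using std::placeholders::_1;

// Hypothetical stand-ins (not rclcpp types): a message and a "subscription"
// that stores a callback now and calls it later, when a message is delivered.
struct FakeScan {
  std::vector<float> ranges;
};

class FakeSubscription {
 public:
  explicit FakeSubscription(std::function<void(const FakeScan &)> cb)
      : callback_(std::move(cb)) {}

  // Simulates the middleware delivering a message to the callback.
  void deliver(const FakeScan &msg) { callback_(msg); }

 private:
  std::function<void(const FakeScan &)> callback_;
};

class ReadingLaserDemo {
 public:
  ReadingLaserDemo()
      // std::bind packs the member-function pointer with `this`; the
      // placeholder _1 is filled in later with the delivered message.
      : bound_sub(std::bind(&ReadingLaserDemo::topic_callback, this, _1)),
        // Equivalent lambda that captures `this`.
        lambda_sub([this](const FakeScan &msg) { topic_callback(msg); }) {}

  // Both callbacks hold `this`, so a copy would still call back into the
  // original object; forbid copies to avoid that surprise.
  ReadingLaserDemo(const ReadingLaserDemo &) = delete;
  ReadingLaserDemo &operator=(const ReadingLaserDemo &) = delete;

  void topic_callback(const FakeScan &msg) {
    ++calls;
    last_first_range = msg.ranges.empty() ? -1.0f : msg.ranges.front();
  }

  FakeSubscription bound_sub;
  FakeSubscription lambda_sub;
  int calls = 0;
  float last_first_range = -1.0f;
};
```

Nothing runs when the subscriptions are constructed; topic_callback only executes when deliver is called, which mirrors how the real callback only fires when a scan arrives.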

3 – Compile and run

In order to compile the cpp file, we must add some instructions to ~/ros2_ws/src/my_package/CMakeLists.txt:

- In the find dependencies section, add the sensor_msgs package

- Just before the install instruction, add the executable and declare its dependencies

- Append another install instruction for the new executable we've just created

```cmake
# find dependencies
find_package(ament_cmake REQUIRED)
find_package(rclcpp REQUIRED)
find_package(sensor_msgs REQUIRED)

...

# build the new node and link it against the packages it uses
add_executable(reading_laser src/reading_laser.cpp)
ament_target_dependencies(reading_laser rclcpp sensor_msgs)

...

install(TARGETS
  reading_laser
  DESTINATION lib/${PROJECT_NAME}/
)
```

Compile it from the root of the workspace:

```bash
cd ~/ros2_ws
colcon build --symlink-install --packages-select my_package
```

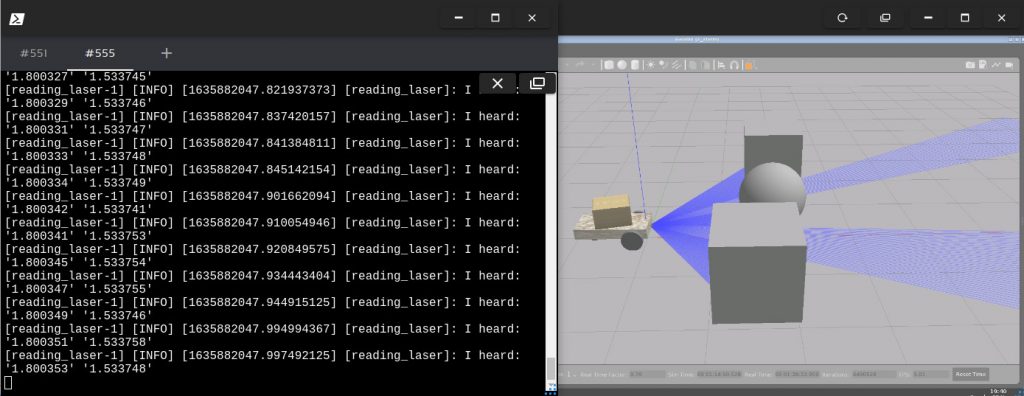

4 – Run the node and map the topic

To run the executable you just created, use:

```bash
ros2 run my_package reading_laser
```

However, the laser values won't show up yet. That's because the topic name laser_scan is hard-coded, while the simulation publishes the scan on /dolly/laser_scan. That's no problem at all, because we can remap topics using launch files. Create a new launch file, ~/ros2_ws/src/my_package/launch/reading_laser.launch.py:

```python
from launch import LaunchDescription
from launch_ros.actions import Node


def generate_launch_description():
    # Start the reading_laser executable and remap its hard-coded
    # "laser_scan" topic to the topic published by the simulation.
    reading_laser = Node(
        package='my_package',
        executable='reading_laser',
        output='screen',
        remappings=[
            ('laser_scan', '/dolly/laser_scan')
        ]
    )

    return LaunchDescription([
        reading_laser
    ])
```

This launch file creates a Node action for the reading_laser executable and sets its remappings attribute to remap laser_scan to /dolly/laser_scan.
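Conceptually, a remapping rule rewrites a name before the node uses it. The sketch below is a deliberately simplified model built around a hypothetical resolve_topic function: rules match names exactly and, in this sketch, the first matching rule wins. It is not how ROS 2 implements remapping, which also deals with namespaces, private "~" names and node-specific rules.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, simplified model of topic remapping: each rule is a
// (from, to) pair applied on an exact name match, and in this sketch the
// first matching rule wins. Namespaces, private "~" names and
// node-specific rules are ignored here.
using RemapRules = std::vector<std::pair<std::string, std::string>>;

std::string resolve_topic(const std::string &name, const RemapRules &rules) {
  for (const auto &[from, to] : rules) {
    if (name == from) return to;
  }
  return name;  // no rule matched: keep the hard-coded name
}
```

With the single rule from the launch file, resolve_topic("laser_scan", rules) yields "/dolly/laser_scan", while any other name passes through unchanged, which is why the node's source code does not need to change.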

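Rebuild the package so the new launch file gets installed (this assumes CMakeLists.txt installs the launch folder, for example with install(DIRECTORY launch DESTINATION share/${PROJECT_NAME}/)), then run the same node using the launch file:

```bash
ros2 launch my_package reading_laser.launch.py
```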

Add some obstacles to the world, and the result should be similar to:
