Brett Aldrich: State Machines for Complex Robot Behavior | Sense Think Act Podcast #10

In this episode, Audrow Nash interviews Brett Aldrich, author of SMACC and CEO of Robosoft AI. Robosoft AI develops and maintains SMACC and SMACC2, event-driven behavior state machine libraries for ROS 1 and ROS 2, respectively. Brett explains SMACC and its origins, discusses other strategies for robot control such as behavior trees, talks about the challenges of developing software for both industry users and hobbyists, and gives some advice for new roboticists.

Q&A: Cathy Wu on developing algorithms to safely integrate robots into our world

By Kim Martineau | MIT Schwarzman College of Computing

Cathy Wu is the Gilbert W. Winslow Assistant Professor of Civil and Environmental Engineering and a member of the MIT Institute for Data, Systems, and Society. As an undergraduate, Wu won MIT’s toughest robotics competition, and as a graduate student took the University of California at Berkeley’s first-ever course on deep reinforcement learning. Now back at MIT, she’s working to improve the flow of robots in Amazon warehouses under the Science Hub, a new collaboration between the tech giant and the MIT Schwarzman College of Computing. Outside of the lab and classroom, Wu can be found running, drawing, pouring lattes at home, and watching YouTube videos on math and infrastructure via 3Blue1Brown and Practical Engineering. She recently took a break from all of that to talk about her work.

Q: What put you on the path to robotics and self-driving cars?

A: My parents always wanted a doctor in the family. However, I’m bad at following instructions and became the wrong kind of doctor! Inspired by my physics and computer science classes in high school, I decided to study engineering. I wanted to help as many people as a medical doctor could.

At MIT, I looked for applications in energy, education, and agriculture, but the self-driving car was the first to grab me. It has yet to let go! Ninety-four percent of serious car crashes are caused by human error and could potentially be prevented by self-driving cars. Autonomous vehicles could also ease traffic congestion, save energy, and improve mobility.

I first learned about self-driving cars from Seth Teller during his guest lecture for the course Mobile Autonomous Systems Lab (MASLAB), in which MIT undergraduates compete to build the best fully functioning robot from scratch. Our ball-fetching bot, Putzputz, won first place. From there, I took more classes in machine learning, computer vision, and transportation, and joined Teller’s lab. I also competed in several mobility-related hackathons, including one sponsored by Hubway, now known as Blue Bike.

Q: You’ve explored ways to help humans and autonomous vehicles interact more smoothly. What makes this problem so hard?

A: Both systems are highly complex, and our classical modeling tools are woefully insufficient. Integrating autonomous vehicles into our existing mobility systems is a huge undertaking. For example, we don’t know whether autonomous vehicles will cut energy use by 40 percent, or double it. We need more powerful tools to cut through the uncertainty. My PhD thesis at Berkeley tried to do this. I developed scalable optimization methods in the areas of robot control, state estimation, and system design. These methods could help decision-makers anticipate future scenarios and design better systems to accommodate both humans and robots.

Q: How is deep reinforcement learning, which combines deep learning with reinforcement learning algorithms, changing robotics?

A: I took John Schulman and Pieter Abbeel’s reinforcement learning class at Berkeley in 2015, shortly after DeepMind published its breakthrough paper in Nature. The team had trained an agent with deep learning and reinforcement learning to play “Space Invaders” and a suite of other Atari games at superhuman levels. That created quite a buzz. A year later, I started to incorporate reinforcement learning into problems involving mixed traffic systems, in which only some cars are automated. I realized that classical control techniques couldn’t handle the complex nonlinear control problems I was formulating.

Deep RL is now mainstream, but it’s by no means pervasive in robotics, which still relies heavily on classical model-based control and planning methods. Deep learning continues to be important for processing raw sensor data like camera images and radio waves, and reinforcement learning is gradually being incorporated. I see traffic systems as gigantic multi-robot systems. I’m excited for an upcoming collaboration with Utah’s Department of Transportation to apply reinforcement learning to coordinate cars with traffic signals, reducing congestion and thus carbon emissions.

Q: You’ve talked about the MIT course, 6.007 (Signals and Systems), and its impact on you. What about it spoke to you?

A: The mindset: problems that look messy can be analyzed with common, and sometimes simple, tools. Signals are transformed by systems in various ways, but what do these abstract terms mean, anyway? A mechanical system can take a signal like gears turning at some speed and transform it into a lever turning at another speed. A digital system can take binary digits and turn them into other binary digits or a string of letters or an image. Financial systems can take news and transform it via millions of trading decisions into stock prices. People take in signals every day through advertisements, job offers, gossip, and so on, and translate them into actions that in turn influence society and other people. This humble class on signals and systems linked mechanical, digital, and societal systems and showed me how foundational tools can cut through the noise.

Q: In your project with Amazon you’re training warehouse robots to pick up, sort, and deliver goods. What are the technical challenges?

A: This project involves assigning robots to a given task and routing them there. [Professor] Cynthia Barnhart’s team is focused on task assignment, and mine on path planning. Both problems are considered combinatorial optimization problems because the solution involves a combination of choices. As the number of tasks and robots increases, the number of possible solutions grows exponentially. It’s called the curse of dimensionality. Both problems are what we call NP-hard; there may not be an efficient algorithm to solve them. Our goal is to devise a shortcut.
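To make that growth concrete, here is a brute-force sketch of the simplest possible version: n robots, n tasks, a made-up cost matrix, and an exhaustive search over every one-to-one assignment. The cost values and function name are hypothetical. Note that this plain one-to-one assignment is actually solvable in polynomial time (for example, with the Hungarian algorithm); the hard warehouse problems add timing, paths, and robot-robot conflicts, which this toy version leaves out. It only illustrates why naive enumeration stops scaling.

```python
import itertools
import math

def brute_force_assignment(cost):
    """Try every one-to-one assignment of robots (rows) to tasks (columns).

    cost[r][t] is a hypothetical cost (e.g. travel distance) for robot r doing task t.
    Returns (best_total_cost, best_permutation, number_of_candidates_checked).
    """
    n = len(cost)
    best_total, best_perm, checked = math.inf, None, 0
    for perm in itertools.permutations(range(n)):  # perm[r] = task given to robot r
        checked += 1
        total = sum(cost[r][perm[r]] for r in range(n))
        if total < best_total:
            best_total, best_perm = total, perm
    return best_total, best_perm, checked

# Toy 3x3 cost matrix (made-up numbers for illustration)
cost = [[4, 1, 3],
        [2, 0, 5],
        [3, 2, 2]]
print(brute_force_assignment(cost))  # → (5, (1, 0, 2), 6)

# The number of candidate assignments grows factorially with fleet size
for n in (3, 10, 20):
    print(n, math.factorial(n))
```

Three robots need 6 candidates, but 20 robots already need about 2.4 × 10^18, which is why practical planners look for structure or shortcuts instead of enumerating.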

Routing a single robot for a single task isn’t difficult. It’s like using Google Maps to find the shortest path home. It can be solved efficiently with several algorithms, including Dijkstra’s. But warehouses resemble small cities with hundreds of robots. When traffic jams occur, customers can’t get their packages as quickly. Our goal is to develop algorithms that find the most efficient paths for all of the robots.
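For a single robot, the shortest-path search really is easy. A minimal sketch of Dijkstra’s algorithm, assuming the warehouse floor is a 4-connected grid with unit-cost moves and `#` marking blocked shelf cells (a hypothetical layout, not an actual Amazon map):

```python
import heapq

def dijkstra_grid(grid, start, goal):
    """Shortest path on a 4-connected grid; '#' cells are blocked.

    Every move costs 1 here (an assumption); real warehouse maps could use
    edge weights for turns, congestion, etc.
    Returns the path as a list of (row, col) cells, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])
    dist = {start: 0}
    prev = {}
    heap = [(0, start)]
    while heap:
        d, cell = heapq.heappop(heap)
        if cell == goal:
            break  # goal popped: its distance is final
        if d > dist[cell]:
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != '#':
                nd = d + 1
                if nd < dist.get((nr, nc), float('inf')):
                    dist[(nr, nc)] = nd
                    prev[(nr, nc)] = cell
                    heapq.heappush(heap, (nd, (nr, nc)))
    if goal not in dist:
        return None
    # Walk the predecessor links back from the goal
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]

warehouse = ["....",
             ".##.",
             "...."]
path = dijkstra_grid(warehouse, (0, 0), (2, 3))
print(len(path) - 1)  # number of moves → 5
```

Planning each robot independently like this ignores conflicts between robots in space and time, which is exactly what makes the multi-robot version hard.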

Q: Are there other applications?

A: Yes. The algorithms we test in Amazon warehouses might one day help to ease congestion in real cities. Other potential applications include controlling planes on runways, swarms of drones in the air, and even characters in video games. These algorithms could also be used for other robotic planning tasks like scheduling and routing.

Q: AI is evolving rapidly. Where do you hope to see the big breakthroughs coming?

A: I’d like to see deep learning and deep RL used to solve societal problems involving mobility, infrastructure, social media, health care, and education. Deep RL now has a toehold in robotics and industrial applications like chip design, but we still need to be careful in applying it to systems with humans in the loop. Ultimately, we want to design systems for people. Currently, we simply don’t have the right tools.

Q: What worries you most about AI taking on more and more specialized tasks?

A: AI has the potential for tremendous good, but it could also help to accelerate the widening gap between the haves and the have-nots. Our political and regulatory systems could help to integrate AI into society and minimize job losses and income inequality, but I worry that they’re not equipped yet to handle the firehose of AI.

Q: What’s the last great book you read?

A: “How to Avoid a Climate Disaster,” by Bill Gates. I absolutely loved the way that Gates was able to take an overwhelmingly complex topic and distill it down into words that everyone can understand. His optimism inspires me to keep pushing on applications of AI and robotics to help avoid a climate disaster.

Holiday robot videos 2021 (updated)

Happy holidays everyone! Here are some more robot videos to get you into the holiday spirit.

Have a last minute holiday robot video of your own that you’d like to share? Send your submissions to daniel.carrillozapata@robohub.org

And as a late entry, here we have the video from PAL Robotics:

Robot reinforcement learning: safety in real-world applications

How can we make a robot learn in the real world while ensuring safety? In this work, we show how this problem can be tackled. The key idea is to exploit domain knowledge and use the constraint definition to our advantage. Following our approach, it’s possible to implement learning robotic agents that can explore and learn in an arbitrary environment while ensuring safety at the same time.

Safety and learning in robots

Safety is a fundamental feature in real-world robotics applications: robots should not damage the environment or themselves, and they must ensure the safety of people operating around them. To ensure safety when we deploy a new application, we want to avoid constraint violations at all times. These stringent safety constraints are difficult to enforce in a reinforcement learning setting, which is why it is hard to deploy learning agents in the real world. Classical reinforcement learning agents use random exploration, such as Gaussian policies, to act in the environment and extract useful knowledge to improve task performance. However, random exploration may cause constraint violations. These violations must be avoided at all costs on robotic platforms, as they often result in major system failures.
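To see how quickly random exploration runs into a constraint, here is a small sketch with hypothetical numbers: a single joint 0.05 rad below its upper position limit, a zero-mean Gaussian policy over velocity commands with unit standard deviation, and a 10 Hz controller (dt = 0.1 s). It compares a Monte Carlo estimate of the violation rate with the closed-form Gaussian tail probability.

```python
import math
import random

def violation_fraction(q, q_max, mean_vel, std_vel, dt, n_samples=10_000, seed=0):
    """Fraction of Gaussian-sampled velocity commands that would push a joint
    past its upper position limit after one control step of length dt."""
    rng = random.Random(seed)
    violations = 0
    for _ in range(n_samples):
        v = rng.gauss(mean_vel, std_vel)  # Gaussian exploration on the action
        if q + dt * v > q_max:            # one Euler step of the joint position
            violations += 1
    return violations / n_samples

# Hypothetical setup: joint 0.05 rad below its limit, zero-mean policy, 10 Hz control.
empirical = violation_fraction(q=0.95, q_max=1.0, mean_vel=0.0, std_vel=1.0, dt=0.1)
# Closed form: P(v > (q_max - q) / dt) for v ~ N(0, 1)
analytic = 0.5 * math.erfc(((1.0 - 0.95) / 0.1) / math.sqrt(2))
print(round(empirical, 3), round(analytic, 3))
```

With these numbers, roughly 31 percent of sampled actions would cross the limit in a single step, which illustrates why unconstrained Gaussian exploration is a poor fit for real hardware.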

While the robotics setting is challenging, it is also well known and well studied, so we can exploit some key results and knowledge from the field. Indeed, a robot’s kinematics and dynamics are often known and can be exploited by the learning system. Also, physical constraints, e.g., avoiding collisions and enforcing joint limits, can be written in analytical form. All this information can be exploited by the learning robot.

Our approach

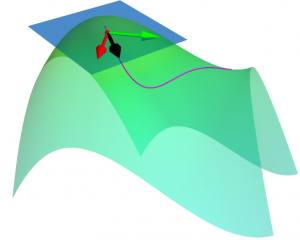

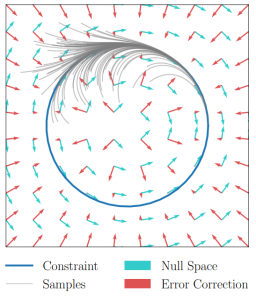

Many reinforcement learning approaches try to solve the safety problem by incorporating the constraint information in the learning process. This approach often results in slower learning, while still not ensuring safety during the whole learning process. Instead, we present a novel point of view on the problem, introducing ATACOM (Acting on the TAngent space of the COnstraint Manifold). Unlike other state-of-the-art approaches, ATACOM tries to create a safe action space in which every action is inherently safe. To do so, we need to construct the constraint manifold and exploit the basic domain knowledge of the agent. Once we have the constraint manifold, we define our action space as the tangent space to the constraint manifold.

We can construct the constraint manifold using arbitrary differentiable constraints. The only requirement is that the constraint function must depend only on controllable variables, i.e., the variables that we can directly control with our control action, such as the robot joint positions and velocities.
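For instance, a boundary constraint on the end-effector can still be written purely in terms of joint positions through forward kinematics. The sketch below assumes a hypothetical planar 2-link arm with made-up link lengths and a table of half-width `Y_MAX`; it illustrates the requirement and is not the robot or constraint set used in the paper.

```python
import math

L1, L2 = 0.5, 0.4  # hypothetical link lengths (m)
Y_MAX = 0.6        # hypothetical table half-width (m)

def end_effector(q):
    """Forward kinematics of a planar 2-link arm: joint angles -> (x, y)."""
    q1, q2 = q
    x = L1 * math.cos(q1) + L2 * math.cos(q1 + q2)
    y = L1 * math.sin(q1) + L2 * math.sin(q1 + q2)
    return x, y

def boundary_constraints(q):
    """Inequality constraints c(q) <= 0 keeping the end-effector inside |y| <= Y_MAX.
    They depend only on the joint positions q, which the controller commands."""
    _, y = end_effector(q)
    return [y - Y_MAX, -Y_MAX - y]

def boundary_jacobian(q):
    """Analytic derivatives of the two constraints with respect to (q1, q2)."""
    q1, q2 = q
    dy = [L1 * math.cos(q1) + L2 * math.cos(q1 + q2), L2 * math.cos(q1 + q2)]
    return [dy, [-d for d in dy]]

print(boundary_constraints([0.0, 0.0]))  # → [-0.6, -0.6]: both satisfied
```

Because the constraints are smooth functions of q with an analytic Jacobian, they meet the differentiability requirement stated above.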

We can support both equality and inequality constraints. Inequality constraints are particularly important as they can be used to avoid specific areas of the state space or to enforce the joint limits. However, they don’t define a manifold. To obtain a manifold, we transform the inequality constraints into equality constraints by introducing slack variables.
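As a worked example in our own notation (the squared slack below is one common choice, assumed here rather than taken from the paper), an upper joint limit q <= q_max turns into an equality once a slack variable s is introduced:

```latex
c(q) = q - q_{\max} \le 0
\quad\Longleftrightarrow\quad
\exists\, s \in \mathbb{R}:\; c(q) + \tfrac{1}{2}s^{2} = 0,
\qquad s = \sqrt{-2\,c(q)} = \sqrt{2\,(q_{\max} - q)}.
```

Because s^2/2 >= 0, any (q, s) satisfying the equality has c(q) <= 0. The augmented constraint lives in the joint-plus-slack space (q, s); here its gradient (1, s) never vanishes, so its zero set is a smooth curve (the parabola q = q_max - s^2/2), i.e., a genuine manifold.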

With ATACOM, we can ensure safety by taking actions in the tangent space of the constraint manifold. An intuitive way to see why this works is to consider motion on the surface of a sphere: any point with a velocity tangent to the sphere will keep moving on the sphere’s surface. The same idea can be extended to more complex robotic systems by considering the acceleration of the system variables (or of the generalized coordinates, for a mechanical system) instead of velocities.
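A minimal sketch of the sphere intuition, under simplifying assumptions: the constraint is c(x) = ||x||^2 - 1, and rather than defining the action space directly on the tangent space, as ATACOM does, we simply project a proposed velocity onto the tangent plane by removing its component along the constraint Jacobian. Function names and numbers are hypothetical.

```python
def constraint(x):
    """c(x) = ||x||^2 - 1: zero exactly on the unit sphere."""
    return sum(xi * xi for xi in x) - 1.0

def constraint_jacobian(x):
    """Gradient of c(x), i.e. the 1 x 3 Jacobian J(x) = 2 x^T."""
    return [2.0 * xi for xi in x]

def project_to_tangent(x, v):
    """Remove the component of v along J(x): v_t = v - (J.v / J.J) J.
    The result satisfies J(x) . v_t = 0, i.e. it is tangent to the sphere at x."""
    J = constraint_jacobian(x)
    scale = sum(j * vi for j, vi in zip(J, v)) / sum(j * j for j in J)
    return [vi - scale * j for vi, j in zip(v, J)]

x = [1.0, 0.0, 0.0]          # a point on the sphere
proposed = [0.5, 1.0, -2.0]  # an arbitrary (e.g. policy-sampled) velocity
v_safe = project_to_tangent(x, proposed)
print(v_safe)  # → [0.0, 1.0, -2.0]: the radial component 0.5 has been removed
```

In continuous time, following such a velocity keeps c(x) = 0 exactly, since dc/dt = J(x) v_safe = 0.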

The above-mentioned framework only works for continuous-time systems, in which the control action is the instantaneous velocity or acceleration. Unfortunately, the vast majority of robotic controllers and reinforcement learning approaches are discrete-time digital controllers. Thus, even moving along the tangent direction of the constraint manifold will result in constraint violations. These violations can always be reduced by increasing the control frequency. However, error accumulates over time, causing a drift away from the constraint manifold. To solve this issue, we introduce an error correction term that keeps the system on the constraint manifold. In our work, we implement this term as a simple proportional controller.
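The drift and its fix can be reproduced in a few lines. This sketch uses the unit-sphere constraint again, integrates with explicit Euler steps to mimic a discrete-time controller, and compares the worst constraint value with and without a proportional correction term -K J^+ c(x) (for a single constraint, J^+ = J^T / (J J^T)). The constant proposed velocity, step size, and gain K = 10 are hypothetical choices, not values from the paper.

```python
def constraint(x):
    return sum(xi * xi for xi in x) - 1.0  # zero on the unit sphere

def jacobian(x):
    return [2.0 * xi for xi in x]  # J(x) = 2 x^T

def safe_velocity(x, proposed, gain):
    """Tangent-space velocity plus a proportional correction toward c(x) = 0.

    correction = -gain * J^+ c(x), with J^+ = J^T / (J J^T) for one constraint.
    With gain = 0 this is the pure tangent projection.
    """
    J = jacobian(x)
    JJ = sum(j * j for j in J)
    along = sum(j * v for j, v in zip(J, proposed)) / JJ
    tangent = [v - along * j for v, j in zip(proposed, J)]
    corr = -gain * constraint(x) / JJ
    return [t + corr * j for t, j in zip(tangent, J)]

def simulate(gain, dt=0.05, steps=200):
    """Return the worst |c(x)| seen over an Euler-integrated trajectory."""
    x = [1.0, 0.0, 0.0]
    proposed = [0.0, 1.0, 0.5]  # hypothetical constant policy output
    worst = 0.0
    for _ in range(steps):
        u = safe_velocity(x, proposed, gain)
        x = [xi + dt * ui for xi, ui in zip(x, u)]  # discrete-time (Euler) update
        worst = max(worst, abs(constraint(x)))
    return worst

print(simulate(gain=0.0), simulate(gain=10.0))
```

Without correction, every Euler step along the tangent pushes the point slightly off the sphere and the error only accumulates; with the proportional term, c(x) shrinks by roughly a factor (1 - K*dt) per step, so in this toy setup K*dt must stay between 0 and 2 or the correction itself overshoots.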

Finally, many robotic systems cannot be controlled directly by velocities or accelerations. However, if an inverse dynamics model or a tracking controller is available, we can use it to compute the correct control action.
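As an illustration of the inverse-dynamics route, consider a single-joint pendulum: a point mass m on a massless rod of length l with viscous friction b, all values hypothetical. If the safe action is a desired joint acceleration, the torque that realizes it follows directly from the equation of motion.

```python
import math

def pendulum_inverse_dynamics(q, dq, ddq_des, m=1.0, l=0.5, b=0.1, g=9.81):
    """Torque that produces the desired joint acceleration ddq_des for a
    point-mass pendulum: tau = m l^2 ddq + b dq + m g l sin(q).
    q is measured from the downward vertical; all parameters are assumed."""
    return m * l**2 * ddq_des + b * dq + m * g * l * math.sin(q)

def pendulum_forward_dynamics(q, dq, tau, m=1.0, l=0.5, b=0.1, g=9.81):
    """Acceleration produced by torque tau (used here only to check the inverse)."""
    return (tau - b * dq - m * g * l * math.sin(q)) / (m * l**2)

tau = pendulum_inverse_dynamics(q=0.3, dq=-0.2, ddq_des=1.5)
print(pendulum_forward_dynamics(q=0.3, dq=-0.2, tau=tau))  # recovers ~1.5
```

For a multi-joint arm, the same role is played by the full rigid-body model, tau = M(q) ddq + C(q, dq) dq + g(q).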

Results

We tested ATACOM on a simulated air hockey task with two different types of robots. The first is a planar robot. In this task, we enforce joint velocity limits and prevent the end-effector from colliding with the table boundaries.

The second robot is a KUKA iiwa 14 arm. In this scenario, we constrain the end-effector to move on the planar surface and ensure that no collision occurs between the robot arm and the table.

In both experiments, we can learn a safe policy using Soft Actor-Critic (SAC) as the learning algorithm in combination with the ATACOM framework. With our approach, we are able to learn good policies quickly while keeping constraint violations low at every timestep. Unfortunately, the constraint violation cannot be exactly zero due to discretization, but it can be made arbitrarily small. This is not a major issue in real-world systems, as they are affected by noisy measurements and non-ideal actuation.

Is the safety problem solved now?

The key question is whether ATACOM can provide any safety guarantees. Unfortunately, in general it cannot. What we can enforce are state constraints at each timestep. This includes a wide class of constraints, such as fixed obstacle avoidance, joint limits, and surface constraints. We can extend our method to constraints involving variables that are not (directly) controllable. While we can ensure safety to a certain extent in this scenario as well, we cannot guarantee that the constraints will not be violated during the whole trajectory. Indeed, if the uncontrollable variables act adversarially, they might find a long-term strategy that eventually causes a constraint violation. A simple example is a prey-predator scenario: even if we ensure that the prey avoids each predator, a group of predators can execute a high-level strategy and trap the prey in the long term.

Thus, with ATACOM we can ensure safety at the step level, but not long-term safety, which requires reasoning at the trajectory level. More advanced techniques will be needed to ensure this kind of safety.

Find out more

The authors were best paper award finalists at CoRL this year, for their work: Robot reinforcement learning on the constraint manifold.

- Read the paper.

- The GitHub page for the work is here.

- Read more about the winning and shortlisted papers for the CoRL awards here.