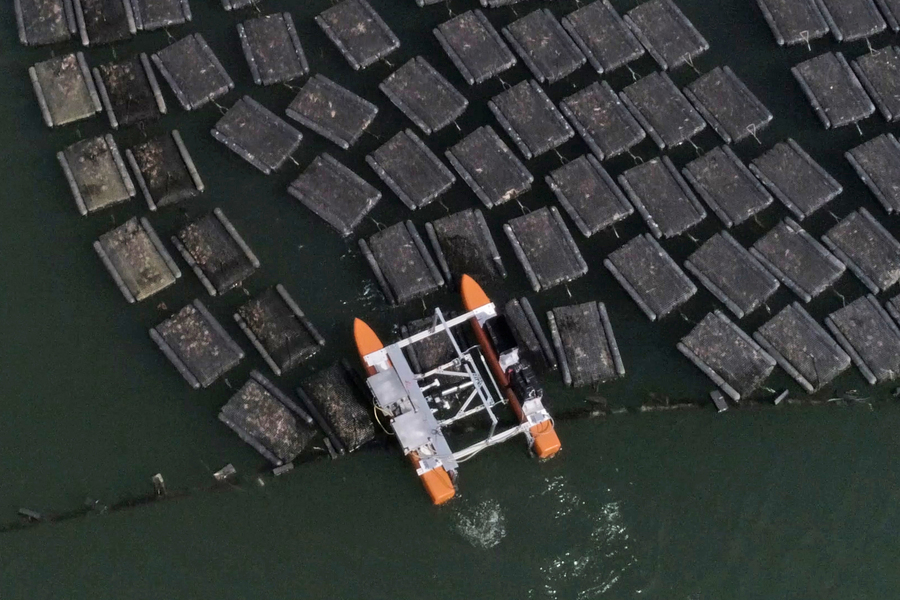

Meet the Oystamaran

MIT students and researchers from MIT Sea Grant work with local oyster farmers to advance the aquaculture industry by seeking solutions to some of its biggest challenges. Currently, oyster bags have to be manually flipped every one to two weeks to reduce biofouling. Image: John Freidah, MIT MechE

By Michaela Jarvis | Department of Mechanical Engineering

When Michelle Kornberg was about to graduate from MIT, she wanted to use her knowledge of mechanical and ocean engineering to make the world a better place. Luckily, she found the perfect senior capstone class project: supporting sustainable seafood by helping aquaculture farmers grow oysters.

“It’s our responsibility to use our skills and opportunities to work on problems that really matter,” says Kornberg, who now works for an aquaculture company called Innovasea. “Food sustainability is incredibly important from an environmental standpoint, of course, but it also matters on a social level. The most vulnerable will be hurt worst by the climate crisis, and I think food sustainability and availability really matters on that front.”

The project undertaken by Kornberg’s capstone class, 2.017 (Design of Electromechanical Robotic Systems), came out of conversations between Michael Triantafyllou, who is MIT’s Henry L. and Grace Doherty Professor in Ocean Science and Engineering and director of MIT Sea Grant, and Dan Ward. Ward, a seasoned oyster farmer and marine biologist, owns Ward Aquafarms on Cape Cod and has worked extensively to advance the aquaculture industry by seeking solutions to some of its biggest challenges.

Speaking with Triantafyllou at MIT Sea Grant (part of a network of university-based programs established by the federal government to protect the coastal environment and economy), Ward had explained that each of his thousands of floating mesh oyster bags needs to be turned over about 11 times a year. The flipping allows algae, barnacles, and other "biofouling" organisms that grow on the part of the bag beneath the water's surface to be exposed to air and light, so they can dry and chip off. If this task is not performed, they block the water flow that the oysters need to grow.

The bags are flipped by a farmworker in a kayak, and the task is monotonous, often performed in rough water and bad weather, and ergonomically injurious. “It’s kind of awful, generally speaking,” Ward says, adding that he pays about $3,500 per year to have the bags turned over at each of his two farm sites — and struggles to find workers who want to do the job of flipping bags that can grow to a weight of 60 or 70 pounds just before the oysters are harvested.

Presented with this problem, the capstone class Kornberg was in — composed of six students in mechanical engineering, ocean engineering, and electrical engineering and computer science — brainstormed solutions. Most of the solutions, Kornberg says, involved an autonomous robot that would take over the bag-flipping. It was during that class that the original version of the “Oystamaran,” a catamaran with a flipping mechanism between its two hulls, was born.

Students from mechanical engineering, ocean engineering, and electrical engineering and computer science work together to design a robot that helps flip oyster bags at Ward Aquafarms on Cape Cod. The "Oystamaran" robot uses a vision system to position and flip the bags. Image: Lauren Futami, MIT MechE

Ward's involvement in the project has been important to its evolution. Through his work on advisory boards, he says, he has reviewed many projects that propose new technologies for aquaculture. Often, they don't correspond to the actual challenges the industry faces.

“It was always ‘I already have this remotely operated vehicle; would it be useful to you as an oyster farmer if I strapped on some kind of sensor?’” Ward says. “They try to fit robotics into aquaculture without any industry collaboration, which leads to a robotic product that doesn’t solve any of the issues we experience out on the farm. Having the opportunity to work with MIT Sea Grant to really start from the ground up has been exciting. Their approach has been, ‘What’s the problem, and what’s the best way to solve the problem?’ We do have a real need for robotics in aquaculture, but you have to come at it from the customer-first, not the technology-first, perspective.”

Triantafyllou says that while the task the robot performs is similar to work done by robots in other industries, the “special difficulty” students faced while designing the Oystamaran was its work environment.

“You have a floating device, which must be self-propelled, and which must find these objects in an environment that is not neat,” Triantafyllou says. “It’s a combination of vision and navigation in an environment that changes, with currents, wind, and waves. Very quickly, it becomes a complicated task.”

Kornberg built the original central flipping mechanism and the basic structure of the vessel as a staff member at MIT Sea Grant after graduating in May 2020. She then worked as a lab instructor for the next capstone class related to the project, in spring 2021. Andrew Bennett, education administrator at MIT Sea Grant, co-taught that class, in which students designed Oystamaran version 2.0; it was tested at Ward Aquafarms and managed to flip several rows of bags while being controlled remotely. Next steps will involve making the vessel more autonomous, so it can be launched, navigate on its own to the oyster bags, flip them, and return to the launching point. A third capstone class related to the project will take place this spring.

The students operate the “Oystamaran” robot remotely from the boat. Image: John Freidah, MIT MechE

Bennett says an ideal project outcome would be, “We have proven the concept, and now somebody in industry says, ‘You know, there’s money to be made in oysters. I think I’ll take over.’ And then we hand it off to them.”

Meanwhile, he says, an unexpected challenge arose in getting the Oystamaran to go between tightly packed rows of oyster bags in the center of an array.

“How does a robot shimmy in between things without wrecking something? It’s got to wiggle in somehow, which is a fascinating controls problem,” Bennett says, adding that the problem is a source of excitement, rather than frustration, to him. “I love a new challenge, and I really love when I find a problem that no one expected. Those are the fun ones.”

Triantafyllou calls the Oystamaran “a first for the industry,” explaining that the project has demonstrated that robots can perform extremely useful tasks in the ocean, and will serve as a model for future innovations in aquaculture.

“Just by showing the way, this may be the first of a number of robots,” he says. “It will attract talent to ocean farming, which is a great challenge, and also a benefit for society to have a reliable means of producing food from the ocean.”

Exploring ROS2 with a wheeled robot – #4 – Obstacle avoidance

By Marco Arruda

In this post you'll learn how to program a robot to avoid obstacles using ROS2 and C++. By the end of the post, the Dolly robot will move autonomously through a scene with many obstacles, simulated in Gazebo 11.

You’ll learn:

- How to publish AND subscribe to topics in the same ROS2 node

- How to avoid obstacles

- How to implement your own algorithm in ROS2 and C++

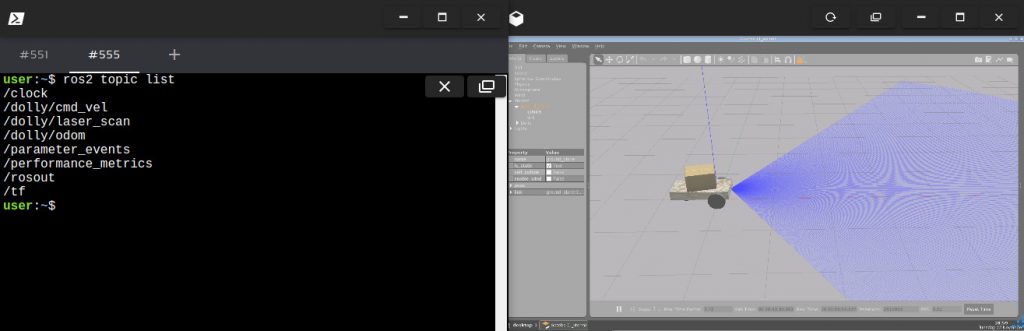

1 – Set up the environment – Launch the simulation

Before anything else, make sure you have the rosject from the previous post; you can copy it from here.

Launch the simulation in one webshell and, in a different tab, check the topics that are available. You should get something similar to the image below:

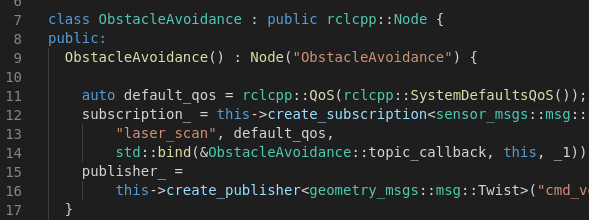

2 – Create the node

To implement our obstacle avoidance algorithm, let's create a new executable in the file ~/ros2_ws/src/my_package/src/obstacle_avoidance.cpp:

```cpp
#include <functional>  // std::bind
#include <memory>      // std::make_shared

#include "geometry_msgs/msg/twist.hpp"     // Twist
#include "rclcpp/rclcpp.hpp"               // ROS Core Libraries
#include "sensor_msgs/msg/laser_scan.hpp"  // Laser Scan

using std::placeholders::_1;

class ObstacleAvoidance : public rclcpp::Node {
public:
  ObstacleAvoidance() : Node("ObstacleAvoidance") {
    auto default_qos = rclcpp::QoS(rclcpp::SystemDefaultsQoS());
    // Subscribe to the laser readings
    subscription_ = this->create_subscription<sensor_msgs::msg::LaserScan>(
        "laser_scan", default_qos,
        std::bind(&ObstacleAvoidance::topic_callback, this, _1));
    // Publish velocity commands for the robot's diff drive
    publisher_ =
        this->create_publisher<geometry_msgs::msg::Twist>("cmd_vel", 10);
  }

private:
  void topic_callback(const sensor_msgs::msg::LaserScan::SharedPtr _msg) {
    // 200 readings, from right to left, from -57 to 57 degrees
    // calculate new velocity cmd from the closest reading
    float min = 10;
    for (float current : _msg->ranges) {
      if (current < min) {
        min = current;
      }
    }
    auto message = this->calculateVelMsg(min);
    publisher_->publish(message);
  }

  geometry_msgs::msg::Twist calculateVelMsg(float distance) {
    auto msg = geometry_msgs::msg::Twist();
    // logic
    RCLCPP_INFO(this->get_logger(), "Distance is: '%f'", distance);
    if (distance < 1) {
      // turn around
      msg.linear.x = 0;
      msg.angular.z = 0.3;
    } else {
      // go straight ahead
      msg.linear.x = 0.3;
      msg.angular.z = 0;
    }
    return msg;
  }

  rclcpp::Publisher<geometry_msgs::msg::Twist>::SharedPtr publisher_;
  rclcpp::Subscription<sensor_msgs::msg::LaserScan>::SharedPtr subscription_;
};

int main(int argc, char *argv[]) {
  rclcpp::init(argc, argv);
  rclcpp::spin(std::make_shared<ObstacleAvoidance>());
  rclcpp::shutdown();
  return 0;
}
```

In the main function, we:

- Initialize ROS 2 with rclcpp::init

- Keep the node running and processing callbacks with rclcpp::spin

Inside the class constructor, we:

- Subscribe to the laser scan messages: subscription_

- Create the publisher for velocity commands to the robot's diff drive: publisher_

The obstacle avoidance intelligence goes inside the method calculateVelMsg. This is where decisions are made based on the laser readings. Notice that it depends purely on the minimum distance read from the message: if anything is closer than 1 m, the robot turns in place; otherwise it drives straight ahead.
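Because that rule is a pure function of the scan, it can be pulled out of the node and checked without Gazebo or ROS. The sketch below mirrors it under a few assumptions: `VelocityCommand` is a hypothetical stand-in for `geometry_msgs::msg::Twist`, `minimumRange` and `calculateVelCmd` are illustrative names, and non-finite readings (`inf`/`NaN`, which a real `LaserScan` may contain) are skipped explicitly.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical stand-in for geometry_msgs::msg::Twist with only the
// two fields the tutorial sets.
struct VelocityCommand {
  double linear_x = 0.0;
  double angular_z = 0.0;
};

// Smallest finite reading, starting from the same 10 m default as the node.
// Non-finite values (inf/NaN) are skipped explicitly.
float minimumRange(const std::vector<float>& ranges, float default_max = 10.0f) {
  assert(default_max > 0.0f);
  float min = default_max;
  for (float r : ranges) {
    if (std::isfinite(r) && r < min) {
      min = r;
    }
  }
  return min;
}

// Same rule as calculateVelMsg: rotate in place when something is closer
// than 1 m, otherwise drive straight ahead.
VelocityCommand calculateVelCmd(float distance) {
  VelocityCommand cmd;
  if (distance < 1.0f) {
    cmd.angular_z = 0.3;  // turn around
  } else {
    cmd.linear_x = 0.3;  // go straight ahead
  }
  return cmd;
}
```

For example, `calculateVelCmd(minimumRange(ranges))` on a scan whose closest reading is 0.6 m returns `linear_x = 0` and `angular_z = 0.3`, the "turn around" branch.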

If you want to customize calculateVelMsg, for example to consider only the readings in front of the robot or to check whether it is better to turn left or right, this is the place to work on! Remember to adjust the method's parameters too: as it stands, only the minimum value is passed to it.
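One possible customization, sketched below, looks only at a cone in front of the robot and, when that cone is blocked, turns toward the side whose closest obstacle is farther away. It assumes the `sensor_msgs/LaserScan` convention that reading `i` lies at `angle_min + i * angle_increment`, with angles growing from right (negative) to left (positive) as the node's comment describes, and that a positive `angular_z` turns left. The 30° half-width, the names `minInSector` and `chooseCommand`, and the stand-in `VelocityCommand` struct are illustrative choices, not part of the tutorial.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for geometry_msgs::msg::Twist.
struct VelocityCommand {
  double linear_x = 0.0;
  double angular_z = 0.0;  // positive = counter-clockwise (turn left)
};

// Smallest finite reading whose angle lies in [lo, hi] radians.
float minInSector(const std::vector<float>& ranges, float angle_min,
                  float angle_increment, float lo, float hi,
                  float default_max = 10.0f) {
  assert(angle_increment > 0.0f);
  float min = default_max;
  for (std::size_t i = 0; i < ranges.size(); ++i) {
    float angle = angle_min + static_cast<float>(i) * angle_increment;
    float r = ranges[i];
    if (angle >= lo && angle <= hi && std::isfinite(r) && r < min) {
      min = r;
    }
  }
  return min;
}

VelocityCommand chooseCommand(const std::vector<float>& ranges,
                              float angle_min, float angle_increment) {
  const float kPi = 3.14159265f;
  const float front_half_width = 30.0f * kPi / 180.0f;  // assumed cone
  const float threshold = 1.0f;                         // same 1 m as the node

  float front = minInSector(ranges, angle_min, angle_increment,
                            -front_half_width, front_half_width);
  VelocityCommand cmd;
  if (front >= threshold) {
    cmd.linear_x = 0.3;  // path ahead is clear: go straight
    return cmd;
  }
  // Blocked ahead: turn toward the side whose closest obstacle is farther.
  float right = minInSector(ranges, angle_min, angle_increment, -kPi,
                            -front_half_width);
  float left = minInSector(ranges, angle_min, angle_increment,
                           front_half_width, kPi);
  cmd.angular_z = (left > right) ? 0.3 : -0.3;
  return cmd;
}
```

In the node, the three inputs would come from `_msg->ranges`, `_msg->angle_min` and `_msg->angle_increment`, and calculateVelMsg would take the resulting decision instead of a single distance.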

3 – Compile the node

This executable depends on both geometry_msgs and sensor_msgs, which we added in the two previous posts of this series. Make sure you have them at the beginning of the ~/ros2_ws/src/my_package/CMakeLists.txt file:

```cmake
# find dependencies
find_package(ament_cmake REQUIRED)
find_package(rclcpp REQUIRED)
find_package(geometry_msgs REQUIRED)
find_package(sensor_msgs REQUIRED)
```

And finally, add the executable and install it:

```cmake
# obstacle avoidance
add_executable(obstacle_avoidance src/obstacle_avoidance.cpp)
ament_target_dependencies(obstacle_avoidance rclcpp sensor_msgs geometry_msgs)

...

install(TARGETS
  reading_laser
  moving_robot
  obstacle_avoidance
  DESTINATION lib/${PROJECT_NAME}/
)
```

Compile the package:

```shell
colcon build --symlink-install --packages-select my_package
```

4 – Run the node

To run it, use the following command:

```shell
ros2 run my_package obstacle_avoidance
```

It will not work for this robot! Why is that? We are subscribing and publishing to generic topics, cmd_vel and laser_scan, while the simulated Dolly robot uses /dolly/cmd_vel and /dolly/laser_scan.

We need a launch file to remap these topics. Let's create one at ~/ros2_ws/src/my_package/launch/obstacle_avoidance.launch.py:

```python
from launch import LaunchDescription
from launch_ros.actions import Node


def generate_launch_description():
    obstacle_avoidance = Node(
        package='my_package',
        executable='obstacle_avoidance',
        output='screen',
        remappings=[
            ('laser_scan', '/dolly/laser_scan'),
            ('cmd_vel', '/dolly/cmd_vel'),
        ]
    )
    return LaunchDescription([obstacle_avoidance])
```
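Remapping leaves the node's code untouched: each pair in `remappings` tells ROS 2 to use the second name wherever the node asks for the first. As a toy model of that idea only (real name resolution in ROS 2 also handles namespaces, `~` private names and node-specific rules), the sketch below treats the rules as an exact-match lookup table; `RemapRules` and `resolveTopic` are hypothetical names.

```cpp
#include <cassert>
#include <map>
#include <string>

// Toy model of remapping: a table of from -> to topic-name substitutions.
using RemapRules = std::map<std::string, std::string>;

// Returns the remapped name if a rule matches exactly; otherwise the node
// keeps the name it asked for.
std::string resolveTopic(const RemapRules& rules, const std::string& name) {
  assert(!name.empty());
  auto it = rules.find(name);
  return it != rules.end() ? it->second : name;
}
```

With the two pairs from the launch file, `resolveTopic` maps `laser_scan` to `/dolly/laser_scan` and `cmd_vel` to `/dolly/cmd_vel`, while any other topic name passes through unchanged.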

Recompile the package, source the workspace once more and launch it:

```shell
colcon build --symlink-install --packages-select my_package
source ~/ros2_ws/install/setup.bash
ros2 launch my_package obstacle_avoidance.launch.py
```


The post Exploring ROS2 with a wheeled robot – #4 – Obstacle Avoidance appeared first on The Construct.