A 7-foot-tall robot at Dallas Love Field is watching for unmasked travelers and curbside loiterers

Soft robotic origami crawlers

Robots are creating images and telling jokes: Five things to know about foundation models and the next generation of AI

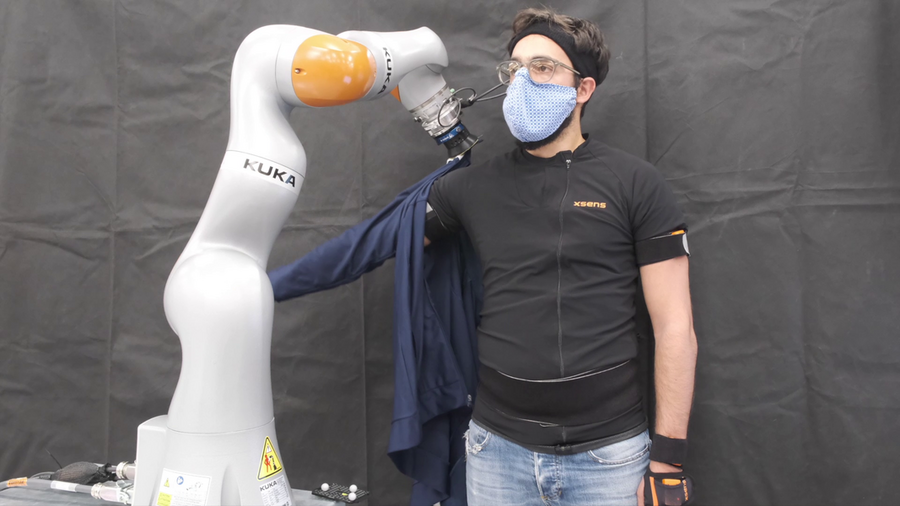

Robots dress humans without the full picture

The robot seen here can’t see the human arm during the entire dressing process, yet it manages to successfully get a jacket sleeve pulled onto the arm. Photo courtesy of MIT CSAIL.

By Steve Nadis | MIT CSAIL

Robots are already adept at certain things, such as lifting objects that are too heavy or cumbersome for people to manage. Another application they’re well suited for is the precision assembly of items like watches that have large numbers of tiny parts — some so small they can barely be seen with the naked eye.

“Much harder are tasks that require situational awareness, involving almost instantaneous adaptations to changing circumstances in the environment,” explains Theodoros Stouraitis, a visiting scientist in the Interactive Robotics Group at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL).

“Things become even more complicated when a robot has to interact with a human and work together to safely and successfully complete a task,” adds Shen Li, a PhD candidate in the MIT Department of Aeronautics and Astronautics.

Li and Stouraitis, working with Michael Gienger of the Honda Research Institute Europe, Professor Sethu Vijayakumar of the University of Edinburgh, and Professor Julie A. Shah of MIT, who directs the Interactive Robotics Group, have selected a problem that offers, quite literally, an armful of challenges: designing a robot that can help people get dressed. Last year, Li and Shah and two other MIT researchers completed a project involving robot-assisted dressing without sleeves. In a new work, described in a paper that appears in an April 2022 issue of IEEE Robotics and Automation Letters, Li, Stouraitis, Gienger, Vijayakumar, and Shah explain the headway they’ve made on a more demanding problem: robot-assisted dressing with sleeved clothes.

The big difference in the latter case is due to “visual occlusion,” Li says. “The robot cannot see the human arm during the entire dressing process.” In particular, it cannot always see the elbow or determine its precise position or bearing. That, in turn, affects the amount of force the robot has to apply to pull the article of clothing — such as a long-sleeve shirt — from the hand to the shoulder.

To deal with obstructed vision in trying to dress a human, an algorithm takes a robot’s measurement of the force applied to a jacket sleeve as input and then estimates the elbow’s position. Image: MIT CSAIL

To deal with the issue of obstructed vision, the team has developed a “state estimation algorithm” that allows them to make reasonably precise educated guesses as to where, at any given moment, the elbow is and how the arm is inclined (whether it is extended straight out or bent at the elbow, pointing upwards, downwards, or sideways), even when it’s completely obscured by clothing. At each instant, the algorithm takes the robot’s measurement of the force applied to the cloth as input and then estimates the elbow’s position, not exactly, but by placing it within a box or volume that encompasses all possible positions.

That knowledge, in turn, tells the robot how to move, Stouraitis says. “If the arm is straight, then the robot will follow a straight line; if the arm is bent, the robot will have to curve around the elbow.” Getting a reliable picture is important, he adds. “If the elbow estimation is wrong, the robot could decide on a motion that would create an excessive, and unsafe, force.”

The algorithm includes a dynamic model that predicts how the arm will move in the future, and each prediction is corrected by a measurement of the force that’s being exerted on the cloth at a particular time. While other researchers have made state estimation predictions of this sort, what distinguishes this new work is that the MIT investigators and their partners can set a clear upper limit on the uncertainty and guarantee that the elbow will be somewhere within a prescribed box.
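The predict-then-correct loop described above can be illustrated with a minimal sketch. This is not the researchers' algorithm, which relies on learned models; it is a generic interval-based estimator under two loudly stated assumptions: the elbow moves at most `max_step` metres per axis between measurements, and each force measurement has already been converted (by some hypothetical model not shown here) into a box that contains the true elbow position. The `Box` class, the function names, and all numbers are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned box given by its lower and upper corners (metres)."""
    lo: tuple
    hi: tuple

    def inflate(self, margin):
        # Grow the box by `margin` in every direction.
        return Box(tuple(l - margin for l in self.lo),
                   tuple(h + margin for h in self.hi))

    def intersect(self, other):
        # Keep only the region shared by both boxes.
        lo = tuple(max(a, b) for a, b in zip(self.lo, other.lo))
        hi = tuple(min(a, b) for a, b in zip(self.hi, other.hi))
        if any(l > h for l, h in zip(lo, hi)):
            raise ValueError("empty intersection: the bounds are inconsistent")
        return Box(lo, hi)

    def contains(self, point):
        return all(l <= p <= h for l, p, h in zip(self.lo, point, self.hi))


def predict(box, max_step):
    """Prediction: the elbow may have moved up to max_step along each axis."""
    return box.inflate(max_step)


def correct(predicted, measurement_box):
    """Correction: discard positions that the measurement rules out."""
    return predicted.intersect(measurement_box)


# Hypothetical starting estimate and measurement-derived boxes.
estimate = Box((0.20, 0.00, 0.90), (0.40, 0.20, 1.10))
measurement_boxes = [
    Box((0.25, 0.05, 0.95), (0.50, 0.30, 1.20)),
    Box((0.28, -0.10, 0.98), (0.60, 0.18, 1.30)),
]
for measurement_box in measurement_boxes:
    estimate = correct(predict(estimate, max_step=0.05), measurement_box)
```

As long as both assumptions hold at every step, the true elbow position can never leave `estimate`, which is the kind of hard bound on uncertainty the paragraph above refers to. If either assumption is violated, the intersection may come out empty, which the sketch reports as an error rather than silently returning a wrong box.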

The model for predicting arm movements and elbow position and the model for measuring the force applied by the robot both incorporate machine learning techniques. The data used to train the machine learning systems were obtained from people wearing “Xsens” suits with built-in sensors that accurately track and record body movements. After the robot was trained, it was able to infer the elbow pose when putting a jacket on a human subject, a man who moved his arm in various ways during the procedure, sometimes in response to the robot’s tugging on the jacket and sometimes engaging in random motions of his own accord.

This work was strictly focused on estimation — determining the location of the elbow and the arm pose as accurately as possible — but Shah’s team has already moved on to the next phase: developing a robot that can continually adjust its movements in response to shifts in the arm and elbow orientation.

In the future, they plan to address the issue of “personalization” — developing a robot that can account for the idiosyncratic ways in which different people move. In a similar vein, they envision robots versatile enough to work with a diverse range of cloth materials, each of which may respond somewhat differently to pulling.

Although the researchers in this group are definitely interested in robot-assisted dressing, they recognize the technology’s potential for far broader utility. “We didn’t specialize this algorithm in any way to make it work only for robot dressing,” Li notes. “Our algorithm solves the general state estimation problem and could therefore lend itself to many possible applications. The key to it all is having the ability to guess, or anticipate, the unobservable state.” Such an algorithm could, for instance, guide a robot to recognize the intentions of its human partner as it works collaboratively to move blocks around in an orderly manner or set a dinner table.

Here’s a conceivable scenario for the not-too-distant future: A robot could set the table for dinner and maybe even clear up the blocks your child left on the dining room floor, stacking them neatly in the corner of the room. It could then help you get your dinner jacket on to make yourself more presentable before the meal. It might even carry the platters to the table and serve appropriate portions to the diners. One thing the robot would not do would be to eat up all the food before you and others make it to the table. Fortunately, that’s one “app” — as in application rather than appetite — that is not on the drawing board.

This research was supported by the U.S. Office of Naval Research, the Alan Turing Institute, and the Honda Research Institute Europe.

Using an LVDT as a Robotic Micrometer or Automated Height Gauge

An empirical analysis of compute-optimal large language model training

Making Robotic Programming Easier for the Fabrication Industry

In Celebration of National Robotics Week, iRobot® Launches the Create® 3 Educational Robot

Black in Robotics ‘Meet The Members’ series: Vuyo Makhuvha

Before droves of people descend on a convention center for a trade show or conference, the hall must be carefully divided up to accommodate corporate show booths, walkways for attendees, spaces for administrators/security and much more. The process of defining the layout and marking it up for construction crews is often done with humans laboriously measuring and marking distances, but Lionel can do all of this for you. Once given a plan, it zooms along empty convention halls while precisely marking all of the dimensions for the schematics that you have in mind.

Lionel, the floor-marking robot, was made specifically for the organizers of trade shows and conferences.

Lionel isn’t the robot you’d typically picture when you imagine new applications of technology, but it fills a niche with strong demand.

Vuyo works on building August Robotics’ mobile robotics portfolio, which at the moment comprises two robots. Their latest robot, Diego (Disinfection on the Go!), is a mobile UV-C robot that was released in March 2021. Diego provides hospital-grade disinfection for hotel rooms and public spaces in a way that is safe and easy to use, and it allows establishments to increase safety and differentiate their hygiene programmes.

Identifying use cases like Lionel’s or Diego’s, and showing that there is a robotic solution for them, is part of the responsibility of business strategy managers like Vuyo Makhuvha. Keep reading to see her explanation of how a non-engineer can have a significant impact on robot design, how she managed the experience of leaving her home in South Africa for her new home in Hong Kong, and much more.

Vuyo’s trajectory

Vuyo is a bright mathematical mind from South Africa who had narrowed down her area of study to two subjects when preparing to enter the University of Cape Town (UCT): Engineering and Actuarial Science. In what felt like a gut-wrenching decision, she chose to study Actuarial Science. Unbeknownst to her, she would return to working with engineers in good time.

Vuyo excelled at UCT, eventually earning both a Bachelor’s degree and a Master’s degree (with a Master’s thesis)! All the while, her education was enriched by the prestigious Allan Gray Orbis Fellowship, which she had been awarded after graduating high school. The fellowship not only covered her tuition, but also taught her about entrepreneurial thinking and placed her among a community of like-minded individuals.

And the Fellowship’s support doesn’t end there! “After those four years [with the Allan Gray Orbis Fellowship] you can tap into additional support. For example, if you want to be an entrepreneur, you basically say, ‘Hey, I want to start a business. Can you help me?’ And they’ll have different programs [to support you].”

“It’s amazing. It’s one of the experiences that I’ve had that has really encouraged me.”

While getting her Master’s Degree, Vuyo had gotten a taste of the broader world outside of Actuarial Science and decided that she wanted to see more of it by working for a consulting company after she graduated: McKinsey & Company. There, her intention was to “gain exposure to [the] questions that businesses are answering for themselves” and McKinsey was able to give her that exposure. She was placed onto teams where they established “strategies that would grow their revenue by X [or something similarly] for big name companies that you’ve heard of all of your life” or “made certain that a merger went [the way it should have].” This diverse set of experiences was helpful for narrowing down her interests and for increasing her confidence.

“... What I really took away from [all of that] was that I can do anything. There are a lot of specialized fields … but I can be quite involved in them by answering questions like ‘How do we commercialize this?’ or ‘What features do we need?’”

Her bolstered confidence and passion for commercialization would then carry her across a continent and into her current role at August Robotics. After two years at McKinsey, one of which was spent as a consultant in Asia, Vuyo decided she wanted to experience working at an early-stage startup in emerging tech, and she happened to find a role on the alumni network job board.

At that startup, August Robotics, she ended up becoming a Business Strategy Manager, working with a team of highly-experienced engineers and scientists to develop new robotics-based solutions. To describe her role simply, Vuyo explains that “My job every day is to make sure that we’re designing a robot that fulfills our clients’ needs.” This can lead to tension at times, as Vuyo challenges experienced technical experts in their fields.

“I always say that my job as a commercial person is to dream really, really big. Your job as an engineering team is to tell me ‘Woah! Slow down. This is what we can do.’

And if we’re not having that kind of tension, then I’m not doing my job right. Because I need to be the person who can imagine this amazing product for our customers, especially in the first couple of weeks when we’re coming together with the concept.

You’re supposed to say ‘Woah! We can’t do this right now, let’s pull it back and do this’ or ‘Do you really need that feature right now? Isn’t that something we can do far later in the future?’

It’s your job to hold me back.

Otherwise, we’re not going to be able to make new things that our customers never thought of. The tension is there, but it’s a necessary tension. And as long as it’s done in a way that’s professional and respectful, I think it’s good.”

August Robotics has fostered a culture where this tension can be expressed in such a professional and respectful way. It’s one of the reasons that Vuyo loves it there and recommends more passionate Black roboticists join her at August, if possible.

She plans to stay there as long as the culture remains one in which she can freely explore and challenge her peers and herself as they continue to seek out the unique use cases that only a robot can solve in the world. The call of entrepreneurship still lingers in her ear, however, and she plans to one day use the lessons she has learned as an Allan Gray Orbis Fellow to make an impactful business.

Vuyo’s challenges

While she is still relatively new in her current role at August Robotics, Vuyo has overcome several challenges that she thinks others could learn from. Her transition from McKinsey & Company to August Robotics required a move from her native South Africa to Hong Kong. There she went from being in a majority South African context to being in a majority Asian context.

“I think being a Black person in any place that is not predominantly black is always going to be different. Especially in a place like Asia, where there’s so few of us. Yeah, I get stared at [but] it’s not a malicious thing most of the time. I think that most of the time it’s just like: ‘Hm. Why are you here?’”

To overcome the challenge of feeling isolated, she learned to embrace the feeling of benign curiosity coming from her new neighbors. The feeling inspired Vuyo to make an unwritten rule: “Every time I see a black person, I always say hi to them…. I can go through a whole day without seeing another black person. It’s weird. So, [I] just have to acknowledge that we’re both in this whenever I see them.”

Vuyo’s words of wisdom

Vuyo repeatedly expressed how valuable it is to work in an environment as supportive as the one that she has found in August Robotics and recommends that you look for:

“[a place] where you trust the people that you work with, you feel like people respect and trust you, and … you never feel weird about not knowing something. Because then you operate from a place of stability or comfort. Of course, I’m going to work really really hard; I don’t want to do anything wrong and I’m going to be really really careful.

I do it not because I’m in fear about what the repercussions will be. I do it because I’m comfortable enough that I can contribute to what our team is doing, and that I feel that responsibility to contribute and to make our team successful. And so I don’t spend time worrying about things that I don’t need to be worrying about. I spend time worrying about the [robotics] problems that we have.”

Finding and contributing to an environment like that is what has allowed Vuyo to thrive and is what she plans to foster in every organization she works at (or starts!) in the future.

Finally, Vuyo’s advice to young people interested in robotics (from the commercial side or not) is:

“To continue to be driven by what [you] think is interesting and exciting and stimulating, and to not for a second think that [you’re] not capable or not worthy.

Because [you] have to try stuff and not get in your own way. Just believe that you’re good. That you have creative and exciting ideas and then go and apply that creativity to things that you find interesting and exciting.”

More from Vuyo

Feel free to follow August Robotics to find out more about the products Vuyo is helping to launch!

Feel free to connect to Vuyo via LinkedIn.

Acknowledgements

Drafts of this article were corrected and improved by Vuyo Makhuvha, Sophia Williams, and Nailah Seale. All current errors are the fault of Kwesi Rutledge. Please reach out to him if you spot any!

Autonomous Need for Speed

Apex.AI is driving advances in ROS 2 to make it viable for use in autonomous vehicles. The changes they are implementing are bridging the gap between the automotive world and robotics.

Joe Speed, VP of Product at Apex.AI, dives into the current multi-year development process of bringing a car to market, and how Apex.AI will transform this process into the shorter development time we see with modern technology. This technology was showcased at the Indy Autonomous Challenge, where million-dollar autonomous cars raced each other on a track.

Apex.OS is a certified software framework and SDK for autonomous systems that enables software developers to write safe and certified autonomous driving applications compatible with ROS 2.

Joe Speed

Joe Speed is VP of Product & Chief Evangelist at Apex.AI. Prior to joining Apex.AI, Joe was a member of the Open Robotics ROS 2 TSC, the Autoware Foundation TSC, and the Eclipse OpenADx SC, and served as ADLINK Technology’s Field CTO driving robotics and autonomy.

Joe has spent his career developing and advocating open source at organizations including the Linux Foundation and IBM, where he launched IBM IoT and co-founded the IBM AutoLAB automotive incubator. Joe helped make MQTT, an IoT protocol, open source and convinced automakers to adopt it.

Joe is working to do the same for Apex.AI’s safe ROS 2 distribution and ROS middleware. Joe has developed a dozen advanced technology vehicles but is most proud of helping develop an accessible autonomous bus for older adults and people with disabilities.

Solving the challenges of robotic pizza-making

Trustworthy robots

Robots are becoming a more and more important part of our home and work lives and as we come to rely on them, trust is of paramount importance. Successful teams are founded on trust, and the same is true for human-robot teams. But what does it mean to trust a robot?

I’ll be chatting to three roboticists working on various aspects of trustworthiness in robotics: Anouk van Maris (University of the West of England), Faye McCabe (University of Birmingham), and Daniel Omeiza (University of Oxford).

Anouk van Maris is a research fellow in responsible robotics. She received her doctorate at the Bristol Robotics Laboratory, where she investigated ethical concerns of social robots. She is currently working on the technical development and implementation of the robot ethical black box, which will be used to generate explanations of the robot’s decision-making process. She is a member of the committee on Ethics for Robots and Autonomous Systems at the British Standards Institution, where she uses her insights and knowledge to support the progress of a standard for ethical design and implementation of robots.

Faye McCabe is a member of the Human Interface Technologies team at the University of Birmingham. She received a Bachelor of Engineering degree in Computer Systems Engineering from the University of Birmingham in 2017. Her PhD focuses on how to design interfaces that support rich, dynamic and appropriate trust-building within Human-Autonomy Teams of the future. Faye’s main area of focus is autonomous maritime platforms; her research examines sonar analysis and how it could be aided by autonomous decision aids.

Daniel Omeiza is a PhD student in the Department of Computer Science at the University of Oxford. He is a member of the responsible innovation group and the cognitive robotics group. As part of the RoboTIPS and SAX projects, he is investigating and designing new techniques for effective explainability in autonomous driving. Before joining Oxford, he obtained a master’s degree from Carnegie Mellon University and conducted research at IBM Research as an intern.

Keywords: assistive, autonomy, defence, hri, trust