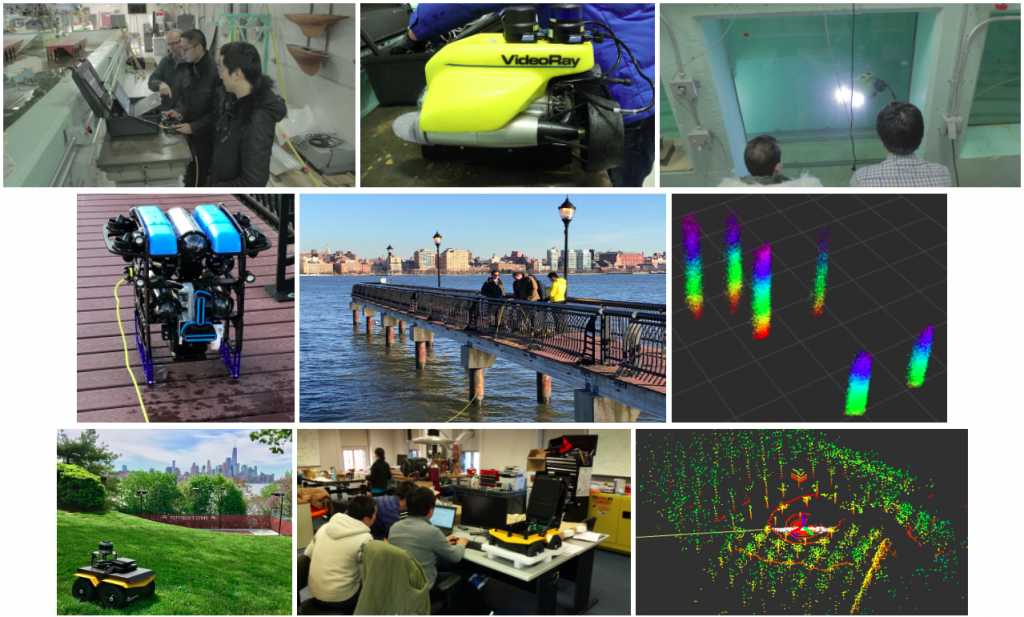

ep.359: Perception and Decision-Making for Underwater Robots, with Brendan Englot

Prof Brendan Englot, from Stevens Institute of Technology, discusses the challenges in perception and decision-making for underwater robots – especially in the field. He discusses ongoing research using the BlueROV platform and autonomous driving simulators.

Brendan Englot

Brendan Englot received his S.B., S.M., and Ph.D. degrees in mechanical engineering from the Massachusetts Institute of Technology in 2007, 2009, and 2012, respectively. He is currently an Associate Professor with the Department of Mechanical Engineering at Stevens Institute of Technology in Hoboken, New Jersey. At Stevens, he also serves as interim director of the Stevens Institute for Artificial Intelligence. He is interested in perception, planning, optimization, and control that enable mobile robots to achieve robust autonomy in complex physical environments, and his recent work has considered sensing tasks motivated by underwater surveillance and inspection applications, and path planning with multiple objectives, unreliable sensors, and imprecise maps.

Using reinforcement learning for control of direct ink writing

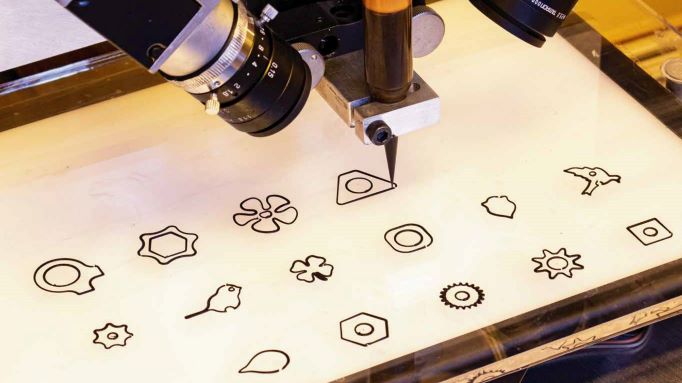

Closed-loop printing enhanced by machine learning. © Michal Piovarči/ISTA

Using fluids for 3D printing may seem paradoxical at first glance, but not all fluids are watery. Many useful materials are more viscous, from inks to hydrogels, and thus qualify for printing. Yet their potential has been relatively unexplored due to the limited control over their behaviour. Now, researchers of the Bickel group at the Institute of Science and Technology Austria (ISTA) are employing machine learning in virtual environments to achieve better results in real-world experiments.

3D printing is on the rise. Many people are familiar with the characteristic plastic structures. However, attention has also turned to different printing materials, such as inks, viscous pastes and hydrogels, which could potentially be used to 3D-print biomaterials and even food. But printing such fluids is challenging. Exact control over them requires painstaking trial-and-error experiments, because they typically tend to deform and spread after application.

A team of researchers, including Michal Piovarči and Bernd Bickel, are tackling these challenges. In their laboratories at the Institute of Science and Technology Austria (ISTA), they are using reinforcement learning – a type of machine learning – to improve the printing technique of viscous materials. The results were presented at the SIGGRAPH conference, the annual meeting of simulation and visual computing researchers.

A critical component of manufacturing is identifying the parameters that consistently produce high-quality structures. An assumption is implicit here: the relationship between parameters and outcome is predictable. However, real processes always exhibit some variability due to the nature of the materials used. In printing with viscous materials, this variability is more pronounced, because the materials take significant time to settle after deposition. The question is: how can we understand, and deal with, the complex dynamics?

“Instead of printing thousands of samples, which is not only expensive, but rather tedious, we put our expertise in computer simulations to action,” responds Piovarči, lead author of the study. While computer graphics often trade physical accuracy for faster simulation, here, the team came up with a simulated environment that mirrors the physical processes with accuracy. “We modelled the ink’s current and short-horizon future states based on fluid physics. The efficiency of our model allowed us to simulate hundreds of prints simultaneously, more often than we could ever have done in the experiment. We used the dataset for reinforcement learning and gained the knowledge of how to control the ink and other materials.”

Learning in virtual environments how to control the ink. © Michal Piovarči/ISTA

The machine learning algorithm established various policies, including one to control the movement of the ink-dispensing nozzle at a corner such that no unwanted blobs occur. The printing apparatus would not follow the baseline of the desired shape anymore, but rather take a slightly altered path which eventually yields better results. To verify that these rules can handle various materials, they trained three models using liquids of different viscosity. They tested their method with experiments using inks of various thicknesses.

The team opted for closed-loop forms instead of simple lines or writing, because “closed loops represent the standard case for 3D printing and that is our target application,” explains Piovarči. Although the single-layer printing in this project is sufficient for the use cases in printed electronics, he wants to add another dimension. “Naturally, three dimensional objects are our goal, such that one day we can print optical designs, food or functional mechanisms. I find it fascinating that we as computer graphics community can be the major driving force in machine learning for 3D printing.”

Read the research in full

Closed-Loop Control of Direct Ink Writing via Reinforcement Learning

Michal Piovarči, Michael Foshey, Jie Xu, Timothy Erps, Vahid Babaei, Piotr Didyk, Szymon Rusinkiewicz, Wojciech Matusik, Bernd Bickel

Why Should Businesses Outsource Payroll?

Payroll processing is something that every business owner or manager has to deal with. No matter the kind of sector you work in or the size of the team you manage, payroll cannot be avoided, and this is an important aspect of your business finances. This process relates to salary information and payments, which can...

Automation Is a Game Changer for Supply Chains

Aquabots: Ultrasoft liquid robots for biomedical and environmental applications

HEIDENHAIN at IMTS 2022

Debrief: The Reddit Robotics Showcase 2022

Once again the global robotics community rallied to provide a unique opportunity for amateurs and hobbyists to share their robotics projects alongside academics and industry professionals. Below are the recorded sessions of this year.

Industrial / Automation

Keynote “Matt Whelan (Ocado Technology) – The Ocado 600 Series Robot”

- Nye Mech Works (HAPPA) – Real Power Armor

- 3D Printed 6-Axis Robot Arm

- Vasily Morzhakov (Rembrain) – Cloud Platform for Smart Robotics

Mobile Robots

Keynote “Prof. Marc Hanheide (Lincoln Centre for Autonomous Systems) – Mobile Robots in the Wild”

- Julius Sustarevas – Armstone: Autonomous Mobile 3D Printer

- Camera Controller Hexapod & Screw/Auger All-Terrain Robot

- Keegan Neave – NE-Five

- Dimitar – Gravis and Ricardo

- Kamal Carter – Aim-Hack Robot

- Calvin – BeBOT Real Time

Bio-Inspired Robots

Keynote “Dr. Matteo Russo (Rolls-Royce UTC in Manufacturing and On-Wing Technology) – Entering the Maze: Snake-Like Robots from Aerospace to Industry”

- Colin MacKenzie – Humanoid, Hexapod, and Legged Robot Control

- Halid Yildirim – Design of a Modular Quadruped Robot Dog

- Jakub Bartoszek – Honey Badger Quadruped

- Lutz Freitag – 01. RFC Berlin

- Hamburg Bit-Bots

- William Kerber – Human Mode Robotics – Lynx Quadruped and AI Training

- Sanjeev Hegde – Juggernaut

Human Robot Interaction

Keynote “Dr. Ruth Aylett (The National Robotarium) – Social Agents and Human Robot Interaction”

- Nathan Boyd – Developing humanoids for general purpose applications

- Hand Controlled Artificial Hand

- Ethan Fowler & Rich Walker – The Shadow Robot Company

- Maël Abril – 6 Axis Dynamixel Robot Arm

- Laura Smith (Tentacular) – Interactive Robotic Art

Thanks sincerely on behalf of the RRS22 committee to every applicant, participant, and audience member who took the time to share their passion for robotics. We wish you all the best in your robotics endeavors.

As a volunteer-run endeavour in its second year, there is still plenty of room for improvement. On reflection, this year’s event had greater enthusiasm and participation from the community, despite a smaller audience during the livestream. The RRS committee is aware of this, and will be making strategy changes to ensure that RRS2023 justifies the effort put in by everyone. I will note that the positive feedback this year has been wonderful: a few people went out of their way to express how much they enjoyed this year’s event, the variety of speakers and the passion of the community. We’re confident that for next year’s event we will be able to iron out the kinks and run a brilliant event for an audience worthy of the talent on display.