Japan rolls out ‘humble and lovable’ delivery robots

AI-Powered FRIDA robot collaborates with humans to create art

Investing in AGVs or AMRs? 6 Ways Engineered Mezzanine Flooring Maximizes Implementation Success

Engineers devise a modular system to produce efficient, scalable aquabots

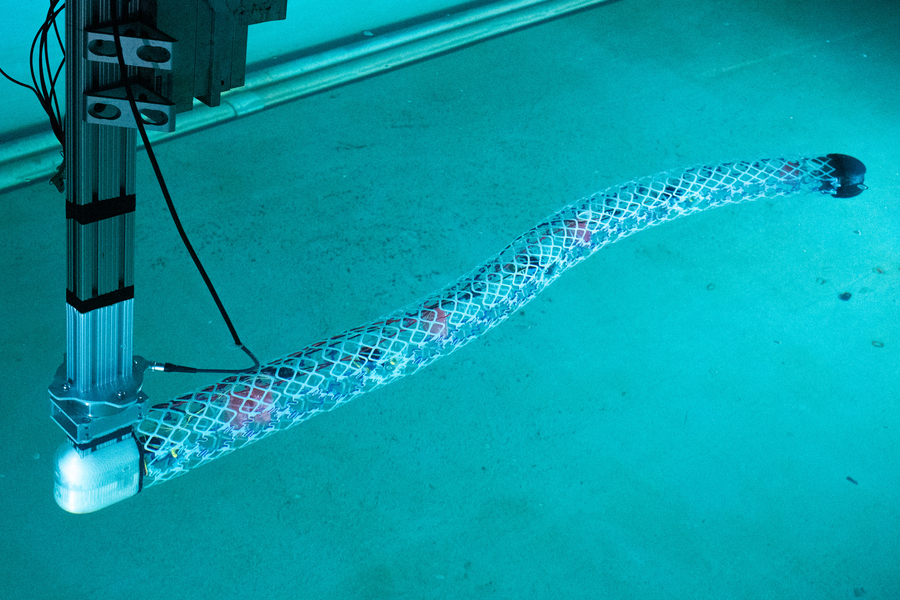

Researchers have come up with an innovative approach to building deformable underwater robots using simple repeating substructures. The team has demonstrated the new system in two different example configurations, one like an eel, pictured here in the MIT tow tank. Credit: Courtesy of the researchers

By David L. Chandler | MIT News Office

Underwater structures that can change their shapes dynamically, the way fish do, push through water much more efficiently than conventional rigid hulls. But constructing deformable devices that can change the curve of their body shapes while maintaining a smooth profile is a long and difficult process. MIT’s RoboTuna, for example, was composed of about 3,000 different parts and took about two years to design and build.

Now, researchers at MIT and their colleagues — including one from the original RoboTuna team — have come up with an innovative approach to building deformable underwater robots, using simple repeating substructures instead of unique components. The team has demonstrated the new system in two different example configurations, one like an eel and the other a wing-like hydrofoil. The principle itself, however, allows for virtually unlimited variations in form and scale, the researchers say.

The work is being reported in the journal Soft Robotics, in a paper by MIT research assistant Alfonso Parra Rubio, professors Michael Triantafyllou and Neil Gershenfeld, and six others.

Existing approaches to soft robotics for marine applications are generally made on small scales, while many useful real-world applications require devices on scales of meters. The new modular system the researchers propose could easily be extended to such sizes and beyond, without requiring the kind of retooling and redesign that would be needed to scale up current systems.

The deformable robots are made with lattice-like pieces, called voxels, that are low density and have high stiffness. Credit: Courtesy of the researchers

“Scalability is a strong point for us,” says Parra Rubio. Given the low density and high stiffness of the lattice-like pieces, called voxels, that make up their system, he says, “we have more room to keep scaling up,” whereas most currently used technologies “rely on high-density materials facing drastic problems” in moving to larger sizes.

The individual voxels in the team’s experimental, proof-of-concept devices are mostly hollow structures made up of cast plastic pieces with narrow struts in complex shapes. The box-like shapes are load-bearing in one direction but soft in others, an unusual combination achieved by blending stiff and flexible components in different proportions.

“Treating soft versus hard robotics is a false dichotomy,” Parra Rubio says. “This is something in between, a new way to construct things.” Gershenfeld, head of MIT’s Center for Bits and Atoms, adds that “this is a third way that marries the best elements of both.”

“Smooth flexibility of the body surface allows us to implement flow control that can reduce drag and improve propulsive efficiency, resulting in substantial fuel saving,” says Triantafyllou, who is the Henry L. and Grace Doherty Professor in Ocean Science and Engineering, and was part of the RoboTuna team.

Credit: Courtesy of the researchers.

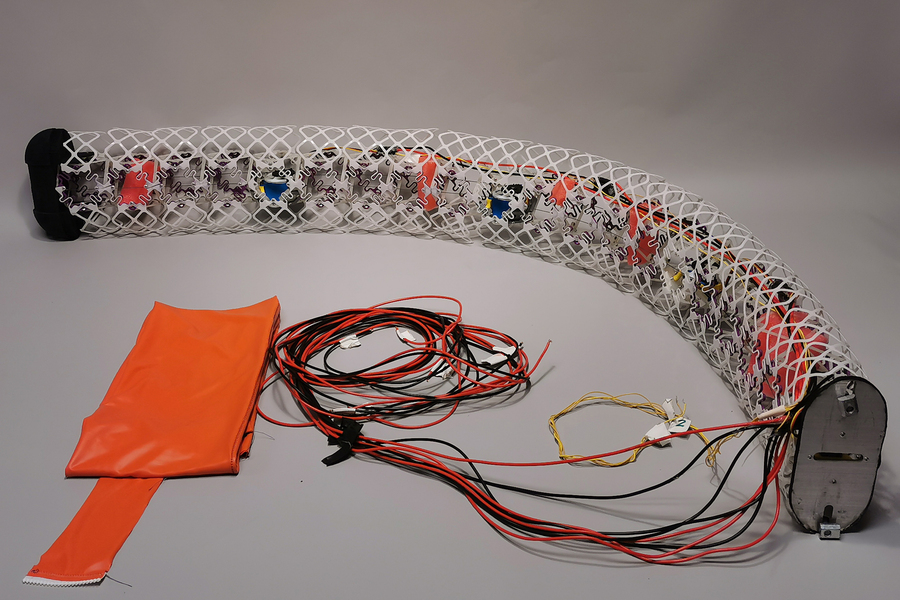

In one of the devices produced by the team, the voxels are attached end-to-end in a long row to form a meter-long, snake-like structure. The body is made up of four segments, each consisting of five voxels, with an actuator in the center that can pull a wire attached to each of the two voxels on either side, contracting them and causing the structure to bend. The whole structure of 20 units is then covered with a rib-like supporting structure, and then a tight-fitting waterproof neoprene skin. The researchers deployed the structure in an MIT tow tank to show its efficiency in the water, and demonstrated that it could generate enough thrust to propel itself forward using undulating motions.
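The article does not describe the team's control scheme. As a rough illustration only, undulating motion in a four-segment body is often approximated by sending each segment a sinusoidal bend command with a fixed phase lag relative to its neighbor. The sketch below assumes that approach; the function name, frequency, amplitude and phase lag are hypothetical and are not taken from the researchers' work.

```python
import math

NUM_SEGMENTS = 4          # from the article: four segments
VOXELS_PER_SEGMENT = 5    # from the article: five voxels per segment
TOTAL_VOXELS = NUM_SEGMENTS * VOXELS_PER_SEGMENT  # 20 units, as stated


def segment_commands(t, frequency_hz=1.0, amplitude=1.0, phase_lag=math.pi / 2):
    """Return one signed bend command per segment at time t (seconds).

    A positive value stands for pulling the wire on one side of the
    segment, a negative value for the other side. All default values
    are illustrative assumptions.
    """
    return [
        amplitude * math.sin(2 * math.pi * frequency_hz * t - i * phase_lag)
        for i in range(NUM_SEGMENTS)
    ]


if __name__ == "__main__":
    # Print the bend commands over one quarter of a cycle.
    for step in range(4):
        t = step / 16
        print(t, [round(c, 2) for c in segment_commands(t)])
```

Because each segment lags the one ahead of it, the bend travels from head to tail, which is the basic idea behind a traveling-wave gait.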

“There have been many snake-like robots before,” Gershenfeld says. “But they’re generally made of bespoke components, as opposed to these simple building blocks that are scalable.”

For example, Parra Rubio says, a snake-like robot built by NASA was made up of thousands of unique pieces, whereas for this group’s snake, “we show that there are some 60 pieces.” And compared to the two years spent designing and building the MIT RoboTuna, this device was assembled in about two days, he says.

The individual voxels are mostly hollow structures made up of cast plastic pieces with narrow struts in complex shapes. Credit: Courtesy of the researchers

The other device they demonstrated is a wing-like shape, or hydrofoil, made up of an array of the same voxels but able to change its profile shape and therefore control the lift-to-drag ratio and other properties of the wing. Such wing-like shapes could be used for a variety of purposes, ranging from generating power from waves to helping to improve the efficiency of ship hulls — a pressing demand, as shipping is a significant source of carbon emissions.

The wing shape, unlike the snake, is covered in an array of scale-like overlapping tiles, designed to press down on each other to maintain a waterproof seal even as the wing changes its curvature. One possible application might be in some kind of addition to a ship’s hull profile that could reduce the formation of drag-inducing eddies and thus improve its overall efficiency, a possibility that the team is exploring with collaborators in the shipping industry.

The team also created a wing-like hydrofoil. Credit: Courtesy of the researchers

Ultimately, the concept might be applied to a whale-like submersible craft, using its morphable body shape to create propulsion. Such a craft could evade bad weather by staying below the surface, but without the noise and turbulence of conventional propulsion. The concept could also be applied to parts of other vessels, such as racing yachts, where having a keel or a rudder that could curve gently during a turn instead of remaining straight could provide an extra edge. “Instead of being rigid or just having a flap, if you can actually curve the way fish do, you can morph your way around the turn much more efficiently,” Gershenfeld says.

The research team included Dixia Fan of Westlake University in China; Benjamin Jenett SM ’15, PhD ’20 of Discrete Lattice Industries; Jose del Aguila Ferrandis, Amira Abdel-Rahman and David Preiss of MIT; and Filippos Tourlomousis of the Demokritos Research Center of Greece. The work was supported by the U.S. Army Research Lab, CBA Consortia funding, and the MIT Sea Grant Program.

Towards an interactive cyber-physical human platform to generate contact-rich whole-body motions

What are co-bots?

“Co-bot” is short for “collaborative robot.” These robots are designed to work alongside humans, taking on repetitive or heavy tasks so that human workers can focus on tasks that require higher skills.

For this to be possible, the robots need safety mechanisms that prevent harm to humans. Sensors placed on the robot’s body, including force, torque and ultrasonic sensors, help prevent impacts with human workers. It is also necessary to cover the robot’s body with soft material.
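As a minimal sketch of how such sensor readings might feed a safety decision, the example below stops the robot when contact force or torque exceeds a limit and slows it when an ultrasonic sensor reports something close by. The class, function and threshold values are hypothetical illustrations, not values from any standard or product; real limits come from a formal risk assessment.

```python
from dataclasses import dataclass


@dataclass
class SensorReading:
    force_n: float      # measured contact force, in newtons
    torque_nm: float    # measured joint torque, in newton-metres
    distance_m: float   # ultrasonic range to the nearest object, in metres


# Illustrative limits only (assumptions for this sketch).
MAX_FORCE_N = 50.0
MAX_TORQUE_NM = 10.0
MIN_DISTANCE_M = 0.3


def safety_action(reading: SensorReading) -> str:
    """Return 'stop', 'slow' or 'continue' for one sensor reading."""
    # Contact has already happened and is too strong: halt immediately.
    if reading.force_n > MAX_FORCE_N or reading.torque_nm > MAX_TORQUE_NM:
        return "stop"
    # Something is close but not yet touching: reduce speed.
    if reading.distance_m < MIN_DISTANCE_M:
        return "slow"
    return "continue"


if __name__ == "__main__":
    print(safety_action(SensorReading(force_n=5.0, torque_nm=1.0, distance_m=0.2)))
```

Checking the contact limits before the proximity limit means an actual impact always takes priority over a mere approach.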

Working alongside humans means the robot’s environment is less predictable than the unchanging environments of conventional industrial robots. These robots therefore need considerably more sophisticated visual recognition and AI capabilities. In addition to classical programming, they can be trained for new tasks by physically guiding them through the motions, which is intuitive and efficient.

The market for collaborative robots has grown steadily since they were introduced one to two decades ago.

A model that could improve robots’ ability to grasp objects

TONOMUS announces new ‘next billion’ competition targeting innovative ideas to enable tomorrow’s cognitive communities

Microelectronics give researchers a remote control for biological robots

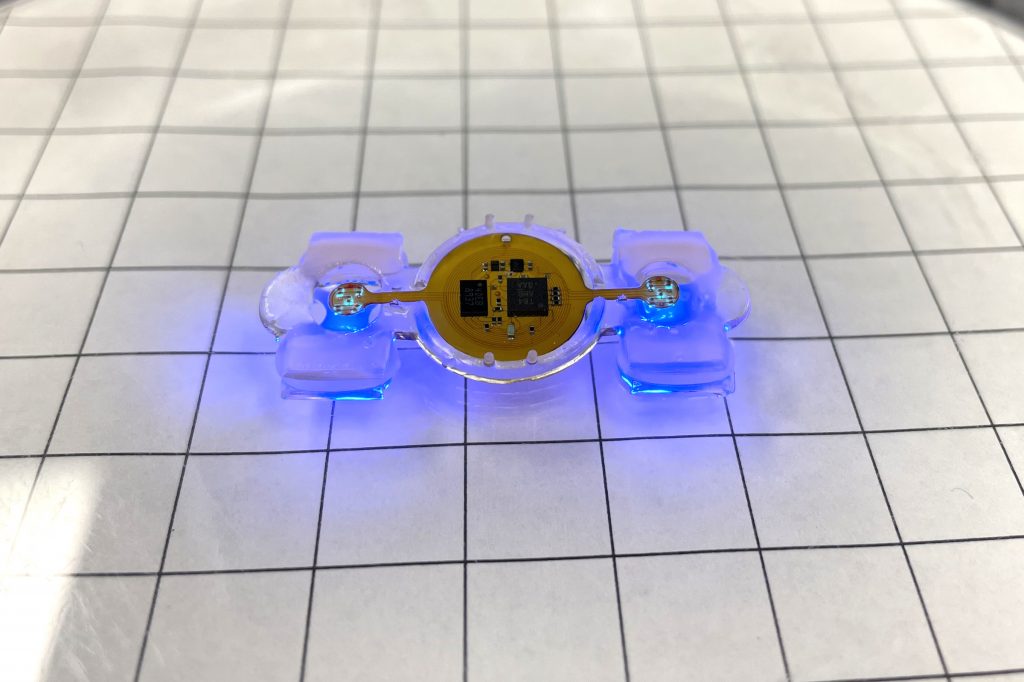

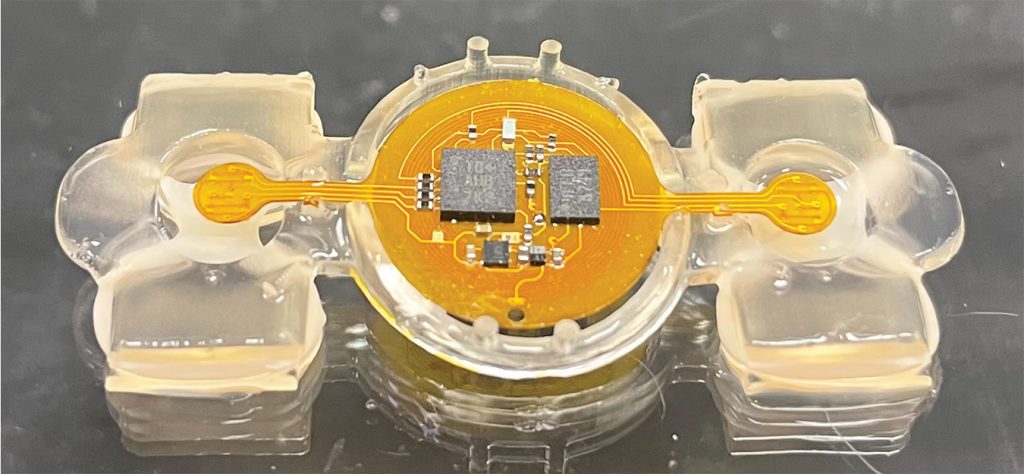

A photograph of an eBiobot prototype, lit with blue microLEDs. Remotely controlled miniature biological robots have many potential applications in medicine, sensing and environmental monitoring. Image courtesy of Yongdeok Kim

By Liz Ahlberg Touchstone

First, they walked. Then, they saw the light. Now, miniature biological robots have gained a new trick: remote control.

The hybrid “eBiobots” are the first to combine soft materials, living muscle and microelectronics, said researchers at the University of Illinois Urbana-Champaign, Northwestern University and collaborating institutions. They described their centimeter-scale biological machines in the journal Science Robotics.

“Integrating microelectronics allows the merger of the biological world and the electronics world, both with many advantages of their own, to now produce these electronic biobots and machines that could be useful for many medical, sensing and environmental applications in the future,” said study co-leader Rashid Bashir, an Illinois professor of bioengineering and dean of the Grainger College of Engineering.

Rashid Bashir. Photo by L. Brian Stauffer

Bashir’s group has pioneered the development of biobots, small biological robots powered by mouse muscle tissue grown on a soft 3D-printed polymer skeleton. They demonstrated walking biobots in 2012 and light-activated biobots in 2016. The light activation gave the researchers some control, but practical applications were limited by the question of how to deliver the light pulses to the biobots outside of a lab setting.

The answer to that question came from Northwestern University professor John A. Rogers, a pioneer in flexible bioelectronics, whose team helped integrate tiny wireless microelectronics and battery-free micro-LEDs. This allowed the researchers to remotely control the eBiobots.

“This unusual combination of technology and biology opens up vast opportunities in creating self-healing, learning, evolving, communicating and self-organizing engineered systems. We feel that it’s a very fertile ground for future research with specific potential applications in biomedicine and environmental monitoring,” said Rogers, a professor of materials science and engineering, biomedical engineering and neurological surgery at Northwestern University and director of the Querrey Simpson Institute for Bioelectronics.

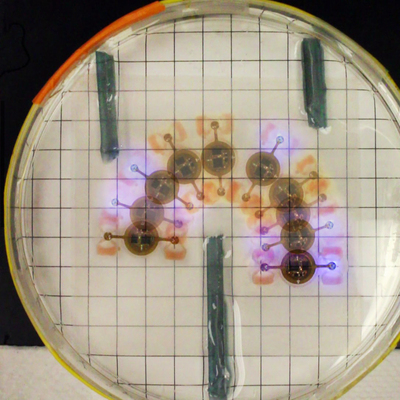

Remote control steering allows the eBiobots to maneuver around obstacles, as shown in this composite image of a bipedal robot traversing a maze. Image courtesy of Yongdeok Kim

To give the biobots the freedom of movement required for practical applications, the researchers set out to eliminate bulky batteries and tethering wires. The eBiobots use a receiver coil to harvest power and provide a regulated output voltage to power the micro-LEDs, said co-first author Zhengwei Li, an assistant professor of biomedical engineering at the University of Houston.

The researchers can send a wireless signal to the eBiobots that prompts the LEDs to pulse. The LEDs stimulate the light-sensitive engineered muscle to contract, moving the polymer legs so that the machines “walk.” The micro-LEDs are so targeted that they can activate specific portions of muscle, making the eBiobot turn in a desired direction.
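The steering idea can be pictured as a lookup from a desired heading to the set of LEDs to pulse. The sketch below is purely illustrative: it assumes a hypothetical two-LED layout, one over the muscle driving each leg, and assumes that pulsing only one side turns the machine toward the other. Neither the layout nor the command names come from the published work.

```python
# Hypothetical layout: one micro-LED over the muscle that drives each leg.
# Assumption: pulsing only the right-hand LED turns the machine left,
# and vice versa.
LED_PATTERNS = {
    "forward": ("left", "right"),
    "turn_left": ("right",),
    "turn_right": ("left",),
    "stop": (),
}


def leds_to_pulse(command):
    """Return the names of the LEDs to pulse for a steering command."""
    try:
        return LED_PATTERNS[command]
    except KeyError:
        raise ValueError(f"unknown command: {command!r}") from None


if __name__ == "__main__":
    # A short scripted path, such as one that might skirt an obstacle.
    for command in ["forward", "turn_left", "forward", "stop"]:
        print(command, "->", leds_to_pulse(command))
```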

The researchers used computational modeling to optimize the eBiobot design and component integration for robustness, speed and maneuverability. Illinois professor of mechanical sciences and engineering Mattia Gazzola led the simulation and design of the eBiobots. The iterative design and additive 3D printing of the scaffolds allowed for rapid cycles of experiments and performance improvement, said Gazzola and co-first author Xiaotian Zhang, a postdoctoral researcher in Gazzola’s lab.

The eBiobots are the first wireless bio-hybrid machines, combining biological tissue, microelectronics and 3D-printed soft polymers. Image courtesy of Yongdeok Kim

The design allows for possible future integration of additional microelectronics, such as chemical and biological sensors, or 3D-printed scaffold parts for functions like pushing or transporting things that the biobots encounter, said co-first author Yongdeok Kim, who completed the work as a graduate student at Illinois.

The integration of electronic sensors or biological neurons would allow the eBiobots to sense and respond to toxins in the environment, biomarkers for disease and more possibilities, the researchers said.

“In developing a first-ever hybrid bioelectronic robot, we are opening the door for a new paradigm of applications for health care innovation, such as in-situ biopsies and analysis, minimum invasive surgery or even cancer detection within the human body,” Li said.

The National Science Foundation and the National Institutes of Health supported this work.

Piloting drones reliably with mobile communication technology

A lizard-inspired robot to explore the surface of Mars

Robot Talk Episode 35 – Interview with Emily S. Cross

Claire chatted to Professor Emily S. Cross from the University of Glasgow and Western Sydney University all about neuroscience, social learning, and human-robot interaction.

Emily S. Cross is a Professor of Social Robotics at the University of Glasgow, and a Professor of Human Neuroscience at the MARCS Institute at Western Sydney University. Using interactive learning tasks, brain scanning, and dance, acrobatics and robots, she and her Social Brain in Action Laboratory team explore how we learn by watching others throughout the lifespan, how action experts’ brains enable them to perform physical skills so exquisitely, and the social influences that shape human-robot interaction.