Miniscule device could help preserve the battery life of tiny sensors

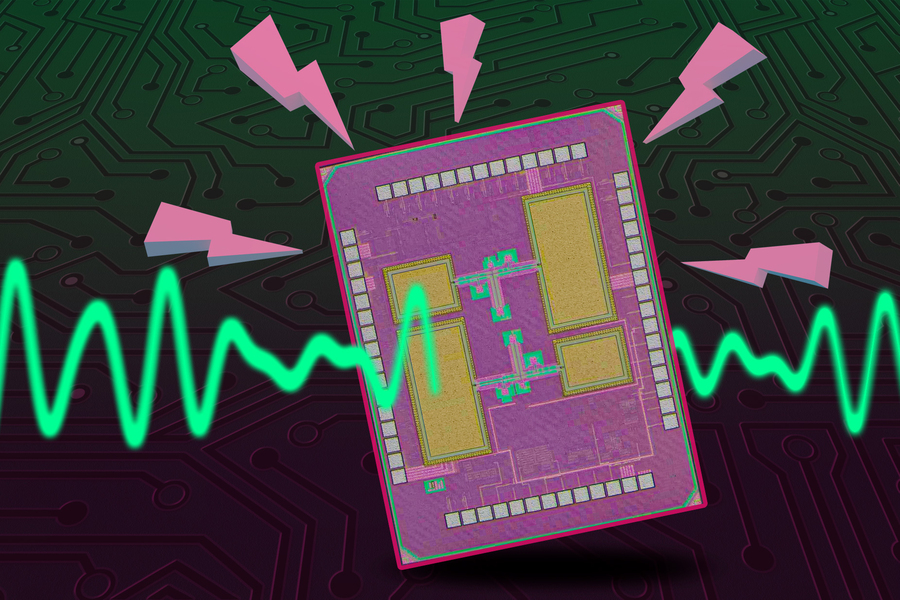

Researchers from MIT and elsewhere have built a wake-up receiver that communicates using terahertz waves, which enabled them to produce a chip more than 10 times smaller than similar devices. Their receiver, which also includes authentication to protect it from a certain type of attack, could help preserve the battery life of tiny sensors or robots. Image: Jose-Luis Olivares/MIT with figure courtesy of the researchers

By Adam Zewe | MIT News Office

Scientists are striving to develop ever-smaller internet-of-things devices, like sensors tinier than a fingertip that could make nearly any object trackable. These diminutive sensors have minuscule batteries that are often nearly impossible to replace, so engineers incorporate wake-up receivers that keep devices in low-power “sleep” mode when not in use, preserving battery life.

Researchers at MIT have developed a new wake-up receiver that is less than one-tenth the size of previous devices and consumes only a few microwatts of power. Their receiver also incorporates a low-power, built-in authentication system, which protects the device from a certain type of attack that could quickly drain its battery.

Many common types of wake-up receivers are built on the centimeter scale because their antennas must be proportional to the wavelength of the radio waves they use to communicate. Instead, the MIT team built a receiver that uses terahertz waves, whose wavelengths are far shorter than those radio waves. Their chip measures only about 1.5 square millimeters.

They used their wake-up receiver to demonstrate effective, wireless communication with a signal source that was several meters away, showcasing a range that would enable their chip to be used in miniaturized sensors.

For instance, the wake-up receiver could be incorporated into microrobots that monitor environmental changes in areas that are either too small or hazardous for other robots to reach. Also, since the device uses terahertz waves, it could be utilized in emerging applications, such as field-deployable radio networks that work as swarms to collect localized data.

“By using terahertz frequencies, we can make an antenna that is only a few hundred micrometers on each side, which is a very small size. This means we can integrate these antennas to the chip, creating a fully integrated solution. Ultimately, this enabled us to build a very small wake-up receiver that could be attached to tiny sensors or radios,” says Eunseok Lee, an electrical engineering and computer science (EECS) graduate student and lead author of a paper on the wake-up receiver.
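The size argument rests on a standard relation: a resonant antenna’s dimensions scale with the wavelength λ = c/f, so raising the carrier frequency shrinks the antenna. The minimal sketch below computes the free-space wavelength and the length of an idealized half-wave dipole. The example frequencies are assumptions chosen for illustration, not the chip’s actual carrier, and real on-chip antennas deviate from free-space values.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def wavelength_m(freq_hz: float) -> float:
    """Free-space wavelength in meters for a carrier frequency in hertz."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_LIGHT_M_PER_S / freq_hz


def half_wave_length_m(freq_hz: float) -> float:
    """Length of an idealized half-wave dipole, a common reference antenna size."""
    return wavelength_m(freq_hz) / 2.0


# Example frequencies are assumptions for illustration, not the chip's carrier.
for label, freq in [("0.3 THz", 0.3e12), ("1 THz", 1.0e12)]:
    size_um = half_wave_length_m(freq) * 1e6
    print(f"{label}: half-wave antenna of about {size_um:.0f} micrometers")
```

At the assumed 0.3 THz the idealized half-wave length is roughly 500 micrometers, and at 1 THz roughly 150 micrometers, consistent with the “few hundred micrometers” Lee describes.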

Lee wrote the paper with his co-advisors and senior authors Anantha Chandrakasan, dean of the MIT School of Engineering and the Vannevar Bush Professor of Electrical Engineering and Computer Science, who leads the Energy-Efficient Circuits and Systems Group, and Ruonan Han, an associate professor in EECS, who leads the Terahertz Integrated Electronics Group in the Research Laboratory of Electronics; as well as others at MIT, the Indian Institute of Science, and Boston University. The research is being presented at the IEEE Custom Integrated Circuits Conference.

Scaling down the receiver

Terahertz waves, found on the electromagnetic spectrum between microwaves and infrared light, have very high frequencies and very short wavelengths. Sometimes called “pencil beams,” terahertz waves travel in a more direct path than other signals, which makes them more secure, Lee explains.

However, because these waves have such high frequencies, terahertz receivers often multiply the incoming terahertz signal by another signal to shift it to a lower frequency, a process known as frequency mixing. Mixing at terahertz frequencies consumes a great deal of power.

Instead, Lee and his collaborators developed a zero-power-consumption detector that can detect terahertz waves without the need for frequency mixing. The detector uses a pair of tiny transistors as antennas, which consume very little power.

Even with both antennas on the chip, their wake-up receiver was only 1.54 square millimeters in size and consumed less than 3 microwatts of power. This dual-antenna setup maximizes performance and makes it easier to read signals.
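The article does not detail the detector circuit. One standard way to receive a signal without frequency mixing is square-law (envelope) detection: squaring the incoming wave and averaging it over each bit period reveals whether the carrier was present, with no local oscillator. The sketch below assumes on-off keying (OOK) and ideal, noise-free samples; the function names and parameters are hypothetical, and it illustrates the principle rather than this chip’s transistor-level design.

```python
import math


def ook_waveform(bits, samples_per_bit=64, cycles_per_bit=8, amplitude=1.0):
    """Sampled on-off-keyed carrier: carrier present for a 1 bit, silent for a 0."""
    samples = []
    for i, bit in enumerate(bits):
        for n in range(samples_per_bit):
            t = i + n / samples_per_bit  # time measured in bit periods
            carrier = amplitude * math.cos(2 * math.pi * cycles_per_bit * t)
            samples.append(carrier if bit else 0.0)
    return samples


def square_law_envelope(samples, samples_per_bit=64):
    """Square each sample, then average over each bit period (a crude low-pass filter).

    cos^2 averages to 1/2 over whole carrier cycles, so a 1 bit yields about
    amplitude**2 / 2 and a 0 bit yields 0, with no mixing or local oscillator.
    """
    envelope = []
    for start in range(0, len(samples), samples_per_bit):
        window = samples[start:start + samples_per_bit]
        envelope.append(sum(x * x for x in window) / len(window))
    return envelope


print(square_law_envelope(ook_waveform([1, 0, 1, 1])))
```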

Once a terahertz signal is received, the chip amplifies it and converts the analog signal into digital data for processing. This digital signal carries a token, which is a string of bits (0s and 1s). If the token matches the wake-up receiver’s stored token, the receiver activates the device.
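The final step, slicing the amplified signal into bits and comparing the result with a stored token, might look like the minimal sketch below. The threshold, token length, and function names are illustrative assumptions; a real receiver performs this step in dedicated hardware.

```python
import hmac


def slice_bits(levels, threshold):
    """Convert one amplified analog level per bit period into a '0'/'1' string."""
    return "".join("1" if level > threshold else "0" for level in levels)


def should_wake(received_bits: str, stored_token: str) -> bool:
    """Return True only if the received token matches the stored token.

    hmac.compare_digest avoids content-based short-circuiting, so the time
    taken does not reveal where the two tokens first differ.
    """
    return hmac.compare_digest(received_bits.encode(), stored_token.encode())


STORED_TOKEN = "1011"  # hypothetical 4-bit token; real tokens are longer
bits = slice_bits([0.50, 0.02, 0.47, 0.52], threshold=0.25)
print(bits, should_wake(bits, STORED_TOKEN))  # prints: 1011 True
```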

Ramping up security

In most wake-up receivers, the same token is reused again and again, so an eavesdropping attacker could work out what it is. The attacker could then send that signal repeatedly to wake the device over and over, a tactic called a denial-of-sleep attack.
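A toy model shows why a reused token is a liability: a receiver that accepts the same bit pattern every time cannot tell a genuine wake-up from a recorded copy. The class and token below are hypothetical.

```python
class StaticTokenReceiver:
    """Hypothetical wake-up receiver that accepts one fixed token forever."""

    def __init__(self, token: str):
        self.token = token
        self.wakeups = 0  # each wake-up costs active-mode energy

    def receive(self, bits: str) -> bool:
        if bits == self.token:
            self.wakeups += 1
            return True
        return False


rx = StaticTokenReceiver("10110010")
recorded = "10110010"   # bits an eavesdropper captured from one genuine wake-up
rx.receive(recorded)    # the genuine wake-up
for _ in range(1000):   # the attacker replays the same bits
    rx.receive(recorded)
print(rx.wakeups)  # prints 1001: every replay was accepted
```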

“With a wake-up receiver, the lifetime of a device could be improved from one day to one month, for instance, but an attacker could use a denial-of-sleep attack to drain that entire battery life in even less than a day. That is why we put authentication into our wake-up receiver,” he explains.
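Lee’s point can be made concrete with a simple energy budget: a device’s lifetime is its battery energy divided by its average power, and every forced wake-up adds to that average. The battery size, sleep power, energy per wake-up, and wake-up rates below are illustrative assumptions, not measurements from the paper.

```python
SECONDS_PER_HOUR = 3600.0


def lifetime_hours(battery_j, sleep_power_w, wakeups_per_hour, energy_per_wakeup_j):
    """Battery lifetime when the device idles in sleep mode between wake-ups.

    Average power = sleep power + (wake-ups per second) * (energy per wake-up).
    """
    avg_power_w = sleep_power_w + wakeups_per_hour * energy_per_wakeup_j / SECONDS_PER_HOUR
    return battery_j / avg_power_w / SECONDS_PER_HOUR


# Illustrative assumptions: a 1 J battery, 3 uW sleep-mode draw, 1 mJ per wake-up.
normal = lifetime_hours(1.0, 3e-6, wakeups_per_hour=1, energy_per_wakeup_j=1e-3)
attacked = lifetime_hours(1.0, 3e-6, wakeups_per_hour=36_000, energy_per_wakeup_j=1e-3)
print(f"normal: {normal:.1f} h, under attack: {attacked * 60:.1f} min")
```

With these assumed numbers, a lifetime of roughly 85 hours shrinks to under 2 minutes when an attacker forces ten wake-ups per second.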

They added an authentication block that randomizes the device’s token each time, using an algorithm and a key shared with trusted senders. This key acts like a password: a sender who knows it can send a signal with the right token. The researchers implement this with a technique known as lightweight cryptography, which keeps the extra power drawn by the entire authentication process to just a few nanowatts.
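The article does not name the cipher. As a stand-in, the sketch below derives a fresh token from the shared key and a message counter using HMAC-SHA256, in the style of HOTP one-time passwords (RFC 4226). HMAC-SHA256 is not a lightweight-cryptography primitive, so this shows only the token-rotation idea, not the researchers’ algorithm; the key, token length, and look-ahead window are assumptions.

```python
import hashlib
import hmac

TOKEN_BYTES = 4  # assumed 32-bit token


def make_token(key: bytes, counter: int) -> bytes:
    """Derive a one-time wake-up token from the shared key and a message counter."""
    mac = hmac.new(key, counter.to_bytes(8, "big"), hashlib.sha256).digest()
    return mac[:TOKEN_BYTES]


class RollingTokenReceiver:
    """Accepts each counter value at most once, so replayed tokens are rejected."""

    def __init__(self, key: bytes, window: int = 4):
        self.key = key
        self.next_counter = 0
        self.window = window  # look-ahead that tolerates a few lost transmissions

    def receive(self, token: bytes) -> bool:
        for counter in range(self.next_counter, self.next_counter + self.window):
            if hmac.compare_digest(token, make_token(self.key, counter)):
                self.next_counter = counter + 1  # retire this and all older counters
                return True
        return False


key = b"hypothetical-shared-key"
rx = RollingTokenReceiver(key)
first = make_token(key, 0)
print(rx.receive(first))               # genuine sender: accepted
print(rx.receive(first))               # replayed copy: rejected
print(rx.receive(make_token(key, 1)))  # next genuine token: accepted
```

Because the receiver retires each counter after use, a recorded token is worthless to an eavesdropper, which is what defeats the denial-of-sleep replay.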

They tested their device by sending terahertz signals to the wake-up receiver while increasing the distance between the chip and the terahertz source. This measured the sensitivity of their receiver: the minimum signal power the device needs to successfully detect a signal. The farther a signal travels, the less power reaches the receiver.
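Why distance lowers received power can be sketched with the Friis free-space equation: path loss grows as 20·log10(4πd/λ), so each doubling of distance costs about 6 dB. The minimal sketch below computes received power and the range at which it falls to a given sensitivity. Every numeric input is a placeholder assumption, and the model ignores atmospheric absorption and pointing loss, both of which matter at terahertz frequencies.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss in dB: 20*log10(4*pi*d/lambda), valid in the far field."""
    wavelength = SPEED_OF_LIGHT_M_PER_S / freq_hz
    return 20 * math.log10(4 * math.pi * distance_m / wavelength)


def received_dbm(tx_dbm, tx_gain_dbi, rx_gain_dbi, distance_m, freq_hz):
    """Friis link budget: transmit power plus antenna gains minus path loss."""
    return tx_dbm + tx_gain_dbi + rx_gain_dbi - fspl_db(distance_m, freq_hz)


def max_range_m(tx_dbm, tx_gain_dbi, rx_gain_dbi, sensitivity_dbm, freq_hz):
    """Distance at which received power falls to the receiver's sensitivity."""
    margin_db = tx_dbm + tx_gain_dbi + rx_gain_dbi - sensitivity_dbm
    wavelength = SPEED_OF_LIGHT_M_PER_S / freq_hz
    return wavelength / (4 * math.pi) * 10 ** (margin_db / 20)


# Placeholder link budget, not the paper's measured values.
print(f"range ~ {max_range_m(10.0, 30.0, 0.0, -60.0, 0.3e12):.1f} m")
```

In this model a more sensitive receiver (a lower sensitivity in dBm) extends range directly: every 6 dB of extra sensitivity roughly doubles the distance.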

“We achieved 5- to 10-meter longer distance demonstrations than others, using a device with a very small size and microwatt level power consumption,” Lee says.

But to be most effective, terahertz waves need to hit the detector dead-on. If the chip is at an angle, some of the signal will be lost. So the researchers paired their device with a terahertz beam-steerable array, recently developed by the Han group, to precisely direct the terahertz waves. Using this technique, they could send signals to multiple chips with minimal loss.
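The beam-steerable array’s internals are not described here, but the textbook principle behind phased-array steering is progressive phase shifts. For a uniform linear array with element spacing d, driving element n with phase -2πn·d·sin(θ)/λ makes all elements add in phase toward angle θ. The sketch below computes those phases and a normalized array factor to show how little signal reaches a direction the beam is not pointed at. The array size and spacing are assumptions, not parameters of the Han group’s hardware.

```python
import cmath
import math


def steering_phases_rad(num_elements, spacing_m, wavelength_m, steer_deg):
    """Phase for each element of a uniform linear array, steering toward steer_deg.

    Toward angle theta (from broadside), successive elements' paths differ by
    d*sin(theta); driving element n with phase -2*pi*n*d*sin(theta)/lambda
    cancels that difference so the contributions add in phase.
    """
    k = 2 * math.pi / wavelength_m  # wavenumber
    s = math.sin(math.radians(steer_deg))
    return [(-k * n * spacing_m * s) % (2 * math.pi) for n in range(num_elements)]


def array_factor(phases, spacing_m, wavelength_m, look_deg):
    """Normalized field strength toward look_deg (1.0 means fully in phase)."""
    k = 2 * math.pi / wavelength_m
    s = math.sin(math.radians(look_deg))
    total = sum(cmath.exp(1j * (k * n * spacing_m * s + p)) for n, p in enumerate(phases))
    return abs(total) / len(phases)


# Assumed 4-element array with half-wavelength spacing (lengths in wavelengths).
phases = steering_phases_rad(4, 0.5, 1.0, steer_deg=30)
print(array_factor(phases, 0.5, 1.0, 30))  # toward the steered direction
print(array_factor(phases, 0.5, 1.0, 0))   # toward broadside
```

With these assumptions the array factor is about 1.0 toward 30 degrees and essentially zero toward broadside, which is why pointing the beam at each chip matters.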

In the future, Lee and his collaborators want to tackle this problem of signal degradation. If they can find a way to maintain signal strength when receiver chips move or tilt slightly, they could increase the performance of these devices. They also want to demonstrate their wake-up receiver in very small sensors and fine-tune the technology for use in real-world devices.

“We have developed a rich technology portfolio for future millimeter-sized sensing, tagging, and authentication platforms, including terahertz backscattering, energy harvesting, and electrical beam steering and focusing. Now, this portfolio is more complete with Eunseok’s first-ever terahertz wake-up receiver, which is critical to save the extremely limited energy available on those mini platforms,” Han says.

Additional co-authors include Muhammad Ibrahim Wasiq Khan PhD ’22; Xibi Chen, an EECS graduate student; Utsav Banerjee PhD ’21, an assistant professor at the Indian Institute of Science; Nathan Monroe PhD ’22; and Rabia Tugce Yazicigil, an assistant professor of electrical and computer engineering at Boston University.