Inch by inch, this machine is leading soft robotics to a more energy-efficient future

What Are The Differences Between Safe LiDAR and LiDAR?

Ghostbuster: Detecting Text Ghostwritten by Large Language Models

The structure of Ghostbuster, our new state-of-the-art method for detecting AI-generated text.

Large language models like ChatGPT write impressively well: so well, in fact, that they’ve become a problem. Students have begun using these models to ghostwrite assignments, leading some schools to ban ChatGPT. These models are also prone to producing text with factual errors, so wary readers may want to know whether generative AI tools have been used to ghostwrite news articles or other sources before trusting them.

What can teachers and consumers do? Existing tools to detect AI-generated text sometimes do poorly on data that differs from what they were trained on. In addition, if these detectors falsely classify real human writing as AI-generated, they can jeopardize students whose genuine work is called into question.

Our recent paper introduces Ghostbuster, a state-of-the-art method for detecting AI-generated text. Ghostbuster works by finding the probability of generating each token in a document under several weaker language models, then combining functions based on these probabilities as input to a final classifier. Ghostbuster doesn’t need to know what model was used to generate a document, nor the probability of generating the document under that specific model. This property makes Ghostbuster particularly useful for detecting text potentially generated by an unknown model or a black-box model, such as the popular commercial models ChatGPT and Claude, for which probabilities aren’t available. We’re particularly interested in ensuring that Ghostbuster generalizes well, so we evaluated it across a range of ways that text could be generated, including different domains (using newly collected datasets of essays, news, and stories), language models, and prompts.
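To make the pipeline concrete, here is a toy sketch of the same shape: per-token probabilities from weak models, combined into features, fed to a classifier. It is not Ghostbuster itself. The two "weaker language models" are hypothetical add-one-smoothed unigram and bigram models trained on a tiny invented corpus, the features (mean, minimum, and variance of each model's per-token log-probabilities, plus the mean gap between the two models) are hand-picked for illustration, and a small logistic regression stands in for the final classifier. The documents and labels are made up.

```python
import math
from collections import Counter
from statistics import mean, pstdev, pvariance


def unigram_model(corpus):
    """Hypothetical weak model 1: add-one-smoothed unigram log-probabilities."""
    counts, total = Counter(corpus), len(corpus)
    vocab = len(counts) + 1  # one extra slot for unseen tokens
    return lambda prev, tok: math.log((counts[tok] + 1) / (total + vocab))


def bigram_model(corpus):
    """Hypothetical weak model 2: add-one-smoothed bigram log-probabilities."""
    pairs, context = Counter(zip(corpus, corpus[1:])), Counter(corpus[:-1])
    vocab = len(set(corpus)) + 1
    return lambda prev, tok: math.log((pairs[(prev, tok)] + 1) / (context[prev] + vocab))


def features(doc, models):
    """Turn per-token log-probabilities from two weak models into a feature vector."""
    tokens = doc.lower().split()
    prevs = [None] + tokens[:-1]  # None marks "no previous token"
    lp_a, lp_b = ([m(p, t) for p, t in zip(prevs, tokens)] for m in models)
    feats = []
    for lp in (lp_a, lp_b):
        feats += [mean(lp), min(lp), pvariance(lp)]
    # Cross-model feature: average per-token gap between the two weak models.
    feats.append(mean(a - b for a, b in zip(lp_a, lp_b)))
    return feats


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1 / (1 + math.exp(-z))
    e = math.exp(z)
    return e / (1 + e)


def train_classifier(X, y, lr=0.5, steps=2000):
    """Full-batch gradient descent for logistic regression on standardized features."""
    cols = list(zip(*X))
    mu = [mean(c) for c in cols]
    sd = [pstdev(c) or 1.0 for c in cols]  # guard against constant features

    def scale(x):
        return [(v - m) / s for v, m, s in zip(x, mu, sd)]

    Xs = [scale(x) for x in X]
    w, b, n = [0.0] * len(mu), 0.0, len(Xs)
    for _ in range(steps):
        errs = [sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) - t for x, t in zip(Xs, y)]
        w = [wj - lr * sum(e * x[j] for e, x in zip(errs, Xs)) / n for j, wj in enumerate(w)]
        b -= lr * sum(errs) / n
    return lambda x: sigmoid(sum(wj * xj for wj, xj in zip(w, scale(x))) + b)


# Usage with an invented reference corpus and invented labeled documents.
REFERENCE = ("the model is a model of the text and the text is a model " * 20).split()
models = [unigram_model(REFERENCE), bigram_model(REFERENCE)]
ai_docs = ["the model is a model of the text", "the text is a model of the model",
           "a model of the text is the model"]
human_docs = ["quirky zebras juggle purple umbrellas", "my grandmother distrusts elevators",
              "rain hammered the tin roof all night"]
X = [features(d, models) for d in ai_docs + human_docs]
y = [1] * len(ai_docs) + [0] * len(human_docs)
clf = train_classifier(X, y)
print(f"P(AI-generated) = {clf(features('the text is a model of the text', models)):.3f}")
```

The classifier only ever sees the weak models' scores, which mirrors the point above that Ghostbuster does not need probabilities from the model that produced a document. Stronger scoring models or a richer feature set would change the numbers, not the structure.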

Asymmetric Certified Robustness via Feature-Convex Neural Networks

TLDR: We propose the asymmetric certified robustness problem, which requires certified robustness for only one class and reflects real-world adversarial scenarios. This focused setting allows us to introduce feature-convex classifiers, which produce closed-form and deterministic certified radii on the order of milliseconds.

Figure 1. Illustration of feature-convex classifiers and their certification for sensitive-class inputs. This architecture composes a Lipschitz-continuous feature map $\varphi$ with a learned convex function $g$. Since $g$ is convex, it is globally underapproximated by its tangent plane at $\varphi(x)$, yielding certified norm balls in the feature space. Lipschitzness of $\varphi$ then yields appropriately scaled certificates in the original input space.
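The certificate described in the caption can be written out in a few lines. This derivation assumes that $\varphi$ is $L$-Lipschitz with respect to the $\ell_p$ norm in both input and feature space, that $g$ is differentiable at $\varphi(x)$, and (as a convention chosen for this sketch) that the sensitive class is predicted exactly when $g(\varphi(x)) > 0$; $\|\cdot\|_*$ is the dual norm, i.e. $\ell_q$ with $1/p + 1/q = 1$. For any perturbed input $x'$:

$$
\begin{aligned}
g(\varphi(x')) &\ge g(\varphi(x)) + \nabla g(\varphi(x))^\top \big(\varphi(x') - \varphi(x)\big) && \text{(convexity of } g\text{)} \\
&\ge g(\varphi(x)) - \|\nabla g(\varphi(x))\|_* \, \|\varphi(x') - \varphi(x)\|_p && \text{(H\"older's inequality)} \\
&\ge g(\varphi(x)) - L \, \|\nabla g(\varphi(x))\|_* \, \|x' - x\|_p && \text{(Lipschitzness of } \varphi\text{)}
\end{aligned}
$$

so a sensitive-class prediction ($g(\varphi(x)) > 0$) is unchanged for every $x'$ with

$$
\|x' - x\|_p < r(x) = \frac{g(\varphi(x))}{L \, \|\nabla g(\varphi(x))\|_*},
$$

with $r(x) = \infty$ if the gradient vanishes. The radius is a single closed-form expression, which is why no sampling or optimization is needed to compute it.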

Despite their widespread usage, deep learning classifiers are acutely vulnerable to adversarial examples: small, human-imperceptible image perturbations that fool machine learning models into misclassifying the modified input. This weakness severely undermines the reliability of safety-critical processes that incorporate machine learning. Many empirical defenses against adversarial perturbations have been proposed, often only to be defeated later by stronger attack strategies. We therefore focus on certifiably robust classifiers, which provide a mathematical guarantee that their prediction will remain constant within an $\ell_p$-norm ball around an input.
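A minimal numerical sketch of such a guarantee in the feature-convex setting, for the $\ell_2$ norm. Everything here is hypothetical and chosen for illustration rather than taken from the paper: a linear feature map $\varphi(x) = Ax$, whose Frobenius norm upper-bounds its $\ell_2$ Lipschitz constant, and a convex $g$ built as a maximum of affine functions, standing in for learned networks. It assumes the sensitive class is predicted when $g(\varphi(x)) > 0$ and uses the closed-form radius $g(\varphi(x)) / (L\,\|\nabla g(\varphi(x))\|_2)$.

```python
import math

# Hypothetical feature map phi(x) = A @ x (linear, hence Lipschitz).
A = [[1.0, 0.5], [-0.3, 2.0]]
# Hypothetical convex g(z) = max_i (W_i . z + C_i); a max of affine functions is convex.
W = [[1.0, 1.0], [-1.0, 0.5], [0.5, -1.0]]
C = [-1.0, -2.0, -2.0]

# The Frobenius norm upper-bounds the l2 operator norm, so it is a valid Lipschitz bound.
LIP = math.sqrt(sum(a * a for row in A for a in row))


def phi(x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def g_and_grad(z):
    """Return g(z) and a (sub)gradient: the slope of the active affine piece."""
    vals = [sum(w * zi for w, zi in zip(Wi, z)) + c for Wi, c in zip(W, C)]
    i = max(range(len(vals)), key=vals.__getitem__)
    return vals[i], W[i]


def certified_radius(x):
    """l2 radius within which g(phi(x + delta)) stays > 0 (sensitive class kept)."""
    val, grad = g_and_grad(phi(x))
    if val <= 0:
        return 0.0  # non-sensitive prediction: nothing to certify in the asymmetric setting
    grad_norm = math.sqrt(sum(gi * gi for gi in grad))  # l2 is its own dual norm
    return math.inf if grad_norm == 0 else val / (LIP * grad_norm)


# Usage: certify x0, then spot-check points on a circle just inside the radius.
x0 = [1.0, 1.0]
r = certified_radius(x0)
angles = [2 * math.pi * k / 360 for k in range(360)]
still_sensitive = all(
    g_and_grad(phi([x0[0] + 0.999 * r * math.cos(t), x0[1] + 0.999 * r * math.sin(t)]))[0] > 0
    for t in angles
)
print(r, still_sensitive)
```

The spot-check is only a sanity test; the guarantee itself comes from convexity and the Lipschitz bound, so computing `r` costs one forward pass and one gradient norm, with no randomness involved.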

Conventional certified robustness methods incur a range of drawbacks, including nondeterminism, slow execution, poor scaling, and certification against only one attack norm. We argue that these issues can be addressed by refining the certified robustness problem to be more aligned with practical adversarial settings.

The long jump: Athletic, insect-scale long jumping robots reach where others can’t

A gecko-inspired twist on robotic handling

A robot inspired by mantis shrimp to explore narrow underwater environments

Delica Drives Efficiency with AGV Installation

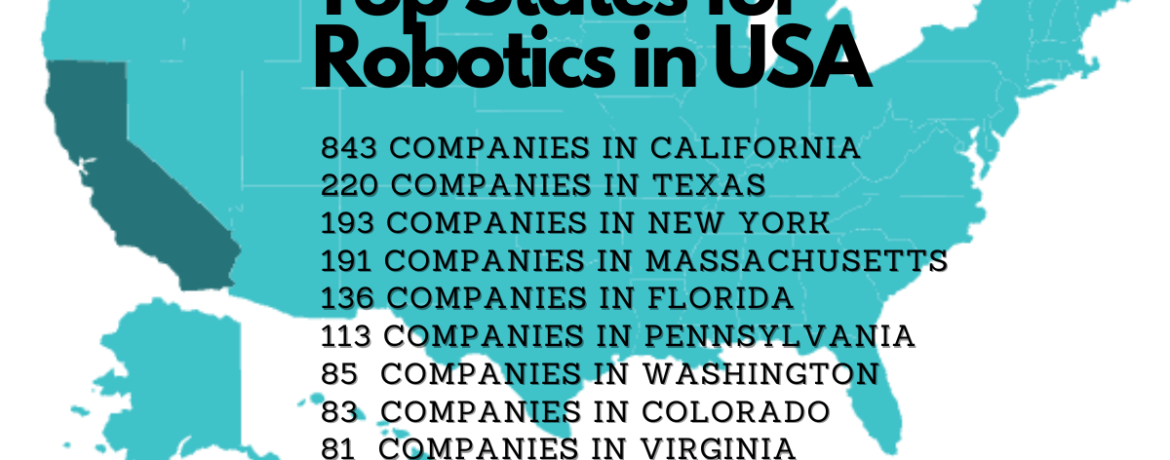

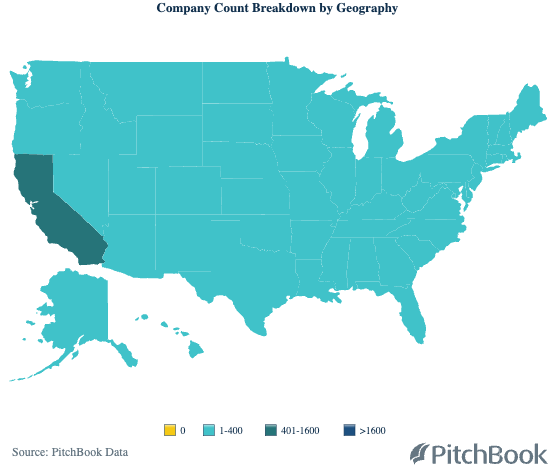

California is the robotics capital of the world

I came to the Silicon Valley region in 2010 because I knew it was the robotics center of the world, but it certainly doesn’t get anywhere near the media attention that some other robotics regions do. In California, robotics technology is a small fish in a much bigger technology pond, and that tends to conceal how important Californian companies are to the robotics revolution.

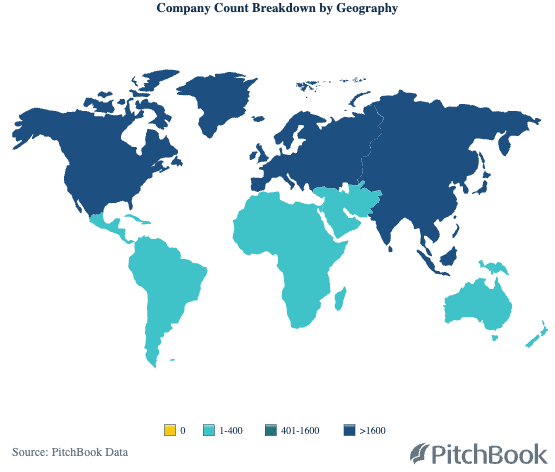

This conservative dataset from PitchBook [Vertical: Robotics and Drones] provides data for 7,166 robotics and drones companies, although a more customized search would yield closer to 10,000 robotics companies worldwide. Regions ordered by size are:

- North America 2802

- Asia 2337

- Europe 2285

- Middle East 321

- Oceania 155

- South America 111

- Africa 63

- Central America 13
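As a quick arithmetic check on the regional list, the sketch below (numbers copied from the list; nothing else assumed) computes each region's share of the regional sum. The regional figures add up to 8,087, more than the 7,166 companies quoted above, which suggests that some companies or countries are counted in more than one region; Russia and Turkey, for instance, appear in both the Asia and Europe country lists. That overlap is an inference from the lists, not something stated by the data source.

```python
# Company counts by region, copied from the list above.
REGIONS = {
    "North America": 2802,
    "Asia": 2337,
    "Europe": 2285,
    "Middle East": 321,
    "Oceania": 155,
    "South America": 111,
    "Africa": 63,
    "Central America": 13,
}
QUOTED_TOTAL = 7166

regional_sum = sum(REGIONS.values())
# Shares use the regional sum as denominator, since regions may overlap.
shares = {region: count / regional_sum for region, count in REGIONS.items()}
for region, share in shares.items():
    print(f"{region:<16} {share:6.1%}")
print(f"Sum of regions: {regional_sum}, quoted total: {QUOTED_TOTAL}")
```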

USA robotics companies by state

- California = 843 (667) * number of company locations, followed by number of headquarters

- Texas = 220 (159)

- New York = 193 (121)

- Massachusetts = 191 (135)

- Florida = 136 (95)

- Pennsylvania = 113 (89)

- Washington = 85 (61)

- Colorado = 83 (57)

- Virginia = 81 (61)

- Michigan = 70 (56)

- Illinois = 66 (43)

- Ohio = 65 (56)

- Georgia = 64 (46)

- New Jersey = 53 (36)

- Delaware = 49 (18)

- Maryland = 48 (34)

- Arizona = 48 (37)

- Nevada = 42 (29)

- North Carolina = 39 (29)

- Minnesota = 31 (25)

- Utah = 30 (24)

- Indiana = 29 (26)

- Oregon = 29 (20)

- Connecticut = 27 (22)

- DC = 26 (12)

- Alabama = 25 (21)

- Tennessee = 20 (18)

- Iowa = 17 (14)

- New Mexico = 17 (15)

- Missouri = 17 (16)

- Wisconsin = 15 (12)

- North Dakota = 14 (8)

- South Carolina = 13 (11)

- New Hampshire = 13 (12)

- Nebraska = 13 (11)

- Oklahoma = 10 (8)

- Kentucky = 10 (7)

- Kansas = 9 (9)

- Louisiana = 9 (8)

- Rhode Island = 8 (6)

- Idaho = 8 (6)

- Maine = 5 (5)

- Montana = 5 (4)

- Wyoming = 5 (3)

- Mississippi = 3 (1)

- Arkansas = 3 (2)

- Alaska = 3 (3)

- Hawaii = 2 (1)

- West Virginia = 1 (1)

- South Dakota = 1 (0)

Note: the number in brackets is for HQ locations, whereas the first number is for all company locations. The end results and rankings are practically the same.
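The claim that both counts give practically the same ranking can be checked with a rank correlation. The sketch below uses the state figures copied from the list above; Spearman's coefficient (with tied values given their average rank) is simply one reasonable choice of agreement measure, not something the source specifies. It also reports which state moves furthest between the two rankings.

```python
import math
from statistics import mean

# (all company locations, HQ locations) per state, copied from the list above.
STATES = {
    "California": (843, 667), "Texas": (220, 159), "New York": (193, 121),
    "Massachusetts": (191, 135), "Florida": (136, 95), "Pennsylvania": (113, 89),
    "Washington": (85, 61), "Colorado": (83, 57), "Virginia": (81, 61),
    "Michigan": (70, 56), "Illinois": (66, 43), "Ohio": (65, 56),
    "Georgia": (64, 46), "New Jersey": (53, 36), "Delaware": (49, 18),
    "Maryland": (48, 34), "Arizona": (48, 37), "Nevada": (42, 29),
    "North Carolina": (39, 29), "Minnesota": (31, 25), "Utah": (30, 24),
    "Indiana": (29, 26), "Oregon": (29, 20), "Connecticut": (27, 22),
    "DC": (26, 12), "Alabama": (25, 21), "Tennessee": (20, 18),
    "Iowa": (17, 14), "New Mexico": (17, 15), "Missouri": (17, 16),
    "Wisconsin": (15, 12), "North Dakota": (14, 8), "South Carolina": (13, 11),
    "New Hampshire": (13, 12), "Nebraska": (13, 11), "Oklahoma": (10, 8),
    "Kentucky": (10, 7), "Kansas": (9, 9), "Louisiana": (9, 8),
    "Rhode Island": (8, 6), "Idaho": (8, 6), "Maine": (5, 5),
    "Montana": (5, 4), "Wyoming": (5, 3), "Mississippi": (3, 1),
    "Arkansas": (3, 2), "Alaska": (3, 3), "Hawaii": (2, 1),
    "West Virginia": (1, 1), "South Dakota": (1, 0),
}


def average_ranks(values):
    """Rank from largest (1) to smallest, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: -values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average of 1-based positions i..j
        i = j + 1
    return ranks


def spearman(ra, rb):
    """Spearman correlation: Pearson correlation of the two rank vectors."""
    ma, mb = mean(ra), mean(rb)
    cov = sum((x - ma) * (y - mb) for x, y in zip(ra, rb))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in ra) * sum((y - mb) ** 2 for y in rb))


names = list(STATES)
r_all = average_ranks([STATES[s][0] for s in names])
r_hq = average_ranks([STATES[s][1] for s in names])
rho = spearman(r_all, r_hq)
shifts = {s: abs(a - h) for s, a, h in zip(names, r_all, r_hq)}
biggest_shift = max(shifts, key=shifts.get)
top5_all = set(sorted(names, key=lambda s: -STATES[s][0])[:5])
top5_hq = set(sorted(names, key=lambda s: -STATES[s][1])[:5])
print(f"Spearman rho = {rho:.3f}; largest rank shift: {biggest_shift}")
```

Run on these figures, the coefficient comes out close to 1 and the top five states are the same under both counts, consistent with the note; Delaware shows the largest gap between its all-locations rank and its HQ rank.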

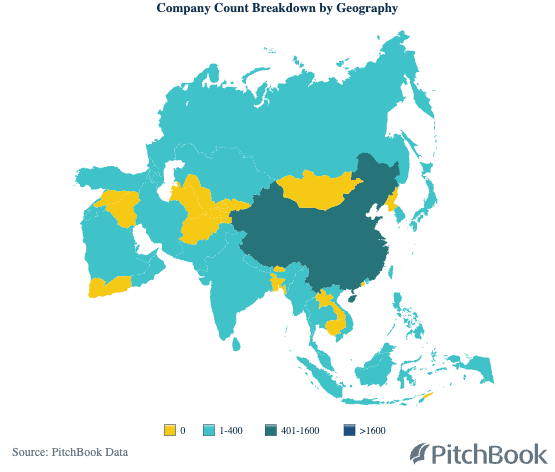

ASIA robotics companies by country

- China = 1350

- Japan = 283

- India = 261

- South Korea = 246

- Israel = 193

- Hong Kong = 72

- Russia = 69

- United Arab Emirates = 50

- Turkey = 48

- Malaysia = 35

- Taiwan = 21

- Saudi Arabia = 19

- Thailand = 13

- Vietnam = 12

- Indonesia = 10

- Lebanon = 7

- Kazakhstan = 3

- Iran = 3

- Kuwait = 3

- Oman = 3

- Qatar = 3

- Pakistan = 3

- Philippines = 2

- Bahrain = 2

- Georgia = 2

- Sri Lanka = 2

- Azerbaijan = 1

- Nepal = 1

- Armenia = 1

- Burma/Myanmar = 1

Countries with no robotics companies: Yemen, Iraq, Syria, Turkmenistan, Afghanistan, Jordan, Uzbekistan, Kyrgyzstan, Tajikistan, Bangladesh, Bhutan, Mongolia, Cambodia, Laos, North Korea, East Timor.

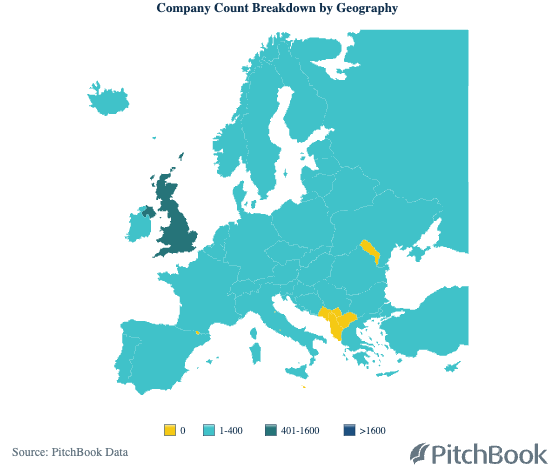

UK/EUROPE robotics companies by country

- United Kingdom = 443

- Germany = 331

- France = 320

- Spain = 159

- Netherlands = 156

- Switzerland = 140

- Italy = 125

- Denmark = 115

- Sweden = 85

- Norway = 80

- Poland = 74

- Belgium = 72

- Russia = 69

- Austria = 51

- Turkey = 48

- Finland = 45

- Portugal = 36

- Ireland = 28

- Estonia = 24

- Ukraine = 22

- Czech Republic = 19

- Romania = 19

- Hungary = 18

- Lithuania = 18

- Latvia = 15

- Greece = 15

- Bulgaria = 11

- Slovakia = 10

- Croatia = 7

- Slovenia = 6

- Serbia = 6

- Belarus = 4

- Iceland = 3

- Cyprus = 2

- Bosnia & Herzegovina = 1

Countries with no robotics companies: Andorra, Montenegro, Albania, Macedonia, Kosovo, Moldova, Malta, Vatican City.

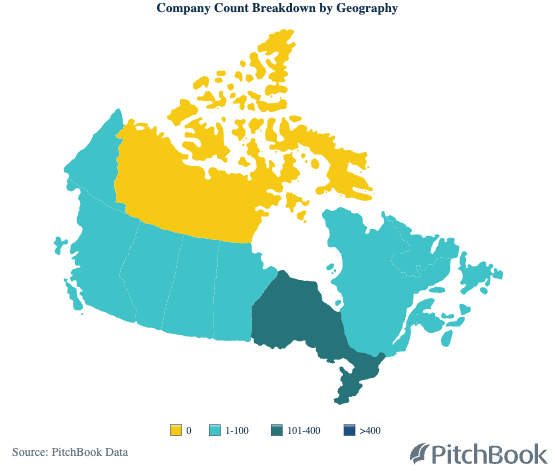

CANADA robotics companies by region

- Ontario = 144

- British Columbia = 60

- Quebec = 53

- Alberta = 34

- Manitoba = 7

- Saskatchewan = 6

- Newfoundland & Labrador = 2

- Yukon = 1

Regions with no robotics companies: Nunavut, Northwest Territories.

Stable and efficient robotic artificial muscles built upon new material combinations

The World’s First Dual-Arm Autonomous Robotic Sanding Cell

Robot Talk Episode 61 – Masoumeh Mansouri

Claire chatted to Masoumeh (Iran) Mansouri from the University of Birmingham about culturally sensitive robots and planning in complex environments.

Masoumeh Mansouri is an Associate Professor in the School of Computer Science at the University of Birmingham. Her research includes two complementary areas: (i) developing hybrid robot planning methods for unstructured environments shared with humans, and (ii) exploring topics at the intersection of cultural theories and robotics. In the latter, her main goal is to study whether and how robots can be culturally sensitive, given the broad definitions of culture in different fields of study.