How Much Does It Cost To Develop Tinder Dating App?

A Guide To Dating App Development

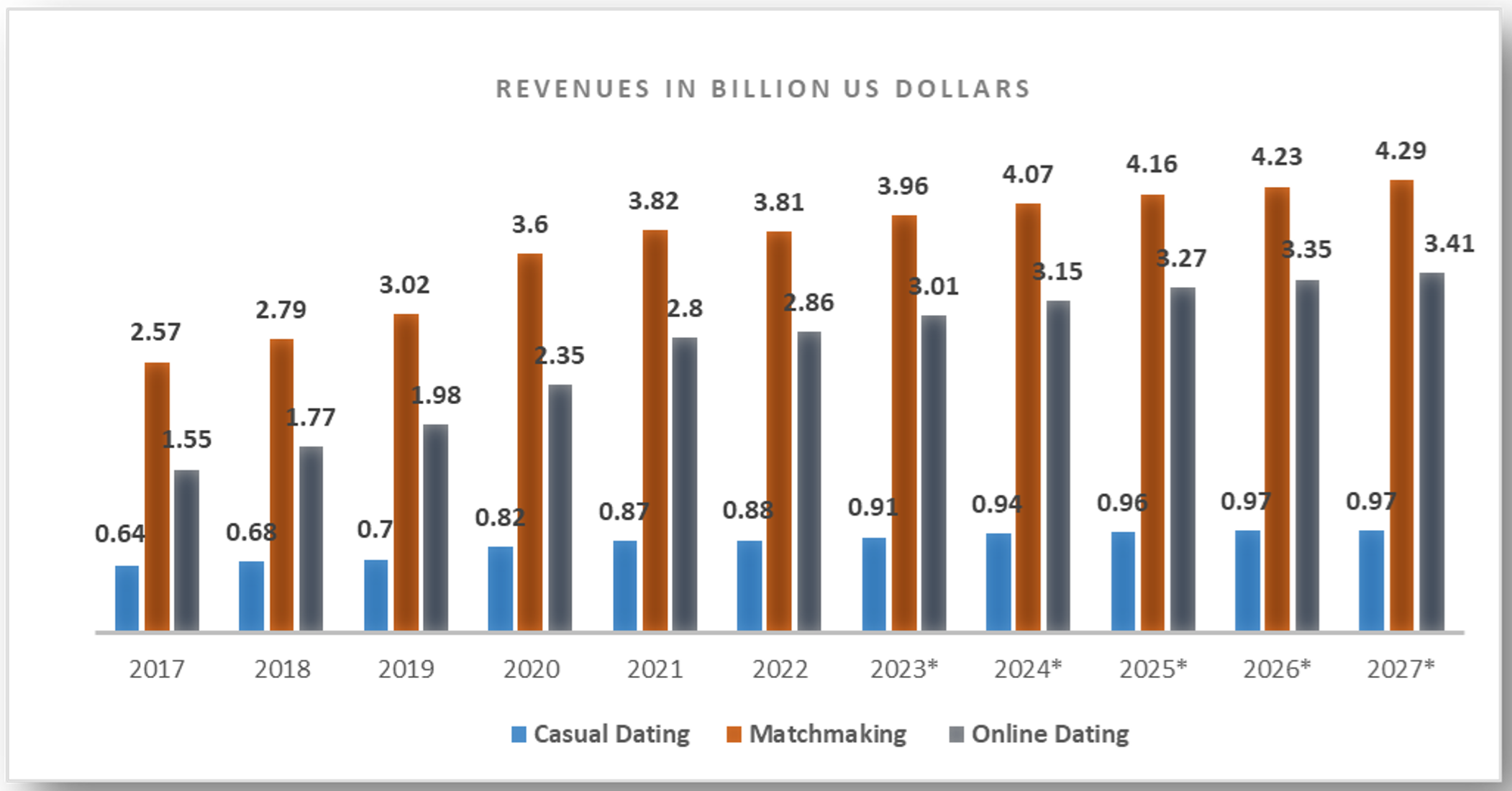

According to Statista, approximately 366 million people used online dating apps worldwide in 2022, and this number is expected to reach 440 million by 2027. The figure below shows the trend in the mobile dating app segment worldwide.

Dating Services Revenue Worldwide From 2017 to 2027

The figure above reflects the continuous growth of online dating services worldwide. Revenue is projected to rise from USD 2.86 billion in 2022 to nearly USD 3.41 billion by 2027.

The USA, in particular, is one of the fastest-growing markets for online dating services. In 2022, over 50 million Americans had installed mobile dating apps, and the number of users is expected to reach 64.54 million by 2027.
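The projections above imply fairly modest yearly growth. As a quick sanity check, the standard compound annual growth rate (CAGR) formula, (end / start)^(1 / years) - 1, can be applied to the Statista figures quoted in this section. The function name and the five-year span (2022 to 2027) are our own framing, and because the US starting figure is "over 50 million", the US rate is only approximate.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


# Figures quoted above, 2022 -> 2027 (five years)
figures = {
    "Global users (millions)": (366, 440),
    "Global revenue (USD billions)": (2.86, 3.41),
    "US users (millions)": (50, 64.54),
}

for label, (start, end) in figures.items():
    print(f"{label}: {cagr(start, end, 5):.1%} per year")
```

This works out to roughly 3.8% per year for global users, 3.6% for global revenue, and 5.2% for US users.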

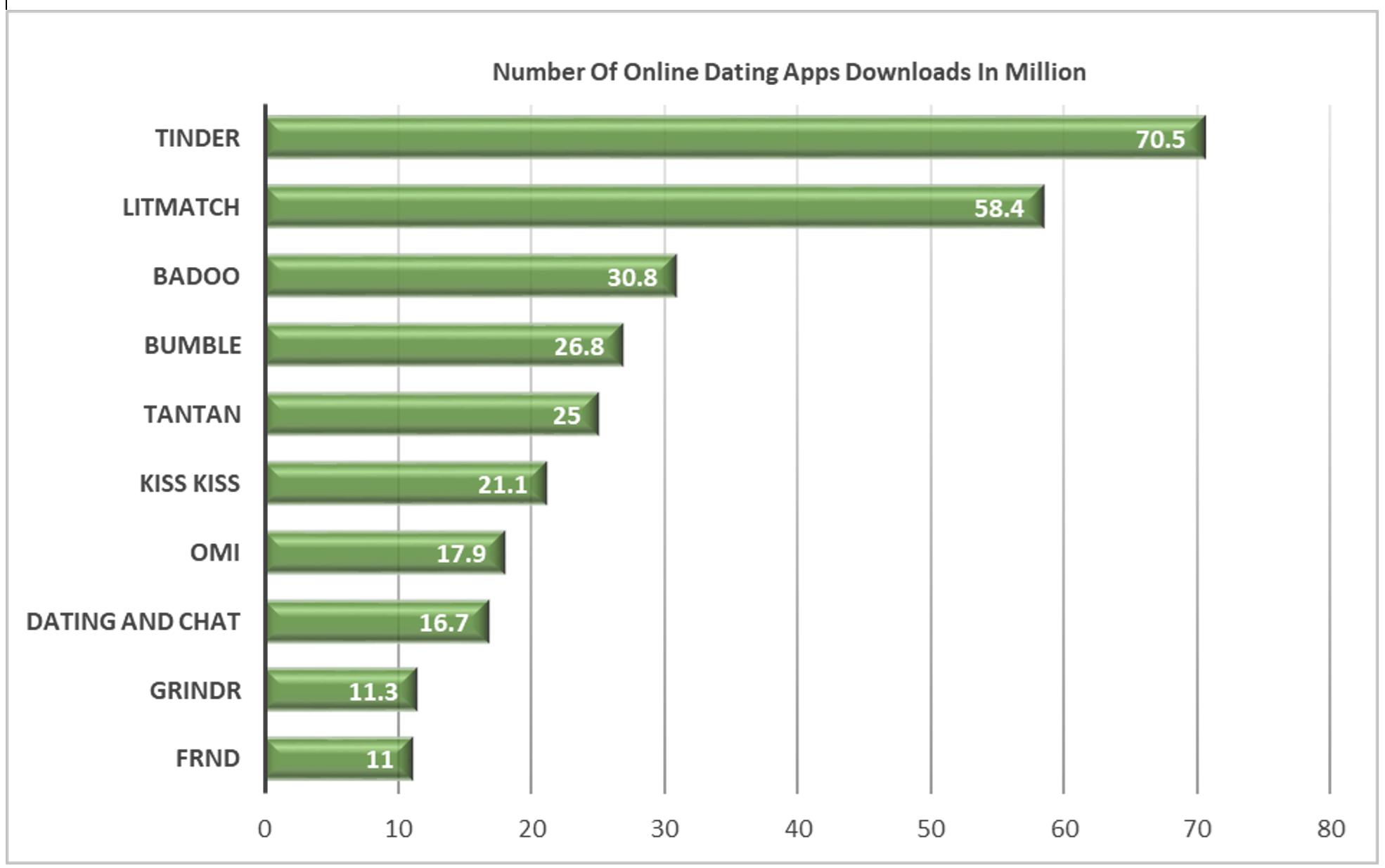

Now, let's look at the most popular online dating apps in the world, ranked by number of downloads.

Most Downloaded Dating Apps Worldwide 2022

According to Statista, Tinder was the most downloaded mobile dating app worldwide in 2022, with over 70 million downloads. That year, Litmatch took second place from Bumble with nearly 58 million downloads, an increase of over 31 million users worldwide.

In the US market, Tinder led the online dating industry in 2022 with over 957,000 monthly downloads, ranking as the #1 dating app in the country. Bumble came second with 786,000 downloads per month.

So whether you look at the global market or the US market alone, Tinder is the leading dating app. Now is the right time to build a popular Tinder-like dating app and seize the market opportunity.

If you plan to develop a Tinder-like dating app but are unsure how to go about it, this guide will help. It briefly covers the key features, the technology stack, and an estimated development cost.

Let's start with a brief overview of Tinder's history.

What Is Tinder?

Tinder, owned by Match Group, is the world's most popular free dating app. It launched on the App Store for iPhone users in September 2012 and on the Google Play Store for Android users on July 15, 2013. Tinder is now available in over 190 countries and has also become the most popular free social app worldwide.

This popular dating and social networking app is built on a simple swipe-right or swipe-left concept, giving users an easy way to find and meet compatible matches online. As one of the best dating apps in the USA, Tinder has become the go-to app for millions of people looking for a new relationship.

After creating a simple profile, people can easily find potential matches based on their needs and interests and start a conversation on a secure online dating platform. If both agree, they can then meet in person and go on a date.

With approximately 60 billion matches to date, Tinder holds the top spot in the US online dating industry.

How Does Tinder App Work?

Tinder offers a simple way to find, connect, chat, meet, and date new people, giving users a convenient and comfortable app experience. Its user-friendly, seamless performance sets Tinder apart from other dating apps. The basic flow looks like this:

- Download and Install Tinder App

- Register and create a profile, adding preferences and interests

- Start exploring other profiles

- Real-time location mapping to find nearby profiles

- Swipe right on a photo to like a profile

- On mutually liking each other, people can connect and chat

- Swipe left on a photo to ignore or reject a profile

- Instant chat facility

- In-app audio/video calling with matches

In short, the Tinder platform offers an easier and faster way to meet or date people.

The Most Important Features That You Should Add To A Dating App Like Tinder

#1. Simple Sign-in

Long registration procedures simply don't work in today's mobile app world. A simple, fast sign-up and login process improves the user experience, which is why social media integration is a must in modern mobile app development.

So let users access the dating app with their existing social accounts, such as Facebook, Google, and LinkedIn.
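Social sign-in is usually built on OAuth 2.0 and OpenID Connect. As a minimal sketch, assuming Google as the provider, the app first redirects the user to Google's authorization endpoint with a random `state` value, then checks that value when the user is sent back, to guard against cross-site request forgery. The function names, client ID, and redirect URI are hypothetical placeholders; a production app would normally use the provider's official SDK and exchange the returned code for tokens on its server.

```python
import secrets
from urllib.parse import parse_qs, urlencode, urlparse

# Google's OAuth 2.0 authorization endpoint
GOOGLE_AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"


def build_google_login_url(client_id: str, redirect_uri: str) -> tuple[str, str]:
    """Return (authorization URL, state) for the authorization-code flow."""
    state = secrets.token_urlsafe(16)  # keep in the user's session, verify on callback
    params = {
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "response_type": "code",
        "scope": "openid email profile",
        "state": state,
    }
    return f"{GOOGLE_AUTH_ENDPOINT}?{urlencode(params)}", state


def verify_callback_state(callback_url: str, expected_state: str) -> str:
    """Check the returned state and extract the authorization code."""
    query = parse_qs(urlparse(callback_url).query)
    if query.get("state", [None])[0] != expected_state:
        raise PermissionError("state mismatch: possible CSRF")
    return query["code"][0]


# Hypothetical client ID and callback URL
login_url, state = build_google_login_url("YOUR_CLIENT_ID", "https://example.com/auth/callback")
print(login_url)
```

Facebook and LinkedIn follow the same authorization-code pattern with their own endpoints and scopes.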

#2. Profile Creation

The user profile is another essential feature of dating apps like Tinder. An appealing profile with an attractive photo is key to engaging with a dating app: it makes the profile more credible and improves the chances of finding matches.

This feature lets users add their interests, preferences, needs, gender, and other details so they can find the kind of relationship they are looking for.

#3. AI Feature for Matching

This advanced feature adds value to any Android or iOS dating app. Features powered by Artificial Intelligence (AI) and Machine Learning help users find a pool of profiles that match their interests, desires, and age.

Based on age and interests, an AI-based profile matching algorithm in a Tinder-like dating app helps people find and connect with their soulmates with ease.
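Tinder does not publish its matching algorithm, so the following is only an illustrative sketch of the idea described above: keep candidates whose age fits both users' preferred ranges, then rank them by interest overlap using Jaccard similarity. The `Profile` fields and the ranking rule are assumptions; a real machine-learning recommender would learn from many more signals, such as swipe history.

```python
from dataclasses import dataclass, field


@dataclass
class Profile:
    user_id: str
    age: int
    min_age: int  # youngest age this user wants to see
    max_age: int  # oldest age this user wants to see
    interests: set[str] = field(default_factory=set)


def interest_similarity(a: Profile, b: Profile) -> float:
    """Jaccard similarity of two users' interest sets (0.0 to 1.0)."""
    union = a.interests | b.interests
    return len(a.interests & b.interests) / len(union) if union else 0.0


def mutually_acceptable(a: Profile, b: Profile) -> bool:
    """Both users fall inside each other's preferred age range."""
    return a.min_age <= b.age <= a.max_age and b.min_age <= a.age <= b.max_age


def rank_candidates(user: Profile, pool: list[Profile]) -> list[Profile]:
    """Return age-compatible candidates, most shared interests first."""
    eligible = [p for p in pool if p.user_id != user.user_id and mutually_acceptable(user, p)]
    return sorted(eligible, key=lambda p: interest_similarity(user, p), reverse=True)


alice = Profile("alice", 29, 27, 35, {"hiking", "jazz", "cooking"})
pool = [
    Profile("bob", 31, 25, 32, {"hiking", "cooking"}),
    Profile("carl", 40, 30, 45, {"hiking", "jazz", "cooking"}),  # outside alice's range
    Profile("dan", 28, 24, 30, {"gaming"}),
]
print([p.user_id for p in rank_candidates(alice, pool)])  # ['bob', 'dan']
```

Making the age check two-sided means nobody is shown to users who have explicitly excluded their age group, which avoids one-sided likes that can never turn into matches.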

#4. Swipe Feature

Swiping is one of the essential features of online dating apps like Tinder. The swipe-right/swipe-left model lets users quickly choose whom to connect and chat with, which makes profile selection easier and improves the user experience.
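Under the hood, the swipe model comes down to recording each user's decision and creating a match only when two users have both swiped right on each other. The in-memory `SwipeService` below is a hypothetical sketch of that rule; a real app would persist swipes in a database and notify both users when a match forms.

```python
from enum import Enum


class Swipe(Enum):
    LEFT = "left"    # pass / reject
    RIGHT = "right"  # like


class SwipeService:
    """In-memory sketch: a match forms when two users like each other."""

    def __init__(self) -> None:
        self._swipes: dict[tuple[str, str], Swipe] = {}
        self.matches: set[frozenset[str]] = set()

    def swipe(self, swiper: str, target: str, direction: Swipe) -> bool:
        """Record a swipe; return True if it completes a new mutual match."""
        if swiper == target:
            raise ValueError("users cannot swipe on themselves")
        self._swipes[(swiper, target)] = direction
        if direction is Swipe.RIGHT and self._swipes.get((target, swiper)) is Swipe.RIGHT:
            pair = frozenset((swiper, target))
            if pair not in self.matches:
                self.matches.add(pair)
                return True
        return False


service = SwipeService()
print(service.swipe("alice", "bob", Swipe.RIGHT))  # False: bob hasn't swiped yet
print(service.swipe("bob", "alice", Swipe.RIGHT))  # True: mutual like -> match
print(service.swipe("alice", "dan", Swipe.LEFT))   # False: a left swipe never matches
```

Storing a match as a `frozenset` makes the pair order-independent, so "alice/bob" and "bob/alice" are the same match.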

#5. GPS Tracking

This feature detects users' real-time location so they can find nearby connections and arrange meetings or dates more easily. It also lets users pin a specific location where they want to look for new friends or people to date.
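Finding nearby profiles typically means computing the great-circle distance between two users' coordinates and keeping those within a chosen radius. Below is a minimal sketch using the haversine formula, assuming a mean Earth radius of 6,371 km; the user IDs, coordinates, and radius are hypothetical. At scale, apps usually hand this job to a geospatial index (for example, MongoDB's `2dsphere` index) rather than scanning every user.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearby(user_loc: tuple[float, float], others: dict[str, tuple[float, float]], radius_km: float) -> list[str]:
    """IDs of users within radius_km of user_loc, nearest first."""
    dists = {uid: haversine_km(*user_loc, *loc) for uid, loc in others.items()}
    return sorted((uid for uid, d in dists.items() if d <= radius_km), key=dists.get)


others = {"bob": (40.7306, -73.9866), "carl": (34.0522, -118.2437)}  # New York vs Los Angeles
print(nearby((40.7128, -74.0060), others, radius_km=25))  # ['bob']
```

The same distance check works for a pinned location: pass the pinned coordinates instead of the device's live position.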

#6. Instant Chat

In-app chat is an essential feature of any Android or iOS dating app. Once two users like each other, the app lets them chat instantly, making communication easier and helping matches get to know each other.
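The key rule here is that chat opens only after a mutual like. Below is a hypothetical, in-memory sketch of that gate. The class and method names are our own, and a production app would deliver messages over a real-time transport such as WebSockets and keep them in persistent storage.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Message:
    sender: str
    recipient: str
    text: str
    sent_at: datetime


class ChatService:
    """Stores conversations, but only between users who have matched."""

    def __init__(self, matches: set[frozenset[str]]) -> None:
        self._matches = matches
        self._threads: dict[frozenset[str], list[Message]] = defaultdict(list)

    def send(self, sender: str, recipient: str, text: str) -> Message:
        pair = frozenset((sender, recipient))
        if pair not in self._matches:
            raise PermissionError("users can only chat after a mutual match")
        msg = Message(sender, recipient, text, datetime.now(timezone.utc))
        self._threads[pair].append(msg)
        return msg

    def history(self, user_a: str, user_b: str) -> list[Message]:
        """Messages between two users, oldest first (same result in either order)."""
        return list(self._threads.get(frozenset((user_a, user_b)), []))


chat = ChatService(matches={frozenset(("alice", "bob"))})
chat.send("alice", "bob", "Hi Bob!")
print(len(chat.history("bob", "alice")))  # 1
```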

#7. Audio/Video Calling

Audio/video calling is an advanced feature you should add to a dating app like Tinder. It lets people make audio or video calls to their connections directly from the app.

These are some of the most important features to integrate into an online dating app. Tinder, a top dating app in the USA, runs a profitable business and has built a vast community of people of all ages.

What Technology Stack Is Used for a Dating App Like Tinder?

Choosing the right technology stack is essential for robust app performance. Programming languages, UI frameworks, testing tools, and debuggers all play a key role in keeping an app flexible and its code readable. Tinder, the world's largest dating app, uses a fairly simple tech stack:

- Programming languages for Android and iOS development: Swift, Objective-C, Java, Ruby/Cucumber, and RubyMotion

- Database: MongoDB

- Cloud Storage: Amazon S3

- Twilio for sending push notifications

- Google Maps for location tracking in real-time

- Optimizely for A/B, multivariate, and multi-page testing

- Frameworks and libraries: Node.js and React Router

- Google Maps and MapKit for in-app location tracking features

- Payment gateways: PayPal and Braintree

This is a basic technology stack for dating apps like Tinder and Bumble. Top mobile app development companies in the USA choose the exact technologies, UI frameworks, and tools based on the business objectives and application requirements.

How Much Does It Cost To Develop A Tinder-like Dating App or Bumble App?

The actual cost of developing a Tinder-like dating app depends largely on the number of development hours and on the hourly rates charged by custom software development companies in the USA.

The final cost of an online dating app can therefore be estimated by multiplying the number of development hours by the hourly rate of the development company. Taking all these factors into account, a dating app can cost $100,000 or more.
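The hours-times-rate formula is easy to turn into a quick estimator. The feature breakdown, hour counts, and $100/hour rate below are purely hypothetical placeholders, not quotes from USM or any other company; plug in your own estimates.

```python
# Hypothetical effort estimates, in development hours per feature
feature_hours = {
    "sign-in and social login": 60,
    "profiles": 80,
    "matching logic": 150,
    "swipe UI": 100,
    "geolocation": 75,
    "chat": 125,
    "audio/video calls": 200,
    "backend, QA, and deployment": 250,
}

HOURLY_RATE_USD = 100  # hypothetical blended hourly rate


def estimate_cost(hours: dict[str, int], rate: float) -> float:
    """Total cost = total development hours x hourly rate."""
    return sum(hours.values()) * rate


total_hours = sum(feature_hours.values())
print(f"{total_hours} hours x ${HOURLY_RATE_USD}/h = ${estimate_cost(feature_hours, HOURLY_RATE_USD):,.0f}")
# 1040 hours x $100/h = $104,000
```

Breaking the estimate down per feature makes it easy to see how dropping or adding a feature (for example, video calling) moves the total.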

At USM Business Systems, a top custom mobile app development agency in the USA, we build best-in-class dating apps with advanced AI features and functionality at an affordable price.

Wrapping Up!

Online dating platforms and mobile apps like Tinder, Badoo, and Bumble are growing in popularity in the USA and other developed nations. Investing in Tinder clone app development could therefore be a forward-looking decision that expands your business in the highly competitive US market.

Hire an experienced mobile app development company like USM: share your app requirements, and we will build a dating app that takes your brand to new heights.

AI-Powered Tools Transforming Task Management and Scheduling

In today’s digital landscape, where efficiency is the new currency, AI-powered productivity tools have become essential allies.

This article marks the beginning of a series dedicated to exploring various AI productivity tools that are reshaping how we work. In this first installment, we delve into AI-enhanced scheduling and task management tools, offering a comprehensive look at some of the market leaders.

From automated scheduling to intelligent project management, AI tools like Motion, Reclaim AI, Clockwise, ClickUp, Taskade, and Asana are designed to streamline workflows and boost productivity. These tools leverage machine learning algorithms to predict and optimize our daily tasks, making it easier to manage time and resources effectively. We will examine their key features, strengths, weaknesses, and pricing to help you make informed decisions about integrating these tools into your workflow.

Top AI Scheduling and Task Management Tools

In this section, we will explore AI-powered tools that are tailored to streamline the scheduling of meetings and individual tasks, manage projects and tasks efficiently, and even combine both functionalities for a comprehensive solution. The first tool we’ll examine exemplifies this combined approach.

Motion

Motion (funding of $13.2 million, Series A) offers a unique blend of project management and scheduling features, essentially acting as a personal assistant but with enhanced capabilities. This tool is designed to streamline team workflows by integrating advanced AI scheduling with robust project management functionalities.

Key Features

- Project Work Scheduling: Motion integrates project tasks directly into the team’s calendar, allowing for seamless planning and task allocation. Think of it as a combination of Asana and an AI scheduling tool.

- AI Meeting Assistant: This feature automates meeting scheduling and communication, handling the logistics so your team can focus on the work that matters. Tasks are automatically scheduled based on deadlines, priorities, and team availability, with tasks appearing directly in team members’ calendars.

- Native Integrations: Motion connects with Google Calendar, Gmail, Zoom, Microsoft Teams, Google Meet, Zapier, Siri, and more, ensuring smooth workflow integration across various platforms.
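Motion does not document how its auto-scheduling works. To illustrate the general idea of placing tasks into free calendar time based on deadlines and priorities, here is a simple greedy sketch: tasks are sorted by deadline, then priority, and each one goes into the earliest free slot that can hold it before its deadline. The `Task` model, the use of minutes after midnight, and the greedy rule are all assumptions, and the sketch does not split a task across slots.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    duration: int  # minutes
    deadline: int  # minutes after midnight
    priority: int  # lower number = more important


def schedule(tasks: list[Task], free_slots: list[tuple[int, int]]) -> dict[str, tuple[int, int]]:
    """Greedily place tasks (earliest deadline, then priority) into free (start, end) slots."""
    slots = sorted(free_slots)
    plan: dict[str, tuple[int, int]] = {}
    for task in sorted(tasks, key=lambda t: (t.deadline, t.priority)):
        for i, (start, end) in enumerate(slots):
            finish = start + task.duration
            if finish <= end and finish <= task.deadline:
                plan[task.name] = (start, finish)
                slots[i] = (finish, end)  # shrink the slot we just used
                break
        # tasks that fit nowhere stay unscheduled so the user can review them
    return plan


free = [(540, 600), (660, 780)]  # 9:00-10:00 and 11:00-13:00
tasks = [
    Task("write report", 90, deadline=800, priority=2),
    Task("review PR", 30, deadline=620, priority=1),
]
print(schedule(tasks, free))  # {'review PR': (540, 570), 'write report': (660, 750)}
```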

Strengths

- Capacity Evaluation: Motion has full access to team calendars, enabling it to accurately assess the available hours for task completion outside of meetings and personal engagements.

- Voice and Email Task Assignment: Using Motion apps on your desktop or phone, you can assign tasks by talking to Siri or forwarding emails to a specific Motion address. Tasks are automatically added to Motion and the calendar, complete with priorities and deadlines.
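Capacity evaluation of this kind comes down to interval arithmetic: merge overlapping calendar events, then subtract them from the working day. The sketch below assumes times in minutes after midnight and a 9:00 to 17:00 workday; it illustrates the calculation, not Motion's actual implementation.

```python
def free_time(workday: tuple[int, int], events: list[tuple[int, int]]) -> list[tuple[int, int]]:
    """Return free (start, end) gaps in the workday, given possibly overlapping events."""
    day_start, day_end = workday
    # Clip events to the workday and drop ones entirely outside it
    clipped = sorted(
        (max(s, day_start), min(e, day_end)) for s, e in events if e > day_start and s < day_end
    )
    gaps, cursor = [], day_start
    for start, end in clipped:
        if start > cursor:
            gaps.append((cursor, start))
        cursor = max(cursor, end)  # overlapping events extend the busy stretch
    if cursor < day_end:
        gaps.append((cursor, day_end))
    return gaps


def capacity_hours(workday: tuple[int, int], events: list[tuple[int, int]]) -> float:
    """Hours available for task work outside meetings and personal engagements."""
    return sum(end - start for start, end in free_time(workday, events)) / 60


meetings = [(600, 660), (630, 690), (780, 840)]  # 10:00-11:00, 10:30-11:30, 13:00-14:00
print(free_time((540, 1020), meetings))  # [(540, 600), (690, 780), (840, 1020)]
print(capacity_hours((540, 1020), meetings))  # 5.5
```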

Weaknesses

- Reliability Issues: Some users report that task priorities can change unexpectedly, leading to rescheduling issues. Similarly, project steps may occasionally alter by themselves, causing potential disruptions in workflow.

Pricing

- Individual: $19 per month (billed annually) or $34 per month (billed monthly).

- Team: $12 per user per month (billed annually) or $20 per user per month (billed monthly).

Motion aims to enhance productivity by combining powerful scheduling features with project management tools, but it’s essential to consider the reported reliability issues when integrating it into your workflow.

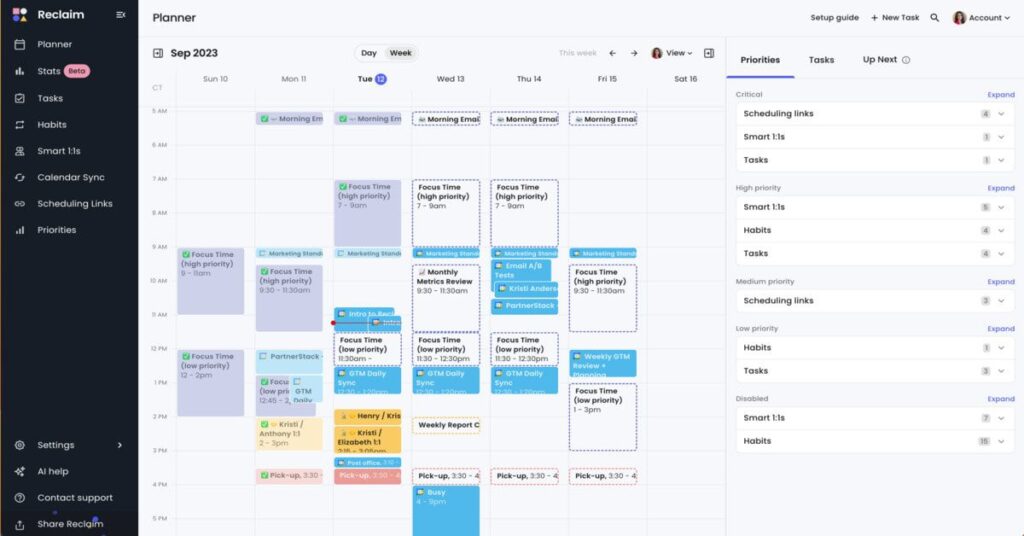

Reclaim AI

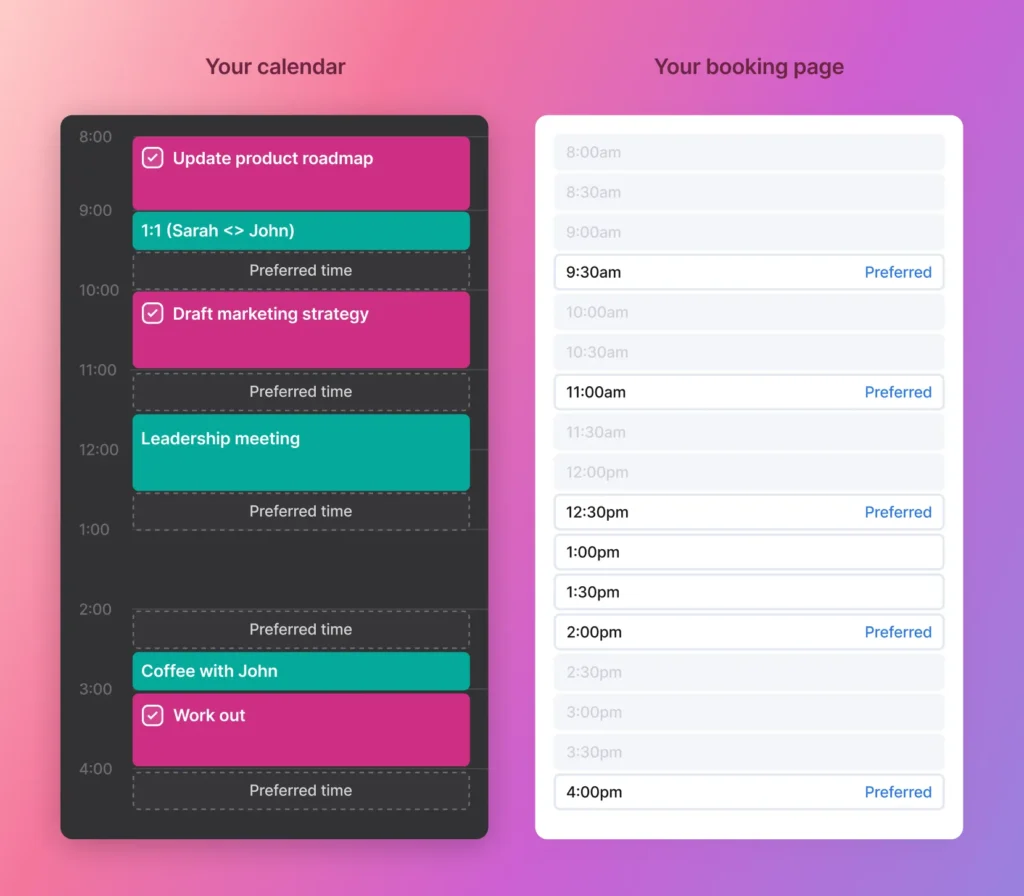

Reclaim AI (funding of $13.3 million, Seed) is designed to enhance team efficiency through intelligent scheduling and time management. This app leverages a smart calendar to optimize time, fostering better productivity, collaboration, and work-life balance. By integrating with various work tools and providing detailed analytics, Reclaim AI aims to streamline the scheduling process.

Key Features

- Automated Task Scheduling: Reclaim AI syncs with your task list to optimize daily planning automatically.

- Focus Time Protection: The app safeguards time for deep work, keeping meetings from crowding it out.

- Time Tracking Report: By connecting your calendar, Reclaim AI offers insights into how you’ve spent your work hours over the past 12 weeks.

- Integrations: Reclaim AI integrates natively with Google Calendar and supports task list synchronization from tools like Asana, ClickUp, and Google Tasks. It also integrates with Zoom for meetings.

Strengths

- Direct Task Scheduling: Users can set deadlines, and Reclaim AI will find the optimal time slots. If tasks aren’t completed, the tool automatically reschedules them.

- Habit and Routine Scheduling: Reclaim AI allows users to set up recurring habits and routines that auto-schedule in the calendar with flexibility based on user settings.

Weaknesses

- Setup Process: The initial setup of Reclaim AI can be cumbersome and not very user-friendly.

- Limited AI Functionality: For example, while Reclaim AI can account for travel time between meetings, users must manually input the travel duration. More advanced AI tools can calculate the travel time automatically based on the location information.

Pricing

- Free Tier: Offers basic tools at no cost.

- Starter Plan: $8 per seat per month (billed annually) or $10 per seat per month (billed monthly) for smaller teams.

- Business Plan: $12 per seat per month (billed annually) or $15 per seat per month (billed monthly) for larger teams.

Reclaim AI focuses on enhancing productivity through smart scheduling and robust integration capabilities, although its setup process and limited AI functionalities might pose challenges for some users.

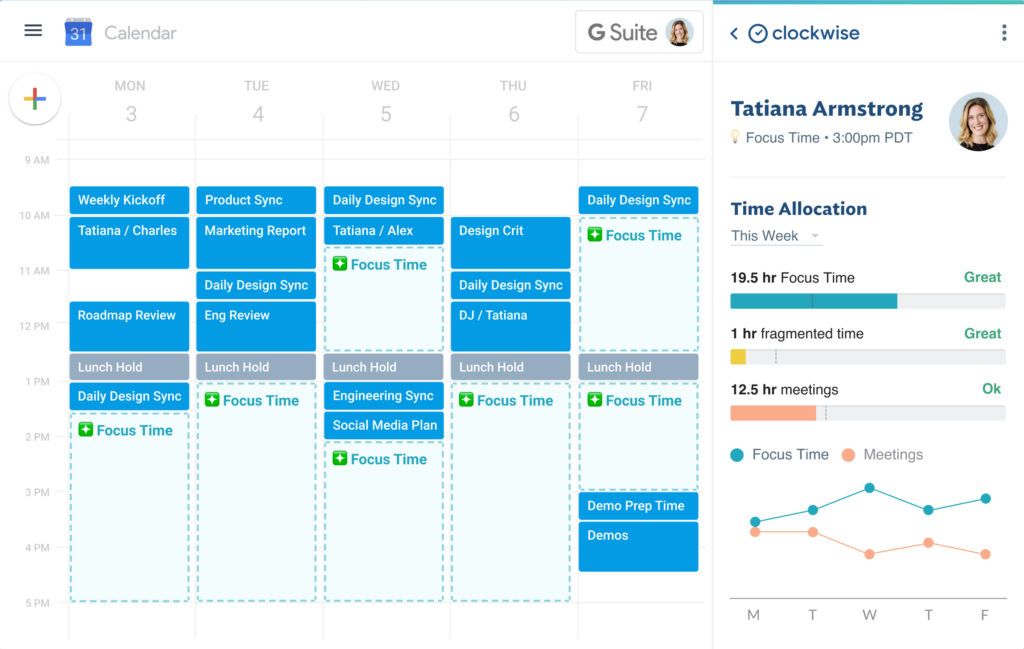

Clockwise

Clockwise (funding of $76.4 million, Series C) is a scheduling tool designed specifically for teams, promising to save an hour per week for each user. Clockwise allows you to adjust settings to craft an ideal day where work, breaks, and meetings coexist harmoniously.

Key Features

- Calendar Integration: Integrates seamlessly with popular productivity tools to streamline scheduling.

- Smart Task and Routine Scheduling: Automatically finds the best time for tasks and routines.

- Personal Time Protection: Safeguards personal time for meals, travel, and appointments.

- Meeting Optimization: Optimizes meeting times to free up uninterrupted blocks of Focus Time for each meeting participant.

- Focus Time Protection: Auto-schedules Focus Time holds to ensure deep work periods.

- Seamless Scheduling Links: Facilitates scheduling outside an organization using scheduling links.

- Organizational Analytics: Measures meeting load and focus time across the entire organization.

- Native Integrations: Integrates with Google Calendar, Slack, Zoom, and Asana, allowing tasks from Asana to be scheduled directly in Clockwise.

Strengths

- Smooth Setup Process: The setup is user-friendly and convenient.

- Automated Buffer Time Calculation: Automatically calculates travel time between meetings based on your primary work location and meeting destinations.
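Automatic buffers boil down to checking, for each pair of back-to-back meetings at different places, whether the gap between them covers the travel time. In this hypothetical sketch, travel durations come from a hand-written lookup table; Clockwise derives them from your work location and meeting destinations, which is not modeled here. The `Meeting` type and function name are our own.

```python
from dataclasses import dataclass


@dataclass
class Meeting:
    title: str
    start: int  # minutes after midnight
    end: int
    location: str


def travel_conflicts(
    meetings: list[Meeting], travel_minutes: dict[frozenset[str], int]
) -> list[tuple[str, str, int]]:
    """Return (earlier, later, shortfall_minutes) for consecutive meetings lacking travel buffer."""
    conflicts = []
    ordered = sorted(meetings, key=lambda m: m.start)
    for prev, nxt in zip(ordered, ordered[1:]):
        if prev.location == nxt.location:
            continue  # no travel needed
        needed = travel_minutes.get(frozenset((prev.location, nxt.location)), 0)
        gap = nxt.start - prev.end
        if gap < needed:
            conflicts.append((prev.title, nxt.title, needed - gap))
    return conflicts


travel = {frozenset(("Office", "Client HQ")): 30}  # hypothetical travel times
day = [
    Meeting("Standup", 540, 570, "Office"),
    Meeting("Client pitch", 585, 645, "Client HQ"),
]
print(travel_conflicts(day, travel))  # [('Standup', 'Client pitch', 15)]
```

A scheduler would use the shortfall to block out buffer time or to suggest moving the later meeting.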

Weaknesses

- Very Team-Oriented Design: Clockwise may not be ideal for freelancers or those working independently, as it is tailored more towards optimizing schedules for teams and maximizing focus time for team members.

Pricing

- Free Tier: Provides basic smart calendar management tools at no cost.

- Teams Plan: $6.75 per user per month, billed annually, suitable for smaller teams.

- Business Plan: $11.50 per user per month, billed annually, ideal for larger organizations.

- Enterprise Plan: Offers advanced security and customization options, with pricing available upon request.

Clockwise excels in creating an optimal schedule for team environments, ensuring that work, breaks, and meetings are perfectly balanced to enhance productivity and focus. However, its team-oriented features may not cater well to individual freelancers.

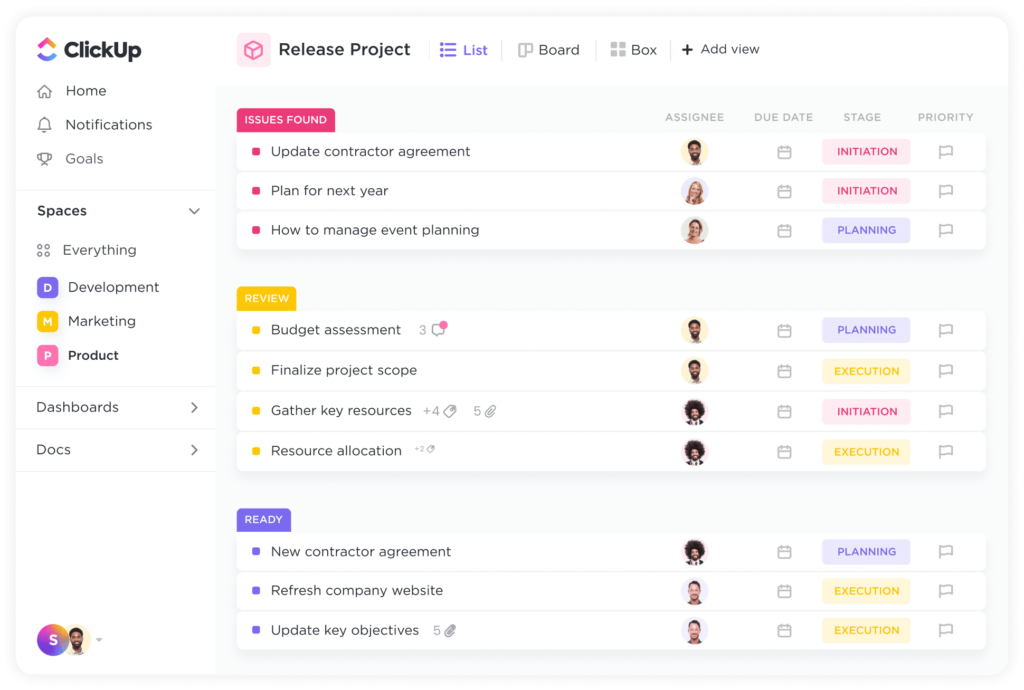

ClickUp

ClickUp (funding of $537.5 million, Series C) is a robust project management platform designed to enhance team communication, goal setting, and deadline management. It offers a suite of features that support various aspects of project and resource management, making it a versatile tool for teams of all sizes.

Key Features

- Project Management: ClickUp provides advanced functionalities for managing multiple projects and product development workflows.

- Knowledge Management: Users can create Docs or Wiki-based knowledge bases, perform searches, or consult an AI assistant for information.

- Resource Management: Features include time tracking, workload views, and goal reviews to effectively manage team resources.

- Collaboration Tools: Enhances team collaboration through Docs, Whiteboards, and Chats, among other tools.

- Extensive Integrations: Integrates with over 1,000 tools, including Google Calendar, Zoom, Microsoft Teams, GitHub, and Slack.

Strengths

- Automations: ClickUp offers over 100 automations to streamline workflows, manage routine tasks, and handle project handoffs.

- Advanced AI Features: Includes AI-powered functionalities such as task summaries, progress updates, writing assistance, prioritizing urgent tasks, and suggesting what to work on next.

Weaknesses

- Lack of Scheduling Functionality: ClickUp does not include scheduling features, requiring users to use a separate tool for meeting scheduling and time allocation.

- Cost: The tool is more expensive than alternatives, and its AI features are priced separately.

Pricing

- Free Plan: Limited storage and some advanced features not available.

- Unlimited Plan: $7 per user per month (billed annually) or $10 per user per month (billed monthly), suitable for small teams.

- Business Plan: $12 per user per month (billed annually) or $19 per user per month (billed monthly), ideal for mid-sized teams.

- Enterprise Plan: Designed for large teams with additional security features; pricing available upon request.

- Advanced AI Features: Available with any paid plan for an additional $5 per user per month.
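Because ClickUp's AI features are billed on top of the base plan, it helps to work out the full yearly cost. The small calculator below uses the list prices quoted above; the team size of 10 is just an example, and it assumes the $5 AI add-on costs the same under both billing cycles.

```python
def annual_cost(seats: int, monthly_price: float, addon_per_seat: float = 0.0) -> float:
    """Yearly cost for a team: seats x (plan price + per-seat add-on) x 12 months."""
    return seats * (monthly_price + addon_per_seat) * 12


AI_ADDON = 5  # ClickUp AI add-on, USD per user per month (price quoted above)
seats = 10
for plan, annual_rate, monthly_rate in [("Unlimited", 7, 10), ("Business", 12, 19)]:
    billed_yearly = annual_cost(seats, annual_rate, AI_ADDON)
    billed_monthly = annual_cost(seats, monthly_rate, AI_ADDON)
    print(f"{plan}: ${billed_yearly:,.0f} billed annually vs ${billed_monthly:,.0f} billed monthly")
# Unlimited: $1,440 billed annually vs $1,800 billed monthly
# Business: $2,040 billed annually vs $2,880 billed monthly
```

The same function works for the other tools in this article: pass an add-on of 0 and plug in their per-seat prices.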

ClickUp stands out with its comprehensive project management capabilities and advanced AI features, although it requires supplementary tools for scheduling and comes at a higher cost.

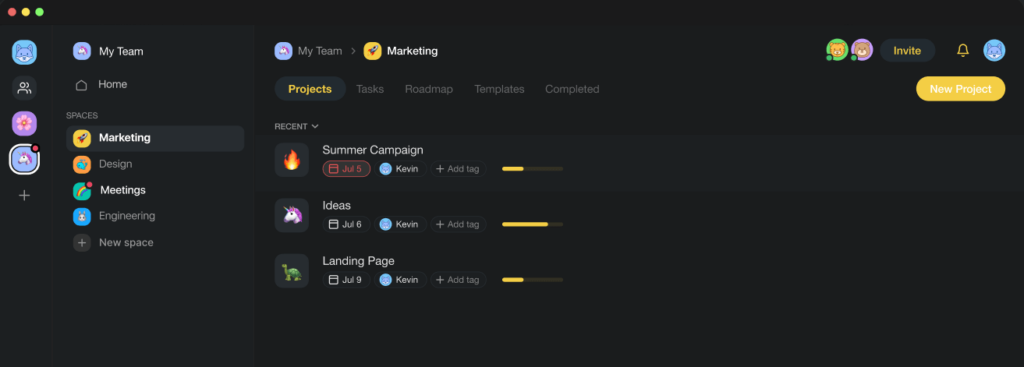

Taskade

Taskade (funding of $5.2 million, Seed) is a comprehensive productivity assistant designed to help teams manage and complete projects more efficiently. This tool integrates AI throughout its functionality, making it a powerful option for various productivity needs.

Key Features

- AI Workflow Generator: Create custom workflows for your projects with the help of AI.

- Custom AI Agents: Design AI agents tailored to specific roles such as marketing, project management, research, etc. These agents can be enriched with specified knowledge bases, personas (e.g., financial analyst), and tools (e.g., web browsing).

- AI Automation and Flows: Automate workflows by connecting Taskade AI with third-party apps to set up triggers and actions. For instance, you can create a WordPress post directly from Taskade.

- AI Writing Assistant: Supports AI-powered writing tasks, including preparing outlines, writing articles, summarizing content, and making notes.

- File and Project Interaction: Upload files and “chat” with them, or interact with your projects to get details.

Strengths

- Comprehensive Functionality: Taskade allows you to plan, research, create documents, and use AI for various tasks, all within the app. It also supports integration with external apps like WordPress.

- Integrated AI: AI is seamlessly integrated throughout the app, enhancing nearly every feature rather than being an add-on.

Weaknesses

- AI Performance: The AI often provides inaccurate information, hallucinates, or omits important details.

Pricing

- Free Plan: Includes very limited AI functionality, for example, 5 AI requests per month.

- Taskade Pro: $8 per user per month (billed annually) or $10 per user per month (billed monthly).

- Taskade for Teams: $16 per user per month (billed annually) or $20 per user per month (billed monthly).

Taskade excels as an all-in-one productivity assistant with deep AI integration, although its AI capabilities need refinement. Its extensive features make it a versatile tool for teams looking to streamline their project management and productivity workflows.

Asana

When discussing project management tools, it’s impossible to overlook Asana, one of the most widely used platforms in the industry. Despite its popularity, Asana’s current AI functionalities are relatively limited compared to some newer players. However, it does offer a few key AI-driven features that can enhance productivity and task management:

- Generate subtasks based on action points in tasks or meeting notes.

- Summarize tasks, including content from conversations and comments.

- Improve writing by adjusting the tone and length of task descriptions and comments.

Excitingly, this is just the beginning for Asana. Tomorrow, on June 5th, they are set to launch Asana Intelligence, which they claim will make them the number one AI work management platform. This upcoming release is highly anticipated, as it promises to bring more advanced AI functionalities that could significantly enhance how users manage their workflows.

Stay tuned as we follow these developments closely. We will update you on how Asana’s new AI features stack up against other solutions in the market, providing a clearer picture of its capabilities and benefits in the ever-evolving landscape of AI-driven productivity tools.

Embracing AI: The Next Step in Work Management

As AI continues to revolutionize the way we approach productivity, tools like Motion, Reclaim, Clockwise, ClickUp, Taskade, and Asana are at the forefront of this transformation. Each of these platforms brings unique strengths and innovative features designed to streamline scheduling, enhance project management, and boost overall efficiency. While some tools like ClickUp and Taskade offer extensive AI capabilities, others like Clockwise and Asana are just beginning their journey into the realm of AI-driven productivity.

The future of work management is undoubtedly intertwined with AI, promising smarter workflows, better time management, and enhanced collaboration. As we continue this series, we will explore more tools and delve deeper into how AI is shaping the landscape of productivity.

The post AI-Powered Tools Transforming Task Management and Scheduling appeared first on TOPBOTS.